A joint calibration method between 360-degree panoramic laser and multiple vision systems

A joint calibration technique for vision systems, applied in the field of environmental perception

Embodiment Construction

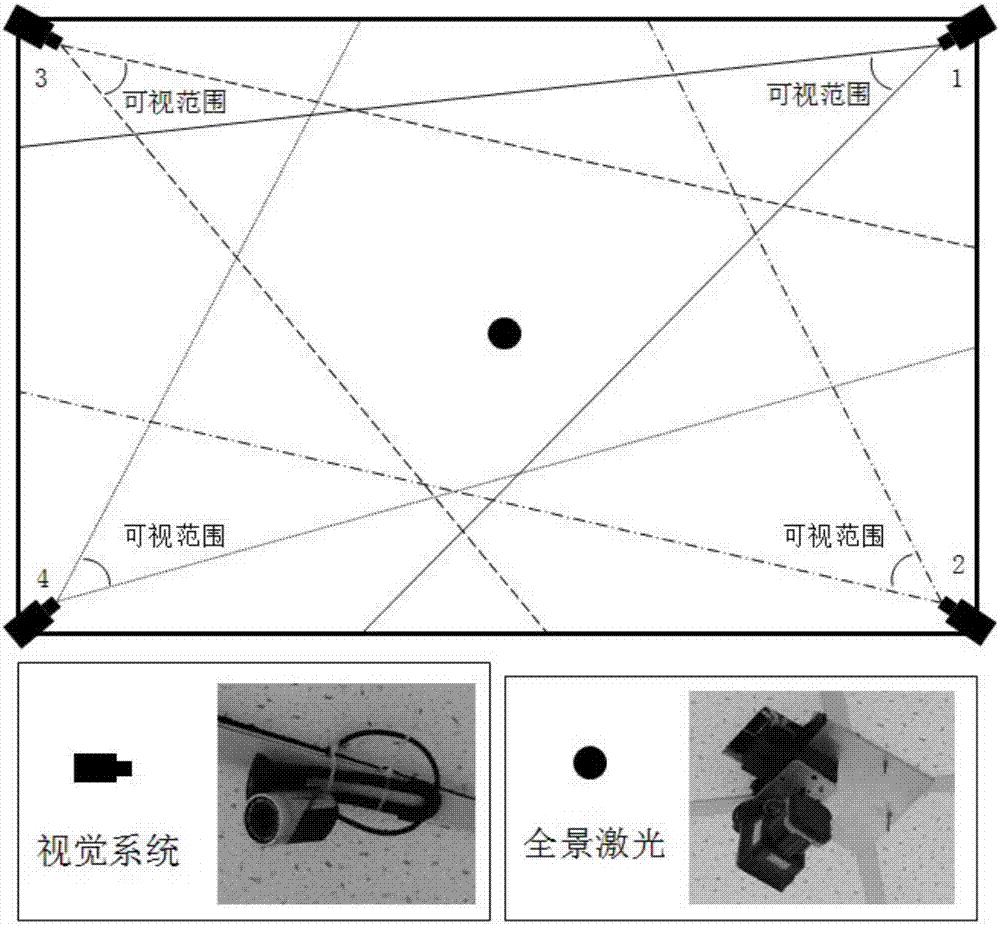

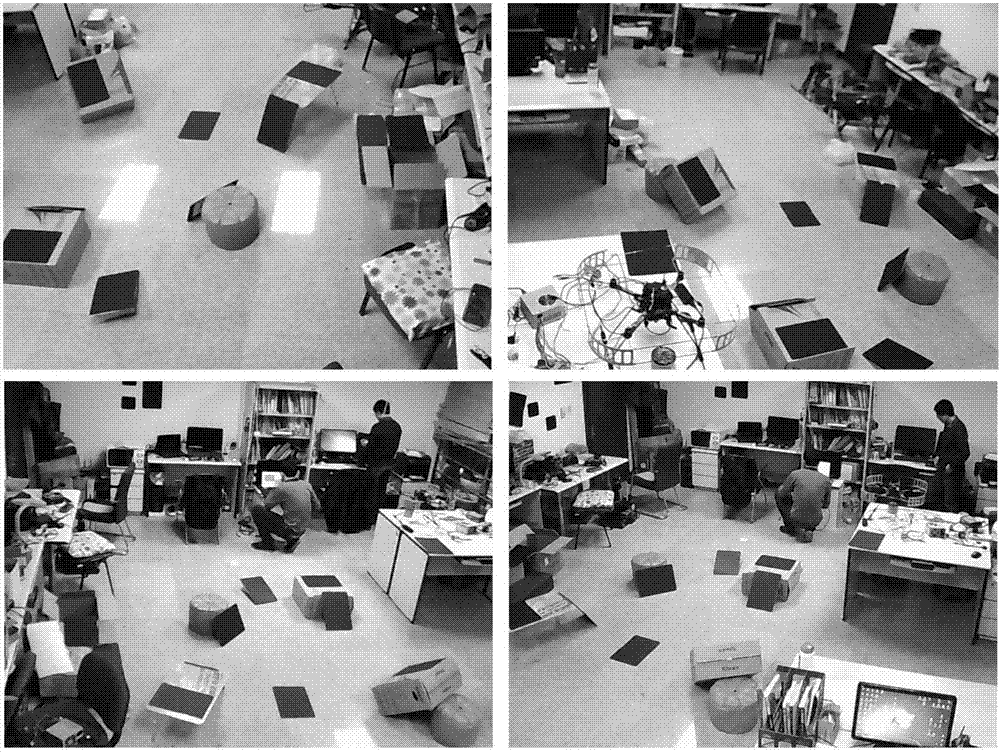

[0057] To verify the effectiveness of this method, the sensor system constructed as shown in Figure 2 was used to test the calibration method. The panoramic laser sensor consists of a Hokuyo UTM-30LX laser sensor and a rotating pan/tilt unit. The planar scanning angle of the laser sensor is 0-270 degrees, and the frequency range of the pan/tilt unit's stepping motor is 500-2500 Hz. The motor drives the laser sensor to acquire three-dimensional laser ranging data of the scene. The four vision systems are all ordinary ANC FULL HD1080P network cameras with a USB 2.0 interface, a viewing angle of 60 degrees, and a resolution of 1280×960. The calibration device consists of nine 300 mm × 100 mm sheets of black paper, which are placed at different positions in the scene.
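As a rough illustration of how a rotating planar scanner yields three-dimensional ranging data, the sketch below converts one planar scan taken at a known pan angle into 3D points. It also estimates a pinhole focal length in pixels from the stated 60-degree viewing angle and 1280-pixel image width. The mounting geometry (a vertical scan plane containing the rotation axis), the function names `scan_to_points` and `focal_length_px`, and the reading of the viewing angle as horizontal are all assumptions made for illustration; the source does not specify them.

```python
import math


def scan_to_points(ranges, pan_deg, scan_start_deg=-135.0, scan_span_deg=270.0):
    """Convert one planar scan at a given pan angle into (x, y, z) points.

    Assumed geometry (hypothetical, not from the source): the scan plane is
    vertical and contains the z axis; the pan unit rotates it about z.
    Beam angles are spread evenly over the 270-degree planar scan.
    """
    n = len(ranges)
    step = scan_span_deg / (n - 1) if n > 1 else 0.0
    pan = math.radians(pan_deg)
    points = []
    for i, r in enumerate(ranges):
        beam = math.radians(scan_start_deg + i * step)
        forward = r * math.cos(beam)  # horizontal component within the scan plane
        up = r * math.sin(beam)       # vertical component within the scan plane
        points.append((forward * math.cos(pan), forward * math.sin(pan), up))
    return points


def focal_length_px(width_px, fov_deg):
    """Pinhole focal length in pixels, assuming fov_deg is the horizontal field of view."""
    return (width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)


if __name__ == "__main__":
    # Three beams of 1 m each, with the scan plane panned by 90 degrees.
    for p in scan_to_points([1.0, 1.0, 1.0], pan_deg=90.0):
        print(tuple(round(c, 3) for c in p))
    print(round(focal_length_px(1280, 60.0), 2))
```

Stacking the points from successive pan angles gives a point cloud of the scene. A real setup would also need the offset between the laser's optical centre and the rotation axis, which this sketch ignores.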

[0058] Pictures of the calibration device in the scene were collected from each of the four vision systems (as shown in Figure 3). The calibration device can be extracted from the picture using the correspo...
