Collaborative scheduling method and system based on GPGPU system structure

A collaborative scheduling technology for GPGPU architectures, applied in the field of high-performance computing. It addresses the problem that prior work does not optimize the scheduling strategy of the Fetch stage, with the stated effects of strong usability and practicability, reduced possibilities, and improved performance.

Examples

Embodiment 1

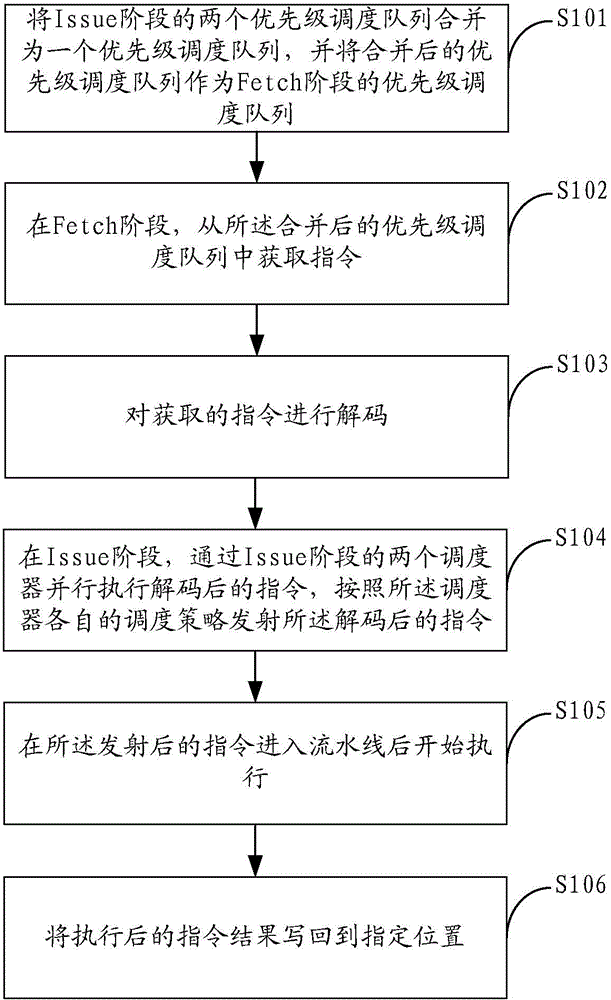

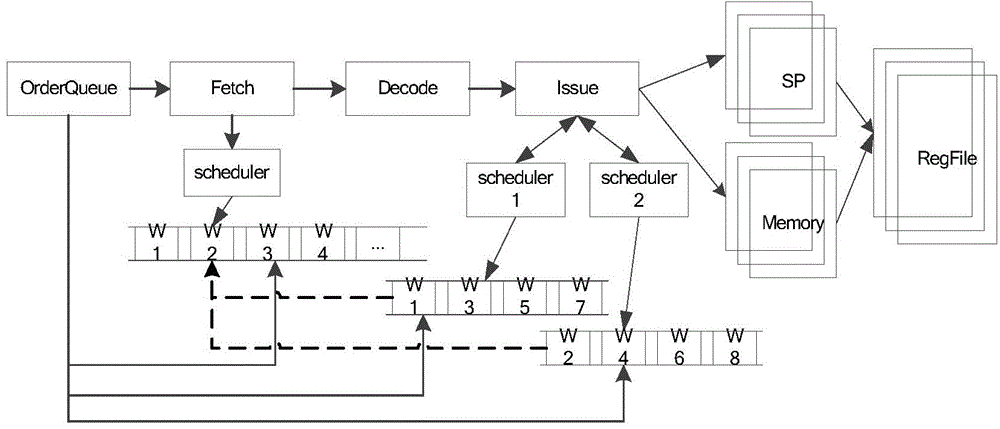

[0031] Figure 1 shows the implementation flow of the collaborative scheduling method based on the GPGPU architecture provided by Embodiment 1 of the present invention. The method flow is described in detail as follows:

[0032] In step S101, the two priority scheduling queues of the Issue stage are merged into a single priority scheduling queue, which is then used as the priority scheduling queue of the Fetch stage;
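The merge in step S101 can be pictured with a short Python sketch. The text does not say how the two queues are combined, so the sketch assumes that each Issue-stage queue is a list of warp IDs ordered highest priority first and that the merge interleaves the two queues (dropping duplicates), which preserves each scheduler's own ordering. The function name `merge_priority_queues` and the even/odd warp split in the example are hypothetical.

```python
from itertools import zip_longest


def merge_priority_queues(issue_q0, issue_q1):
    """Merge the two Issue-stage priority queues into one Fetch-stage queue.

    Assumption (not stated in the patent): each queue is a list of warp IDs,
    highest priority first, and the merge interleaves the two queues so that
    each scheduler's relative ordering is preserved.
    """
    merged = []
    seen = set()
    # Take one warp from each queue per round; the shorter queue yields None.
    for head0, head1 in zip_longest(issue_q0, issue_q1):
        for wid in (head0, head1):
            if wid is not None and wid not in seen:
                seen.add(wid)
                merged.append(wid)
    return merged


if __name__ == "__main__":
    # Hypothetical split: scheduler 0 holds even warps, scheduler 1 odd warps.
    print(merge_priority_queues([4, 0, 2], [1, 5, 3, 7]))  # → [4, 1, 0, 5, 2, 3, 7]
```

Interleaving is only one plausible policy; a weighted or age-based merge would fit the same interface.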

[0033] In step S102, in the Fetch stage, an instruction is fetched according to the merged priority scheduling queue;

[0034] In step S103, the acquired instruction is decoded;
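Steps S102 and S103 can be sketched in the same hypothetical model. The `Warp` class, the two-slot instruction buffer (`IBUFFER_CAPACITY`) and the toy text decoder are illustrative assumptions rather than details from the patent; the sketch only shows the Fetch stage walking the merged queue in priority order and serving the first warp that can accept a new instruction, which is then decoded.

```python
from dataclasses import dataclass, field

IBUFFER_CAPACITY = 2  # assumption: instruction-buffer slots per warp


@dataclass
class Warp:
    wid: int
    program: list                                 # hypothetical: instruction strings
    pc: int = 0                                   # index of next instruction to fetch
    ibuffer: list = field(default_factory=list)   # decoded, not yet issued


def decode(raw):
    """Toy decoder: 'opcode a, b, c' -> ('opcode', ['a', 'b', 'c'])."""
    opcode, _, rest = raw.partition(" ")
    operands = [op.strip() for op in rest.split(",") if op.strip()]
    return opcode, operands


def fetch_and_decode(merged_queue, warps):
    """One Fetch/Decode cycle driven by the merged priority queue.

    Walks the queue in priority order and serves the first warp that still
    has instructions left and a free instruction-buffer slot. Returns that
    warp's ID, or None if no warp is eligible this cycle.
    """
    for wid in merged_queue:
        warp = warps[wid]
        if warp.pc < len(warp.program) and len(warp.ibuffer) < IBUFFER_CAPACITY:
            raw = warp.program[warp.pc]   # step S102: fetch
            warp.pc += 1
            warp.ibuffer.append(decode(raw))  # step S103: decode
            return wid
    return None
```

With warp 1 ahead of warp 0 in the merged queue, warp 1 is served until it runs out of instructions or its buffer slots fill; only then does the Fetch stage fall through to warp 0.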

[0035] In step S104, in the Issue stage, the two schedulers process the decoded instructions in parallel, and each scheduler issues them according to its own scheduling policy;
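For step S104, the text only states that the two schedulers work in parallel, each with its own scheduling policy. The sketch below assumes, for illustration, that scheduler 0 owns the even warps and applies greedy-then-oldest (GTO) selection while scheduler 1 owns the odd warps and applies loose round-robin (LRR); these policy choices and all class and function names are hypothetical. Parallelism is modeled by letting both schedulers choose independently within the same cycle over disjoint warp sets.

```python
class LRRScheduler:
    """Loose round-robin: resume the search just after the last issued warp."""

    def __init__(self, warp_ids):
        self.warp_ids = list(warp_ids)  # warps owned by this scheduler
        self.next_idx = 0

    def pick(self, ready):
        n = len(self.warp_ids)
        for i in range(n):
            idx = (self.next_idx + i) % n
            wid = self.warp_ids[idx]
            if wid in ready:
                self.next_idx = (idx + 1) % n
                return wid
        return None


class GTOScheduler:
    """Greedy-then-oldest: keep issuing the same warp while it is ready,
    otherwise switch to the oldest ready warp."""

    def __init__(self, warp_ids):
        self.age_order = list(warp_ids)  # assumption: list order = age, oldest first
        self.last = None

    def pick(self, ready):
        if self.last in ready:
            return self.last
        for wid in self.age_order:
            if wid in ready:
                self.last = wid
                return wid
        return None


def issue_cycle(schedulers, ready):
    """One Issue-stage cycle: each scheduler chooses independently from the
    warps it owns (in hardware these choices are made in parallel)."""
    return [sched.pick(ready) for sched in schedulers]
```

For example, with `GTOScheduler([0, 2, 4, 6])`, `LRRScheduler([1, 3, 5, 7])` and ready warps `{1, 2, 3, 4}`, the first cycle issues warps 2 and 1, and the second issues warp 2 again (GTO stays greedy) and warp 3 (LRR rotates).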

[0036] In step S105, the issued instruction enters the pipeline and begins execution;

[0037] In step S106, ...

Embodiment 2

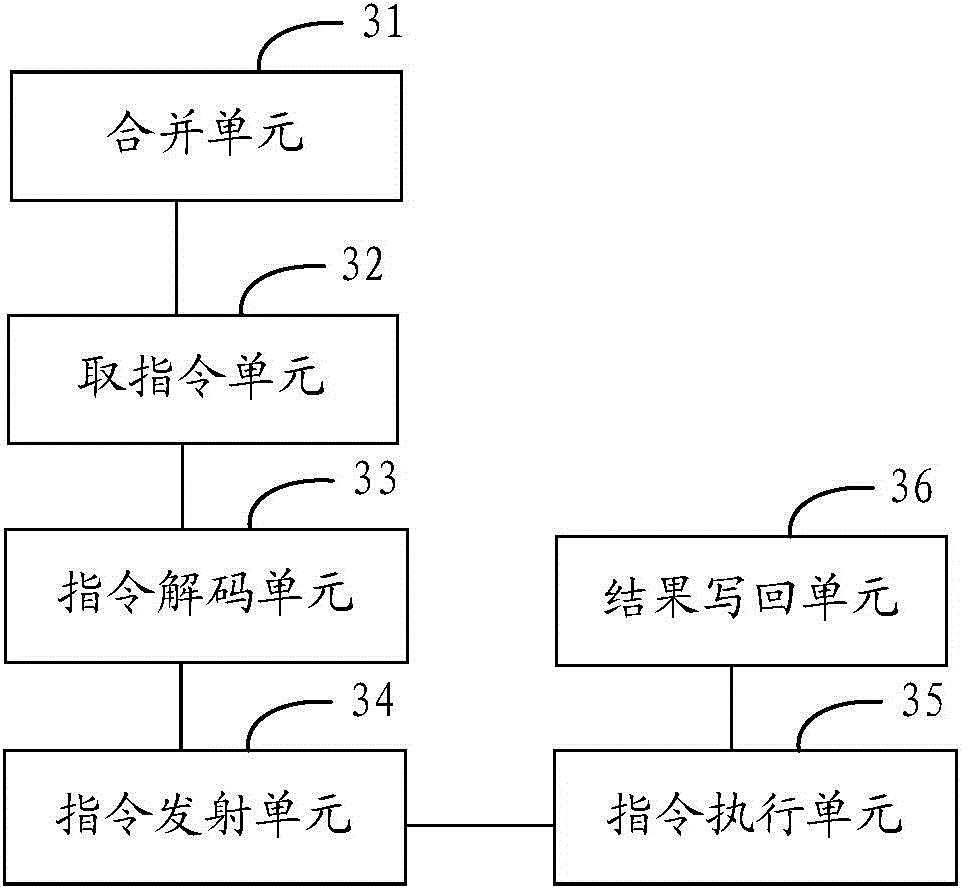

[0041] Figure 3 shows the composition of the collaborative scheduling system based on the GPGPU architecture provided by Embodiment 2 of the present invention. For ease of description, only the parts related to this embodiment are shown.

[0042] The collaborative scheduling system based on the GPGPU architecture can be a software unit, a hardware unit, or a combined software and hardware unit built into a terminal device (such as a personal computer, notebook computer, tablet computer, or smartphone), or it can be integrated as an independent plug-in into the terminal device or into an application system running on it.

[0043] The collaborative scheduling system based on the GPGPU architecture includes:

[0044] The merging unit 31 merges the two priority scheduling queues of the Issue stage into one priority scheduling queue and uses the merged queue as the priority scheduling queue in the...
