An improved PTAM method based on ground features for intelligent robots

A technology for intelligent robots, applied in the field of robot vision, that addresses the problems of restricted camera movement and the inability to build metric maps.

Embodiment Construction

[0091] The present invention is described in further detail below with reference to the accompanying drawings.

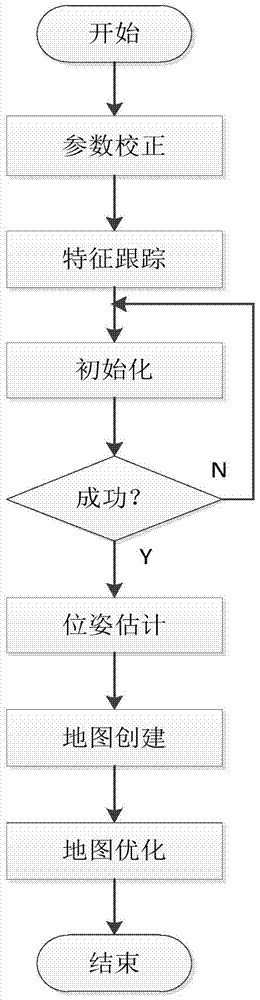

[0092] The flow chart of the improved PTAM algorithm based on ground features is shown in Figure 1. The algorithm comprises the following steps:

[0093] Step 1, parameter correction

[0094] Step 1.1, parameter definition

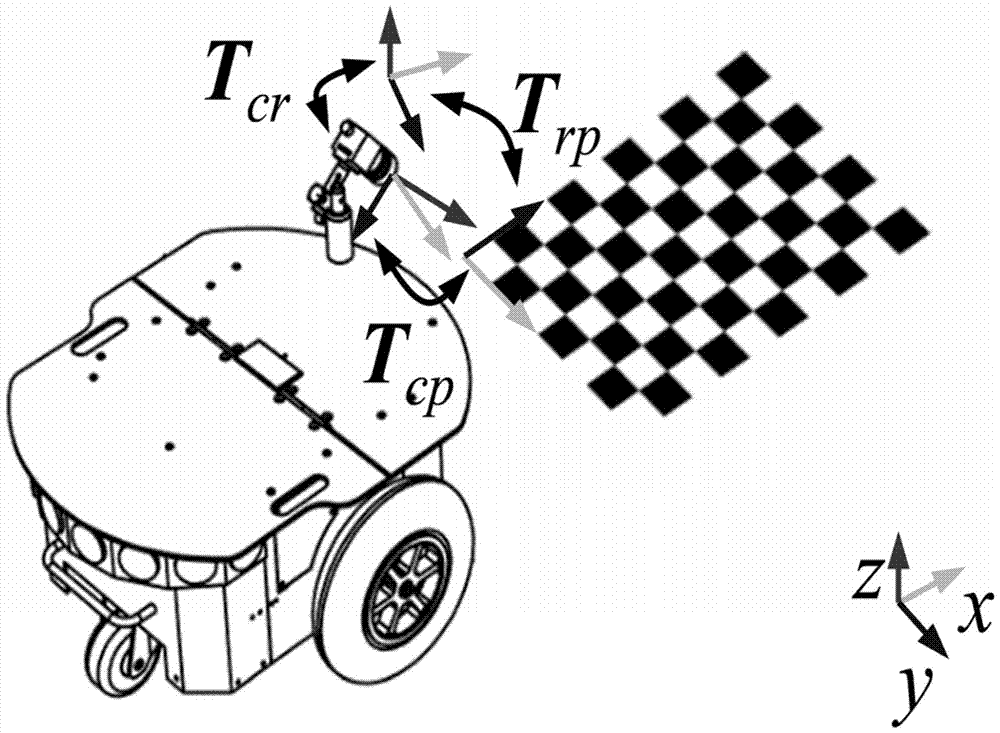

[0095] The robot pose representation is constructed from the relationship between the robot coordinate system and the world coordinate system, and the ground plane calibration parameters are determined by the pose relationship between the camera and the target plane.

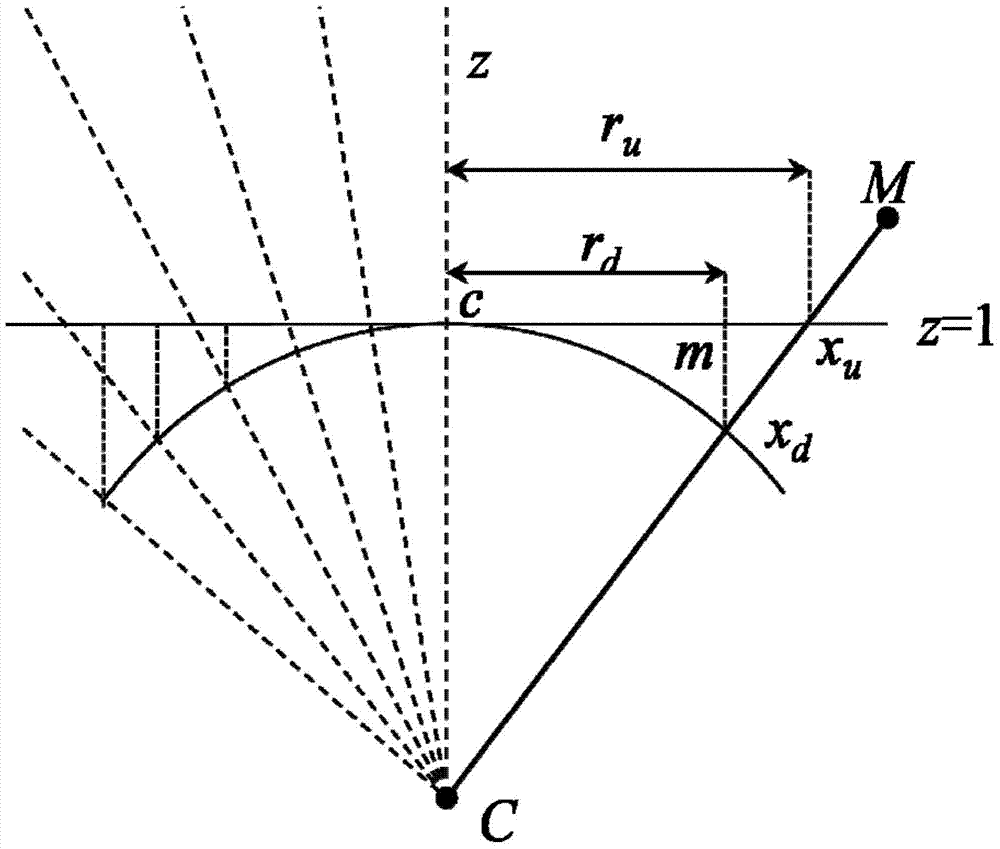
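The relationship between the robot coordinate system and the world coordinate system can be illustrated with a minimal sketch. It assumes a planar pose (x, y, theta) for a robot moving on the ground plane; this excerpt does not spell out that parameterization, and the function names `robot_to_world` and `world_to_robot` are hypothetical.

```python
import math

def robot_to_world(pose, p_robot):
    """Transform a 2D point from the robot frame to the world frame.

    pose is an assumed planar pose (x, y, theta): the robot origin in
    world coordinates and its heading in radians.
    """
    x, y, theta = pose
    px, py = p_robot
    c, s = math.cos(theta), math.sin(theta)
    # Rotate by theta, then translate by the robot position.
    return (x + c * px - s * py, y + s * px + c * py)

def world_to_robot(pose, p_world):
    """Inverse transform: express a world point in the robot frame."""
    x, y, theta = pose
    dx, dy = p_world[0] - x, p_world[1] - y
    c, s = math.cos(theta), math.sin(theta)
    # Undo the translation, then rotate by -theta.
    return (c * dx + s * dy, -s * dx + c * dy)

pose = (1.0, 2.0, math.pi / 2)           # robot at (1, 2), facing +y
p_w = robot_to_world(pose, (1.0, 0.0))   # point one unit ahead of the robot
p_r = world_to_robot(pose, p_w)          # back in the robot frame
```

A point one unit ahead of a robot facing the world +y axis lands one unit above the robot position in world coordinates, and the inverse transform recovers the original robot-frame point.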

[0096] Step 1.2, camera correction

[0097] The monocular camera is corrected with the FOV model: image pixel coordinates are mapped onto the normalized coordinate plane, and image distortion is corrected using the camera intrinsic parameter matrix K.

[0098] Step 2, initialization based on ground features

[0099] Step 2.1, ...
