Data caching method

A data caching technology in the field of electrical digital data processing and memory systems. It addresses the problems of memory jitter, memory reduction, and low efficiency, with the aim of avoiding memory jitter and improving caching efficiency and speed.


Examples

Embodiment 1

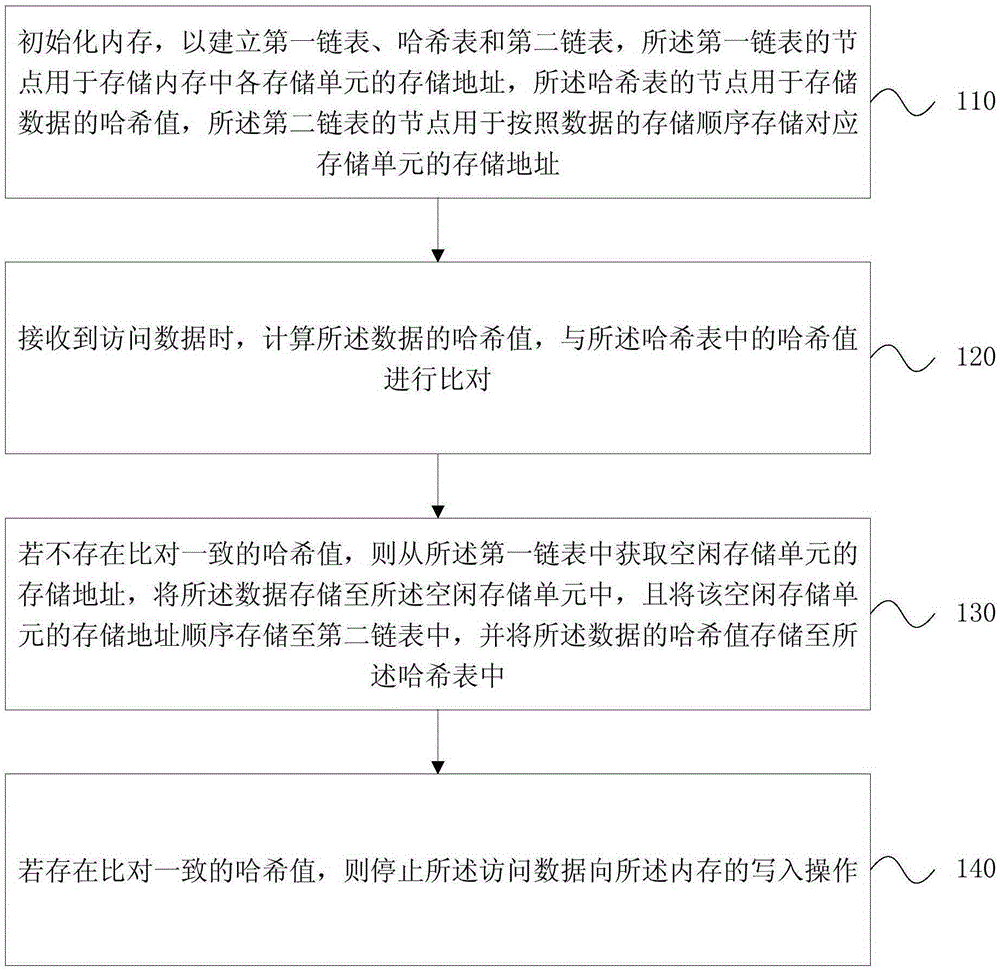

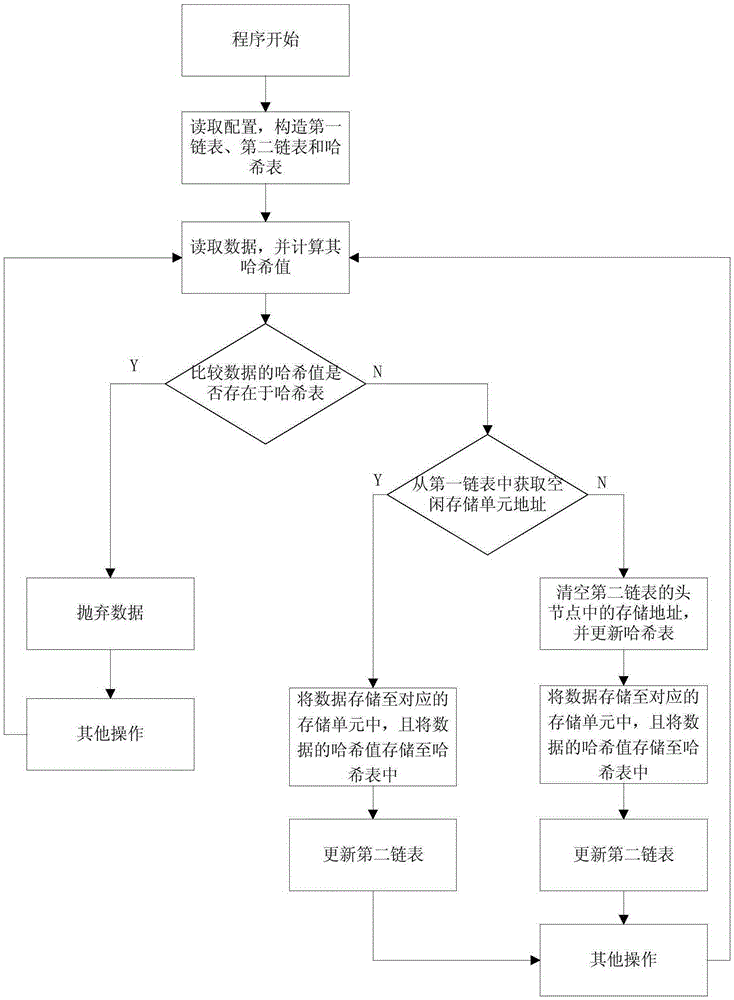

[0025] Figure 1 is a flow chart of the data caching method provided in Embodiment 1 of the present invention. This embodiment is applicable to any data caching scenario. The method can be executed by a data processing device configured on a terminal, and specifically includes the following steps:

[0026] S110. Initialize the memory to establish a first linked list, a hash table, and a second linked list. The nodes of the first linked list store the storage addresses of the storage units in the memory, the nodes of the hash table store the hash values of the data, and the nodes of the second linked list store the storage addresses of the corresponding storage units in the order in which the data was stored;

[0027] The storage addresses in the nodes of the second linked list can be ordered by read-in time: the address of the data read in first is stored in the head node of the second linked list, and other nodes are analogously stored in the stor...
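The three structures set up in S110 can be sketched roughly as follows. This is only an illustrative sketch, not the patent's implementation: the class name `CacheState`, the use of list indices as stand-ins for storage addresses, and the choice to map each hash value to an address are all assumptions made for the example.

```python
from collections import deque


class CacheState:
    """Hypothetical container for the structures described in S110."""

    def __init__(self, num_units: int):
        # Pre-allocated storage units; an index stands in for a storage address.
        self.memory = [None] * num_units
        # First linked list: storage addresses of the storage units.
        # Assumption: every unit is free right after initialization.
        self.free_list = deque(range(num_units))
        # Hash table of data hash values.
        # Assumption: each hash value maps to the address holding the data.
        self.hash_table = {}
        # Second linked list: addresses in storage order, earliest at the head.
        self.order_list = deque()


state = CacheState(4)
```

Allocating all storage units once up front, and only moving addresses between the lists afterwards, is one way to read the stated goal of avoiding memory jitter.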

Embodiment 2

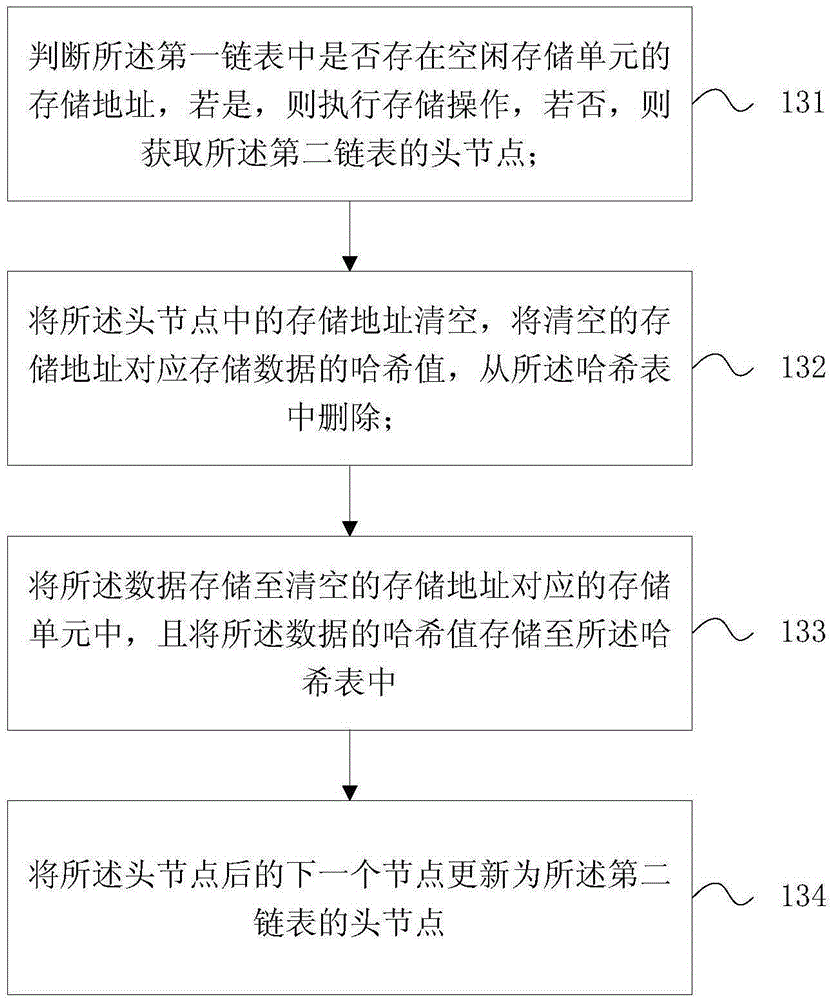

[0039] Figure 2 is a flow chart of the data caching method provided in Embodiment 2 of the present invention. This embodiment builds on the foregoing embodiment and further provides a specific implementation of data caching, in which step 130 of the data caching process specifically includes:

[0040] S131. Determine whether the first linked list contains the storage address of a free storage unit. If so, perform the storage operation; if not, obtain the head node of the second linked list;

[0041] The head node of the second linked list stores the memory address of the stored data with the earliest read-in time.

[0042] S132. Empty the storage address in the head node, and delete the hash value of the stored data corresponding to the emptied storage address from the hash table;

[0043] S133. Store the data in the storage unit corresponding to the emptied storage address, and store the hash value of the data in the hash table; ...
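Steps S131 to S133 amount to first-in, first-out replacement, which might look like the sketch below. It is a minimal illustration under stated assumptions, not the patented method: the class `FifoCache`, the `store` method, the use of Python's built-in `hash`, the hash-to-address mapping, and the early return for data that is already cached are all hypothetical, and the steps elided in the source are not reproduced.

```python
from collections import deque


class FifoCache:
    """Hypothetical sketch of S131-S133; all names are illustrative."""

    def __init__(self, num_units: int):
        self.memory = [None] * num_units          # pre-allocated storage units
        self.free_list = deque(range(num_units))  # first linked list (free addresses)
        self.hash_table = {}                      # hash value -> address (assumption)
        self.order_list = deque()                 # second linked list, earliest first

    def store(self, data):
        key = hash(data)
        # Assumption: data whose hash is already present is not stored again.
        if key in self.hash_table:
            return self.hash_table[key]
        if self.free_list:
            # S131: a free storage unit exists, so use its address.
            addr = self.free_list.popleft()
        else:
            # S131: no free unit, so take the head node of the second list,
            # which holds the address of the earliest stored data.
            addr = self.order_list.popleft()
            # S132: empty that address and delete the old data's hash value.
            self.hash_table.pop(hash(self.memory[addr]), None)
            self.memory[addr] = None
        # S133: store the data at the address and record its hash value.
        self.memory[addr] = data
        self.hash_table[key] = addr
        self.order_list.append(addr)
        return addr


cache = FifoCache(2)
for item in ("a", "b", "c"):
    cache.store(item)
```

With two storage units, storing a third item reuses the address of the first item rather than allocating new memory, so the footprint stays fixed.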
