Depth map recovery method

A depth map recovery method, applicable to image communication, electrical components, stereo systems, and related fields. It addresses the high computational complexity, slow convergence, and unsatisfactory results of existing approaches, and offers a simplified method and improved quality for depth maps with a low signal-to-noise ratio.

Embodiment Construction

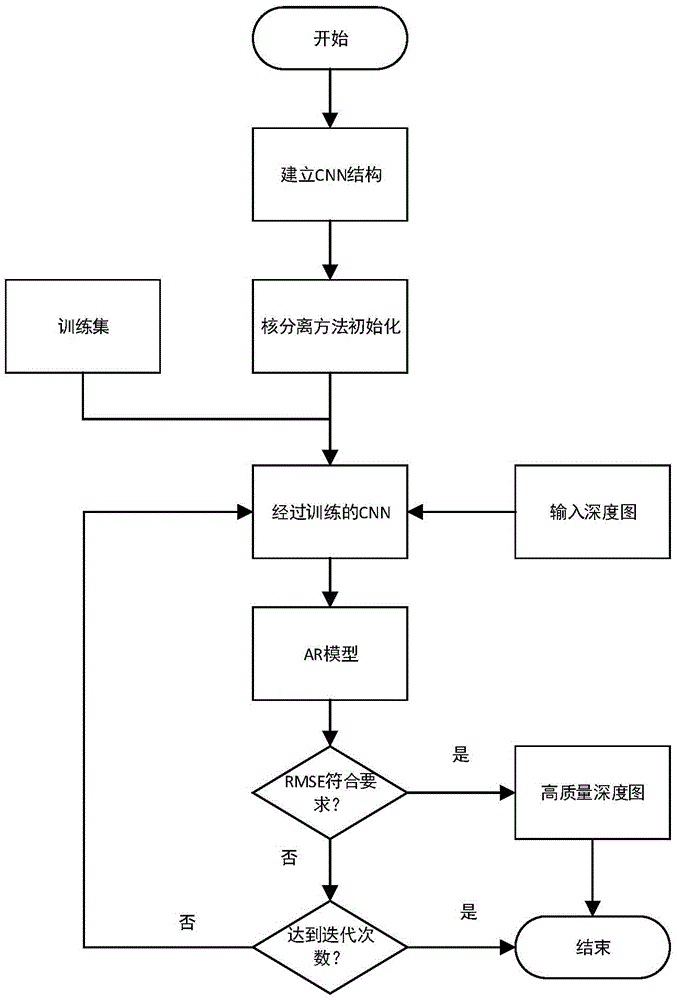

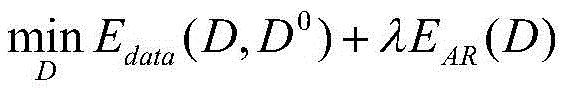

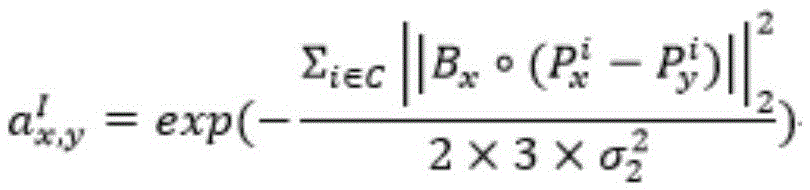

[0009] In this embodiment, a training set of depth maps is established and used to train the parameters of a convolutional neural network (CNN), so that the CNN can classify degraded depth maps. The hidden layers of the CNN are initialized by kernel decomposition, which gives the network deconvolution-like behavior: it denoises and filters while classifying, and partially solves the degradation problem of the depth map. An autoregressive (AR) model is then established, and its parameters are adjusted according to the main degradation models. The output layer of the CNN is connected to the input layer of the AR model, so that the corresponding CNN output is fed into the AR model.
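To illustrate the AR stage described in [0009], the following is a minimal sketch of a single autoregressive prediction pass over a depth map, such as one produced by the CNN. It is not the method claimed in the patent. The function name `ar_predict`, the parameters `sigma` and `radius`, and the Gaussian weighting on depth differences are all assumptions made for illustration; the text only states that the AR parameters are adjusted according to the degradation model.

```python
import math

def ar_predict(depth, sigma=1.0, radius=1):
    """One autoregressive prediction pass over a 2D depth map (list of lists).

    Each pixel is re-estimated as a normalized weighted sum of its neighbours.
    The Gaussian weight on depth difference is an illustrative assumption,
    not a parameter choice taken from the patent text.
    """
    h, w = len(depth), len(depth[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            num = 0.0  # weighted sum of neighbour depths
            den = 0.0  # sum of weights, used for normalization
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    if dy == 0 and dx == 0:
                        continue  # predict the pixel from its neighbours only
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        diff = depth[ny][nx] - depth[y][x]
                        wgt = math.exp(-(diff * diff) / (2.0 * sigma * sigma))
                        num += wgt * depth[ny][nx]
                        den += wgt
            # Fall back to the input value if no neighbour contributes.
            out[y][x] = num / den if den > 0 else depth[y][x]
    return out
```

In this sketch, an isolated outlier is pulled toward its surroundings, while neighbours across a large depth step receive near-zero weight, so depth edges are largely preserved. A full restoration would typically iterate such a pass together with a data-fidelity term, which is omitted here.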

[0010] The depth map restoration method proposed in this embodiment, based on a convolutional neural network and an autoregressive model, includes the following steps:

[0011] A1: The training set consists of a large numb...
