Gesture control man-machine interactive system based on WiFi

This human-computer interaction and gesture-control technology, applied to gesture-controlled human-computer interaction systems, addresses the problems of inconvenient use, unsatisfactory results, and unsuitability for deaf-mute users, with the benefits of low cost and a simple method.

Embodiment

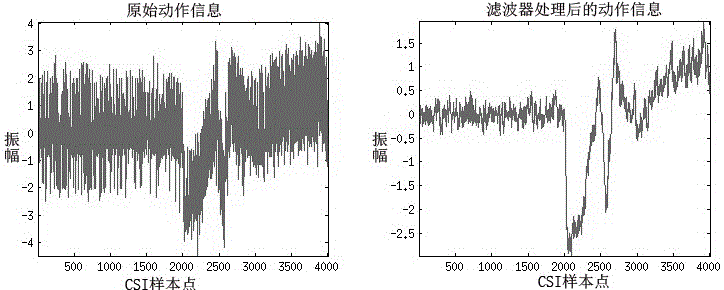

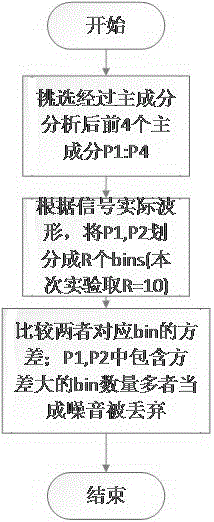

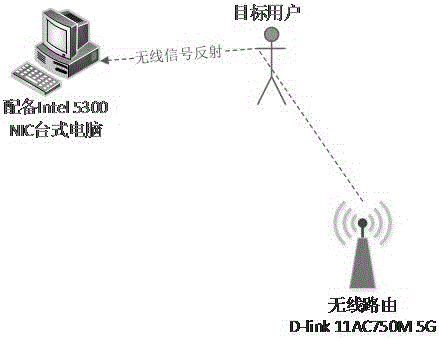

[0047] A WiFi-based gesture-control human-computer interaction system. Its system architecture is shown in Figure 3 and its processing flow in Figure 4. After the signal acquisition module obtains the physical-layer channel state information (CSI) from the wireless network environment, it passes the raw signal to the signal processing module, which performs operations such as denoising, filtering, and smoothing; the processed CSI data then enters the action extraction module. The gesture action extraction module applies a suitable segmentation algorithm, guided by the characteristics of the CSI waveform, to extract the target user's action segments from the data. These segments are passed to the action instruction mapping module, which uses a classification algorithm to classify and identify the target user's actions, a...
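The paragraph names the four stages but not the specific filters, segmentation algorithm, or classifier. The following is a minimal sketch of that flow under stated assumptions: the input is a single stream of CSI amplitudes for one subcarrier; median filtering (denoising), a moving average (smoothing), sliding-window variance thresholding (segmentation), and a nearest-centroid classifier are stand-ins chosen for illustration, not the patent's method. The window sizes, threshold, gesture templates, and command names (`swipe`, `push`, `next_page`, `select`) are all hypothetical.

```python
from statistics import mean, median, pvariance

# Hypothetical gesture templates: (duration in samples, peak-to-peak amplitude).
TEMPLATES = {"swipe": (30.0, 4.0), "push": (60.0, 8.0)}
# Hypothetical per-feature scale so duration and amplitude are comparable.
FEATURE_SCALE = (10.0, 1.0)
# Hypothetical mapping from recognised gesture to interaction command.
COMMANDS = {"swipe": "next_page", "push": "select"}


def median_filter(x, k=5):
    """Denoise: replace each sample with the median of a k-sample window
    centred on it (the window is truncated at the edges)."""
    h = k // 2
    return [median(x[max(0, i - h):i + h + 1]) for i in range(len(x))]


def moving_average(x, k=5):
    """Smooth: centred k-sample moving average (truncated at the edges)."""
    h = k // 2
    return [mean(x[max(0, i - h):i + h + 1]) for i in range(len(x))]


def segment(x, win=10, thresh=0.5, min_len=10):
    """Return (start, end) index pairs, end exclusive, covering runs of
    at least min_len consecutive sliding windows whose variance exceeds
    thresh, i.e. stretches where the CSI amplitude is fluctuating."""
    active = [pvariance(x[i:i + win]) > thresh
              for i in range(len(x) - win + 1)]
    segments, start = [], None
    for i, is_active in enumerate(active):
        if is_active and start is None:
            start = i  # first window of a new run
        elif not is_active and start is not None:
            if i - start >= min_len:
                # Last active window is i - 1; it ends at (i - 1) + win.
                segments.append((start, i - 1 + win))
            start = None
    if start is not None and len(active) - start >= min_len:
        segments.append((start, len(x)))  # run reaches the end of x
    return segments


def classify(seg):
    """Nearest-centroid classification on two simple features."""
    feats = (float(len(seg)), max(seg) - min(seg))

    def dist2(centroid):
        return sum(((f - c) / s) ** 2
                   for f, c, s in zip(feats, centroid, FEATURE_SCALE))

    return min(TEMPLATES, key=lambda label: dist2(TEMPLATES[label]))


def recognize(raw_amplitudes):
    """Full flow: denoise -> smooth -> segment -> classify -> map."""
    x = moving_average(median_filter(raw_amplitudes))
    return [COMMANDS[classify(x[s:e])] for s, e in segment(x)]
```

For example, `recognize([10.0] * 40 + [20.0] * 30 + [10.0] * 40)` returns `['select', 'select']`: the variance test fires on the rise and on the fall of the step but not on the flat plateau, because it detects motion rather than a held posture. A real system would tune these parameters, and likely the features and classifier, against recorded CSI.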

© 2025 PatSnap. All rights reserved.