Laser and vision-based hybrid location method for mobile robot

A hybrid positioning technology for mobile robots, applied in navigation computing tools and related fields. It addresses the limited accuracy of ultrasonic sensing, low environmental recognition, and the high maintenance cost of topological maps. Its effects are assured particle diversity, a wide application range, and compensation for the instability of visual positioning.



Embodiment Construction

[0023] The solution of the present invention will be further described in detail below in conjunction with the drawings and specific embodiments.

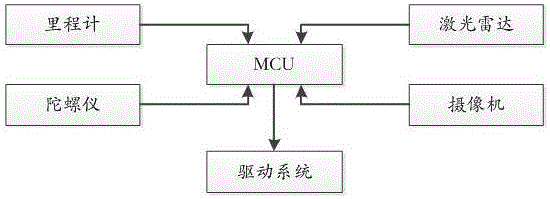

[0024] As shown in Figure 2, the mobile robot in this embodiment includes an odometer, a gyroscope, a lidar, a camera (vision sensor), a drive system, and a core control board (MCU). Specifically, the drive system in this embodiment consists of left and right wheels driven by separate motors. It should be understood that the mobile robot can also include other parts (such as a dust collection system, a sensor system, an alarm system, etc.); these are not related to the technical solution of the present invention and are therefore not described here.

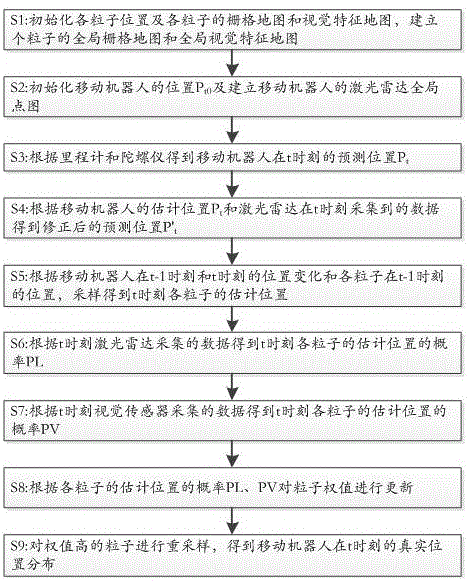

[0025] As shown in Figure 1, the particle-filter-based positioning method for the mobile robot of the present invention includes the following steps:

[0026] S1: Initialize the position of each particle and the grid map and visual feature map of each particle, and estab...
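The initialization described in S1 can be illustrated with a minimal Python sketch. It covers only the part of the step that is visible above: giving every particle a position, its own grid map, and its own visual feature map. The remainder of S1 is truncated in this excerpt and is not modeled. All names here (`Particle`, `init_particles`, the map dimensions, the log-odds cell convention, the uniform initial weight) are illustrative assumptions, not taken from the patent.

```python
from dataclasses import dataclass, field


@dataclass
class Particle:
    """One pose hypothesis with its own maps (hypothetical structure)."""
    x: float
    y: float
    theta: float
    weight: float
    # Occupancy grid as rows of log-odds values; 0.0 means "unknown" (assumed convention).
    grid_map: list
    # Visual feature map: feature id -> estimated (x, y) position (assumed layout).
    feature_map: dict = field(default_factory=dict)


def init_particles(n, rows=100, cols=100, start=(0.0, 0.0, 0.0)):
    """Create n particles at the same start pose, each with independent empty maps."""
    if n <= 0:
        raise ValueError("n must be positive")
    x, y, theta = start
    particles = []
    for _ in range(n):
        # Build a fresh grid per particle so that maps are never shared.
        grid = [[0.0] * cols for _ in range(rows)]
        particles.append(Particle(x, y, theta, 1.0 / n, grid))
    return particles


particles = init_particles(50, rows=20, cols=20)
print(len(particles), particles[0].weight)
```

Each particle owns separate map objects, so a later update to one particle's grid or feature map leaves the other hypotheses unchanged.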
