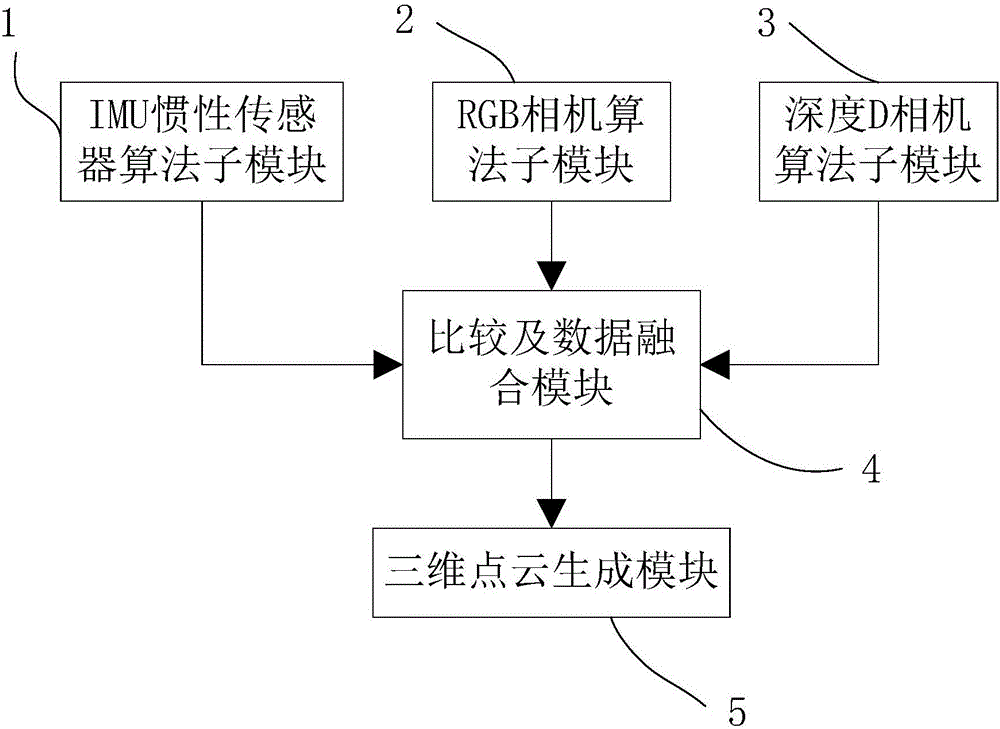

Real-time three-dimensional scene reconstruction system and method based on inertia and depth vision

A real-time 3D reconstruction and depth vision technology, applied to processing-step details, image data processing, 3D modeling, and related fields. It addresses problems such as reduced system robustness, reconstruction difficulty, and algorithm failure, improving accuracy while providing strong fault tolerance and high efficiency.


AI Technical Summary

Problems solved by technology

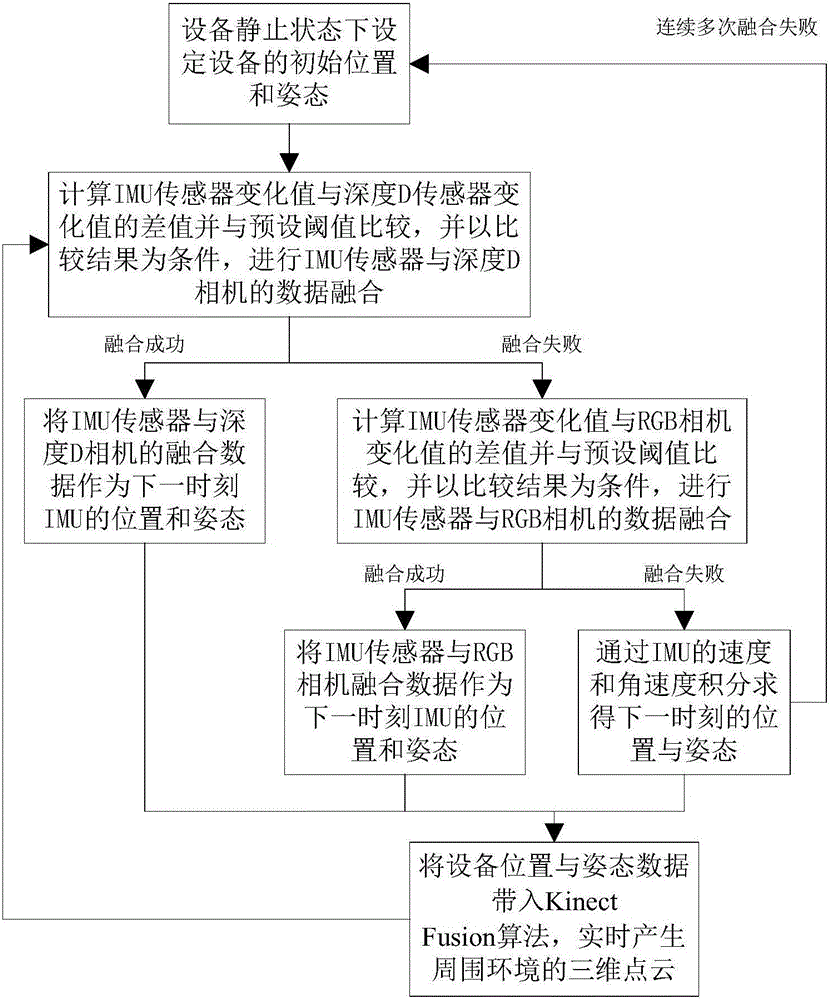

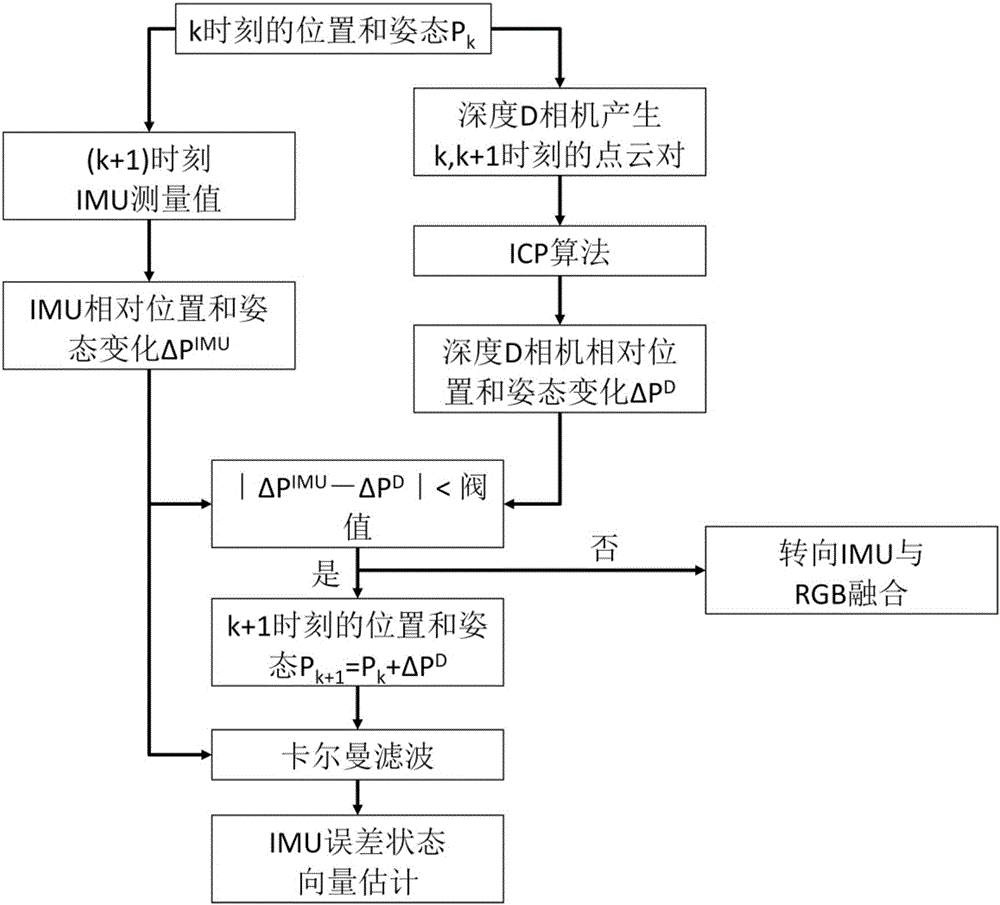


Embodiment Construction

[0045] The present invention is further described below with reference to the accompanying drawings and embodiments. The following examples will help those skilled in the art to understand the invention further, but they do not limit it in any form. Note that the sensor combination described here is only one particular embodiment: the invention is not limited to IMU, RGB, and depth (D) sensors. Several modifications and improvements can be made within the concept of the invention, such as using a binocular vision system instead of a monocular camera, or using a higher-precision IMU. Those skilled in computer vision and multi-sensor fusion may also build on the algorithmic idea of the invention, for example by using a better feature tracking algorithm to improve the accuracy with which visual odometry estimates relative motion. These improvements...
