Stereo visual calibration method integrating neural network and virtual target

A stereo vision calibration technology that combines a virtual target with a neural network, applied in the field of machine vision. It addresses the difficulty of producing and machining large-scale calibration targets and the complexity of nonlinear camera distortion calibration models. Its effects include a powerful nonlinear mapping capability and a target that is easy to produce and machine, which removes the production and machining difficulty.
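The summary credits the neural network with a powerful nonlinear mapping capability, but the text here does not specify the network's inputs, outputs, or architecture. The following is a minimal sketch under explicit assumptions, not the patented method: it assumes the network maps the stereo image coordinates of a target point, (u_l, v_l, u_r, v_r), directly to its world coordinates (X, Y, Z), so that lens distortion is absorbed into the learned mapping instead of being modelled with explicit distortion parameters. All names (`train_stereo_mlp`, `distorted_view`, `synthetic_stereo`), the one-hidden-layer tanh architecture, the training schedule, and the synthetic camera (1000 px focal length, principal point (640, 512), radial coefficient `k1 = -0.2`, 200 mm baseline, 1500 to 2000 mm working distance) are hypothetical choices for illustration.

```python
import numpy as np

def distorted_view(xyz, cam_x, f=1000.0, cx=640.0, cy=512.0, k1=-0.2):
    """Hypothetical camera at (cam_x, 0, 0) looking along +Z, with one-term
    radial distortion; used only to generate synthetic training data."""
    xn = (xyz[:, 0] - cam_x) / xyz[:, 2]
    yn = xyz[:, 1] / xyz[:, 2]
    scale = 1.0 + k1 * (xn**2 + yn**2)
    return np.column_stack([f * xn * scale + cx, f * yn * scale + cy])

def synthetic_stereo(n, seed, baseline=200.0):
    """Random points in a hypothetical measurement volume (mm) and their
    (u_l, v_l, u_r, v_r) image coordinates in a parallel stereo pair."""
    rng = np.random.default_rng(seed)
    xyz = rng.uniform([-300.0, -200.0, 1500.0], [300.0, 200.0, 2000.0], size=(n, 3))
    uv = np.hstack([distorted_view(xyz, 0.0), distorted_view(xyz, baseline)])
    return uv, xyz

def train_stereo_mlp(uv, xyz, hidden=32, epochs=4000, lr=0.05, seed=0):
    """Fit a one-hidden-layer tanh MLP mapping (u_l, v_l, u_r, v_r) to (X, Y, Z)
    by full-batch gradient descent on the mean squared error.
    Returns predict(uv_new), which applies the same preprocessing."""
    rng = np.random.default_rng(seed)
    # PCA-whiten the inputs: u_l and u_r are strongly correlated, and depth
    # is carried by their small difference (the disparity).
    mu_in = uv.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(uv - mu_in, rowvar=False))
    white = evecs / np.sqrt(evals)
    mu_out, sd_out = xyz.mean(axis=0), xyz.std(axis=0)
    x = (uv - mu_in) @ white
    y = (xyz - mu_out) / sd_out          # standardized targets
    n, n_in = x.shape
    n_out = y.shape[1]
    w1 = rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), size=(hidden, n_out))
    b2 = np.zeros(n_out)
    for _ in range(epochs):
        h = np.tanh(x @ w1 + b1)              # hidden layer
        err = (h @ w2 + b2) - y               # residual of the linear output layer
        g_out = 2.0 * err / n                 # dLoss/dOutput for the MSE
        g_h = (g_out @ w2.T) * (1.0 - h**2)   # backpropagate through tanh
        w2 -= lr * (h.T @ g_out)
        b2 -= lr * g_out.sum(axis=0)
        w1 -= lr * (x.T @ g_h)
        b1 -= lr * g_h.sum(axis=0)

    def predict(uv_new):
        h = np.tanh(((uv_new - mu_in) @ white) @ w1 + b1)
        return (h @ w2 + b2) * sd_out + mu_out   # back to millimetres

    return predict
```

Typical use: `predict = train_stereo_mlp(uv_train, xyz_train)`, then `predict(uv_new)` returns an (n, 3) array of world coordinates. Whitening is included because, in a parallel rig, depth is encoded in the small difference u_l - u_r; with plain per-coordinate scaling, gradient descent would learn Z much more slowly than X and Y.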


Specific Embodiments

[0041] To help those skilled in the art better understand the technical solution of the present invention, the invention is described in further detail below with reference to the accompanying drawings and specific embodiments. The features of the embodiments may be combined with one another.

[0042] The equipment used in the present invention mainly includes: one Global-series coordinate measuring machine (CMM) from Brown & Sharpe (USA), with a measurement range of 700 mm × 1000 mm × 660 mm; …, frame rate 130 fps; one single-corner target; two tripods; one PC; and two GigE interface data cables. To compare and analyze the performance of the method of the present invention, the widely used direct linear transformation (DLT) calibration method serves as the comparative experiment.
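The DLT baseline estimates each camera's 3×4 projection matrix linearly from known 3D points and their image coordinates, and it does not model nonlinear lens distortion. A minimal sketch of the standard textbook formulation follows (with Hartley-style coordinate normalization), together with linear two-view triangulation. It is not taken from the patent: the function names `dlt_calibrate`, `project`, and `triangulate` are hypothetical, and it is an assumption that the reference 3D coordinates in the comparison would be those supplied by the coordinate measuring machine.

```python
import numpy as np

def _normalizer(pts):
    """Similarity transform moving pts to zero mean and mean distance sqrt(d)."""
    d = pts.shape[1]
    c = pts.mean(axis=0)
    s = np.sqrt(d) / np.mean(np.linalg.norm(pts - c, axis=1))
    t = np.eye(d + 1)
    t[:d, :d] *= s
    t[:d, d] = -s * c
    return t

def dlt_calibrate(xyz, uv):
    """Estimate a 3x4 projection matrix (up to scale) from n >= 6
    non-coplanar world points xyz (n, 3) and their pixels uv (n, 2)."""
    T3, T2 = _normalizer(xyz), _normalizer(uv)
    X = np.column_stack([xyz, np.ones(len(xyz))]) @ T3.T   # normalized homogeneous 3D
    x = np.column_stack([uv, np.ones(len(uv))]) @ T2.T     # normalized homogeneous 2D
    rows = []
    for Xi, (u, v, _) in zip(X, x):
        # u = p1.X / p3.X and v = p2.X / p3.X, rearranged to be linear in P
        rows.append(np.concatenate([Xi, np.zeros(4), -u * Xi]))
        rows.append(np.concatenate([np.zeros(4), Xi, -v * Xi]))
    # Least-squares solution: right singular vector of the smallest singular value
    _, _, vt = np.linalg.svd(np.asarray(rows))
    P = np.linalg.inv(T2) @ vt[-1].reshape(3, 4) @ T3     # undo the normalization
    return P / np.linalg.norm(P)

def project(P, xyz):
    """Pinhole projection of world points (n, 3) to pixels (n, 2)."""
    h = np.column_stack([xyz, np.ones(len(xyz))]) @ P.T
    return h[:, :2] / h[:, 2:3]

def triangulate(P_left, P_right, uv_left, uv_right):
    """Linear triangulation of one point observed in both calibrated views."""
    rows = []
    for P, (u, v) in ((P_left, uv_left), (P_right, uv_right)):
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    return vt[-1][:3] / vt[-1][3]
```

Calibrating each camera of the pair separately and triangulating matched image points yields 3D coordinates that can be checked against reference values; because the model is purely linear, any lens distortion shows up directly as residual error, which is the weakness a nonlinear mapping is meant to address.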

[0043] The specific implementation steps of the present invention are as follows:

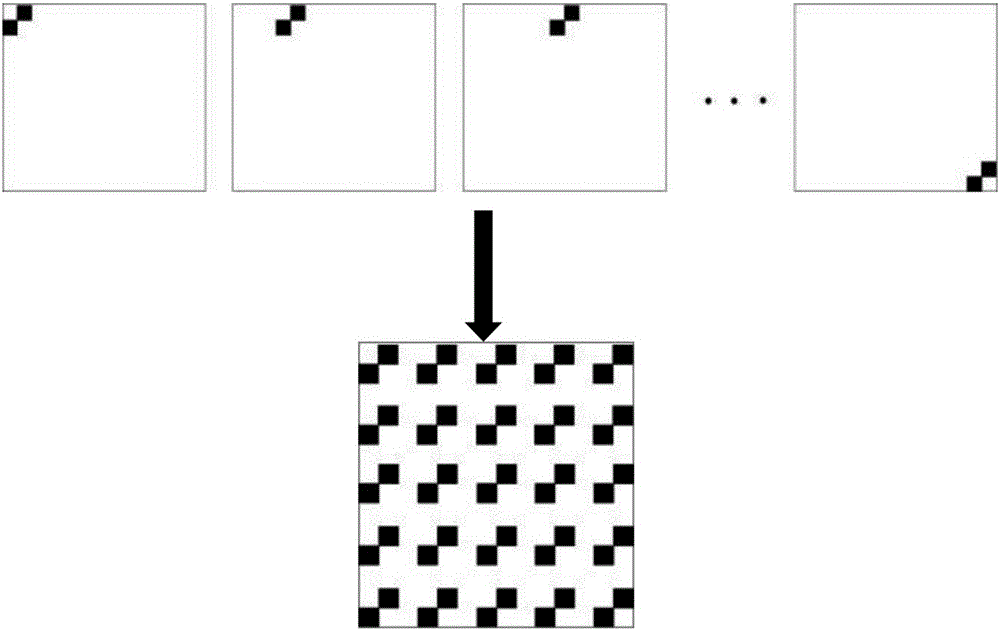

[0044] S1. Construct a three-dimensional virtual target with a scale of 560mm×400...
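A minimal sketch of one way such a virtual target can be laid out, assuming (this is not stated in the text above) that the virtual target is a set of known 3D positions to which the single-corner target is moved in turn, for instance by the coordinate measuring machine, instead of a large physical target. The function name `virtual_target_grid`, the regular-grid layout, and the point counts are hypothetical; only the 560 mm and 400 mm extents appear in the text. The third extent is not stated, so the 300 mm used below is purely a placeholder.

```python
import numpy as np

def virtual_target_grid(extent_mm, counts, origin_mm=(0.0, 0.0, 0.0)):
    """Nominal positions (N, 3) of a single marker which, visited one after
    another, together form a 3D virtual target spanning extent_mm.

    extent_mm: side lengths (x, y, z) of the virtual target in millimetres
    counts:    number of positions along each axis (each >= 2)
    origin_mm: corner of the volume in the positioning machine's frame
    """
    # Evenly spaced coordinates along each axis, endpoints included
    axes = [np.linspace(o, o + e, c) for o, e, c in zip(origin_mm, extent_mm, counts)]
    gx, gy, gz = np.meshgrid(*axes, indexing="ij")
    # One row per marker position
    return np.column_stack([gx.ravel(), gy.ravel(), gz.ravel()])
```

For example, `virtual_target_grid((560.0, 400.0, 300.0), (8, 6, 4))` gives 8 × 6 × 4 = 192 positions with an 80 mm pitch along x and y. Because the points are generated rather than machined, changing the target's size or density only means changing these arguments.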
