Image warping method based on matrix inverse operation for virtual reality (VR) mobile terminals

A virtual reality image warping technique, applied in the field of virtual reality

Examples

Embodiment 1

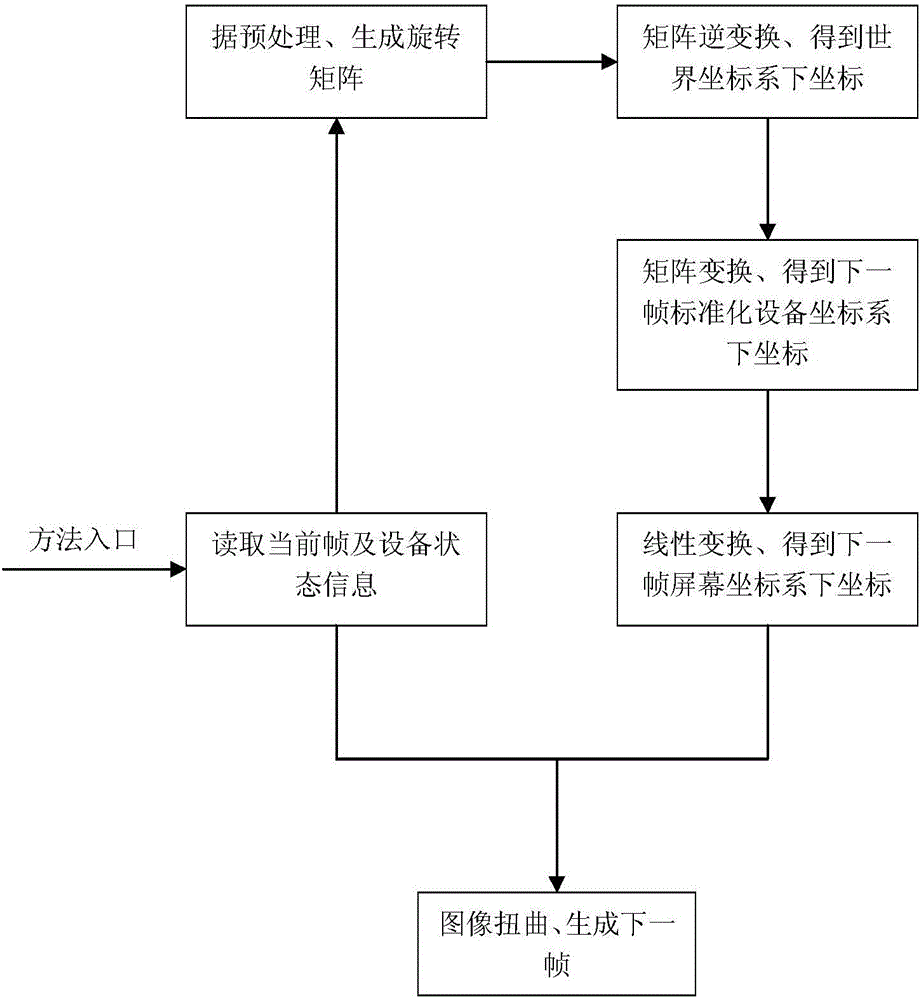

[0057] Embodiment 1: an image warping method based on matrix inverse operation in a virtual reality mobile terminal, comprising the following steps:

[0058] Step 1, read the current frame information and obtain the coordinates $(x_{original}, y_{original})^T$ in the screen coordinate system at the current frame moment; read the device state at the current frame and at the next frame;

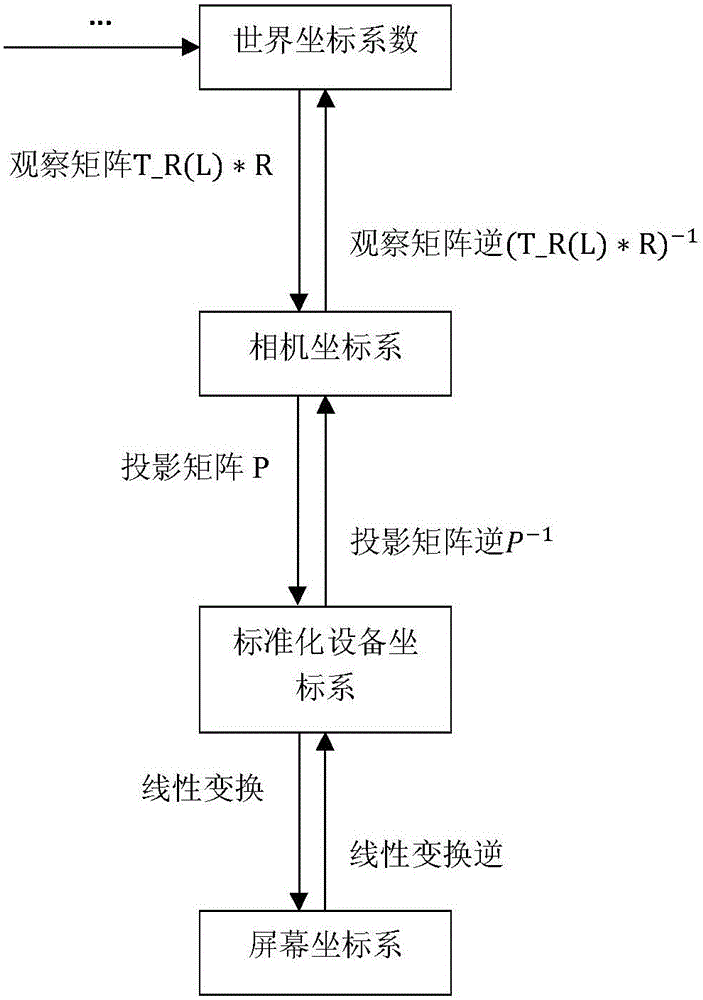

[0059] Step 2, perform the necessary preprocessing on the data read in step 1: convert the coordinates $(x_{original}, y_{original})^T$ to the coordinates $(x, y, z, w)^T$ in the normalized device coordinate system at the current frame moment, and generate the first rotation matrix R and the second rotation matrix R' according to the device state;

[0060] Step 3, according to the projection matrix P, the first rotation matrix R, and the viewpoint translation matrix T, for the coordinates $(x, y, z, w)^T$ in the normalized device coordinate system at each current frame moment, perform matrix inverse ...
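The description of step 3 is cut off here, so the exact operation is not recoverable from this text. One plausible reading, offered only as a sketch, is that the normalized device coordinate is multiplied by the inverse of the combined matrix P·T·R to recover a scene point, which is then projected again with the next-frame rotation R'. The multiplication order P·T·R, the helper names (`mat_mul`, `mat_vec`, `mat_inverse`, `reproject`), and the final perspective divide are all assumptions, not taken from the source.

```python
def mat_mul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def mat_vec(m, v):
    """Multiply a 4x4 matrix by a 4-component column vector."""
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]


def mat_inverse(m):
    """Invert a 4x4 matrix by Gauss-Jordan elimination with partial pivoting."""
    n = 4
    # Augment m with the identity: [m | I].
    aug = [list(m[i]) + [1.0 if i == j else 0.0 for j in range(n)]
           for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    # The right half of the augmented matrix is now the inverse.
    return [row[n:] for row in aug]


def reproject(ndc, P, T, R, R_next):
    """Hypothetical reprojection: undo P*T*R, then apply P*T*R_next.

    Assumed reading of the truncated step 3; the source does not confirm
    the multiplication order or the perspective divide.
    """
    m_current = mat_mul(mat_mul(P, T), R)
    scene_point = mat_vec(mat_inverse(m_current), ndc)
    m_next = mat_mul(mat_mul(P, T), R_next)
    clip = mat_vec(m_next, scene_point)
    w = clip[3]  # assumed non-zero for visible points
    return [c / w for c in clip]
```

Under this reading, passing the same matrix for `R` and `R_next` returns the input coordinate unchanged (up to floating-point error), which is a convenient sanity check before using real gyroscope data.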

Embodiment 2

[0068] Embodiment 2: The image warping method based on matrix inverse operation in a virtual reality mobile terminal as described in Embodiment 1, wherein step 1 includes:

[0069] Before the image is displayed on the screen device, read the current frame information, that is, the framebuffer content, including the colorbuffer and depthbuffer content that has been rendered into a texture, and obtain the coordinates $(x_{original}, y_{original})^T$ of each image pixel in the screen coordinate system at the current frame moment. The colorbuffer holds the RGB values of the pixels of the image to be displayed. Define the image width as WindowWidth and the image height as WindowHeight; then $0 \le x_{original} < WindowWidth$ and $0 \le y_{original} < WindowHeight$. For the coordinates $(x_{original}, y_{original})^T$, the corresponding depth value is $depth_{original}(x_{original}, y_{original})$;

[0070] Reading the device state at the current frame and at the next frame means reading the device's gyroscope data at those two moments. OpenGL ...

Embodiment 3

[0071] Embodiment 3: The image warping method based on matrix inverse operation in a virtual reality mobile terminal as described in Embodiment 2, wherein step 2 includes the following steps:

[0072] Step 2-1, convert the coordinates $(x_{original}, y_{original})^T$ in the screen coordinate system at the current frame moment to the coordinates $(x, y, z, w)^T$ in the normalized device coordinate system at the current frame moment. Specifically: $z = 2 \cdot depth_{original}(x_{original}, y_{original}) - 1$, $w = 1.0$;
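The conversion in step 2-1 can be sketched as follows. Only the formulas for z and w appear in the text above; the x and y formulas used here are the usual linear viewport mapping onto [-1, 1] and are an assumption, as is the function name `screen_to_ndc`.

```python
def screen_to_ndc(x_original, y_original, depth, window_width, window_height):
    """Map a screen-space pixel and its depth value to (x, y, z, w).

    Assumption: x and y use a linear mapping from pixel coordinates to
    [-1, 1]; the source only states the z and w formulas.
    """
    if not (0 <= x_original < window_width and 0 <= y_original < window_height):
        raise ValueError("pixel lies outside the image")
    x = 2.0 * x_original / window_width - 1.0   # assumed mapping
    y = 2.0 * y_original / window_height - 1.0  # assumed mapping
    z = 2.0 * depth - 1.0                       # from the text: z = 2 * depth - 1
    w = 1.0                                     # from the text: w = 1.0
    return (x, y, z, w)
```

For example, with a 1920 by 1080 image, the pixel (960, 540) with depth 1.0 maps to (0.0, 0.0, 1.0, 1.0) under these assumptions.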

[0073] Step 2-2, generate the rotation matrices R and R′ according to the device state. The specific steps are:

[0074] Step 2-2-1, generate a quaternion from the Euler angles; the conversion formula is:

[0075]

[0076] Step 2-2-2, generate a rotation matrix from the quaternion; the conversion formula is:

[0077] $R_q$ = 1 - 2 ...
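The conversion formulas for steps 2-2-1 and 2-2-2 are missing or truncated in this text. As a rough illustration only, the sketch below uses the common textbook conversions: Euler angles to a unit quaternion, then the quaternion to a homogeneous 4x4 rotation matrix. The angle convention (roll about x, pitch about y, yaw about z, composed in Z-Y-X order) and both function names are assumptions; the source may use a different convention.

```python
import math


def euler_to_quaternion(roll, pitch, yaw):
    """Convert Euler angles in radians to a unit quaternion (w, x, y, z).

    Assumed convention: roll about x, pitch about y, yaw about z,
    composed in Z-Y-X order. The source's convention is not shown.
    """
    cr, sr = math.cos(roll / 2), math.sin(roll / 2)
    cp, sp = math.cos(pitch / 2), math.sin(pitch / 2)
    cy, sy = math.cos(yaw / 2), math.sin(yaw / 2)
    w = cr * cp * cy + sr * sp * sy
    x = sr * cp * cy - cr * sp * sy
    y = cr * sp * cy + sr * cp * sy
    z = cr * cp * sy - sr * sp * cy
    return (w, x, y, z)


def quaternion_to_matrix(q):
    """Build a 4x4 homogeneous rotation matrix from a unit quaternion."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y), 0.0],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x), 0.0],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y), 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ]
```

Under this sketch, R would be built from the gyroscope angles at the current frame and R′ from the angles at the next frame by calling the two functions in sequence.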
