Dual-mode video emotion recognition method with composite spatial-temporal characteristic

A spatio-temporal feature and emotion recognition technique in the field of pattern recognition, which addresses the performance degradation of VLBP (volume local binary pattern) features.
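For reference, the standard VLBP operator named above can be sketched as follows. This is not the composite feature of the present invention, which this excerpt does not describe. It is a minimal illustration of plain VLBP, assuming grey-level frames stored as nested lists indexed `[t][y][x]`, parameters P=4 neighbours, radius R=1, and frame interval L=1. It also assumes nearest-pixel sampling in place of bilinear interpolation. The helper names `neighbour_offsets`, `vlbp_code`, and `vlbp_histogram` are hypothetical. Each code thresholds 3P+2 samples against the centre pixel of the middle frame: the centre and P neighbours of the previous frame, P neighbours of the current frame, and the centre and P neighbours of the next frame.

```python
import math

def neighbour_offsets(P, R):
    """Integer (dy, dx) offsets of P points on a circle of radius R.

    Rounding to the nearest pixel is exact for P=4, R=1; for other settings
    it only approximates the bilinear interpolation usually used (assumption).
    """
    offsets = []
    for p in range(P):
        angle = 2 * math.pi * p / P
        dy = int(round(-R * math.sin(angle)))
        dx = int(round(R * math.cos(angle)))
        offsets.append((dy, dx))
    return offsets

def vlbp_code(volume, t, y, x, P=4, R=1, L=1):
    """VLBP_{L,P,R} code of pixel (t, y, x); volume is indexed [t][y][x]."""
    center = volume[t][y][x]
    offsets = neighbour_offsets(P, R)
    samples = [volume[t - L][y][x]]                                   # previous-frame centre
    samples += [volume[t - L][y + dy][x + dx] for dy, dx in offsets]  # previous-frame ring
    samples += [volume[t][y + dy][x + dx] for dy, dx in offsets]      # current-frame ring
    samples.append(volume[t + L][y][x])                               # next-frame centre
    samples += [volume[t + L][y + dy][x + dx] for dy, dx in offsets]  # next-frame ring
    code = 0
    for q, value in enumerate(samples):  # 3P + 2 binary decisions
        if value >= center:
            code |= 1 << q
    return code

def vlbp_histogram(volume, P=4, R=1, L=1):
    """Histogram of VLBP codes over all interior pixels (integer R assumed)."""
    bins = [0] * (1 << (3 * P + 2))
    T, H, W = len(volume), len(volume[0]), len(volume[0][0])
    for t in range(L, T - L):
        for y in range(R, H - R):
            for x in range(R, W - R):
                bins[vlbp_code(volume, t, y, x, P, R, L)] += 1
    return bins
```

Note that the histogram already has 2^(3P+2) = 16384 bins at P=4 and grows exponentially with P. This rapid growth is a commonly cited practical drawback of VLBP.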

Problems solved by technology

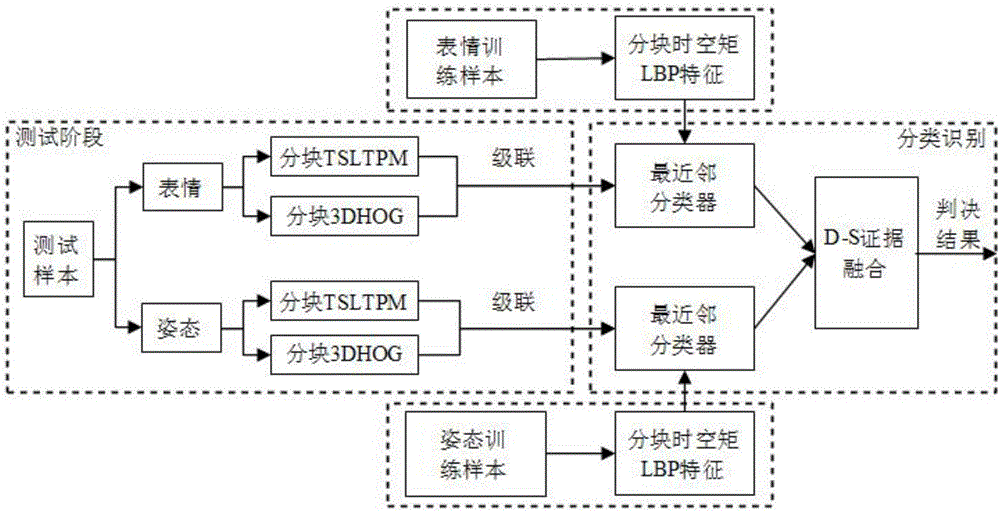


Embodiment

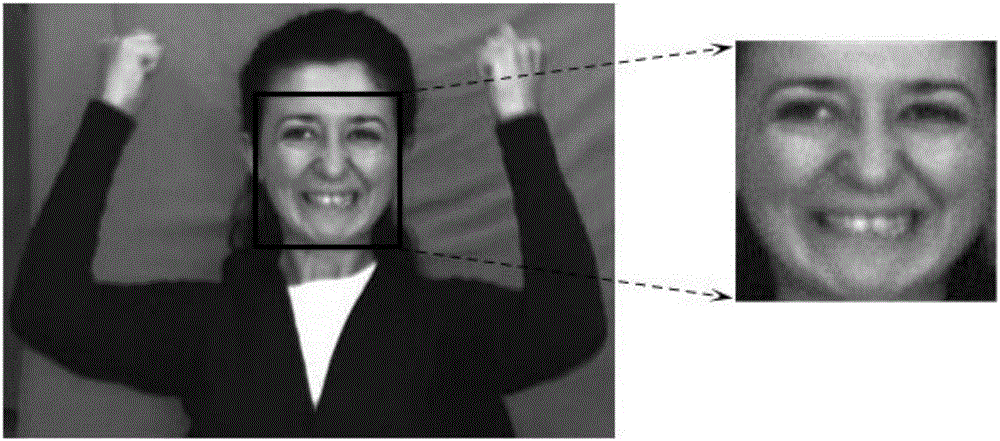

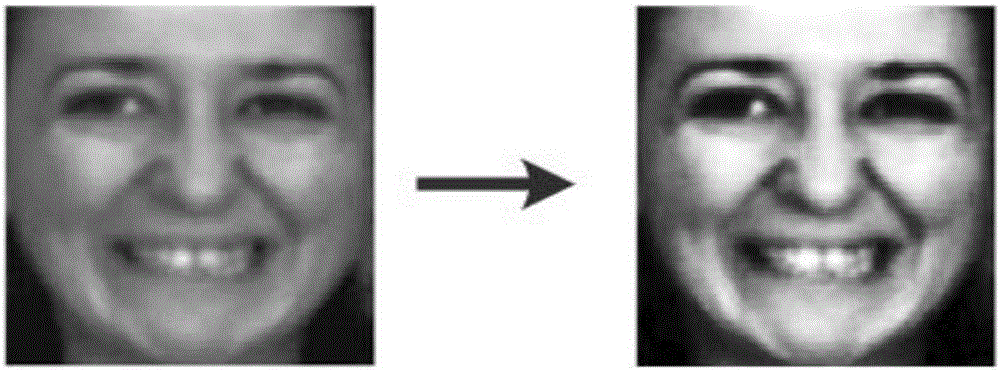

[0185] To verify the effectiveness of the present invention, the experiments use the only publicly available bimodal database: the FABO bimodal database of facial expressions and body postures. Because the database is not fully labeled, 12 subjects with many samples and relatively balanced emotion categories were selected for the experiments. The selected samples cover five emotions, all labeled: happiness, fear, anger, boredom, and uncertainty. In total there are 238 samples containing both gestures and expressions. The experiments were run under Windows XP (dual-core 2.53 GHz CPU, 2 GB memory) using VC6.0 and OpenCV 1.0. Facial expression frames and upper-body posture frames were resized to 96×96 pixels and 128×96 pixels, respectively. Some of the facial expression and gesture frames after size normalization are shown in Figur...
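The size-normalization step above can be sketched in a few lines. This is only a minimal illustration under stated assumptions, not the authors' VC6.0/OpenCV 1.0 code. It assumes that "128×96" means width 128 and height 96, and that frames are 2-D lists of grey levels. The source does not name an interpolation method, so nearest-neighbour resizing is used here as an assumption. The helpers `resize_nearest` and `normalize_sample` and the label ordering in `EMOTIONS` are hypothetical.

```python
FACE_SIZE = (96, 96)      # (width, height) of facial-expression frames
POSTURE_SIZE = (128, 96)  # assumed (width, height) of upper-body posture frames
EMOTIONS = ("happiness", "fear", "anger", "boredom", "uncertainty")  # hypothetical order

def resize_nearest(frame, size):
    """Nearest-neighbour resize of a 2-D list of pixels to size=(width, height)."""
    src_h, src_w = len(frame), len(frame[0])
    dst_w, dst_h = size
    return [
        [frame[y * src_h // dst_h][x * src_w // dst_w] for x in range(dst_w)]
        for y in range(dst_h)
    ]

def normalize_sample(face_frames, posture_frames, label):
    """Resize every frame of one bimodal sample and map its label to a class index."""
    faces = [resize_nearest(f, FACE_SIZE) for f in face_frames]
    postures = [resize_nearest(f, POSTURE_SIZE) for f in posture_frames]
    return faces, postures, EMOTIONS.index(label)
```

With modern OpenCV the equivalent call would be `cv2.resize(frame, (128, 96))`, whose `dsize` argument is likewise given as (width, height).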
