A Brain-Computer Interface-Based Automated Assistance Method for Robotic Arms

A brain-computer interface and robotic arm technology, applied in the field of brain-computer interface application research. It addresses the problem that existing approaches do not fully exploit the characteristics and advantages of combining a brain-computer interface with a robotic arm, with the aims of improving the user's capacity for independent living, reducing burden, and easing practical application.


Embodiment 1

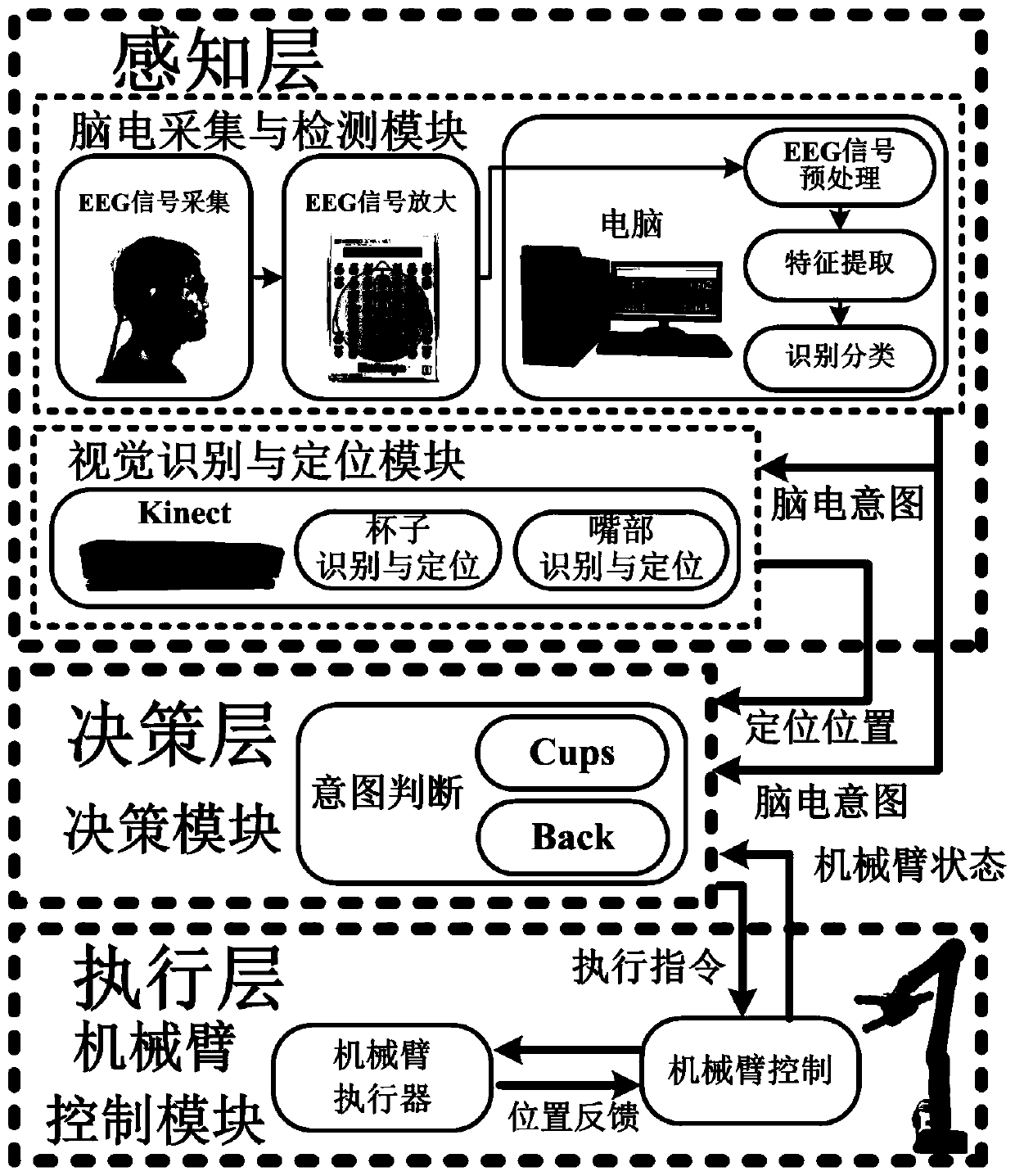

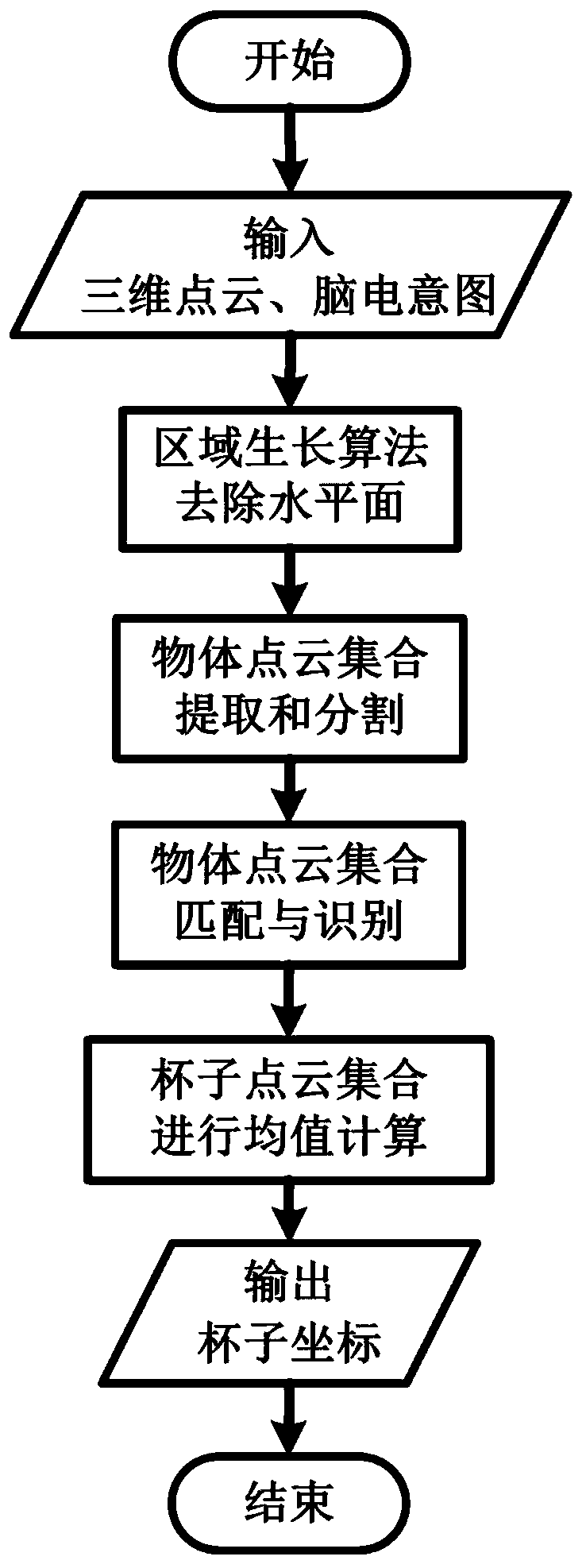

[0046] As shown in Figure 1, this embodiment provides a brain-computer interface-based autonomous assistance system for a robotic arm. The system has a three-layer structure: a perception layer, a decision-making layer, and an execution layer. The perception layer includes an EEG acquisition and detection module and a visual recognition and positioning module. The EEG acquisition and detection module collects EEG signals and analyzes them to identify the user's intention; the visual recognition and positioning module identifies and locates the cup corresponding to that intention, as well as the position of the user's mouth. The execution layer includes a manipulator control module, the carrier that assists the user in actual operation; it performs trajectory planning and control of the manipulator according to the execution instructions received from the decision-making module. The decision-making layer includes a decision-making...
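The data flow between the three layers described in [0046] can be sketched as follows. This is only an illustrative outline, not the patent's implementation: the names `Perception`, `Instruction`, `decide`, and `plan_trajectory` are hypothetical, the intent labels are made up, and straight-line waypoints stand in for whatever trajectory planning the manipulator control module actually uses.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]  # x, y, z in an assumed shared frame


@dataclass
class Perception:
    """Output of the perception layer (hypothetical structure)."""
    intent: str                      # cup label decoded from EEG, e.g. "cup_2"
    cup_positions: Dict[str, Point]  # from the visual recognition module
    mouth_position: Point            # from the visual recognition module


@dataclass
class Instruction:
    """Execution instruction passed from decision layer to execution layer."""
    pick: Point
    deliver: Point


def decide(p: Perception) -> Instruction:
    """Decision layer: combine the decoded intent with the located targets."""
    if p.intent not in p.cup_positions:
        raise ValueError(f"no located cup matches intent {p.intent!r}")
    return Instruction(pick=p.cup_positions[p.intent], deliver=p.mouth_position)


def plan_trajectory(start: Point, instr: Instruction, steps: int = 5) -> List[Point]:
    """Execution layer placeholder: linear waypoints start -> pick -> deliver."""
    def segment(a: Point, b: Point) -> List[Point]:
        return [
            tuple(a[i] + (b[i] - a[i]) * k / steps for i in range(3))
            for k in range(1, steps + 1)
        ]
    return [start] + segment(start, instr.pick) + segment(instr.pick, instr.deliver)
```

Keeping the instruction as a plain data object means the execution layer needs no knowledge of EEG decoding or vision, which matches the layered separation the embodiment describes.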

Embodiment 2

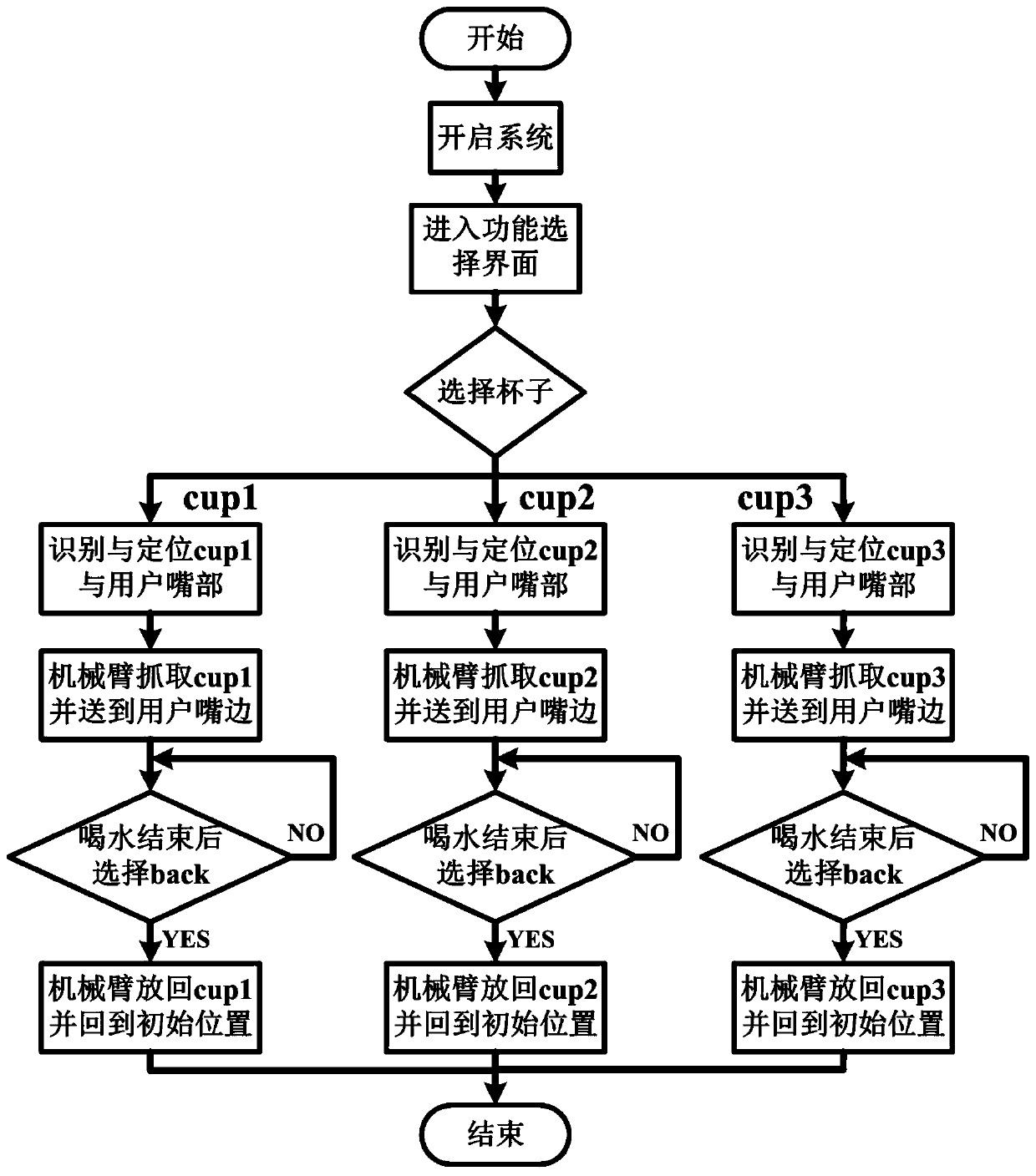

[0052] This embodiment provides a brain-computer interface-based autonomous assistance method for a robotic arm. As shown in Figure 2, the method includes the following steps:

[0053] 1) The user sits in front of the first computer screen, adjusts their position, puts on the electrode cap for EEG acquisition, turns on the EEG acquisition instrument and the first computer, and confirms that signal acquisition is working properly;

[0054] 2) Start the brain-computer interface-based autonomous assistance system for the robotic arm. Confirm that the Microsoft Kinect vision sensor used to recognize and locate the user's mouth captures the mouth correctly, and that the three preset cups to be grasped are placed within the field of view of the Microsoft Kinect vision sensor used to identify and locate them;

[0055] 3) The first computer screen enters a function-key interface with flickering visual stimuli, which includes four fun...
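The confirmations in steps 1) and 2) amount to a pre-flight check before the stimulus interface starts. A minimal sketch of such a check is given below, assuming the three conditions are already available as simple values; `SetupStatus` and `preflight_problems` are hypothetical names, and how each condition is actually measured (EEG signal quality, Kinect detection) is outside this sketch.

```python
from dataclasses import dataclass
from typing import List

EXPECTED_CUPS = 3  # the embodiment uses three preset cups


@dataclass
class SetupStatus:
    """Snapshot of the setup conditions named in steps 1) and 2)."""
    eeg_signal_ok: bool   # step 1: signal acquisition confirmed
    mouth_detected: bool  # step 2: mouth-tracking sensor sees the mouth
    cups_in_view: int     # step 2: cups visible to the cup-tracking sensor


def preflight_problems(status: SetupStatus) -> List[str]:
    """Return a list of unmet conditions; an empty list means ready to start."""
    problems = []
    if not status.eeg_signal_ok:
        problems.append("EEG signal acquisition not confirmed")
    if not status.mouth_detected:
        problems.append("user's mouth not captured by the vision sensor")
    if status.cups_in_view != EXPECTED_CUPS:
        problems.append(
            f"{status.cups_in_view} of {EXPECTED_CUPS} cups in the field of view"
        )
    return problems
```

Returning every unmet condition at once, rather than stopping at the first, lets an operator correct the whole setup in one pass.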
