Multi-core shared last-level cache management method and device for mixed main memory

A last-level cache management technology, applied in memory systems, electrical digital data processing, instruments, etc., to achieve the effect of reducing interference.

Examples

Embodiment 1

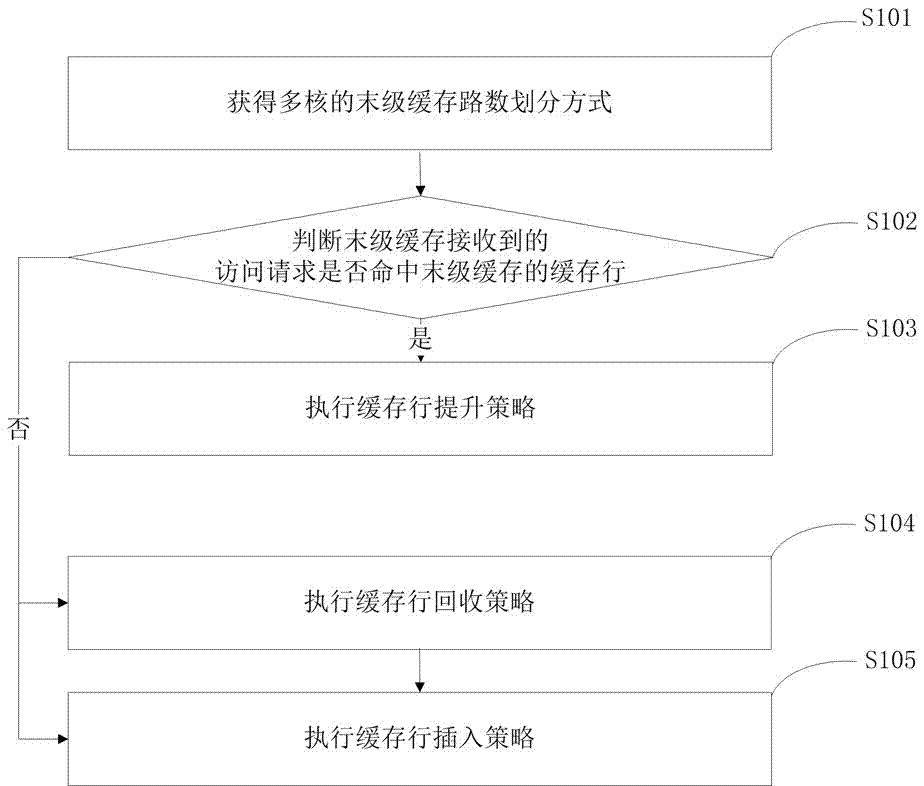

[0072] Referring to Figure 1, a mixed-main-memory-oriented multi-core shared last-level cache management method provided by the present invention is shown. The mixed main memory includes DRAM and NVM. The last-level cache is divided into multiple cache groups, each cache group includes multiple cache lines, and data in the mixed main memory is mapped to the last-level cache in a multi-way set-associative manner. The management method includes the following steps:

[0073] S101: Obtain the way-partitioning scheme that divides the last-level cache ways among the cores of the processor.

[0074] S102: Determine whether the access request received by the last-level cache hits a cache line of the last-level cache.

[0075] If it hits, proceed to step S103 to execute the cache line promotion policy (Promotion Policy).

[0076] If it misses, the data must be obtained from the upper-level cache or main memory, and the method proceeds directly to step S104 to execute the cache line insertion...
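The hit/miss dispatch of steps S102 to S104 can be sketched as below. This is only an illustrative sketch, not the patent's implementation: the class name `SetAssociativeLLC`, the 64-byte line size, the modulo group indexing, and the placeholder policies (promotion moves a line to the front, insertion evicts from the back, i.e. plain LRU) are all assumptions, because the excerpt is truncated before the actual promotion and insertion policies are described. Way partitioning from S101 is not modeled.

```python
from dataclasses import dataclass, field

LINE_SIZE = 64  # bytes per cache line (assumed, not stated in the source)


@dataclass
class SetAssociativeLLC:
    """Toy multi-way set-associative cache; each group holds up to num_ways tags."""
    num_sets: int
    num_ways: int
    sets: list = field(default_factory=list)

    def __post_init__(self):
        # One list of tags per cache group, ordered from highest to lowest priority.
        self.sets = [[] for _ in range(self.num_sets)]

    def locate(self, addr):
        """Map an address to (group index, tag) using modulo indexing (assumed)."""
        line = addr // LINE_SIZE
        return line % self.num_sets, line // self.num_sets

    def access(self, addr):
        set_idx, tag = self.locate(addr)
        ways = self.sets[set_idx]
        if tag in ways:              # S102: the request hits a cache line
            self.promote(ways, tag)  # S103: promotion policy
            return "hit"
        self.insert(ways, tag)       # S104: insertion policy after fetching the data
        return "miss"

    def promote(self, ways, tag):
        # Placeholder promotion policy: move the line to the highest priority.
        ways.remove(tag)
        ways.insert(0, tag)

    def insert(self, ways, tag):
        # Placeholder insertion policy: evict the lowest-priority line if full.
        if len(ways) >= self.num_ways:
            ways.pop()
        ways.insert(0, tag)
```

A real policy would replace `promote` and `insert`; the dispatch in `access` is the only part taken directly from the steps above.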

Embodiment 2

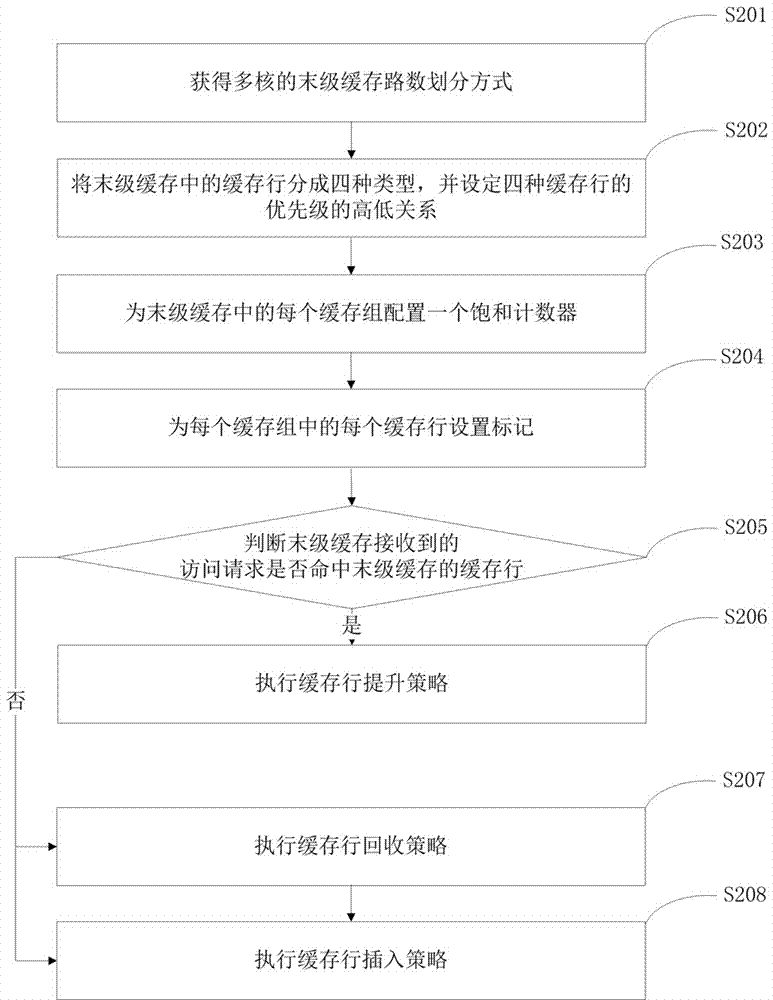

[0092] Referring to Figure 2, another mixed-main-memory-oriented multi-core shared last-level cache management method provided by the present invention is shown. The mixed main memory includes DRAM and NVM. The last-level cache is divided into multiple cache groups, each cache group includes multiple cache lines, and data in the mixed main memory is mapped to the last-level cache in a multi-way set-associative manner. The management method includes the following steps:

[0093] S201: Obtain the way-partitioning scheme that divides the last-level cache ways among the cores of the processor.

[0094] S202: Divide the cache lines in the last-level cache (Last Level Cache, LLC for short) into four types: dirty NVM data (Dirty-NVM, denoted as DN), dirty DRAM data (Dirty-DRAM, denoted as DD), clean NVM data (Clean-NVM, denoted as CN) and clean DRAM data (Clean-DRAM, denoted as CD). The priorities of the four cache line types DN, DD, CN and CD are respective...
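The four-way classification in S202 depends on two properties of a cache line: whether it is dirty and which memory backs it. A minimal sketch follows; the names `MemType`, `LineType`, and `classify_line` are hypothetical, and no priority ordering is encoded because the excerpt is truncated before the priorities are stated.

```python
from enum import Enum


class MemType(Enum):
    DRAM = "DRAM"
    NVM = "NVM"


class LineType(Enum):
    DN = "Dirty-NVM"
    DD = "Dirty-DRAM"
    CN = "Clean-NVM"
    CD = "Clean-DRAM"


def classify_line(dirty: bool, source: MemType) -> LineType:
    """Return the S202 type of a cache line from its dirty bit and backing memory."""
    if source is MemType.NVM:
        return LineType.DN if dirty else LineType.CN
    return LineType.DD if dirty else LineType.CD
```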

Embodiment approach

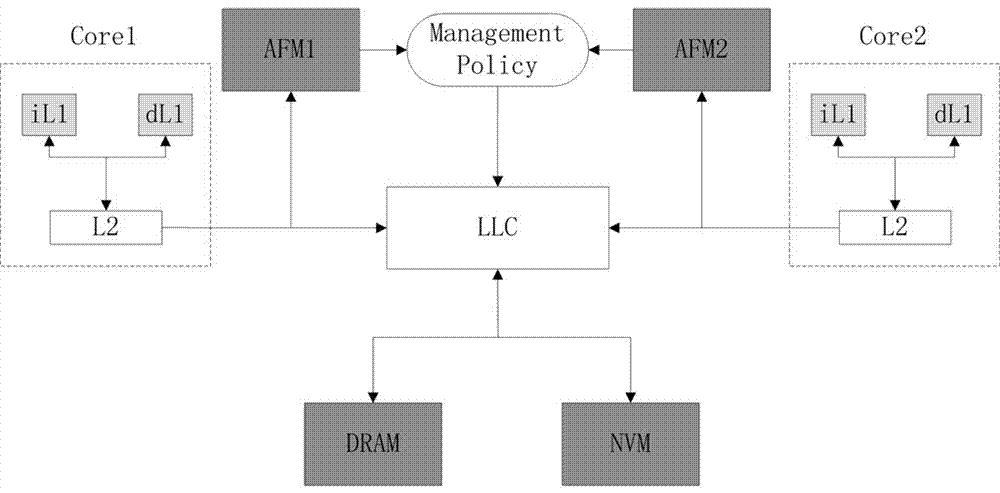

[0125] Referring to Figure 3, a schematic diagram of the overall system architecture provided by this embodiment is shown. The main memory of the system is composed of DRAM and NVM, which share the same linear address space. The on-chip cache system has a multi-level hierarchical structure, and the last-level cache is shared by the cores (core1 and core2). In addition, the present invention sets an AFM for each core of the processor to identify the memory access characteristics of the application program on the corresponding core, so as to obtain the hit situation of the cache lines corresponding to the application program.
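Because DRAM and NVM sit in one linear address space, the backing memory of a line can be decided from its address alone. The sketch below illustrates that idea under loudly stated assumptions: the capacities, the function name `backing_memory`, and the layout (DRAM at the low addresses, NVM directly above it) are hypothetical and are not given in the source.

```python
DRAM_BYTES = 1 << 30  # assumed DRAM capacity (1 GiB), not from the source
NVM_BYTES = 4 << 30   # assumed NVM capacity (4 GiB), not from the source


def backing_memory(addr: int) -> str:
    """Return "DRAM" or "NVM" for an address in the shared linear address space.

    Assumes DRAM occupies [0, DRAM_BYTES) and NVM the range directly above it.
    """
    if not 0 <= addr < DRAM_BYTES + NVM_BYTES:
        raise ValueError(f"address {addr:#x} is outside the main memory range")
    return "DRAM" if addr < DRAM_BYTES else "NVM"
```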

[0126] Referring to Figure 4, a schematic diagram of the internal structure of the AFM provided by this embodiment is shown. The time taken for the sum of the numbers of instructions run by the multiple cores of the processor to go from zero to 100 million is taken as one counting cycle. At the beginning of each counting cycle, 32 cache groups are selected as monitoring samples of the acc...
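The counting-cycle bookkeeping described above can be sketched as follows. The 100 million instruction threshold and the 32 sampled groups come from the text; everything else is an assumption for illustration, including the class name `CountingCycle`, the uniformly random choice of sampled groups (the excerpt does not say how they are chosen), and the omission of whatever the AFM records per sample, which is truncated in the source.

```python
import random

CYCLE_INSTRUCTIONS = 100_000_000  # instructions summed over all cores per cycle
SAMPLED_GROUPS = 32               # cache groups monitored in each cycle


class CountingCycle:
    """Tracks the counting cycle and the sampled cache groups (illustrative only)."""

    def __init__(self, num_groups, num_cores, seed=None):
        self.num_groups = num_groups
        self.rng = random.Random(seed)
        self.instructions = [0] * num_cores
        self.sampled = self._pick_samples()

    def _pick_samples(self):
        # Assumption: samples are drawn uniformly at random without replacement.
        return set(self.rng.sample(range(self.num_groups), SAMPLED_GROUPS))

    def retire(self, core, count):
        """Record retired instructions; return True when a new cycle begins."""
        self.instructions[core] += count
        if sum(self.instructions) >= CYCLE_INSTRUCTIONS:
            self.instructions = [0] * len(self.instructions)
            self.sampled = self._pick_samples()
            return True
        return False

    def is_monitored(self, group_idx):
        return group_idx in self.sampled
```

For example, with two cores, `retire(0, 60_000_000)` followed by `retire(1, 40_000_000)` closes the cycle on the second call, resets the per-core counters, and draws a fresh set of 32 monitored groups.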
