69 results for patented technology on how to "Improve memory access performance"

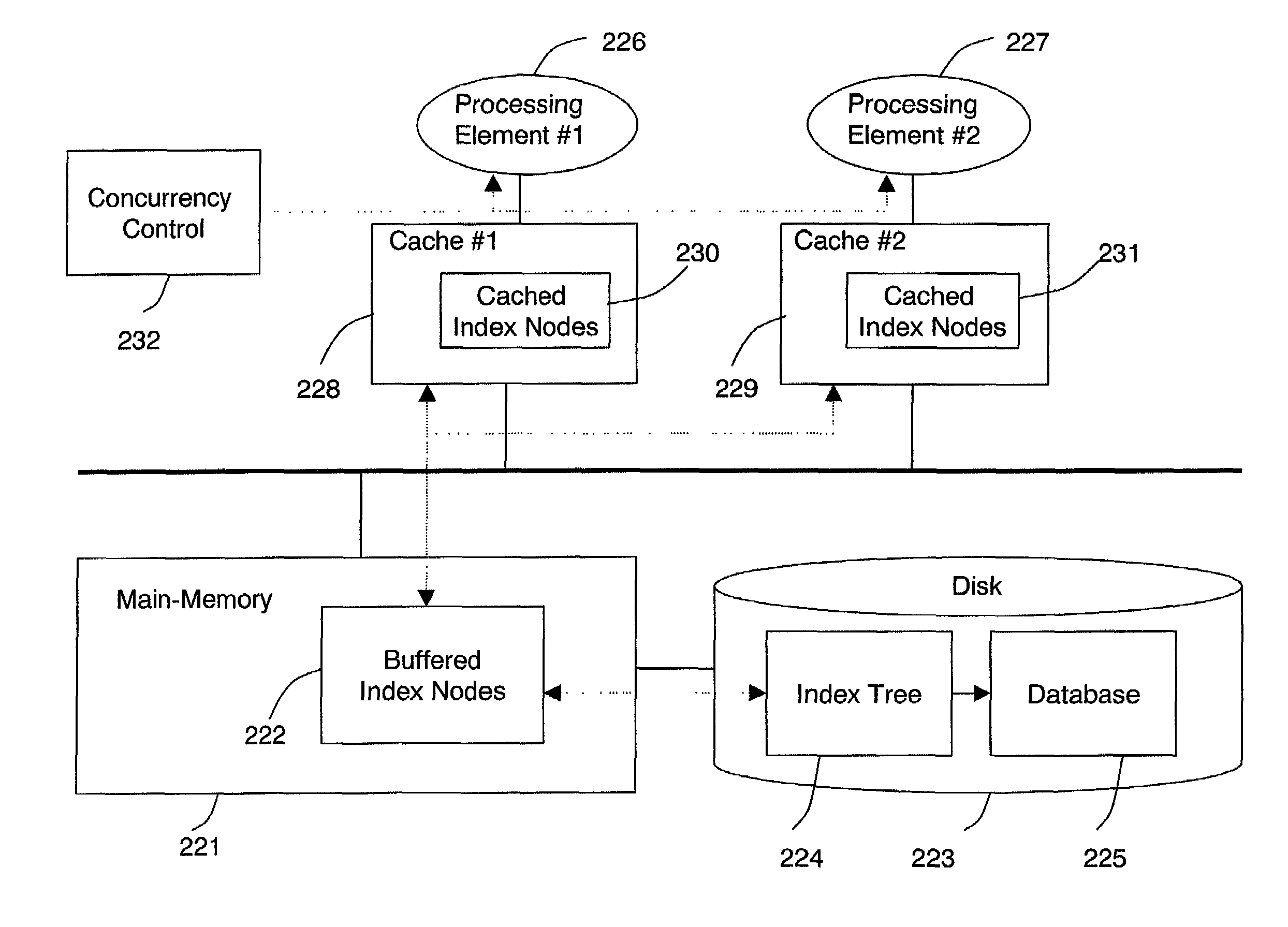

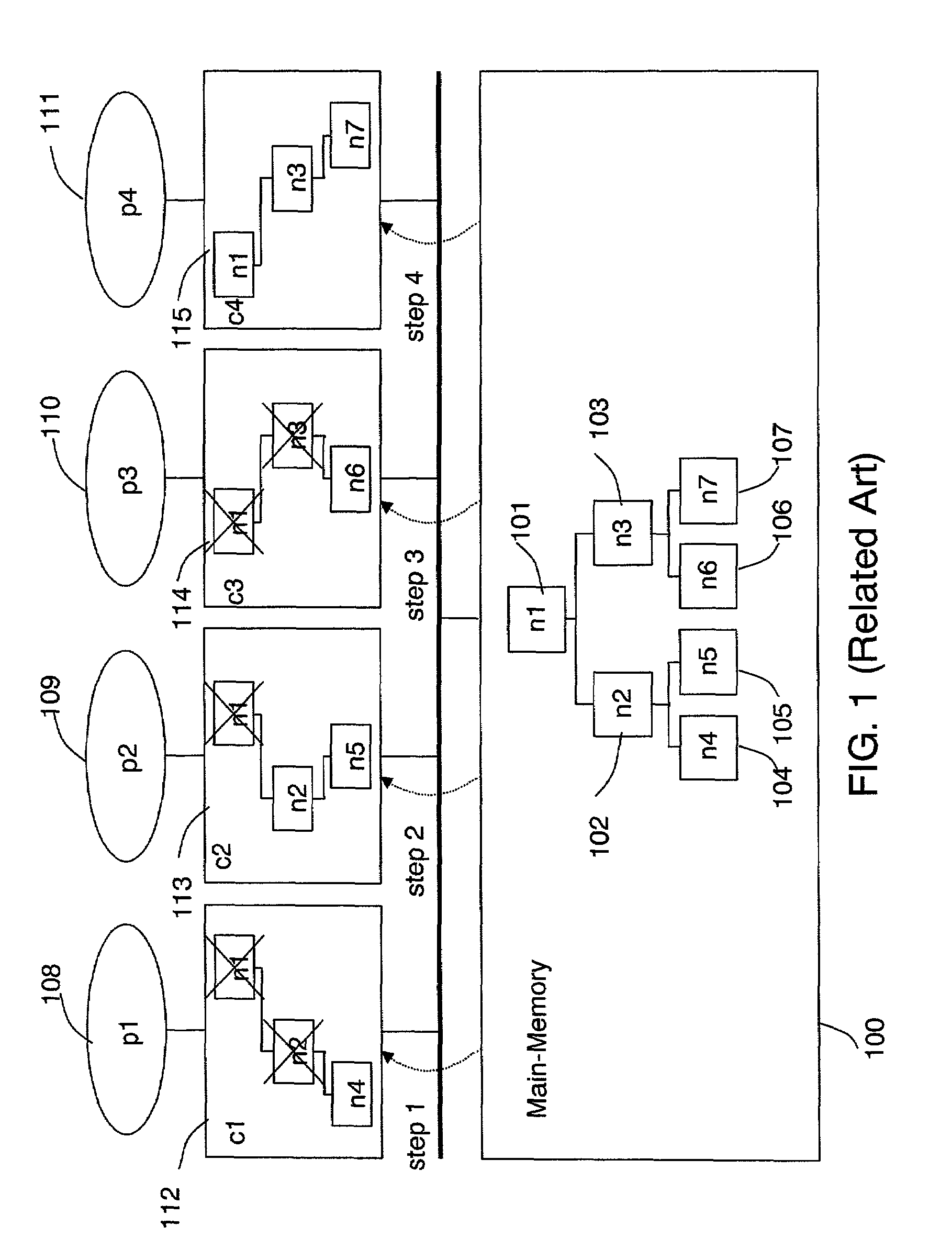

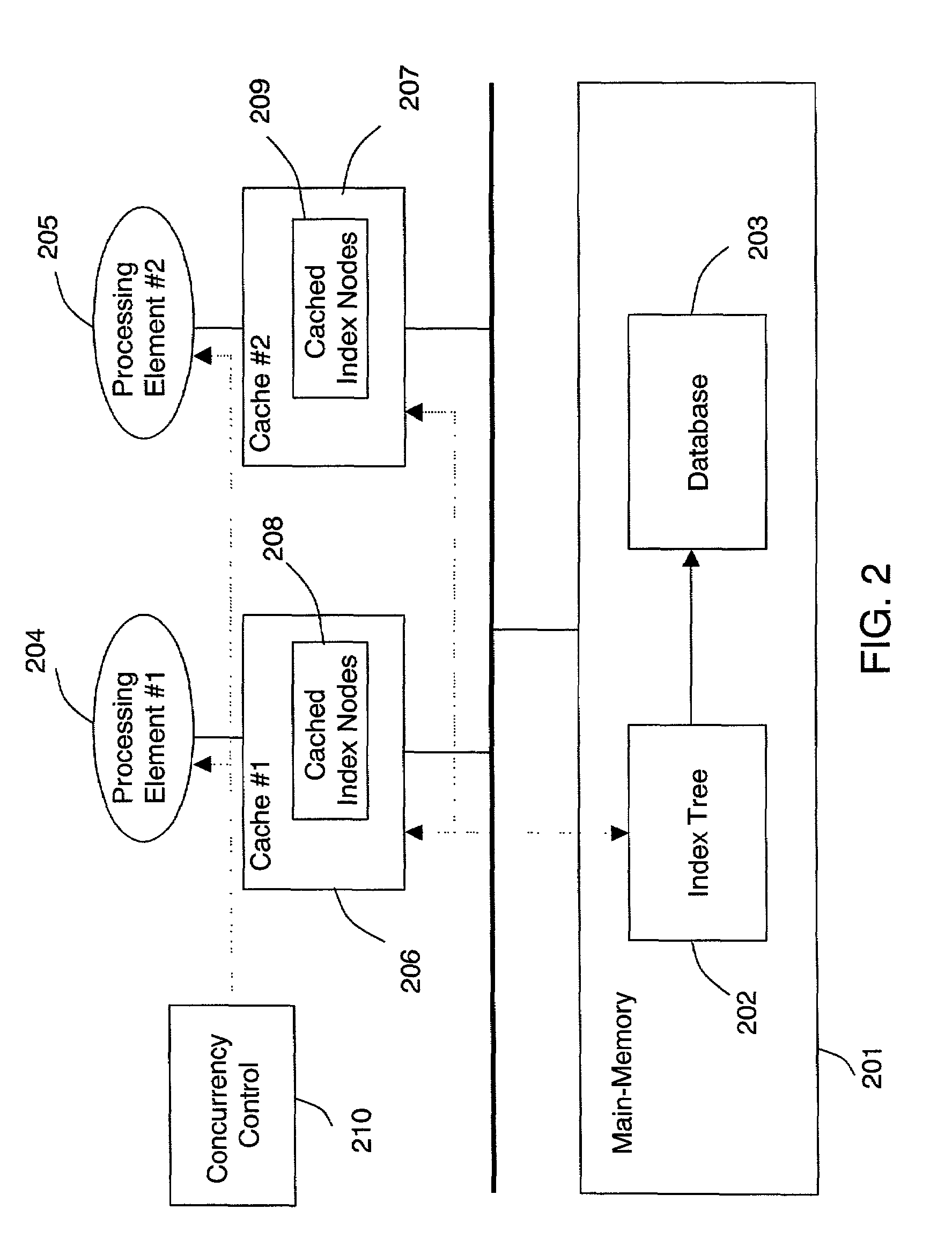

Cache-conscious concurrency control scheme for database systems

Active | US7293028B2 | Efficient index structure | Effective structure | Data processing applications | Digital data information retrieval | Processor register | Concurrency control

An optimistic, latch-free index traversal (“OLFIT”) concurrency control scheme is disclosed for an index structure for managing a database system. In each node of an index tree, the OLFIT scheme maintains a latch, a version number, and a link to the next node at the same level of the index tree. Index traversal involves consistent node read operations starting from the root. To ensure the consistency of node read operations without latching, every node update operation first obtains a latch and increments the version number after update of the node contents. Every node read operation begins with reading the version number into a register and ends with verifying if the current version number is consistent with the register-stored version number. If they are the same, the read operation is consistent. Otherwise, the node read is retried until the verification succeeds. The concurrency control scheme of the present invention is applicable to many index structures such as the B+-tree and the CSB+-tree.

Owner:TRANSACT & MEMORY
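
The version-checked read described in the OLFIT abstract can be sketched as follows. This is a minimal illustration, not the patented implementation: the `Node` class and the function names are hypothetical, and the extra check that the latch is free before accepting a read is an assumption added here so that a half-finished update is never accepted (the abstract itself only mentions the version comparison).

```python
import threading


class Node:
    """Hypothetical index node with a latch, a version number and contents."""

    def __init__(self, keys):
        self.latch = threading.Lock()
        self.version = 0
        self.keys = list(keys)


def update_node(node, new_keys):
    # Writers take the latch, change the contents, then bump the version.
    with node.latch:
        node.keys = list(new_keys)
        node.version += 1


def read_node(node):
    # Readers take no latch: remember the version, read, then verify.
    while True:
        seen = node.version            # stands in for the register copy
        snapshot = list(node.keys)
        # Assumption: also require that no writer currently holds the latch.
        if not node.latch.locked() and node.version == seen:
            return snapshot            # consistent read
        # Otherwise a writer interfered; retry the read.
```

A reader that overlaps a writer either sees the latch held or sees a changed version, so it retries instead of returning a mixed snapshot.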

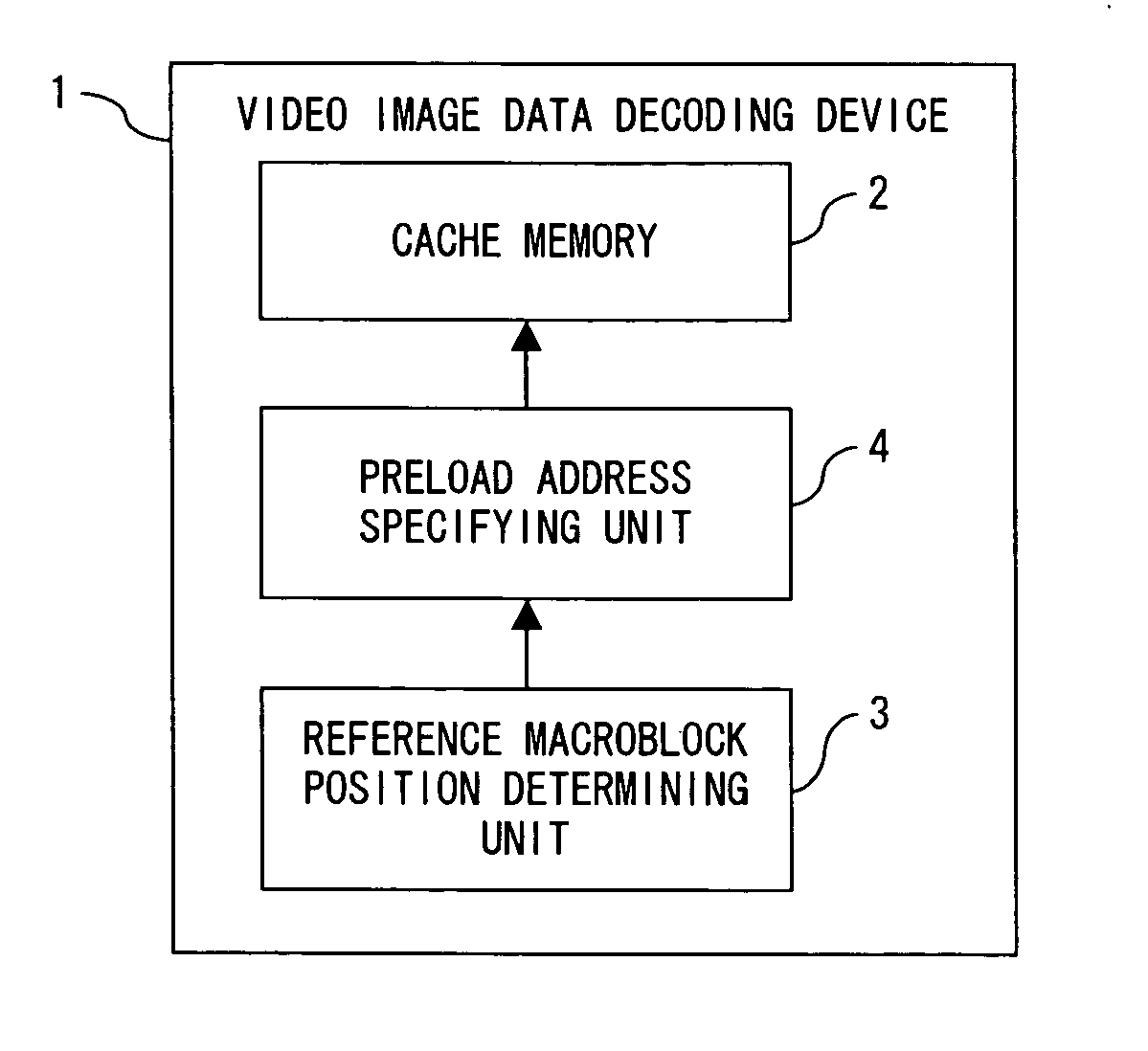

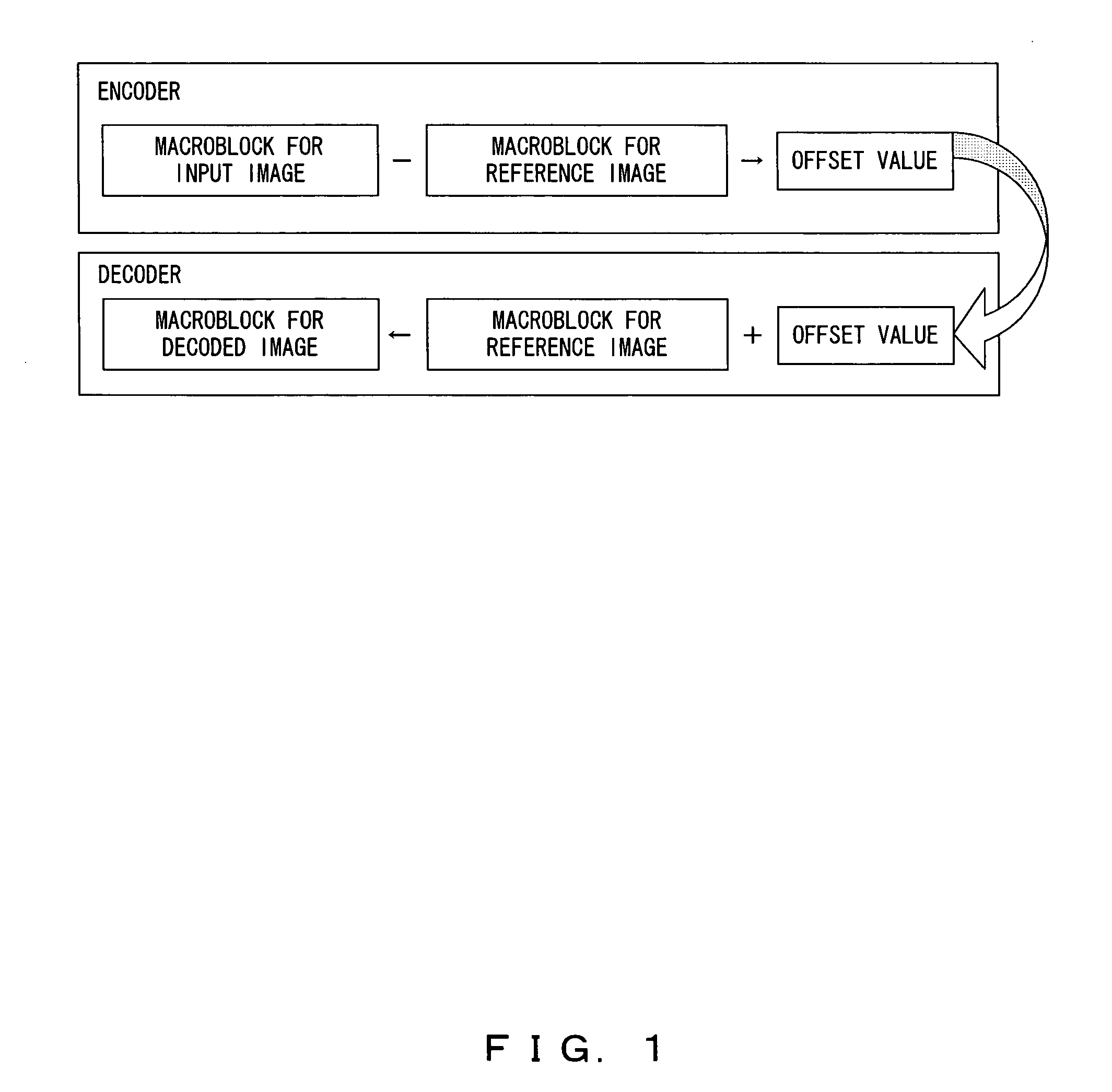

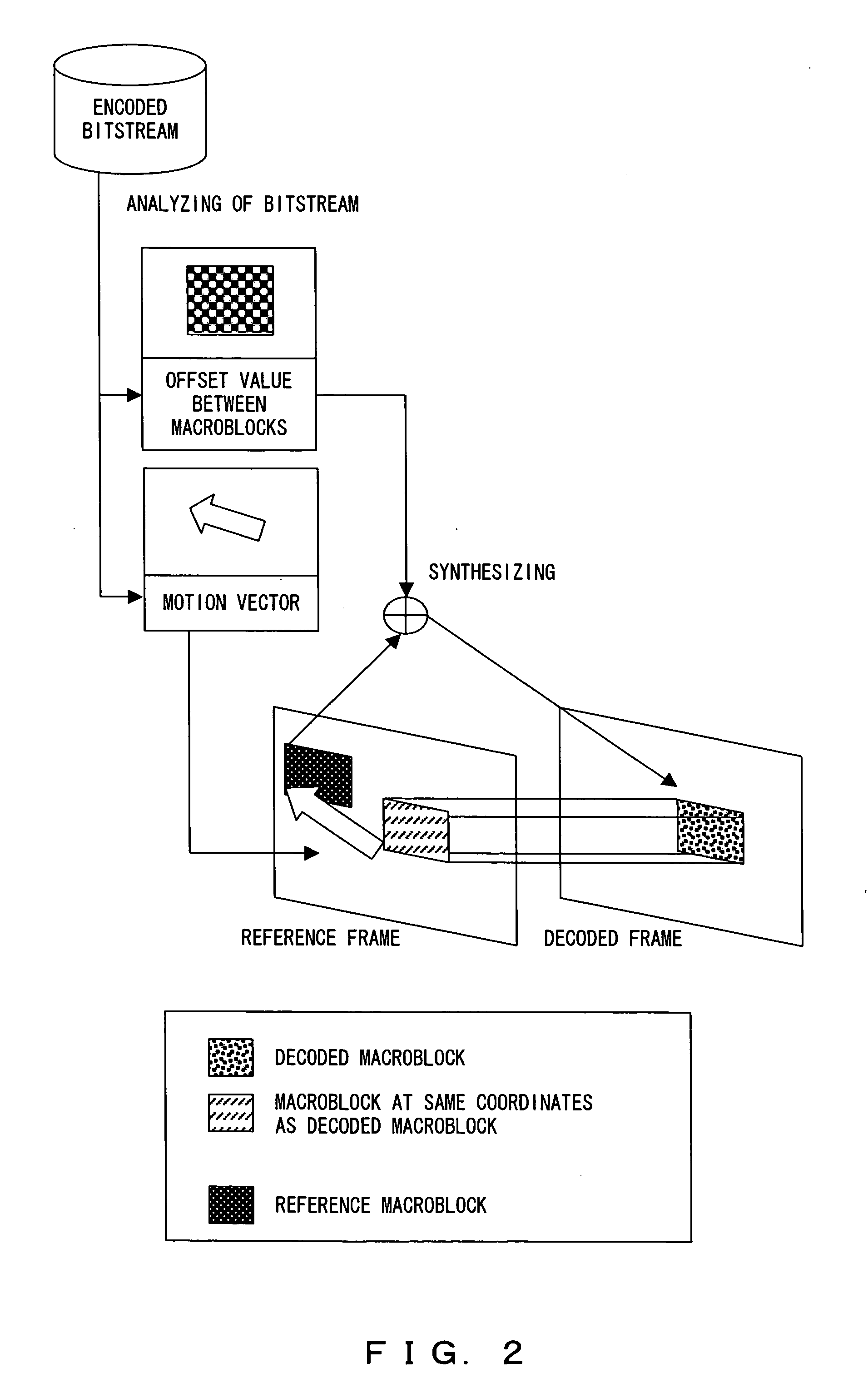

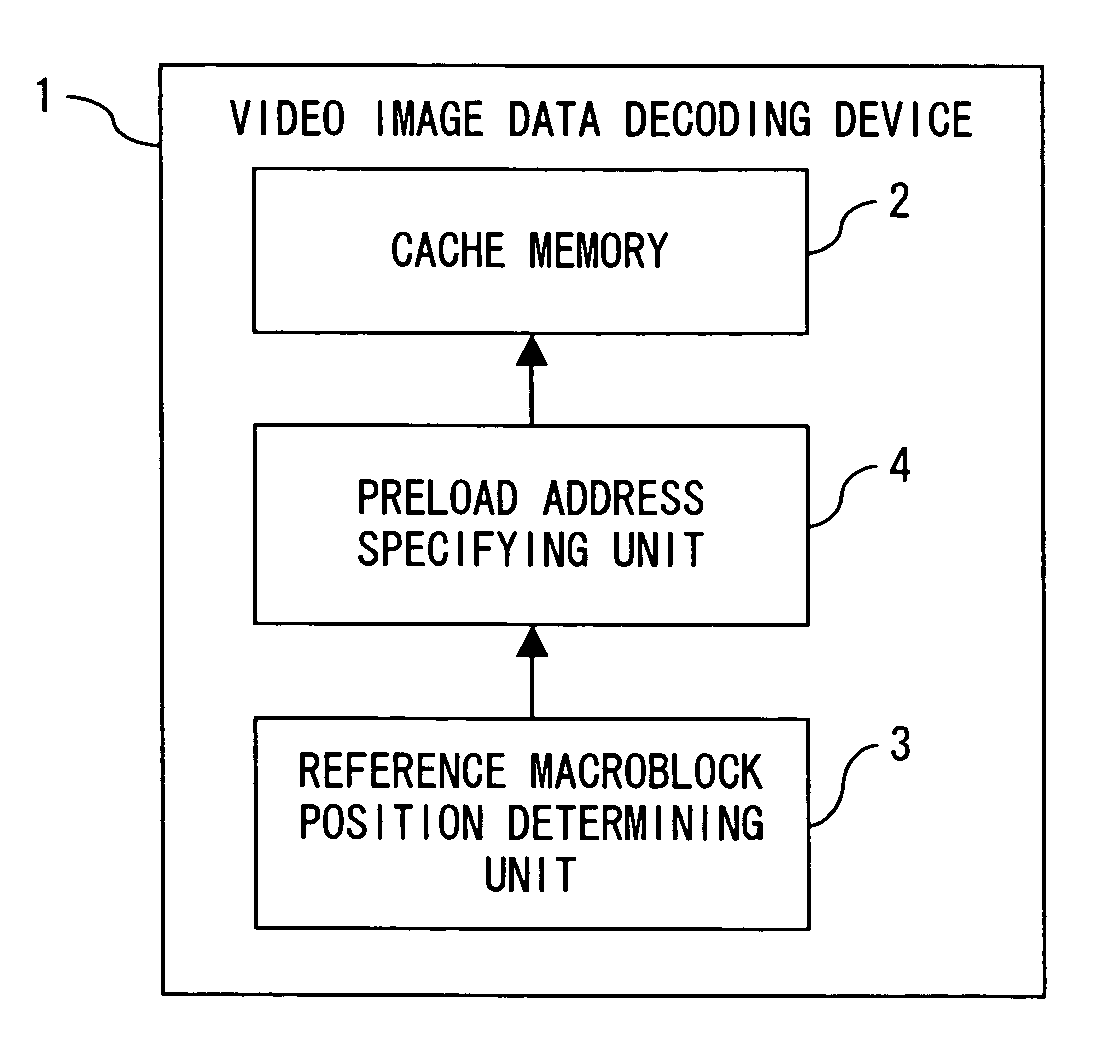

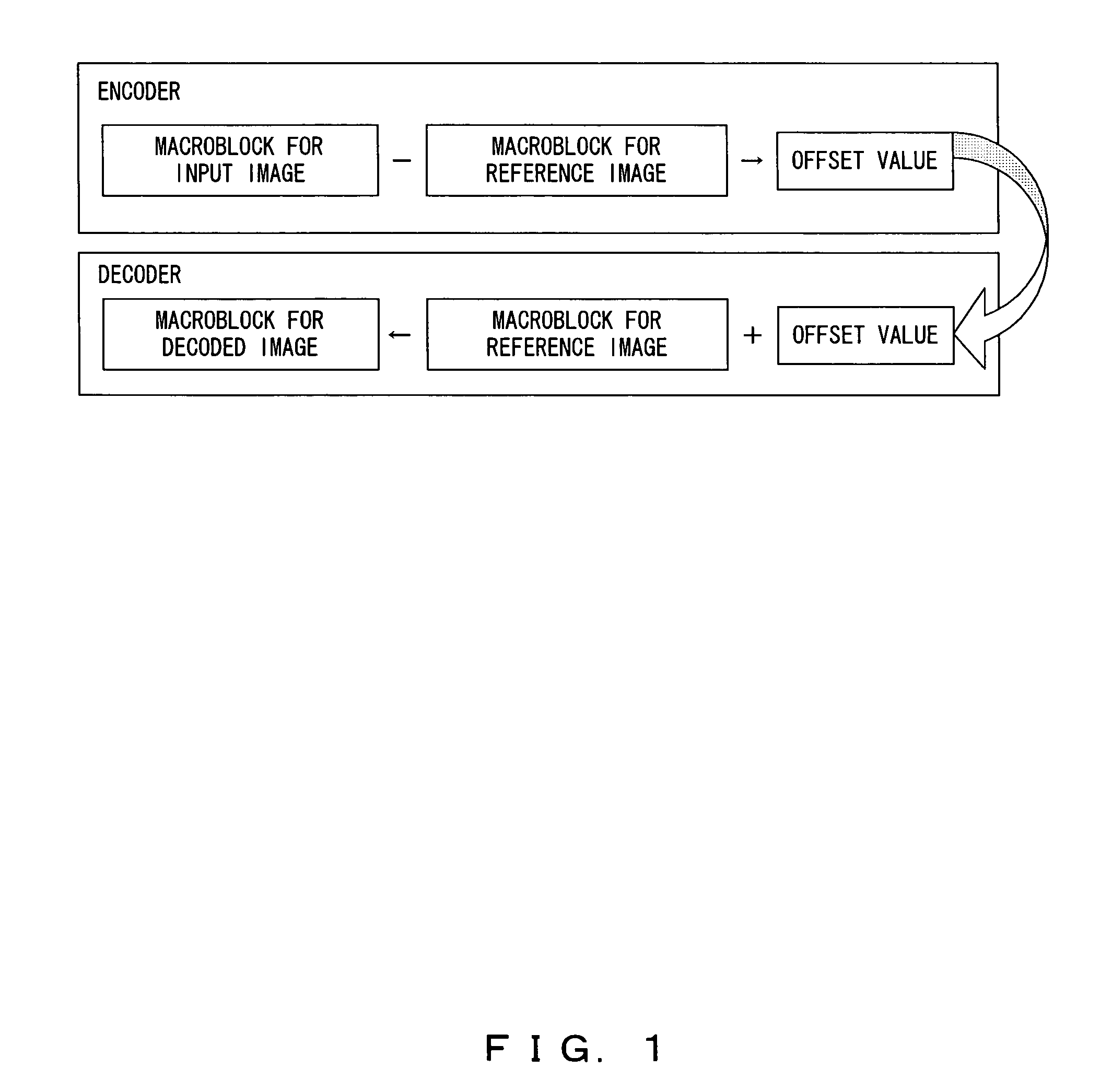

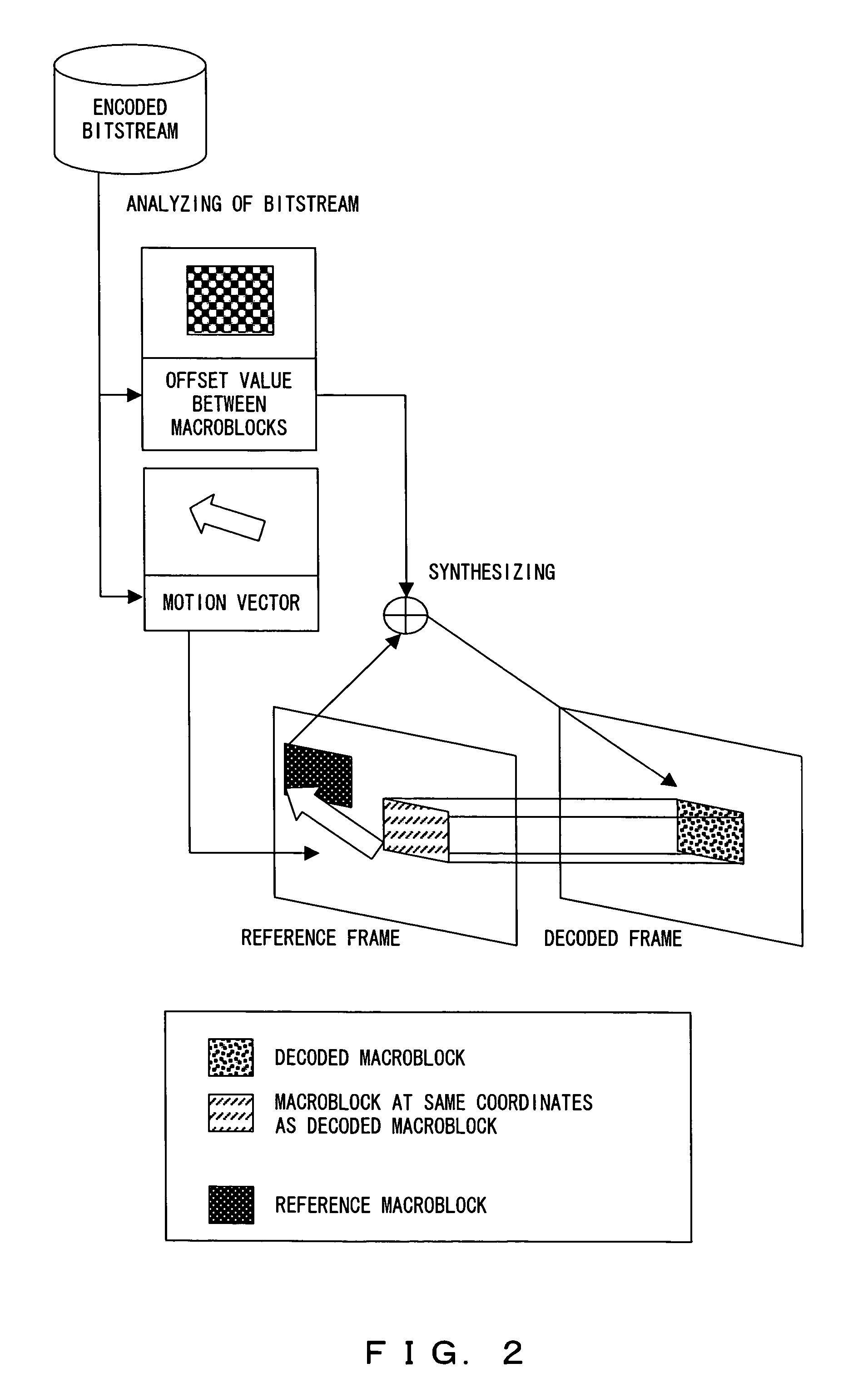

Decoding device and decoding program for video image data

Inactive | US20060023789A1 | Reduce incidence | Improve memory access performance | Color television with pulse code modulation | Color television with bandwidth reduction | Motion vector | Video image

A decoding device comprises a cache memory for temporarily storing video image data, a unit for determining the position of a reference macroblock corresponding to a macroblock to be decoded based on a motion vector obtained by analyzing an encoded bitstream, and a unit for determining whether or not the reference macroblock includes a cacheline boundary when data of the reference macroblock is not stored in the cache memory and for specifying the position of the boundary as a front address for the data preload from a memory storing the data of the reference macroblock.

Owner:FUJITSU LTD
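
The boundary test in the abstract above comes down to simple address arithmetic. The sketch below assumes a 64-byte cache line and treats the reference data as one contiguous byte range. The function name and both of those choices are illustrative assumptions, not details taken from the patent.

```python
def preload_start(ref_addr, ref_bytes, line_bytes=64):
    """Return the first cache-line boundary strictly inside the range
    [ref_addr, ref_addr + ref_bytes), or None if the range has none."""
    # First line boundary located after ref_addr.
    boundary = (ref_addr // line_bytes + 1) * line_bytes
    if boundary < ref_addr + ref_bytes:
        return boundary   # use this as the front address of the preload
    return None           # the range fits inside a single cache line
```

For example, a 16-byte range starting at address 120 crosses the boundary at 128, while the same range starting at 100 does not cross any boundary.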

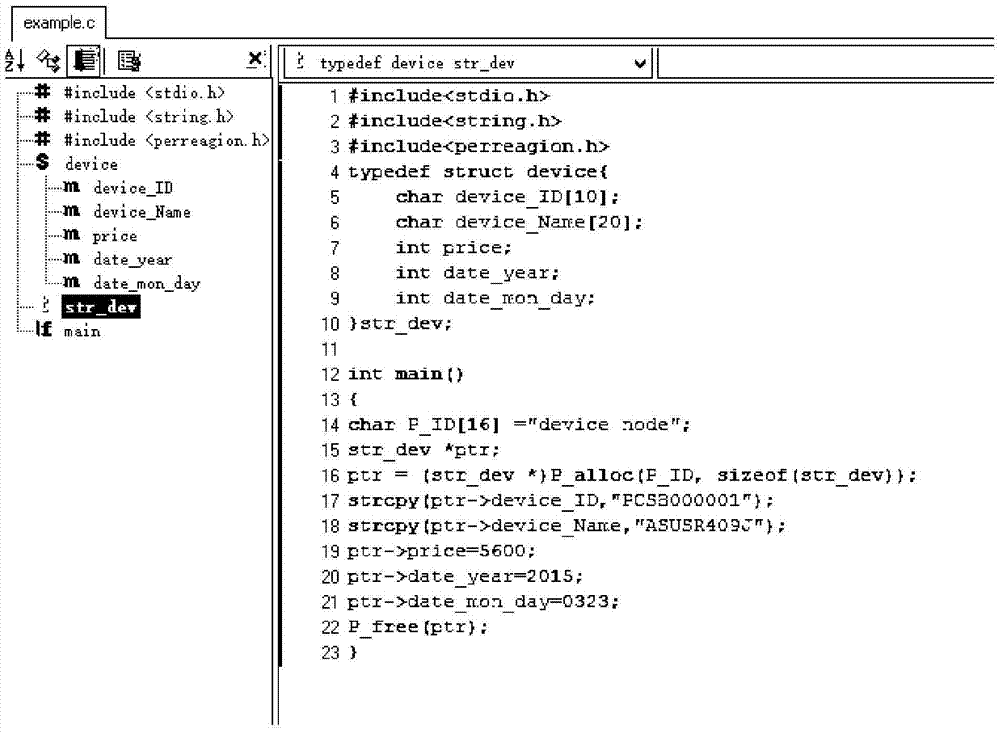

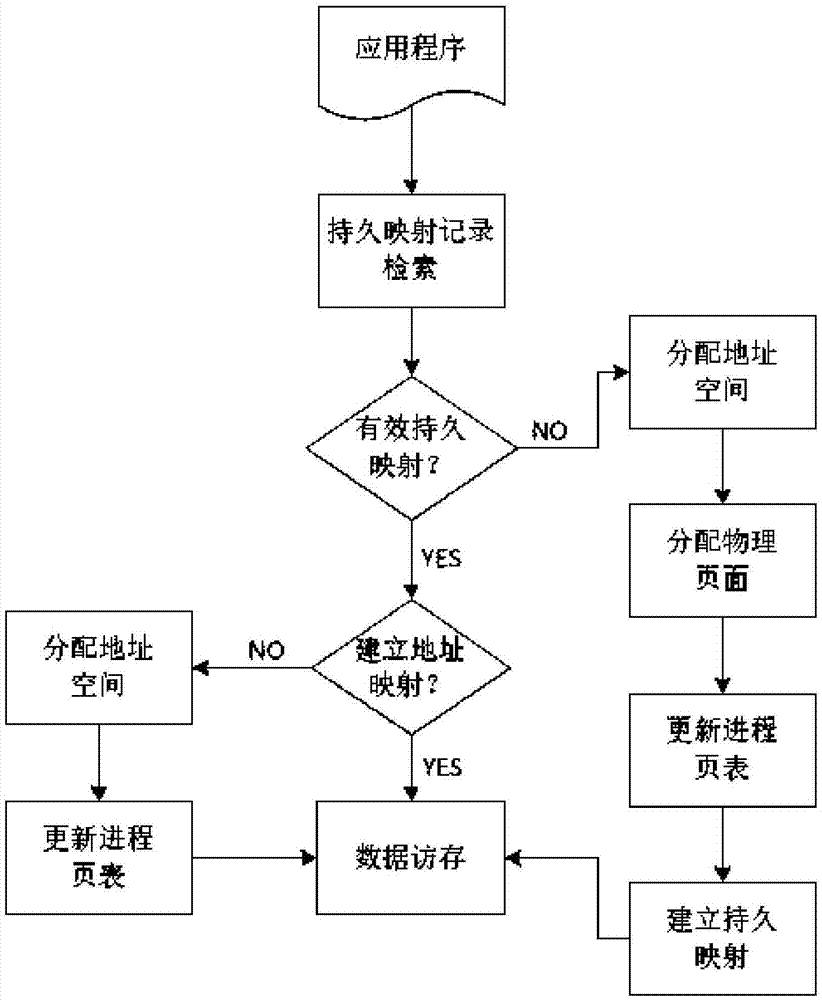

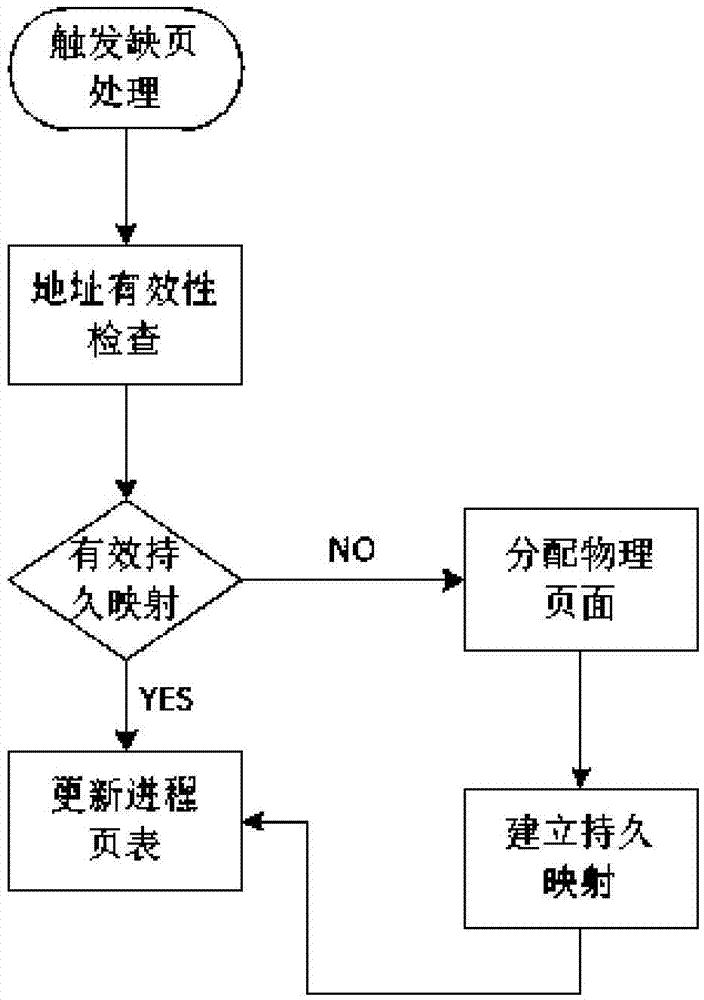

Memory data quick persistence method based on storage-class memory

Active | CN105446899A | Lower latency | Low density | Memory systems | Metadata management | Application programming interface

The present invention provides a memory data quick persistence method based on a storage-class memory (SCM). The method is based on a flat mixed memory architecture, and is implemented by collaborative design of a user layer and a kernel layer. The method comprises: abstracting an SCM with a certain capacity and the data stored in the SCM into a persistent area; designing an application programming interface (API) at the user layer to respond to an application's memory accesses to the persistent area; extending the Buddy system of the kernel to implement heterogeneous mixed memory management; and designing a persistent area manager to implement functions such as persistent mapping and metadata management in the persistent area. With this method, the data linearization process required for data persistence in a traditional storage architecture can be avoided, direct access and in-place update of persistent data can be implemented, the layered invocation of the software stack in the traditional architecture is simplified, the I/O bottleneck can be effectively alleviated, and the memory access performance of the persistent data can be improved.

Owner:SHANGHAI JIAO TONG UNIV

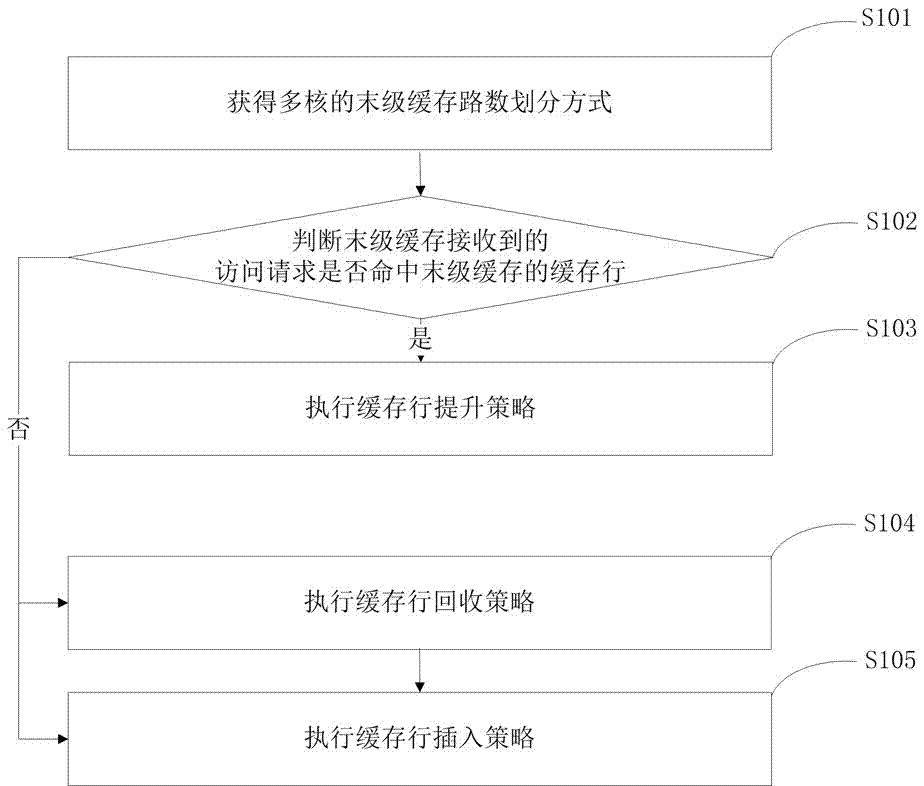

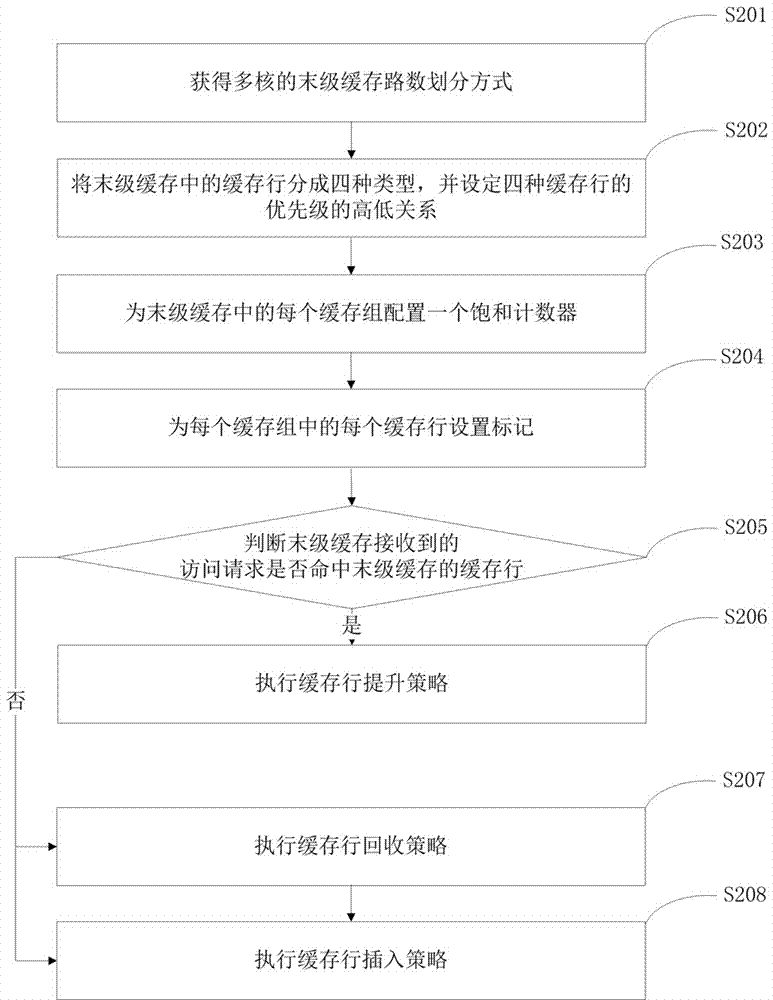

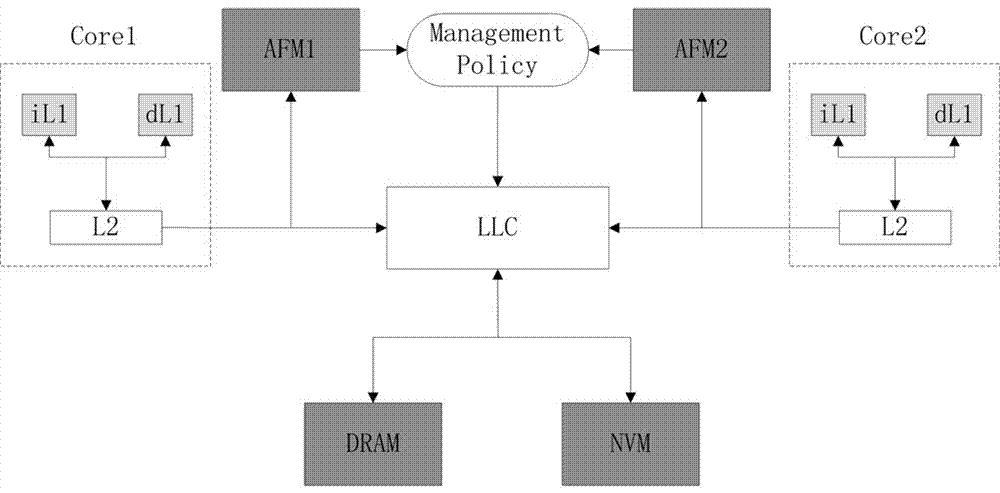

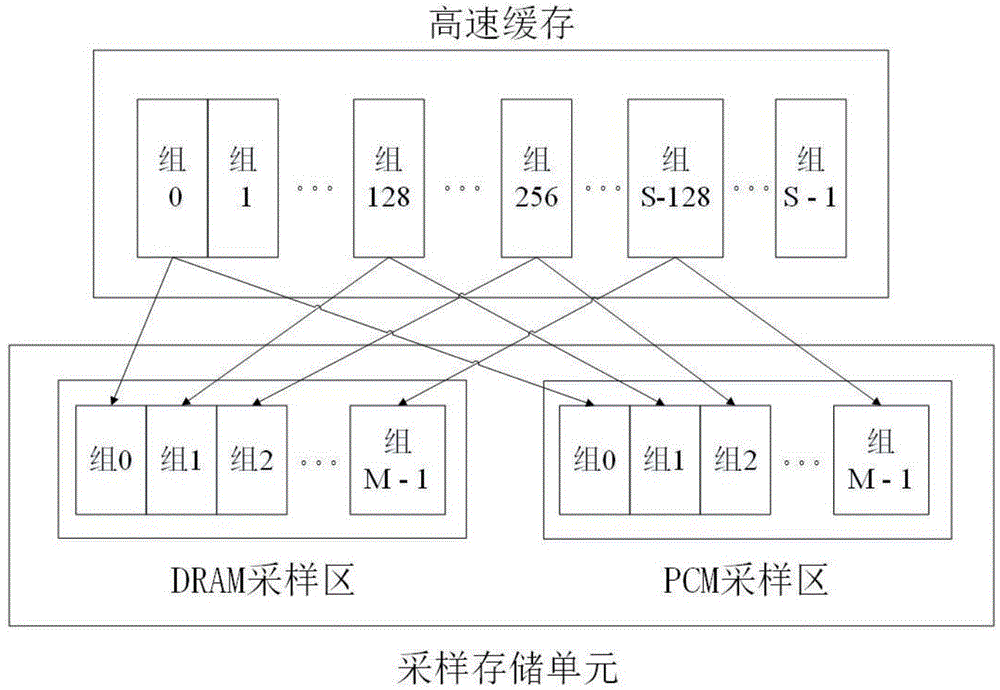

Multi-core shared final stage cache management method and device for mixed memory

Active | CN106909515A | Reduce distractions | Improve hit rate | Energy efficient computing | Memory systems | Hit rate | Cache management

The invention relates to the technical field of computer storage, in particular to a multi-core shared final stage cache management method and device for a mixed memory. The invention discloses a multi-core shared final stage cache management method for the mixed memory. The method comprises the following steps: obtaining a final stage cache number partition mode of a processor, and judging whether or not the access request received by the final stage cache hits a cache line of the final stage cache. The invention also discloses a multi-core shared final stage cache management device for the mixed memory. The device comprises a final stage cache number partition module and a judgment module. The multi-core shared final stage cache management method and device for the mixed memory have the advantages of synthetically considering the physical characteristics of different main memory media in a mixed memory system, optimizing the traditional LRU replacement algorithm aiming at reducing the number of deletions, reducing storage energy overhead, achieving the purpose of reducing inter-cell interference and improving the hit rate, and effectively improving the memory access performance of the final stage cache.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

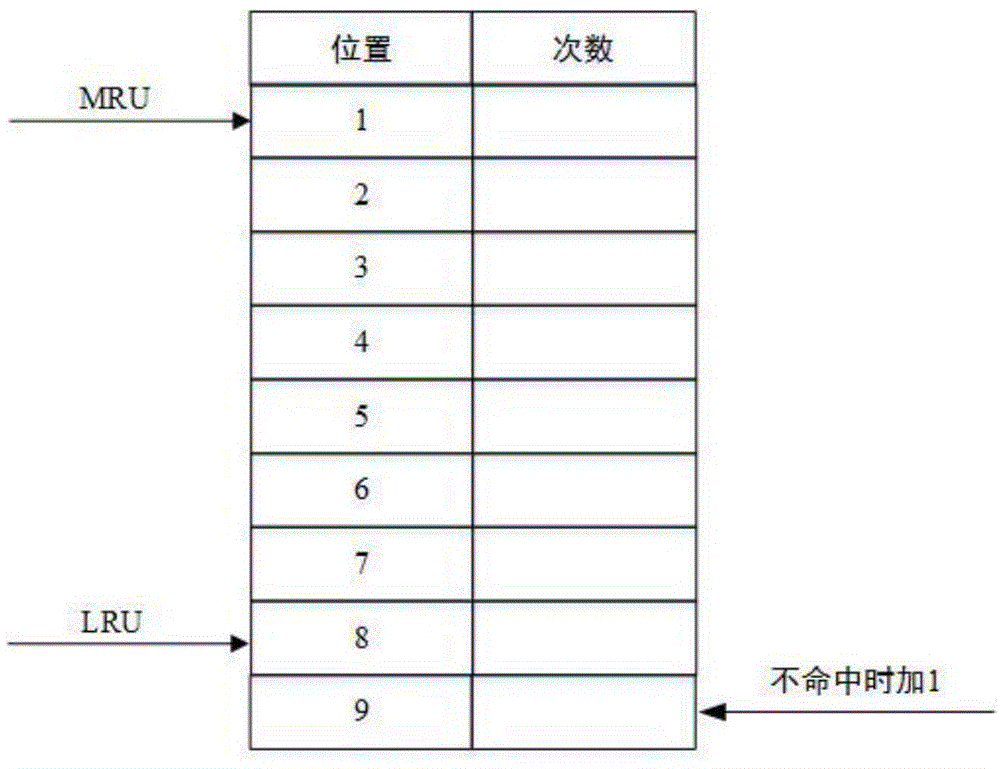

Cache replacement method under heterogeneous memory environment

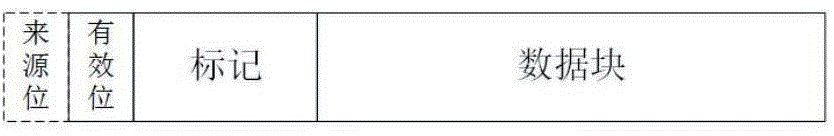

Active | CN104834608A | Comprehensive consideration of memory access characteristics | Increase space size | Memory addressing/allocation/relocation | Hardware structure | Phase-change memory

The invention discloses a cache replacement method under heterogeneous memory environment. The method is characterized by comprising the steps: adding one source flag bit in a cache line hardware structure for flagging whether cache line data is derived from a DRAM (Dynamic Random Access Memory) or a PCM (Phase Change Memory); adding a new sample storage unit in a CPU (Central Processing Unit) for recording program cache access behaviors and data reusing range information; the method also comprises three sub methods including a sampling method, an equivalent position calculation method and a replacement method, wherein the sampling sub method is used for performing sampling statistics on the cache access behaviors; the equivalent position calculation sub method is used for calculating equivalent positions, and the replacement sub method is used for determining a cache line needing to be replaced. According to the cache replacement method, for the cache access characteristic of a program under the heterogeneous memory environment, a traditional cache replacement policy is optimized, the high time delay cost that the PCM needs to be accessed due to cache missing can be reduced by implementing the cache replacement method, and thus the cache access performance of a whole system is improved.

Owner:HUAZHONG UNIV OF SCI & TECH

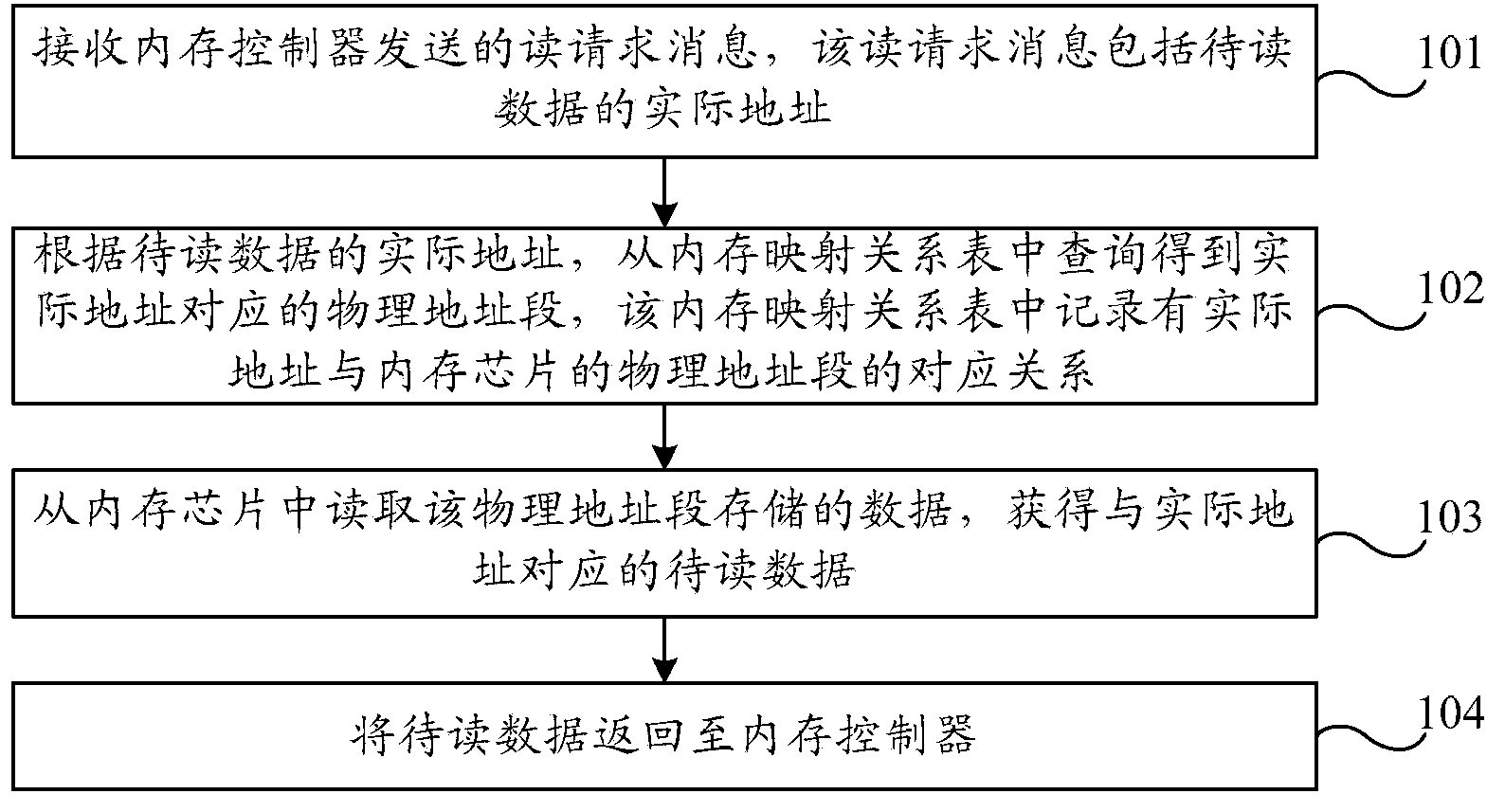

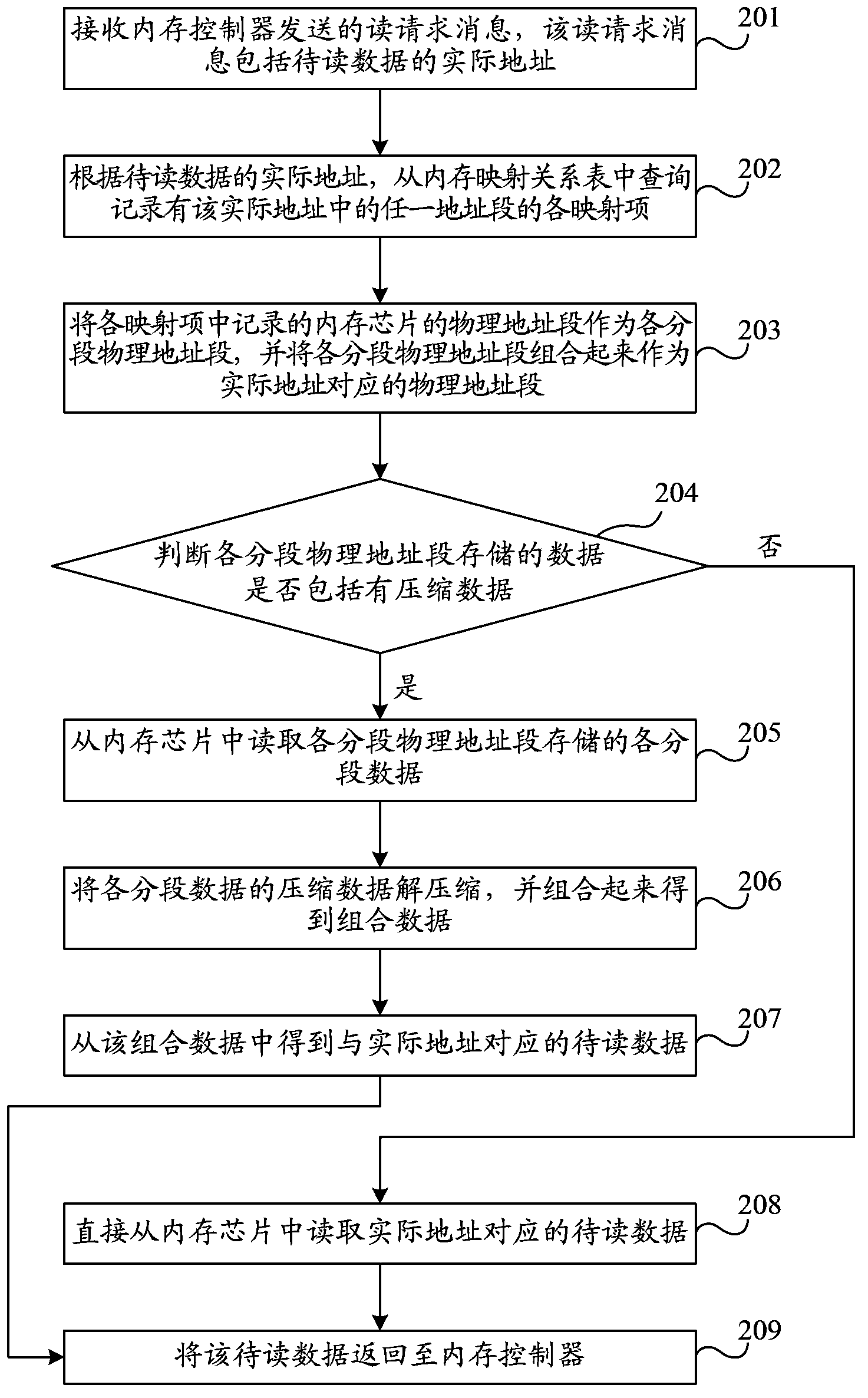

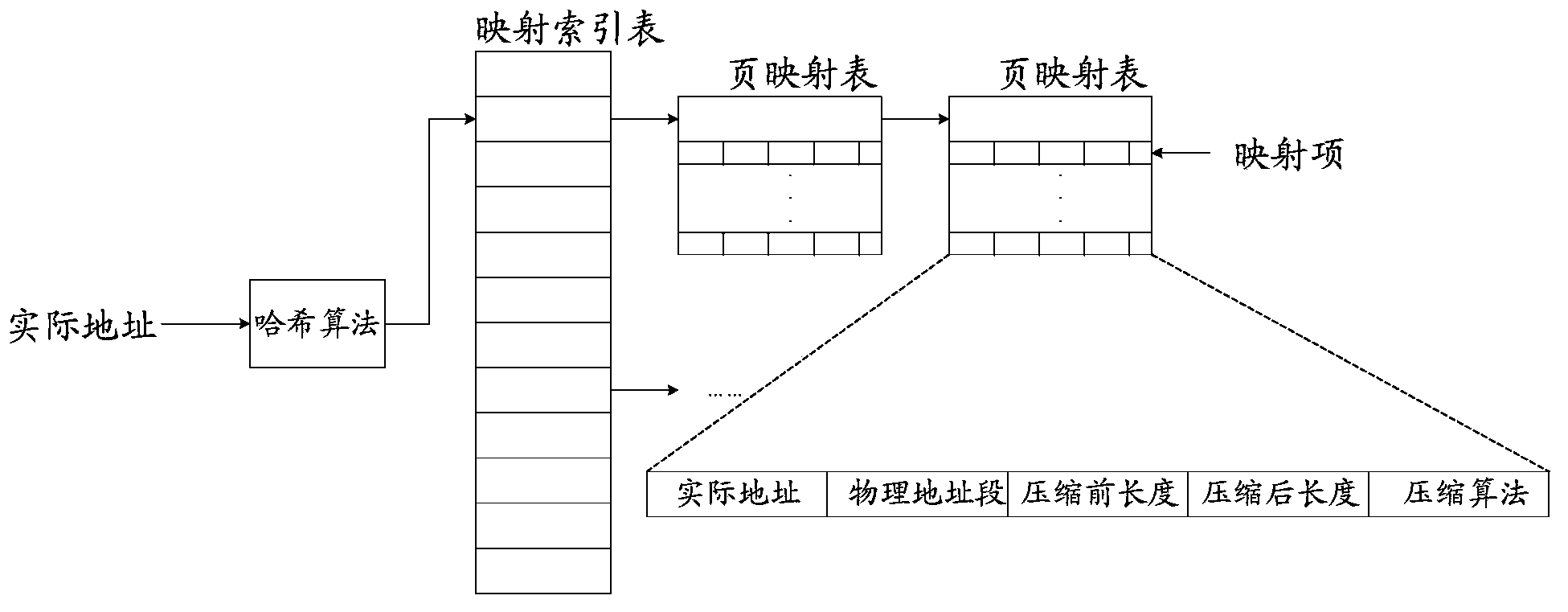

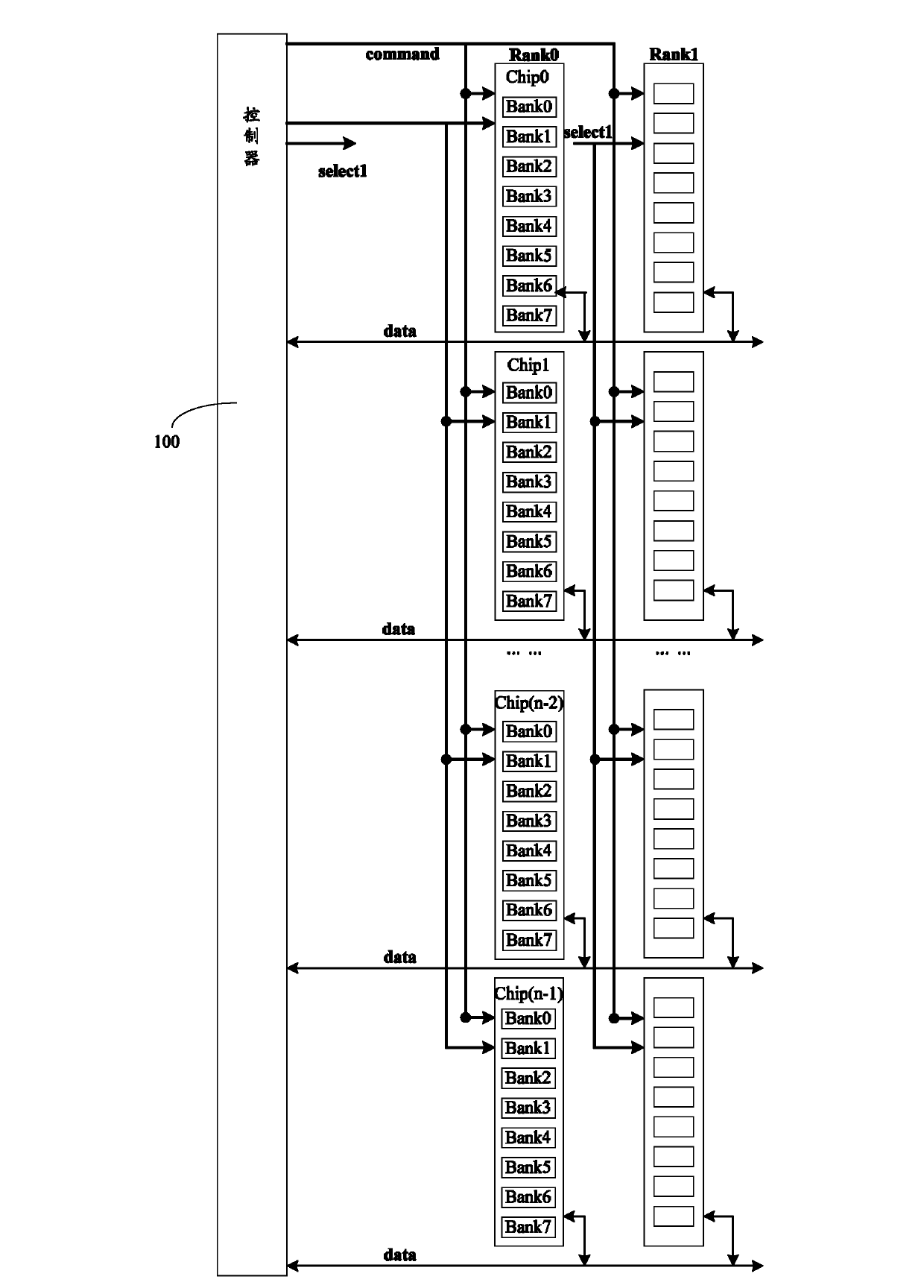

Compressed memory access control method, device and system

Active | CN103902467A | Solve waste | Improve memory access performance | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Memory chip | Physical address

Embodiments of the present invention provide a compressed memory access control method, an apparatus and a system. The method comprises: receiving a read request message sent by a memory controller, wherein the read request message comprises an actual address of to-be-read data; according to the actual address of the to-be-read data, querying a memory mapping relationship table for a physical address segment corresponding to the actual address, wherein the memory mapping relationship table records a mapping relationship between the actual address and the physical address segment of a memory chip; reading data stored in the physical address segment from the memory chip and obtaining the to-be-read data corresponding to the actual address; and returning the to-be-read data to the memory controller. The embodiments of the present invention can process the compressed memory and reduce a problem of a bandwidth resource waste brought in prior compressed memory access. In addition, data can be transferred in compressed data mode between a processor and the memory chip during a memory access process, so that a bandwidth resource occupied for memory access can be further reduced.

Owner:HUAWEI TECH CO LTD +1

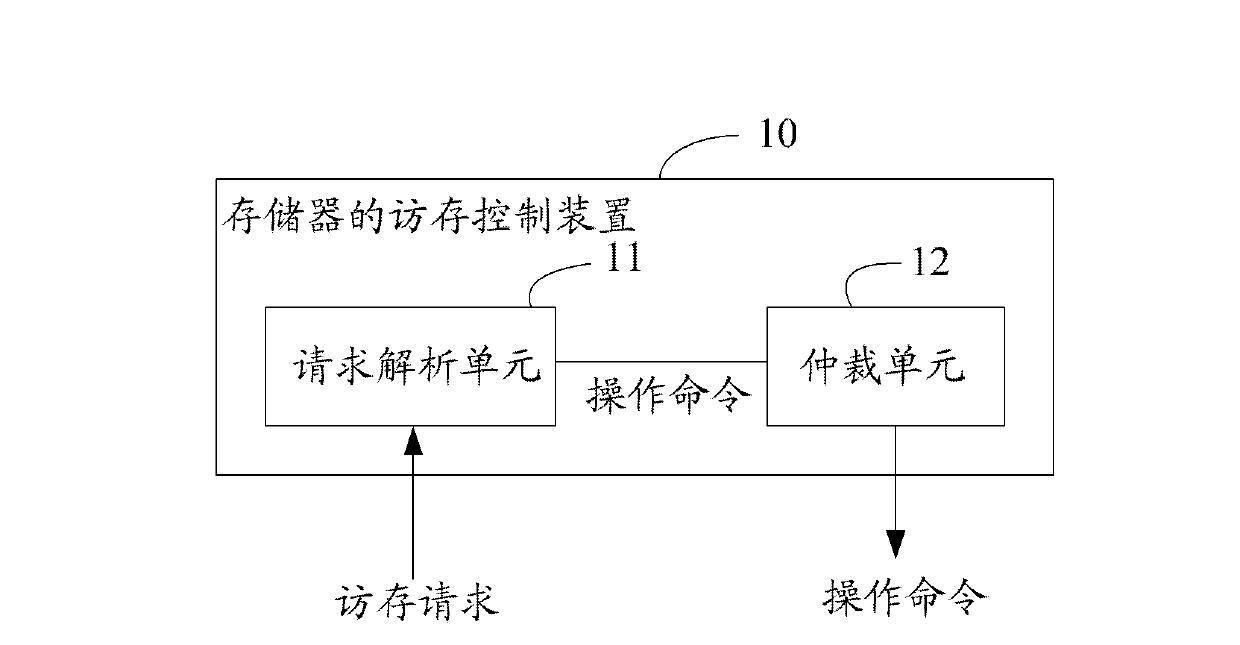

Access-memory control device and method of memorizer, processor and north-bridge chip

Active | CN103377154A | Reduce average processing time | Improve memory access performance | Digital data processing details | Concurrent instruction execution | Memory performance | Real-time computing

Provided are a memory access control device and method for a memory, a processor and a north-bridge chip. The memory access control device comprises a request analysis unit for analyzing an access request to form an operation command sequence including a plurality of operation commands, and an arbitration unit for arbitrating among the operation commands in the operation command sequence according to arbitration conditions so as to send the operation commands to the memory. Compared with the prior art, the memory access control device sends operation command sequences concurrently through the request analysis unit and uses a first, a second and a third temporal constraint to control the time interval between the sending time of the current operation command and the sending time of the adjacent previous operation command in the same operation command sequence, so that multiple memories can be accessed concurrently. In addition, multiple memory groups can be accessed concurrently, multi-dimensional concurrent access is achieved, the average handling time of memory access requests is remarkably shortened, and the overall memory access performance of a system is improved.

Owner:JIANGNAN INST OF COMPUTING TECH

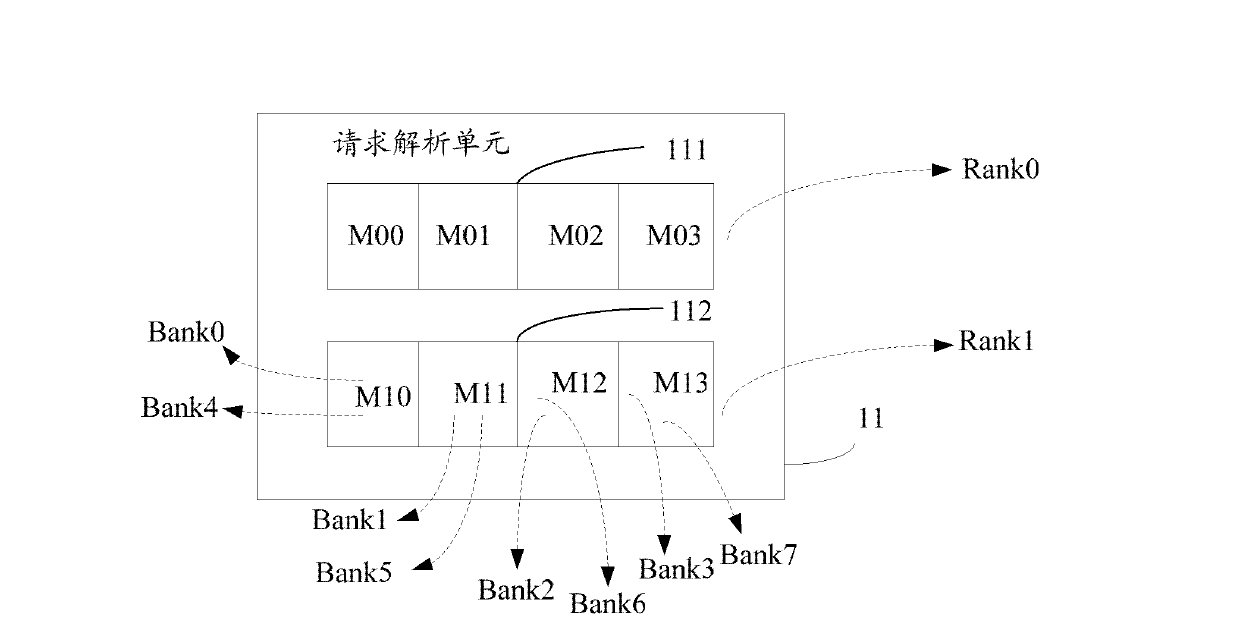

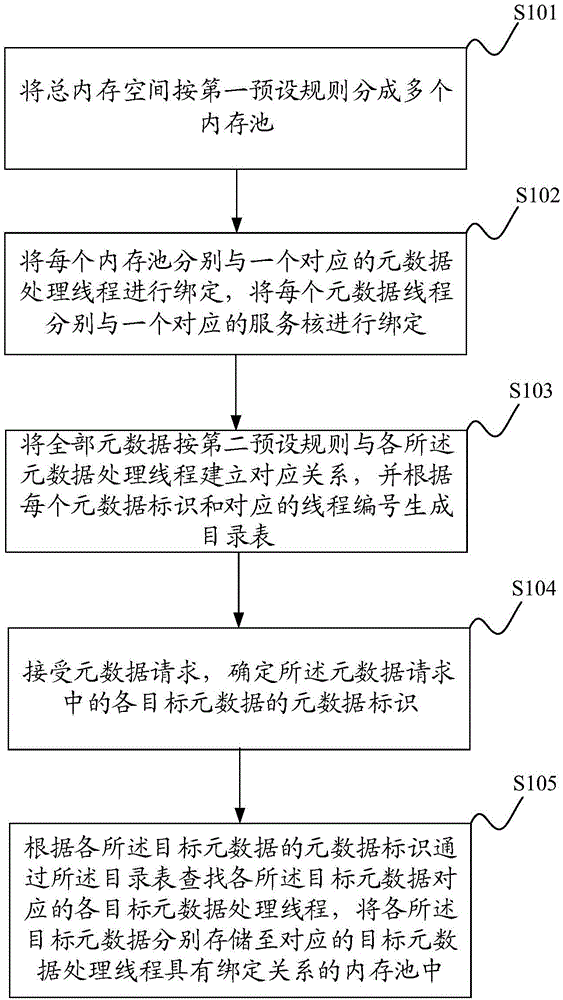

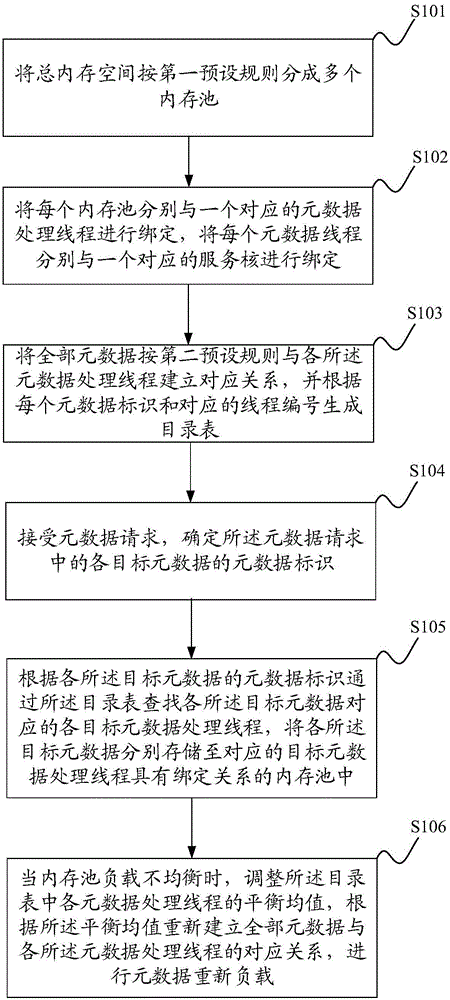

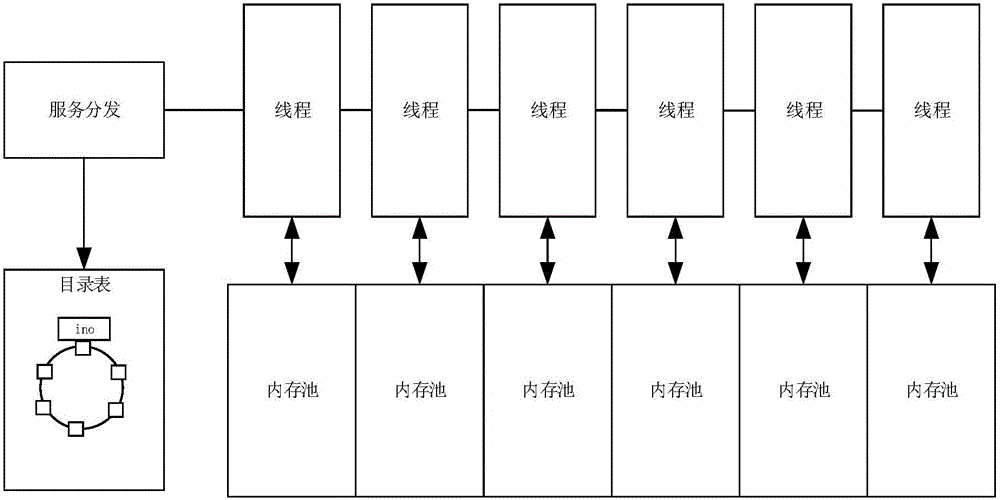

File request processing method and system

Active | CN105094992A | Improve caching capacity | Improve hit | Resource allocation | Special data processing applications | Metadata | Memory pool

The invention discloses a file request processing method and system. A total memory space is divided into multiple memory pools according to a first preset rule, each memory pool is bound to one corresponding metadata processing thread, each metadata processing thread is bound to one corresponding service core, correspondence relations between all metadata and all the metadata processing threads are established according to a second preset rule, a directory table is generated according to each metadata identifier and the corresponding thread number, a metadata request is accepted, metadata identifiers of all target metadata in the metadata request are determined, target metadata processing threads corresponding to the target metadata are searched through the directory table according to the metadata identifiers of the target metadata, all the target metadata are stored to the memory pools bound to the corresponding target metadata processing threads respectively, and multi-thread cache performance and memory access performance are improved.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

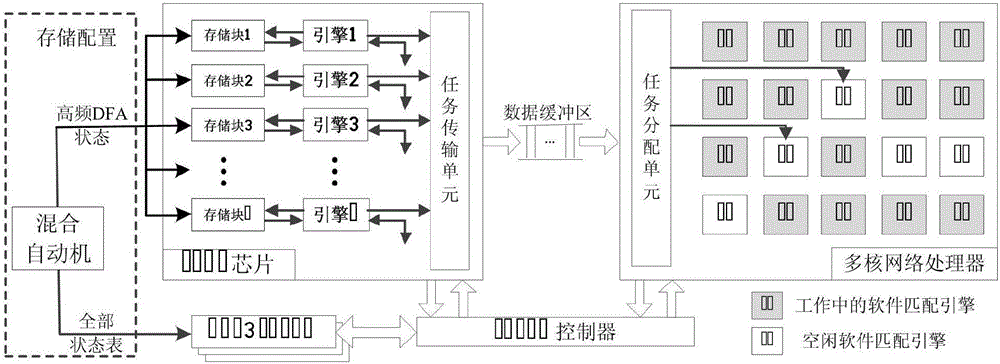

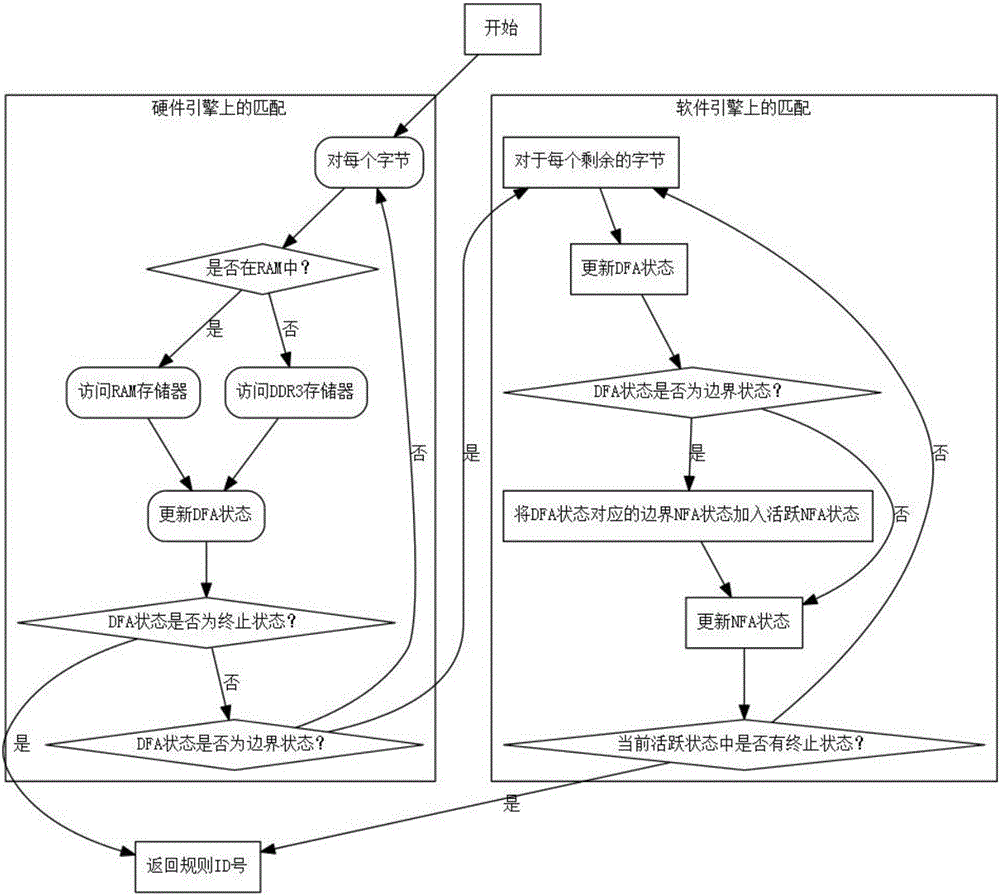

High speed regular expression matching hybrid system and method based on FPGA and NPU (field programmable gate array and network processing unit)

Active | CN106776456A | Heavy workload | Reduce workload | Digital computer details | Data switching networks | Double data rate | Software engine

The invention provides a high speed regular expression matching hybrid system and method based on FPGA and NPU (field programmable gate array and network processing unit). The system is mainly composed of an FPGA chip and a multicore NPU; a plurality of parallel hardware matching engines are implemented on the FPGA chip, a plurality of software matching engines are instantiated on the NPU, and the hardware engines and the software engines operate in a pipelined manner. In the method, first, a high speed RAM (random-access memory) on the FPGA chip and an off-chip DDR3 SDRAM (double-data-rate three synchronous dynamic random-access memory) are used to construct a two-level storage architecture; second, a regular expression rule set is compiled to generate a hybrid automaton; third, state table items of the hybrid automaton are configured; fourth, network messages are processed. The system and method have the advantages that matching performance under complex rule sets is improved greatly, and the problem that complex rule sets perform poorly is solved.

Owner:NAT UNIV OF DEFENSE TECH

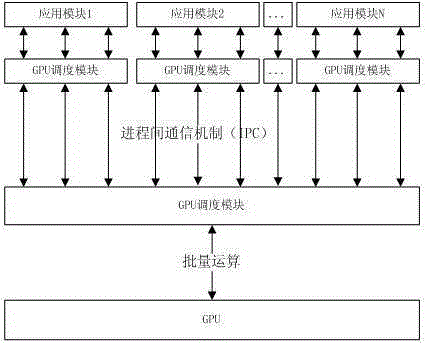

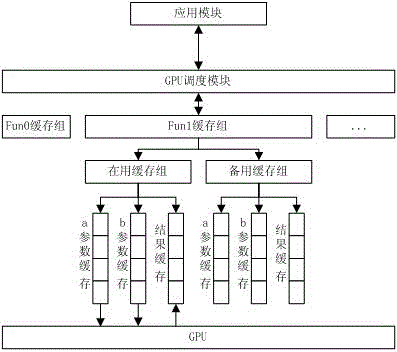

Method and apparatus for scheduling GPU to perform batch operation

Inactive | CN105224410A | Improve memory access performance | Give full play to computing power | Program initiation/switching | Resource allocation | Engineering | Concurrent computation

The present invention relates to the field of GPU parallel computing, in particular to a method and an apparatus for scheduling a GPU to perform batch operation. Aimed at the problems in the prior art, the present invention provides the method and the apparatus for scheduling the GPU to perform the batch operation. According to the method and the apparatus provided by the present invention, a separate GPU scheduling module is designed and coordinates with the GPU to perform calculation task processing together with an API application module so as to sufficiently exert the operational capability of the GPU; the API application module sends an operation task to the GPU scheduling module and the GPU scheduling module stores calculation tasks received within a cycle in a cache; and when the GPU completes the calculation tasks in the last batch, the GPU scheduling module submits the cached calculation tasks to the GPU in batches and then the API application module completes the subsequent operations of the calculation tasks in a synchronous mode or an asynchronous mode.

Owner:Chengdu Westone Information Industry Co., Ltd. (成都卫士通信息产业股份有限公司)
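
The batching behaviour of the GPU scheduling module can be illustrated with a small sketch. The `BatchScheduler` class, its method names and the `run_batch` callable (standing in for an actual GPU launch) are all hypothetical. Launching immediately when the GPU is idle is also an assumption made here, since the abstract only says that tasks received within a cycle are cached and submitted once the previous batch completes.

```python
class BatchScheduler:
    """Hypothetical scheduler that caches tasks while the GPU is busy."""

    def __init__(self, run_batch):
        self.run_batch = run_batch   # callable standing in for a GPU launch
        self.pending = []            # tasks cached while a batch is running
        self.gpu_busy = False

    def submit(self, task):
        # Called by the API application module for each operation task.
        self.pending.append(task)
        if not self.gpu_busy:
            self._launch()

    def on_batch_done(self):
        # Called when the GPU finishes the previous batch.
        self.gpu_busy = False
        if self.pending:
            self._launch()

    def _launch(self):
        # Hand every cached task to the GPU as one batch.
        batch, self.pending = self.pending, []
        self.gpu_busy = True
        self.run_batch(batch)
```

Tasks that arrive while a batch is in flight accumulate in `pending` and go to the GPU together in the next launch.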

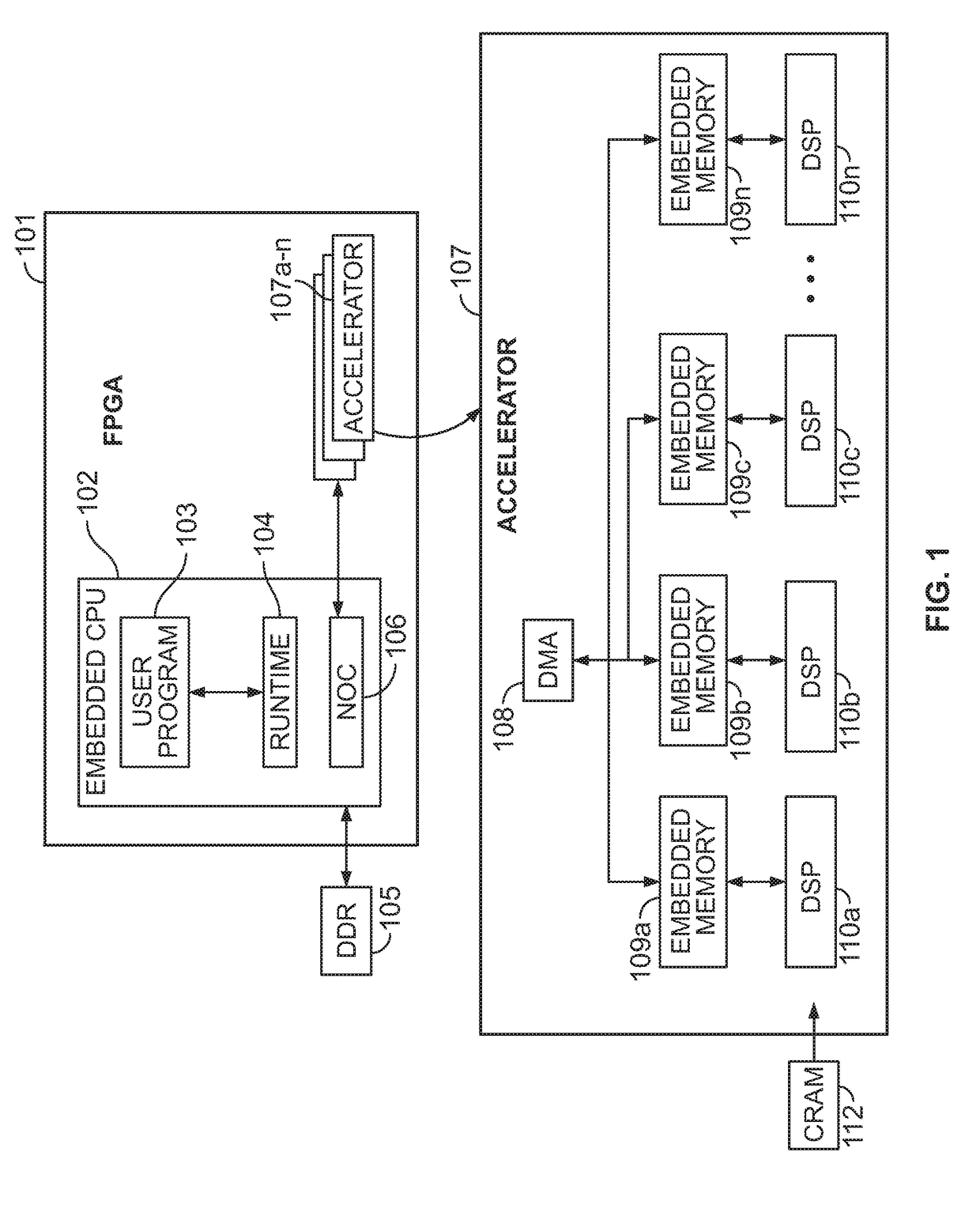

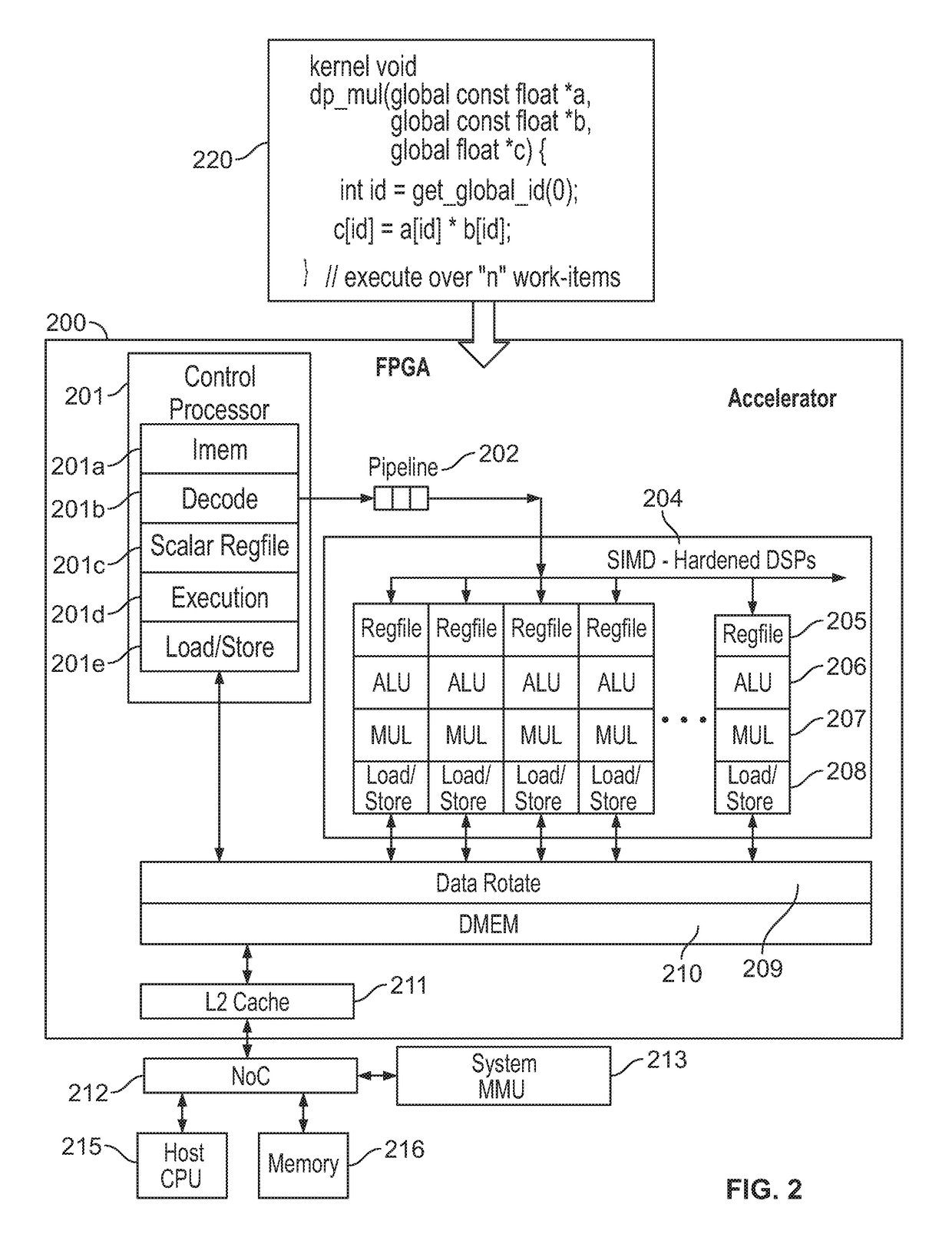

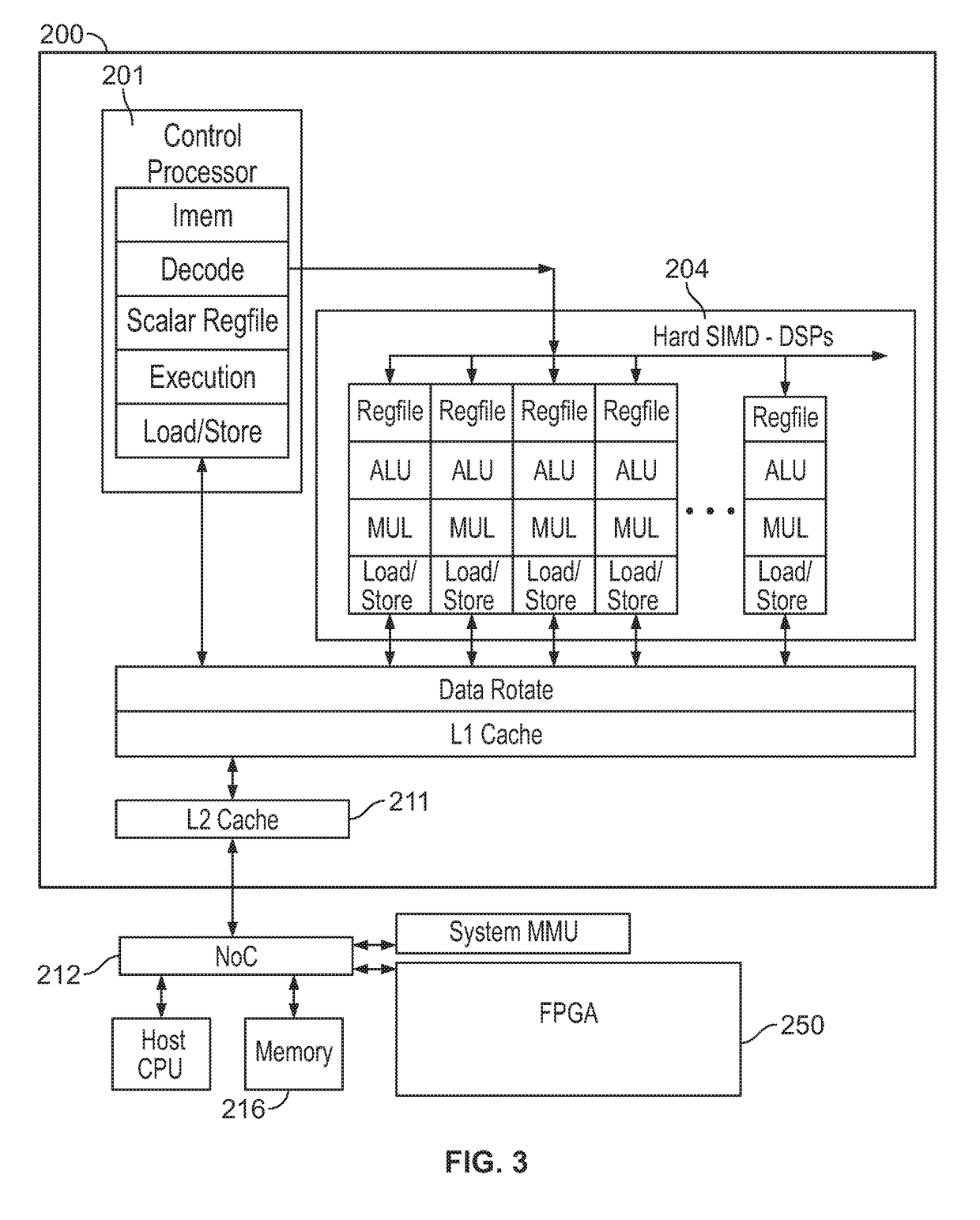

Accelerator architecture on a programmable platform

Active | US20190065188A1 | Effective movement | Reduce constant access attempt | Interprogram communication | Digital computer details | Computer architecture | Digital signal

An accelerated processor structure on a programmable integrated circuit device includes a processor and a plurality of configurable digital signal processors (DSPs). Each configurable DSP includes a circuit block, which in turn includes a plurality of multipliers. The accelerated processor structure further includes a first bus to transfer data from the processor to the configurable DSPs, and a second bus to transfer data from the configurable DSPs to the processor.

Owner:ALTERA CORP

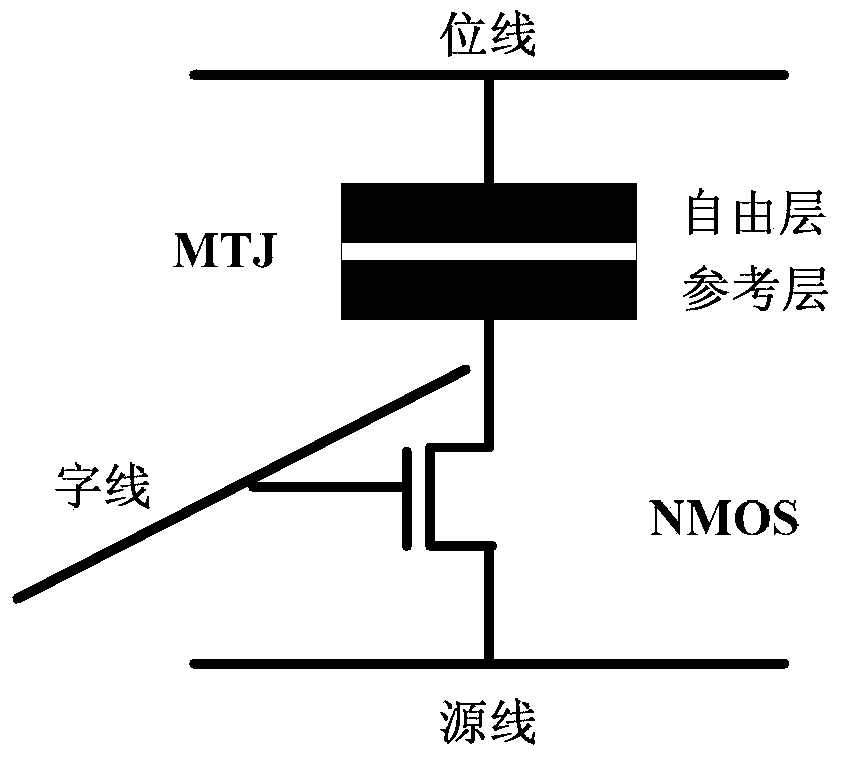

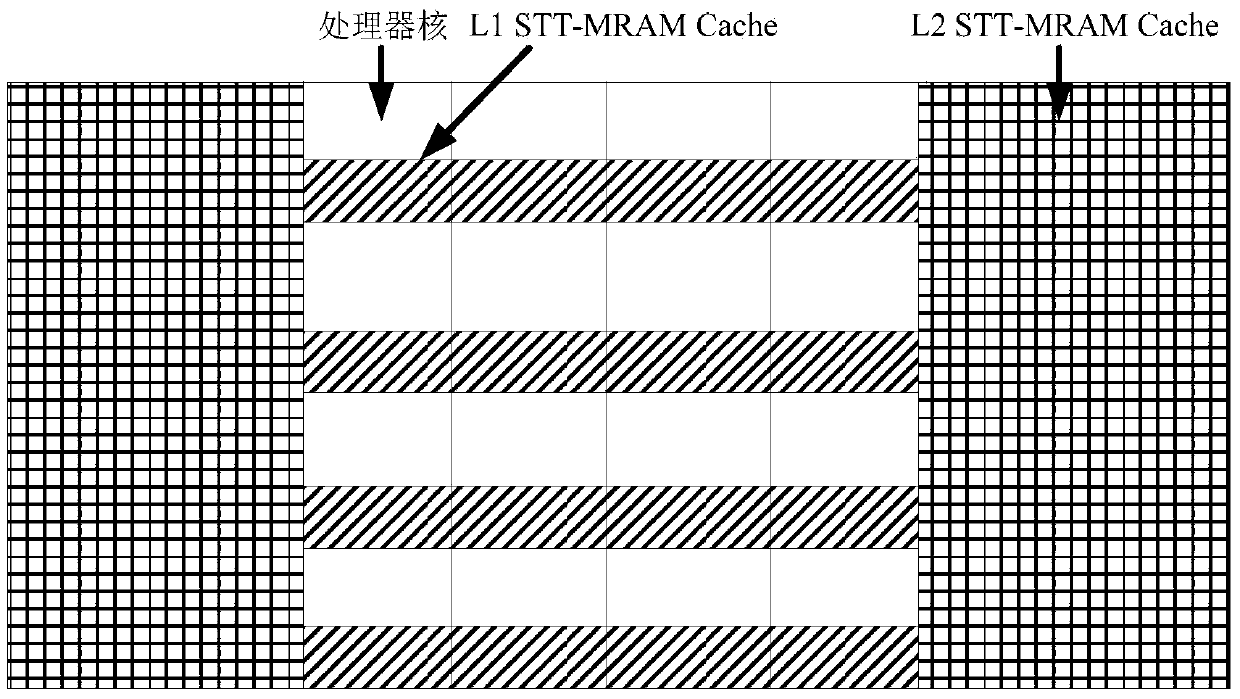

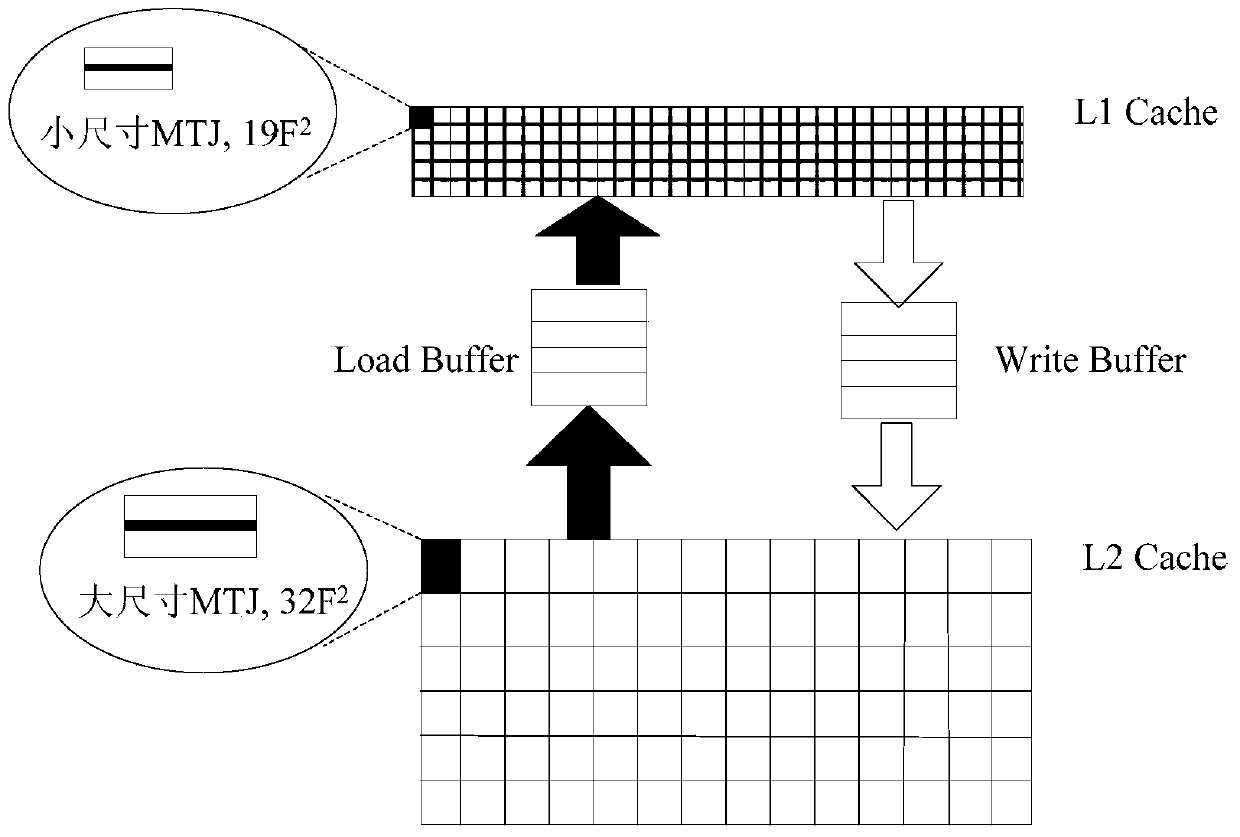

Novel STT-MRAM cache design method

Active | CN103810118A | Improve yield | Reduce write energy consumption | Memory addressing/allocation/relocation | Digital storage | Parallel computing | Design methods

A novel STT-MRAM cache design method includes the three major steps. The relation among the size of MTJ, write currents and write energy consumption is utilized, a novel STT-MRAM cache structure is designed, and an L1Cache and an L2Cache are achieved through MTJ memory units with different sizes respectively. Compared with the mode that MTJ memory units only with the same size are used, write energy consumption is reduced, and performance is improved. Compared with the structure that an SRAM and an STT-MRAM are mixed, static power consumption is remarkably reduced. Due to the fact that the L1Cache is composed of the MTJ memory units with the small size, the L1Cache is smaller in size and higher in memory density, the miss rate of the Cache is remarkably lower than that of an SRAM Cache, and the memory access performance is improved. In addition, only the STT-MRAM production process is adopted, the yield of chips is increased, and production cost is reduced.

Owner:BEIHANG UNIV

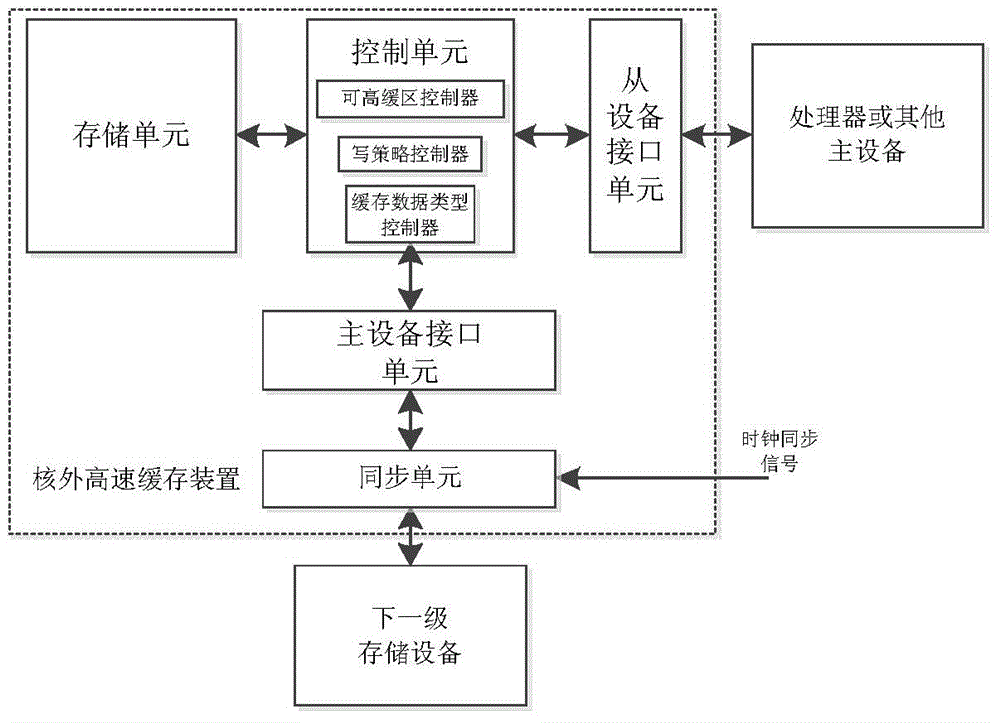

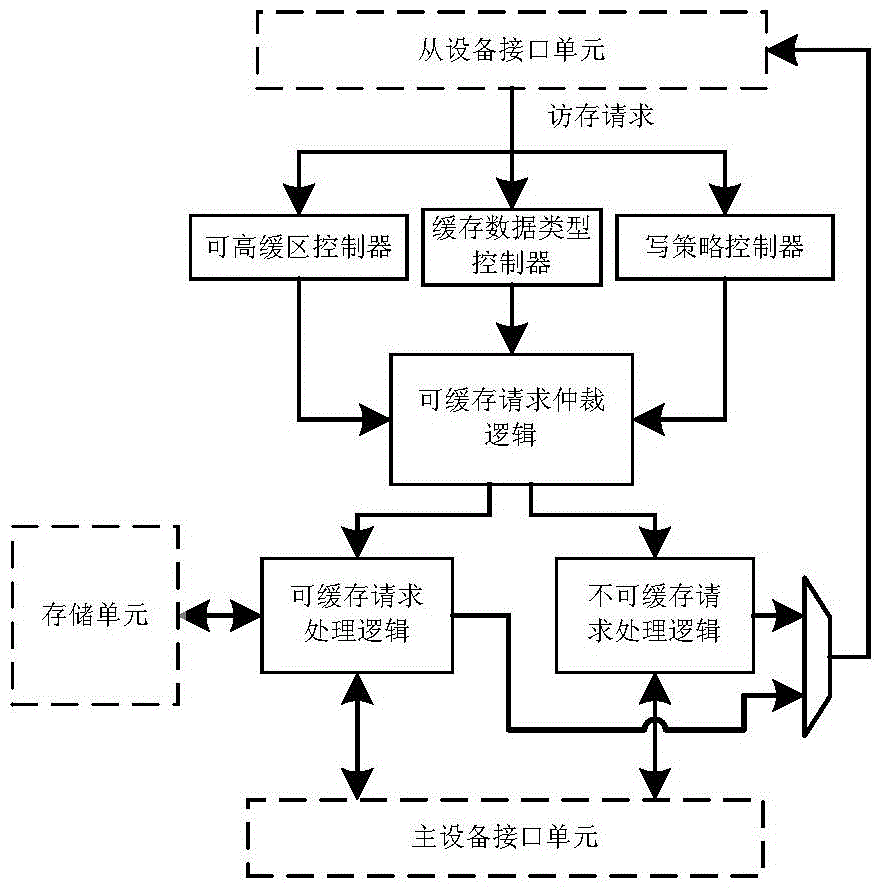

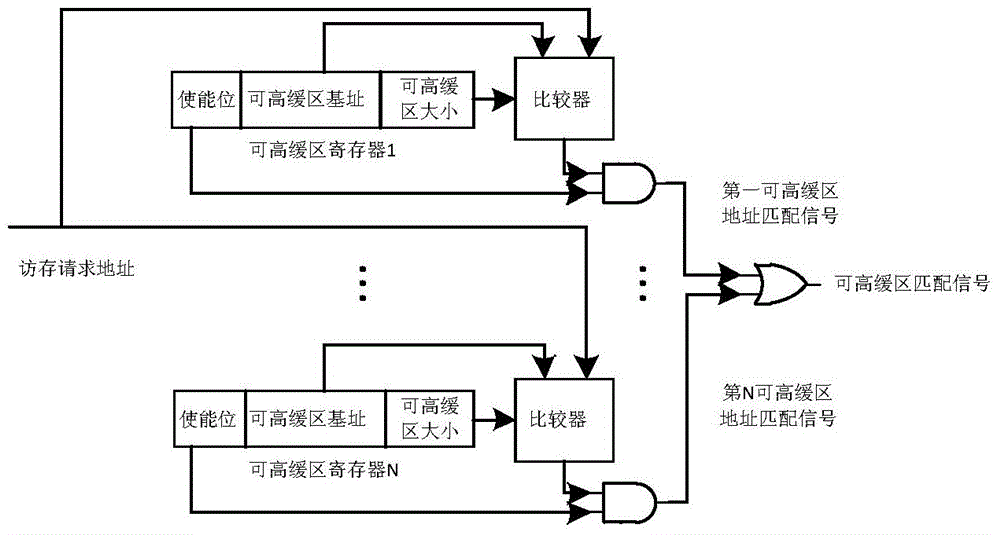

Off-core cache device

Active | CN104615386A | Reduce access latency | Improve memory access performance | Input/output to record carriers | Memory addressing/allocation/relocation | Register allocation | Processor register

An off-core cache device comprises a slave device interface unit, a control unit, a storage unit, a master device interface unit and a synchronizing unit. The slave device interface unit receives and responds to an access request of a master device. The control unit processes the request transmitted by the slave device interface unit and, according to configuration information of a register, either accesses the storage unit or transmits the request to the master device interface unit, and refills cache lines. The storage unit caches data written by the control unit and its tag information. The master device interface unit transmits an access request to a next-level storage device and acquires the required cache data. The synchronizing unit converts the access request transmitted by the master device interface unit into a signal meeting the clock timing of the next-level storage device. The off-core cache device has the advantages that the access latency of a nonvolatile memory is effectively decreased, and the memory access performance of a processor is improved.

Owner:C SKY MICROSYST CO LTD

Decoding device and decoding program for video image data

Inactive | US7447266B2 | Reduce incidence | Improve memory access performance | Color television with pulse code modulation | Color television with bandwidth reduction | Motion vector | Video image

A decoding device comprises a cache memory for temporarily storing video image data, a unit for determining the position of a reference macroblock corresponding to a macroblock to be decoded based on a motion vector obtained by analyzing an encoded bitstream, and a unit for determining whether or not the reference macroblock includes a cacheline boundary when data of the reference macroblock is not stored in the cache memory and for specifying the position of the boundary as a front address for the data preload from a memory storing the data of the reference macroblock.

Owner:FUJITSU LTD

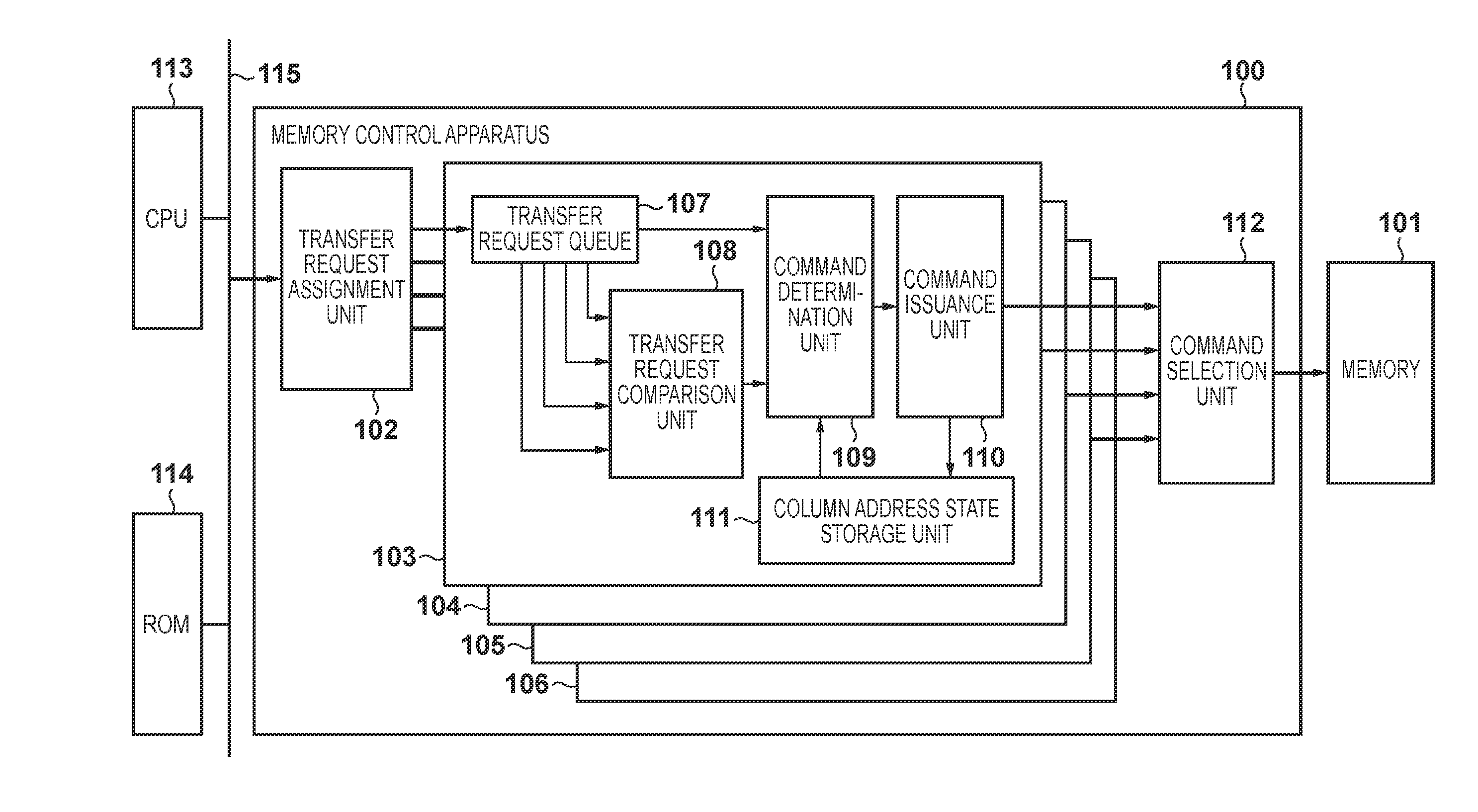

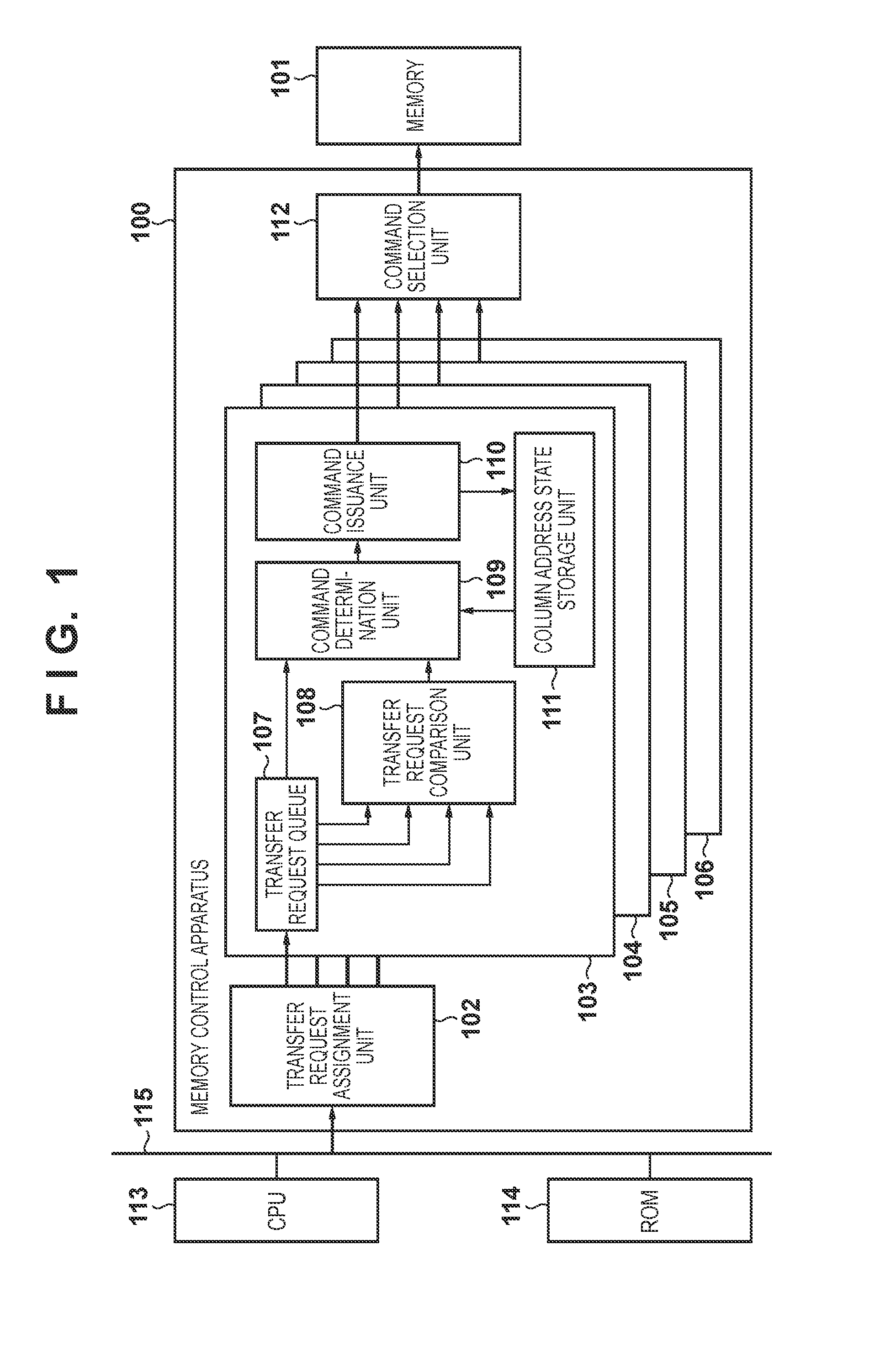

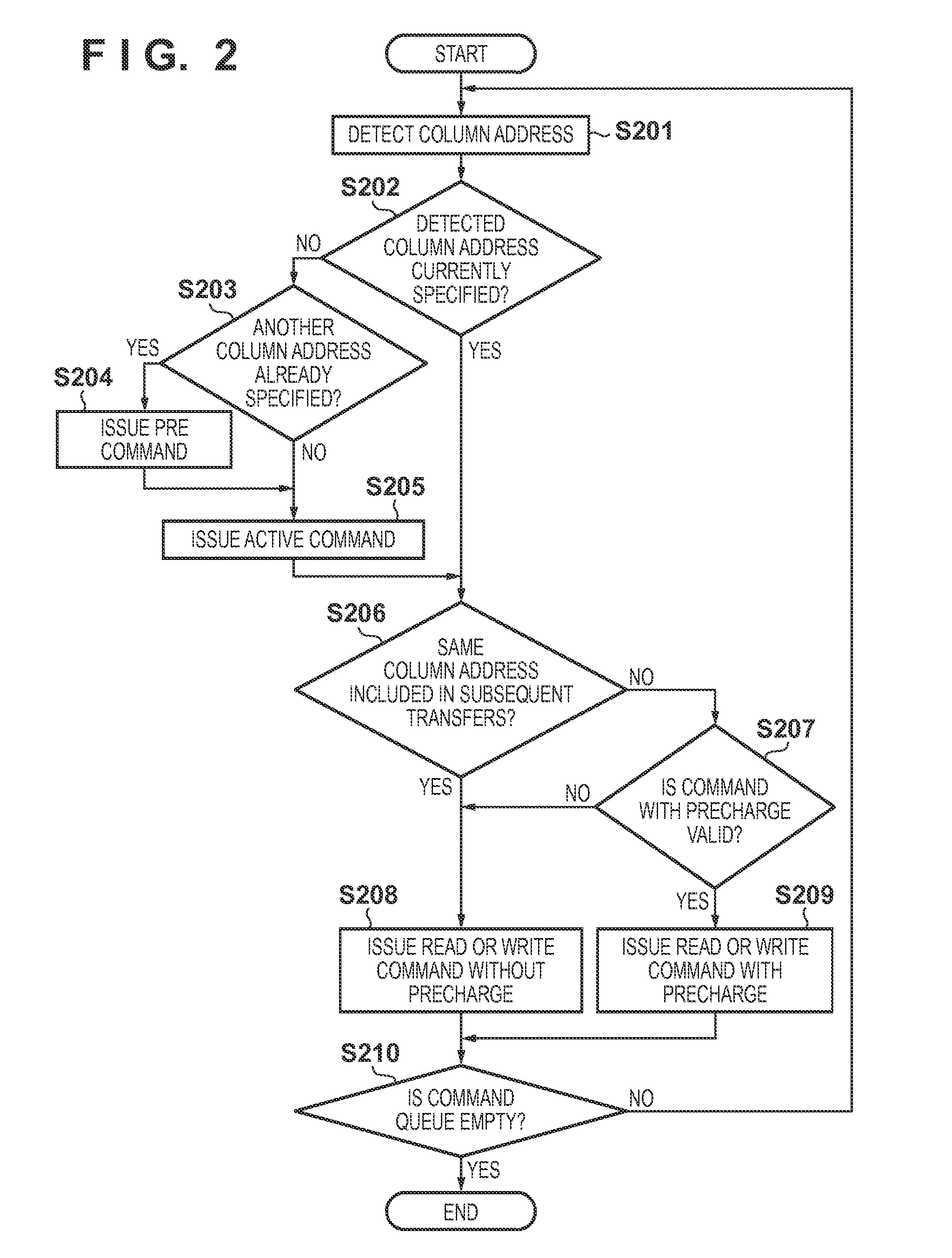

Memory control apparatus and memory control method

Active | US20140122789A1 | Improve memory access performance | Memory addressing/allocation/relocation | Electrical testing | Operating system | Memory control

In a memory control apparatus for issuing a command for a bank corresponding to a transfer request, the transfer request for the corresponding bank is stored. The column address of the transfer request stored at the first is compared with the column addresses of a plurality of subsequent transfer requests. It is determined based on the comparison result whether to issue a command with precharge or a command without precharge for the transfer request stored at the first. The determined command is issued.

Owner:CANON KK

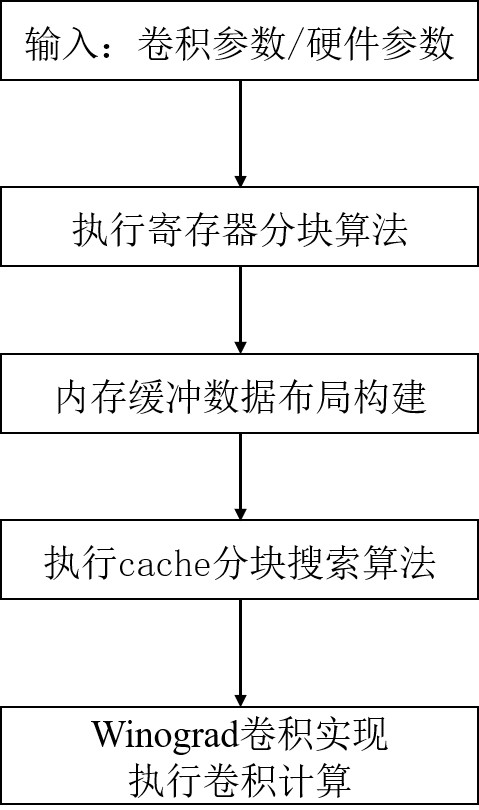

Winograd convolution implementation method based on vector instruction acceleration calculation

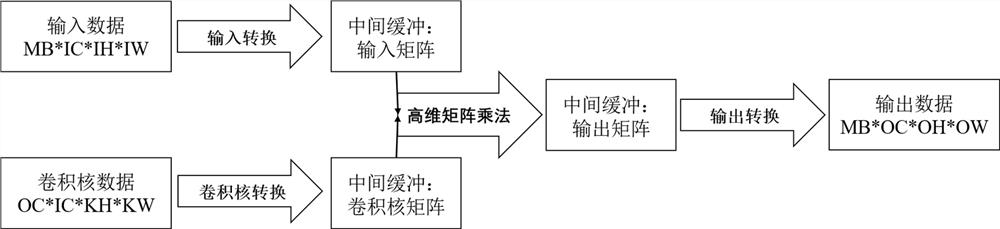

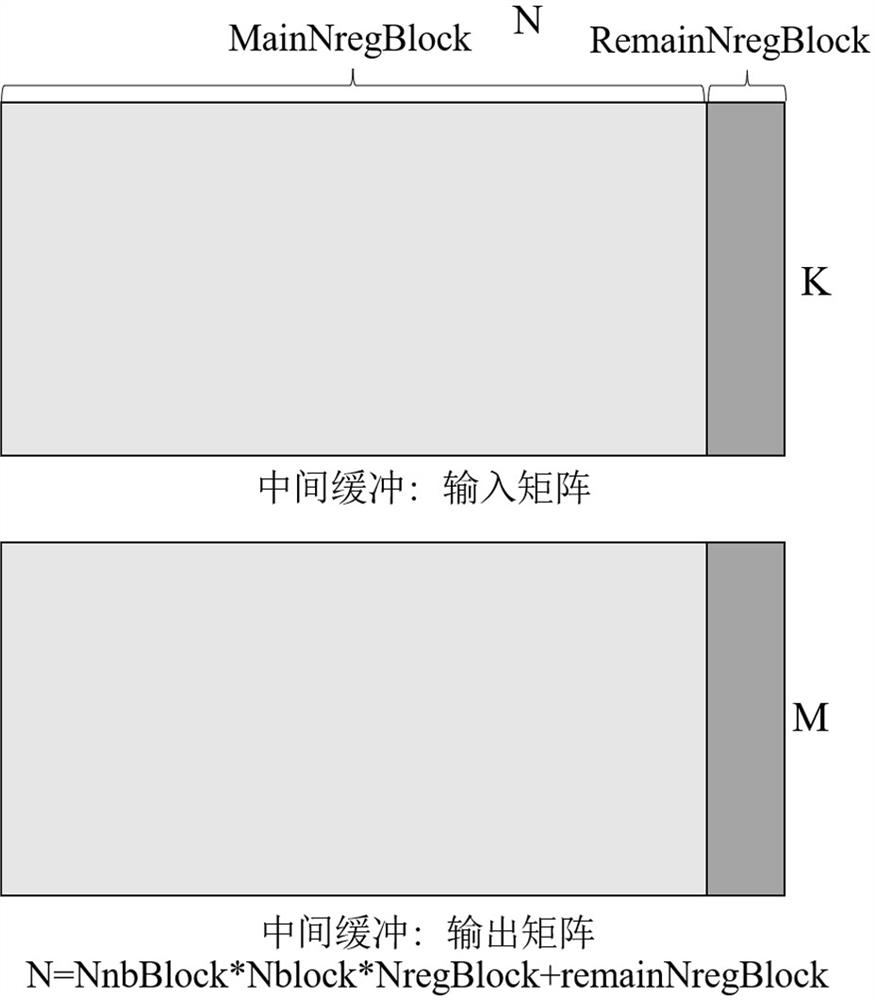

Active | CN113835758A | Improve resource utilization | Increase profit | Resource allocation | Register arrangements | Data transformation | Original data

The invention discloses a Winograd convolution implementation method based on vector instruction acceleration calculation. The method comprises the following steps: S1, constructing a register partitioning strategy, and in a Winograd convolution implementation process on a central processing unit (CPU), when original data is converted to a Winograd data field, performing vector partitioning and register partitioning on data buffered in the middle; S2, constructing a memory data layout strategy, arranging the original data of the Winograd convolution and the data of the intermediate buffer on the memory, and arranging the Winograd block dimension to the position of the innermost layer for the data layout of the intermediate buffer relative to the optimality of matrix multiplication; and S3, constructing cache block search, searching a performance optimal solution of a cache block in a small range determined according to the CPU hardware parameters and the convolution parameters, storing the performance optimal solution and the corresponding convolution parameters, and subsequently directly adopting the performance optimal solution through the convolution parameters.

Owner:ZHEJIANG LAB

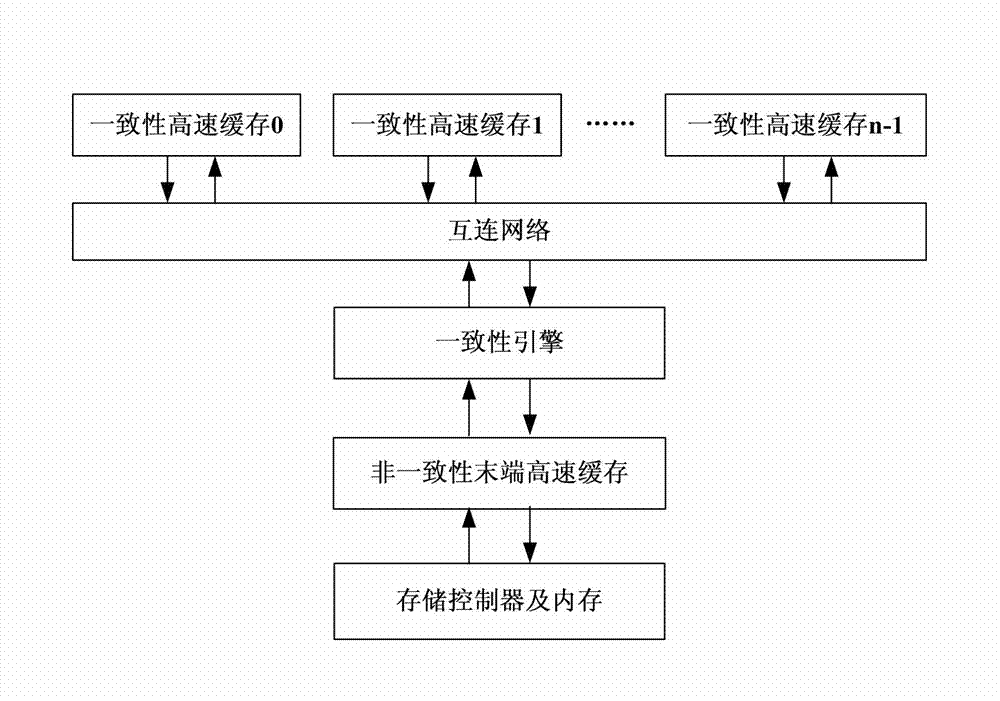

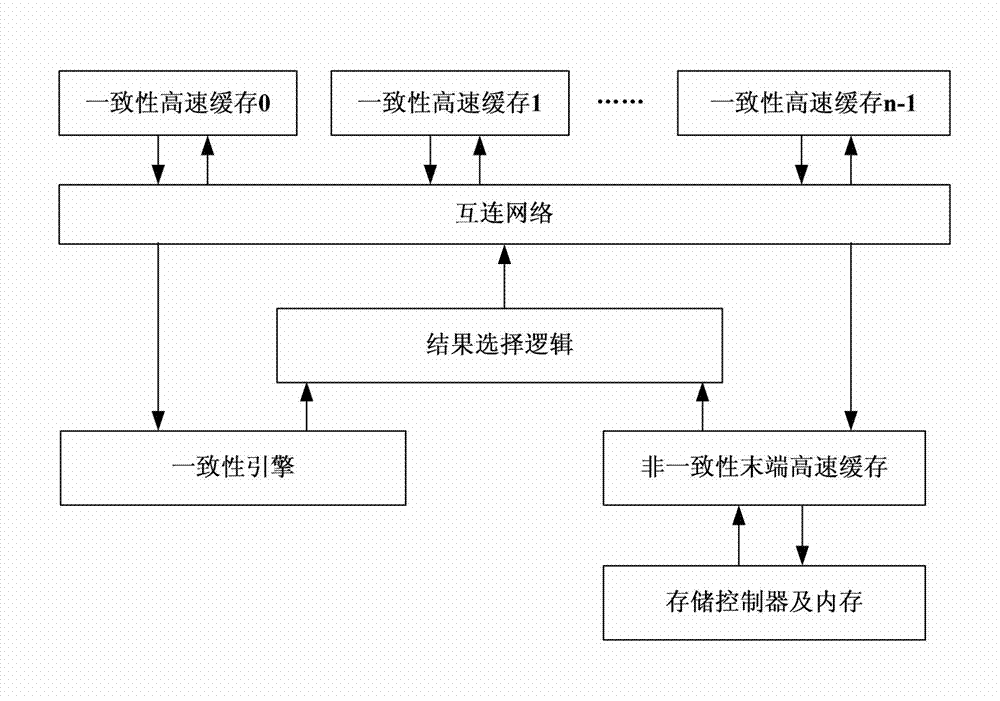

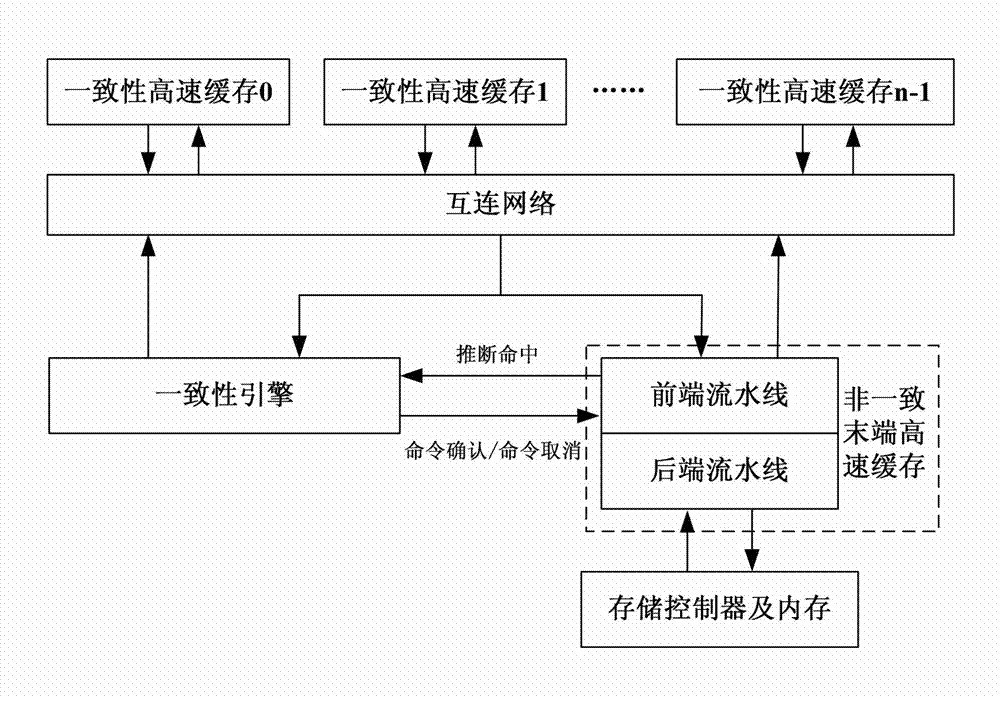

Command cancel-based cache production line lock-step concurrent execution method

Active | CN102819420A | Low memory access latency | Improve memory access performance | Memory addressing/allocation/relocation | Concurrent instruction execution | Operating system | Beat Number

The invention discloses a command cancel-based cache production line lock-step concurrent execution method, which is implemented through the following steps of: (1) performing lock-step concurrent execution by a consistency engine and a last-level cache according to a beat number appointed by a production line and receiving a message from a consistency cache respectively; (2) judging, by the consistency engine, whether the message hits the consistency cache, and judging, by the last-level cache, whether the message hits the last-level cache; and (3) judging, by the consistency engine, whether the last-level cache is required to be accessed, transmitting, by the consistency engine, a command confirmation signal to the last-level cache if the last-level cache is required to be accessed, allowing the last-level cache to access an off-chip memory, if the last-level cache is not required to be accessed, transmitting, by the consistency engine, a command cancel signal to the last-level cache to prevent the last-level cache from accessing the off-chip memory. The command cancel-based cache production line lock-step concurrent execution method has the advantages of low access and storage delay and high access and storage performance.

Owner:NAT UNIV OF DEFENSE TECH

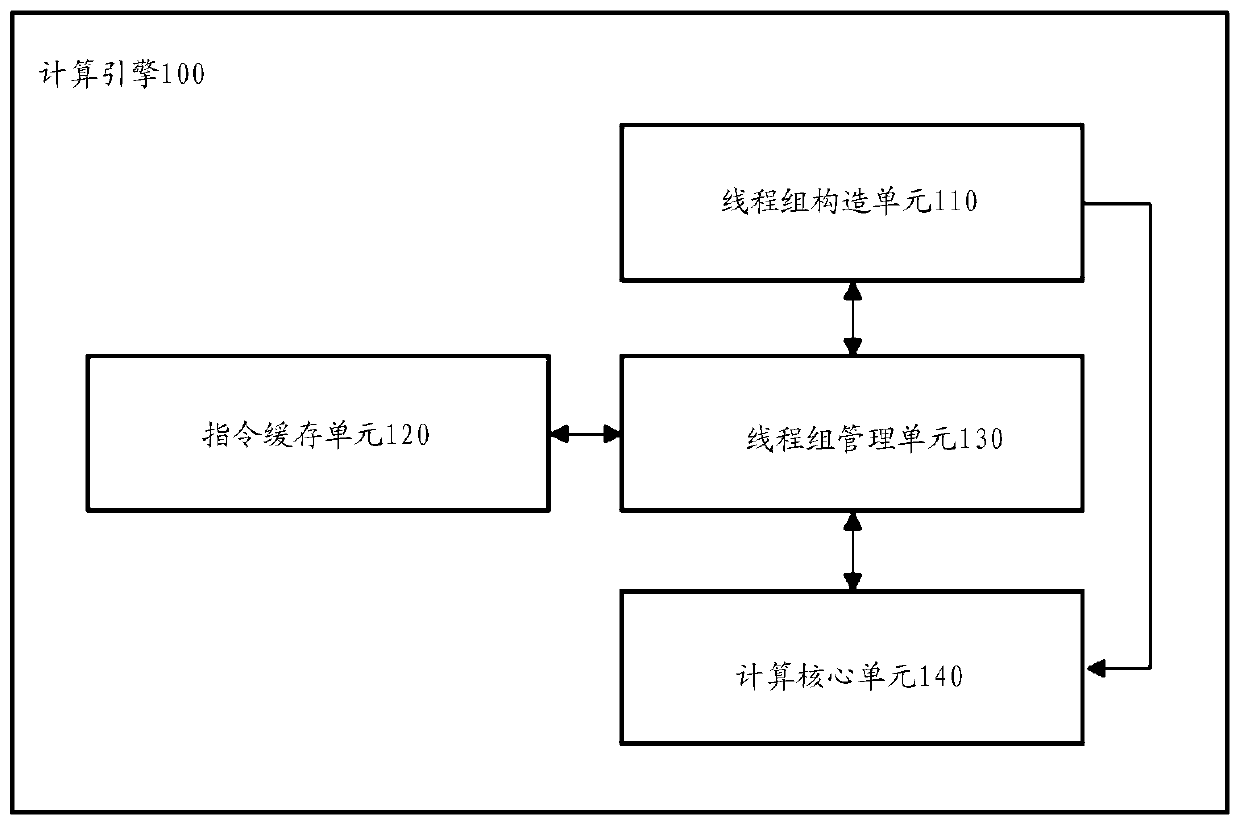

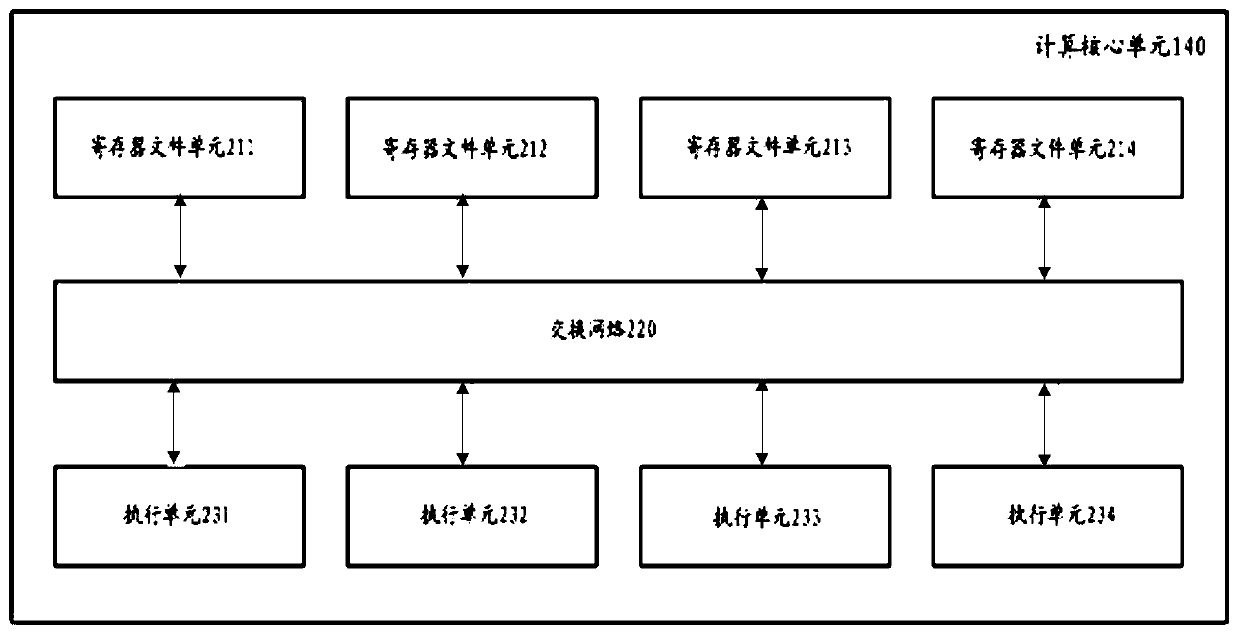

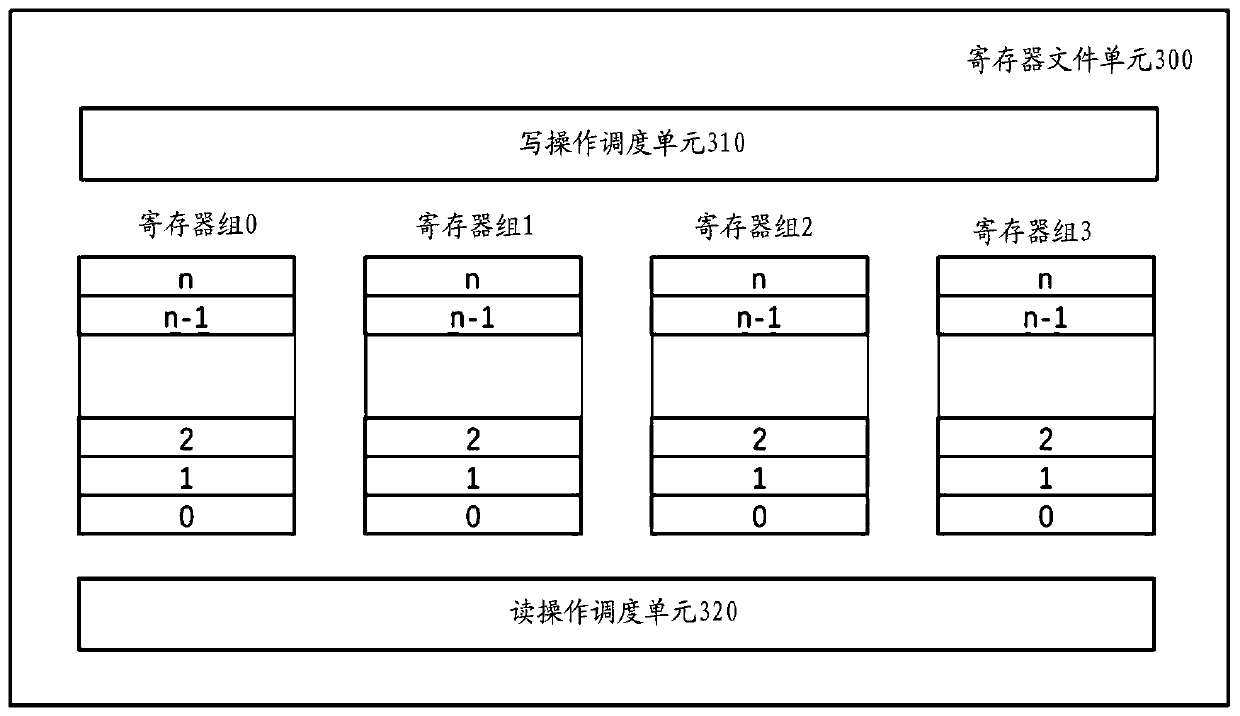

Method of managing register file units

Active | CN111459543A | Reduce Design Complexity | Improve memory access performance | Register arrangements | Engineering | Multi port

The invention provides a method for managing register file units. The register file unit is composed of single-port memory, which serves as the registers that provide operands associated with threads. The method comprises: allocating associated registers to a plurality of threads, and organizing the allocated registers into a plurality of register groups; uniformly distributing the registers associated with each thread across the plurality of register groups, wherein associated data of different threads is stored at the same position of the plurality of register groups; and, for register read and write operations, scheduling the arrangement of operands associated with the plurality of threads so that each register group of the register file unit has only one read operation or one write operation in the same clock period. With this method, the function of a multi-port memory can be simulated using single-port storage, so that the design cost of the register file unit is reduced and the memory access performance is improved.

Owner:SHANGHAI DENGLIN TECH CO LTD

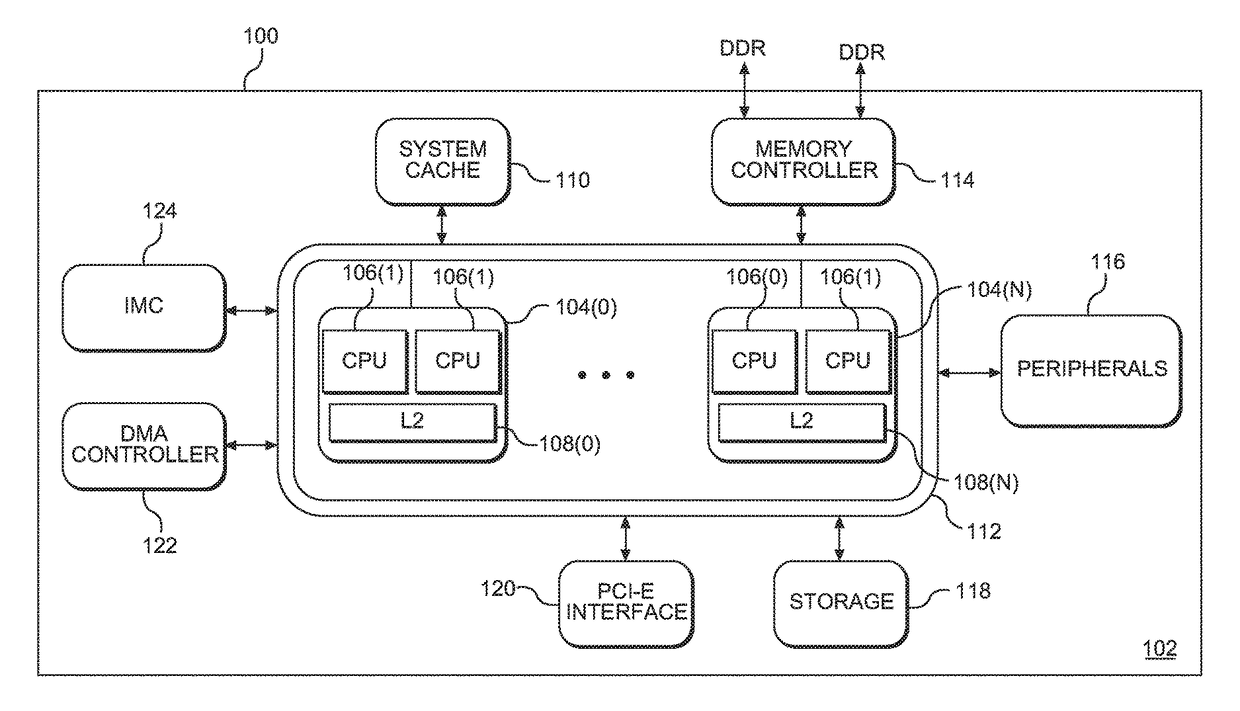

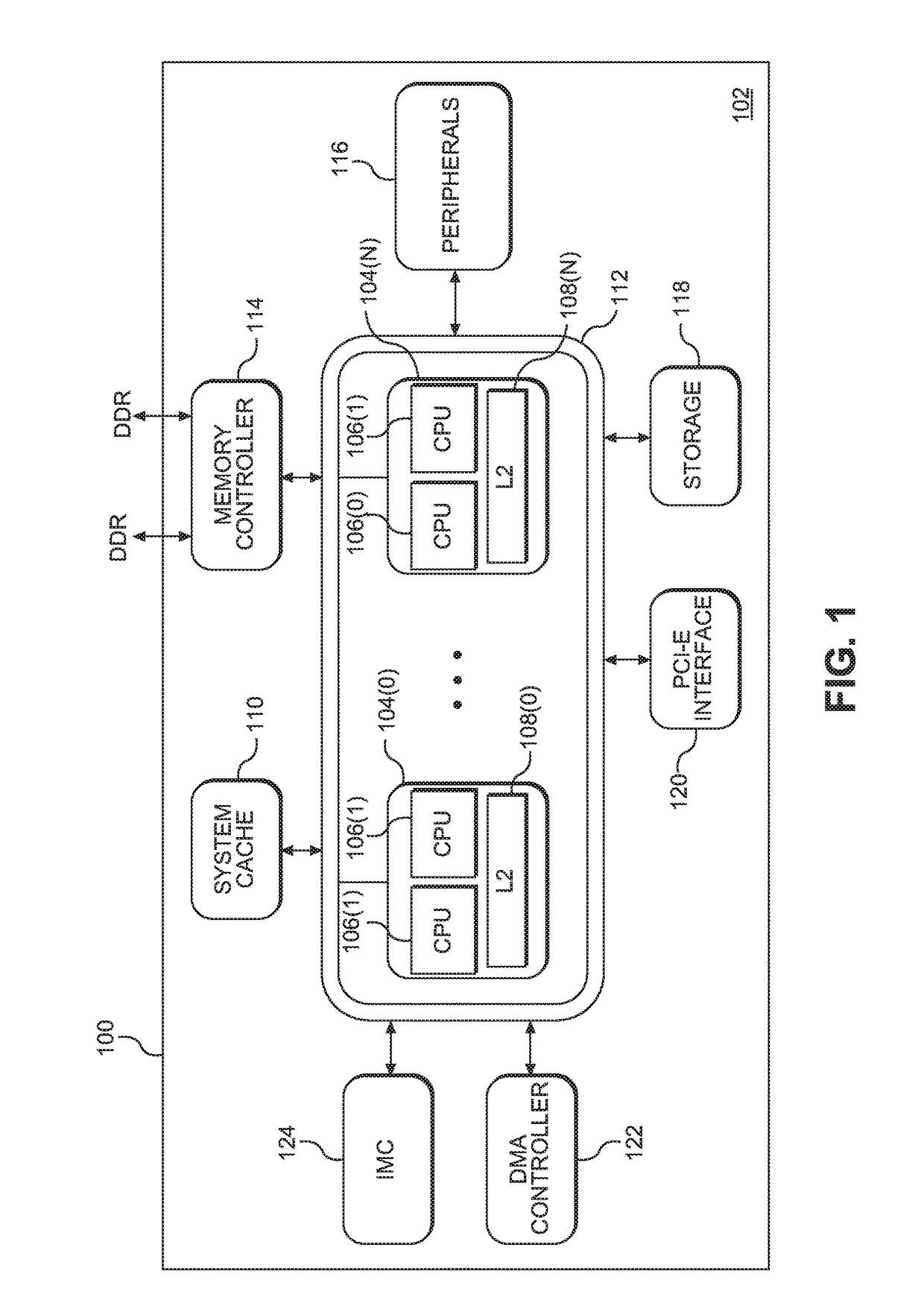

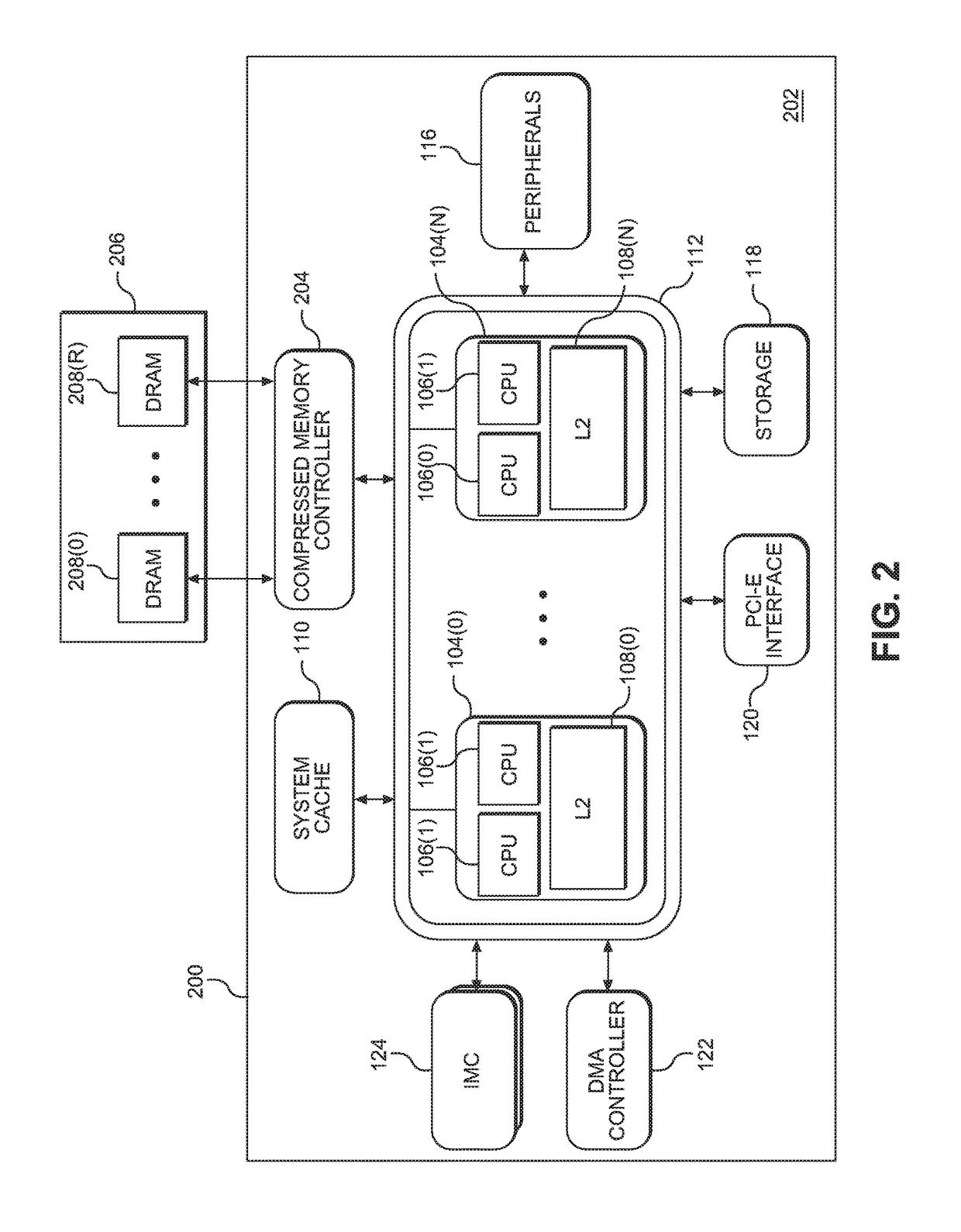

Providing memory bandwidth compression using compression indicator (CI) hint directories in a central processing unit (CPU)-based system

Active | US20170286001A1 | Improve memory access performance | Avoid consumption | Memory architecture accessing/allocation | Input/output to record carriers | Parallel computing | Physical address

Providing memory bandwidth compression using compression indicator (CI) hint directories in a central processing unit (CPU)-based system is disclosed. In this regard, a compressed memory controller provides a CI hint directory comprising a plurality of CI hint directory entries, each providing a plurality of CI hints. The compressed memory controller is configured to receive a memory read request comprising a physical address of a memory line, and initiate a memory read transaction comprising a requested read length value. The compressed memory controller is further configured to, in parallel with initiating the memory read transaction, determine whether the physical address corresponds to a CI hint directory entry in the CI hint directory. If so, the compressed memory controller reads a CI hint from the CI hint directory entry of the CI hint directory, and modifies the requested read length value of the memory read transaction based on the CI hint.

Owner:QUALCOMM INC

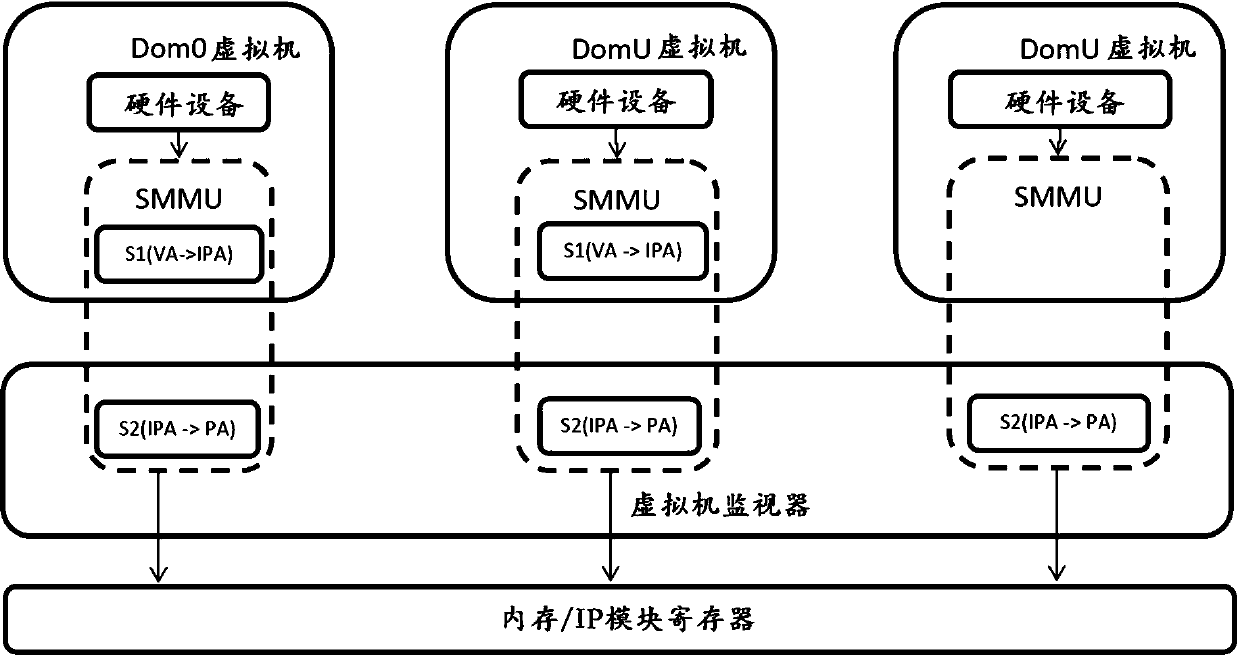

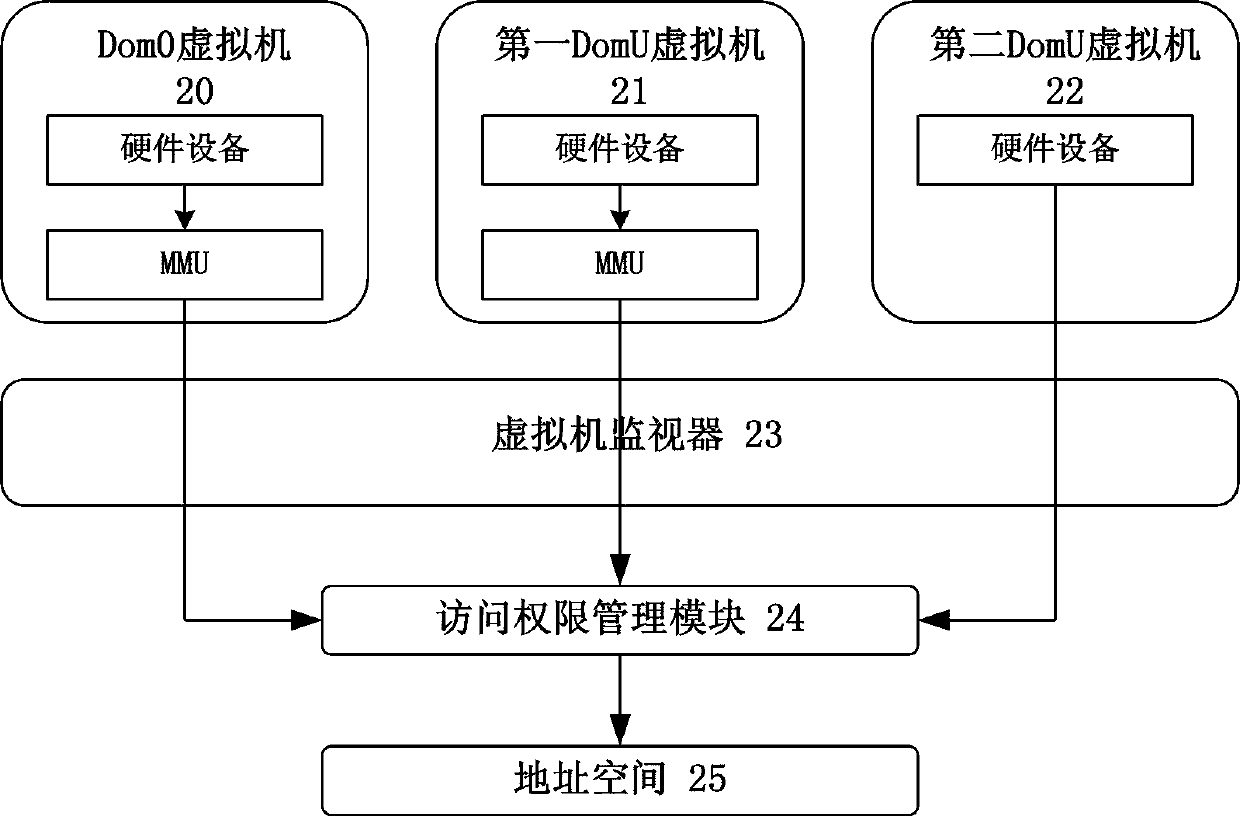

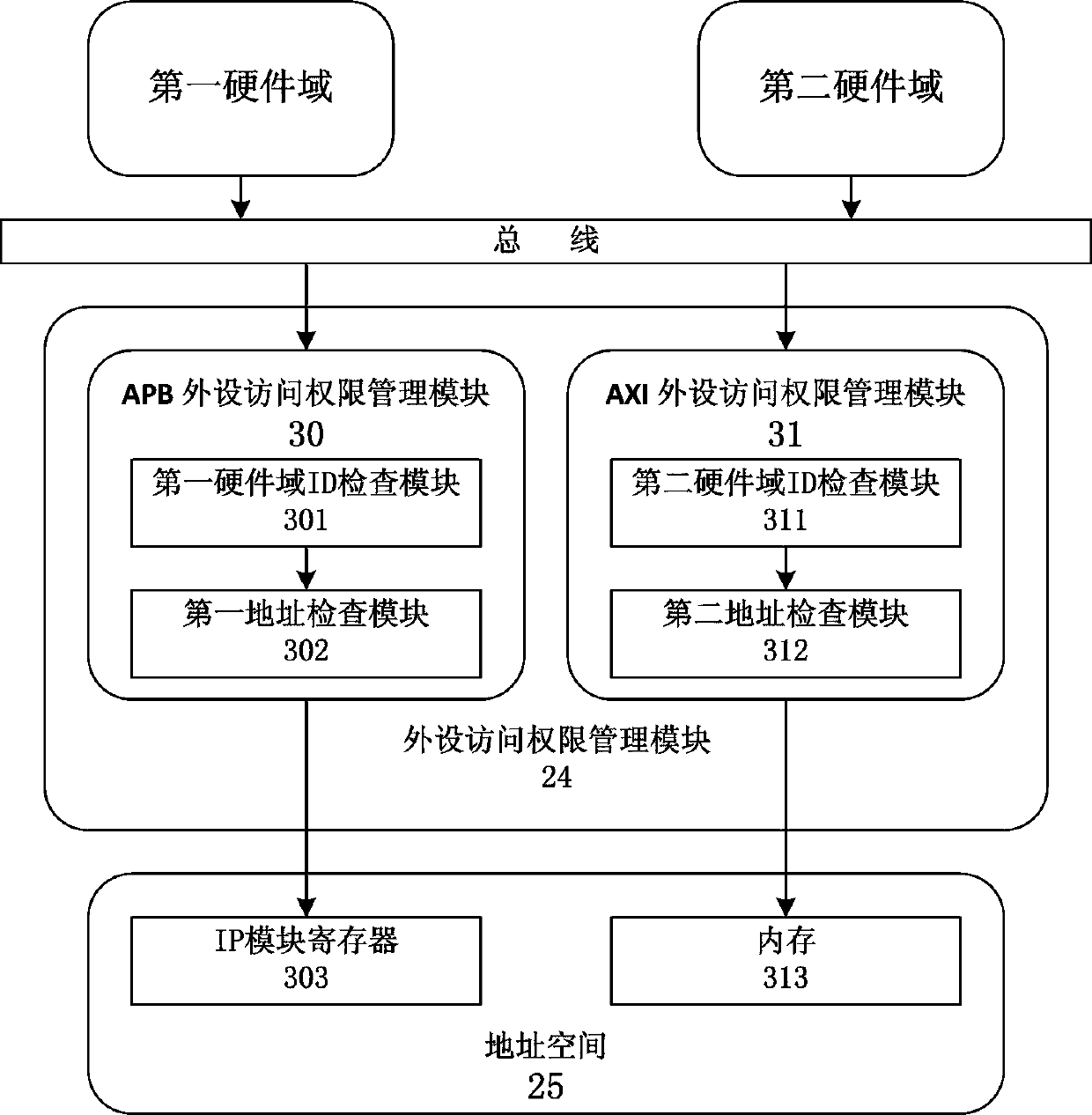

Virtualized address space isolation system and method

Active | CN110442425A | Reduce complexity | Reduce area | Unauthorized memory use protection | Software simulation/interpretation/emulation | Virtualization | Software design

The invention discloses a virtualized address space isolation system. The system comprises a Dom0 virtual machine, a DomU virtual machine, a virtual machine monitor, an access permission management module and an address space. The Dom0 virtual machine is used for creating the DomU virtual machine and, through the virtual machine monitor, setting the physical address space that the DomU virtual machine can access; the virtual machine monitor is used for managing resources and for trapping and emulating privileged and sensitive instructions; and the access permission management module performs a permission check on each access request of the DomU virtual machine to isolate physical address access between DomUs. The invention further provides a virtualized address space isolation method. Hardware isolation of the physical addresses of the virtual machines can be achieved without using an SMMU, so the complexity of software design is reduced, the chip area is reduced, the memory access delay is shortened, and the memory access performance of the system is improved.

Owner:NANJING SEMIDRIVE TECH CO LTD

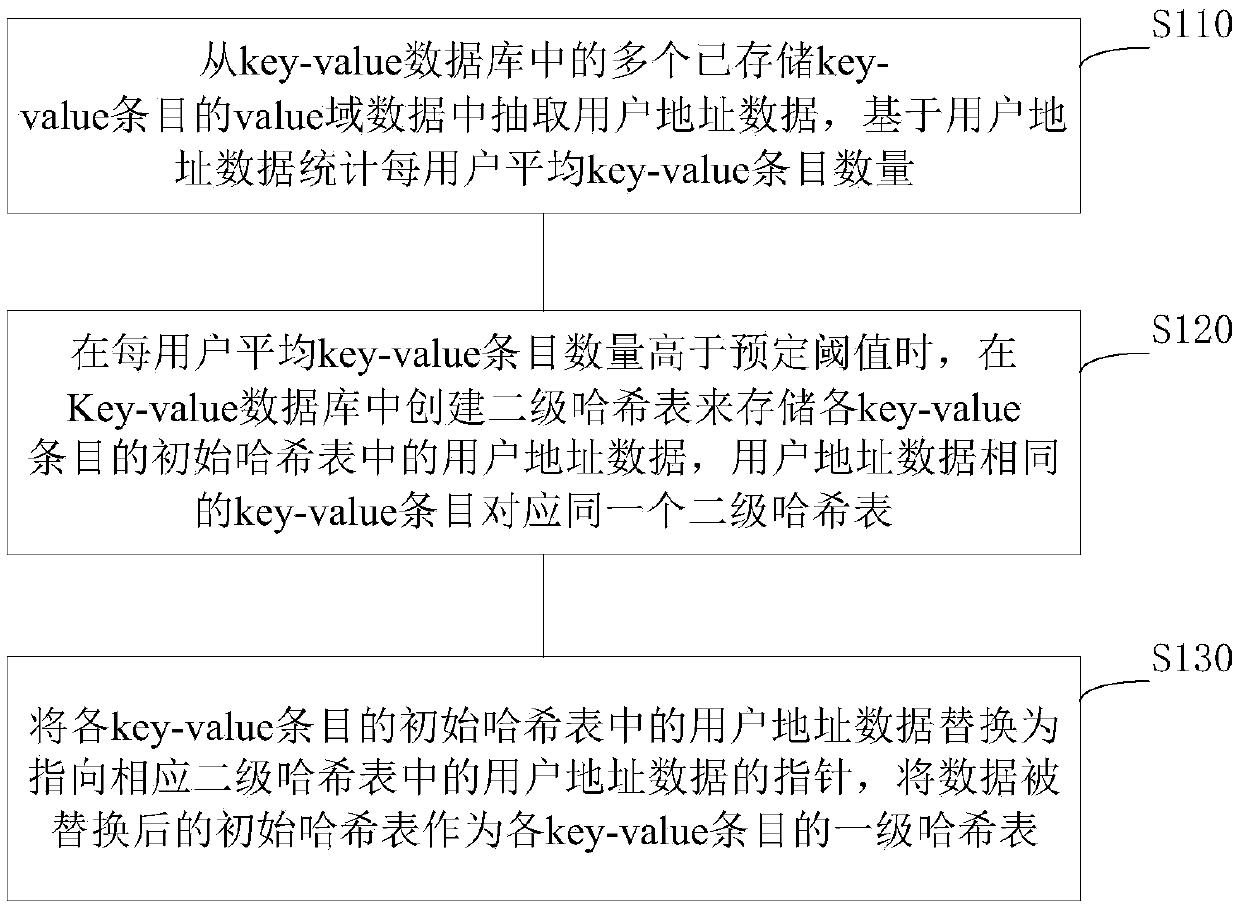

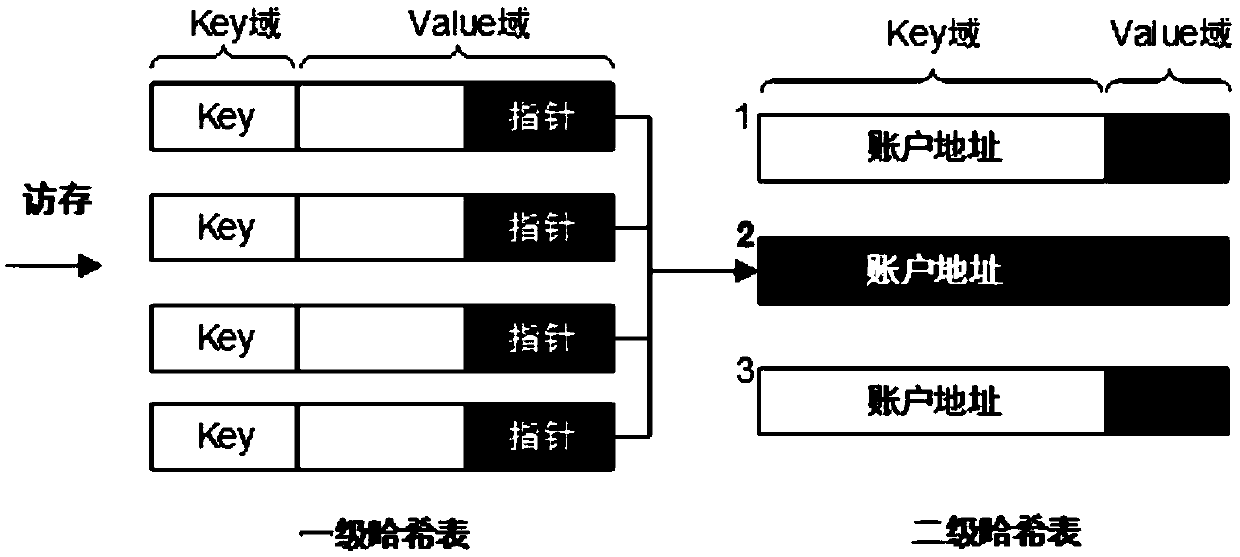

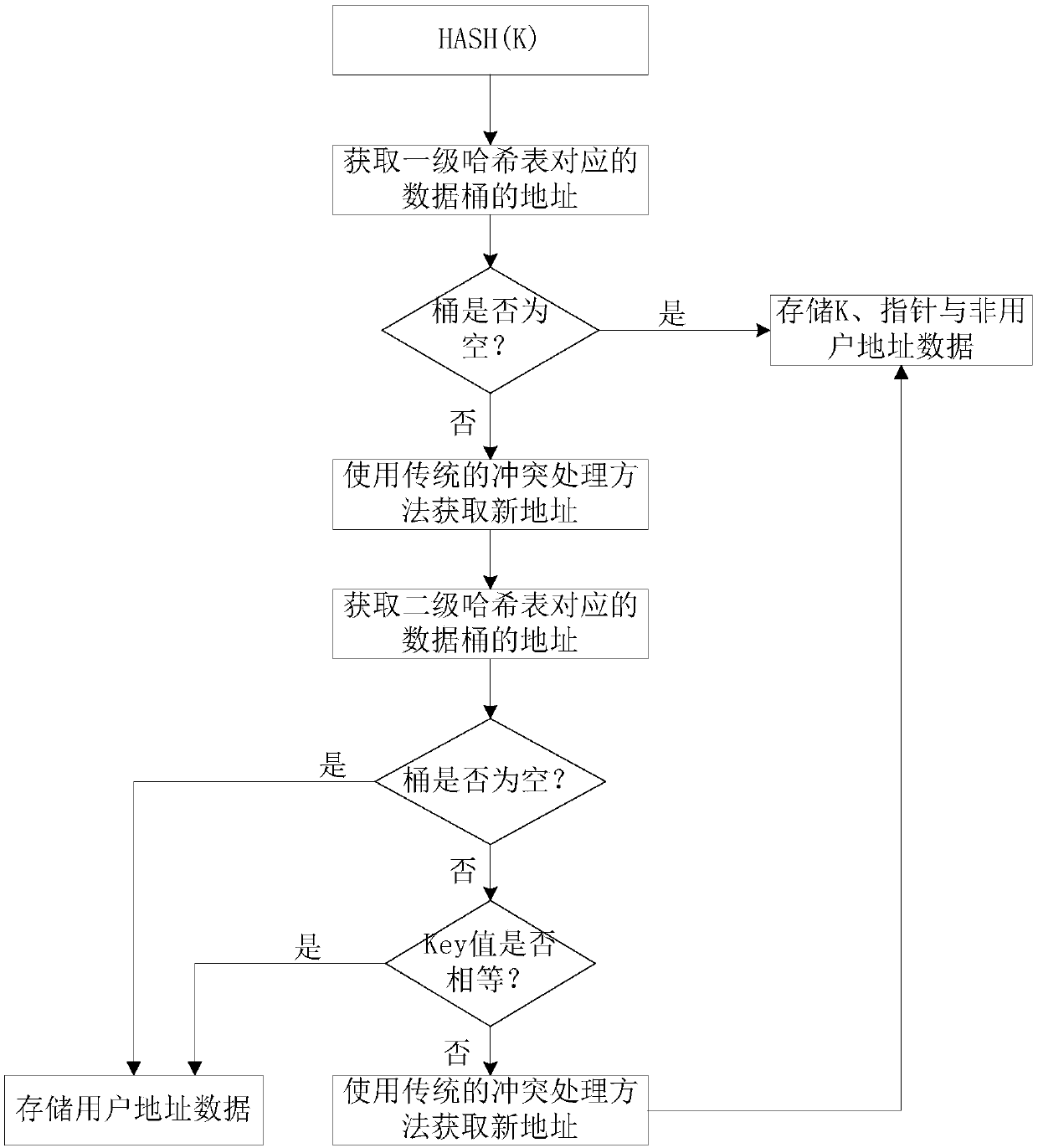

Data compression method, storage method, access method and system in key-value database

The invention provides a data compression method, a storage method, an access method and a system for a key-value database. The data compression method comprises: extracting user address data from the value domain data of a plurality of stored key-value entries in a key-value database, and counting the average number of key-value entries per user based on the user address data; when that average number is higher than a predetermined threshold, creating a secondary hash table in the key-value database to store the user address data held in the initial hash table of each key-value entry, so that key-value entries with the same user address data correspond to the same secondary hash table; replacing the user address data in the initial hash table of each key-value entry with a pointer to the user address data in the corresponding secondary hash table; and storing the initial hash table, after the user address data is replaced, as the first-level hash table of each key-value entry in the key-value database. The method and the system of the invention can improve the memory access performance in a blockchain database.

Owner:YUSUR TECH CO LTD
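
The address-deduplication idea in the abstract above can be sketched with plain dictionaries. This is a simplified illustration under stated assumptions: a single shared secondary table stands in for the secondary hash tables, an integer slot stands in for the pointer, and the function name and the `"addr"` field are hypothetical.

```python
def compress_addresses(entries, threshold):
    """entries maps key -> {"addr": user_address, ...other value fields}.

    Returns (first_level, secondary). If the average number of entries per
    user address does not exceed the threshold, the data is left unchanged.
    """
    addrs = {value["addr"] for value in entries.values()}
    if not addrs or len(entries) / len(addrs) <= threshold:
        return entries, {}

    secondary = {}    # slot -> user address, each address stored once
    slot_of = {}      # user address -> slot (helper used while building)
    first_level = {}
    for key, value in entries.items():
        slot = slot_of.setdefault(value["addr"], len(slot_of))
        secondary[slot] = value["addr"]
        # The slot number replaces the full address in the stored entry.
        first_level[key] = {**value, "addr": slot}
    return first_level, secondary
```

The saving comes from storing each long user address once and keeping only a short slot reference in every entry that shares it.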

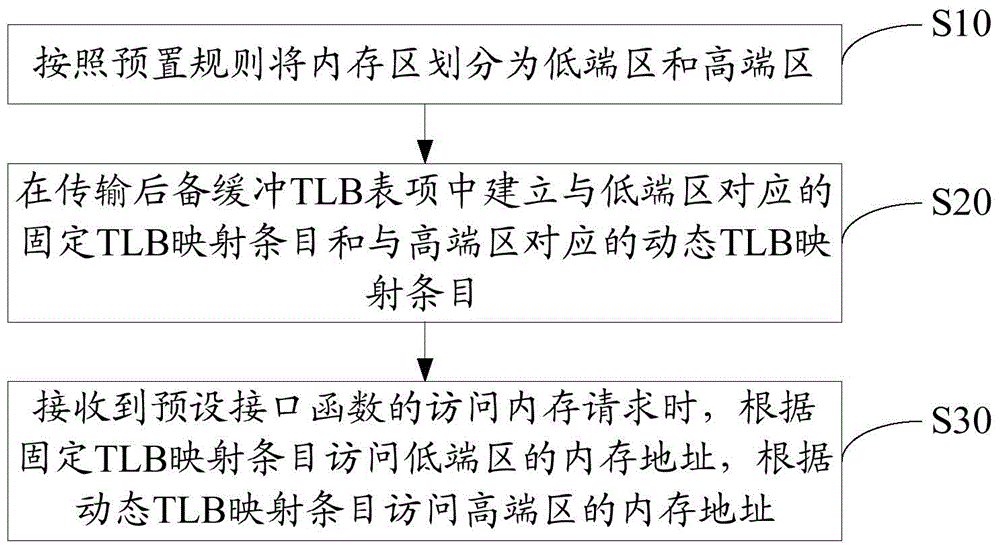

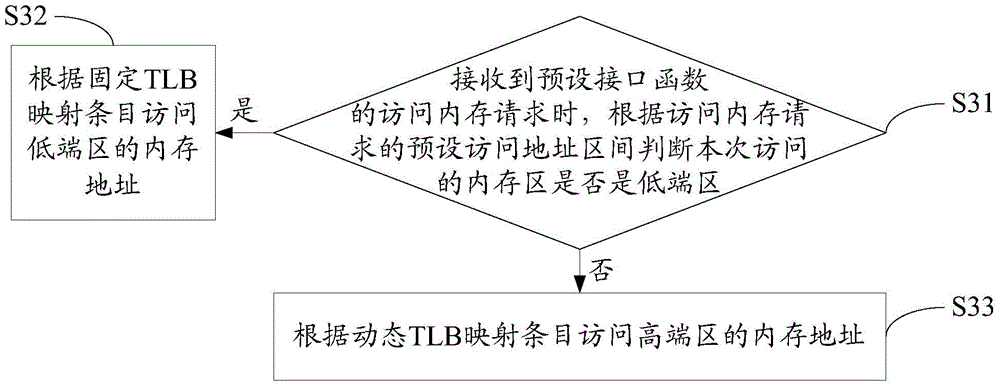

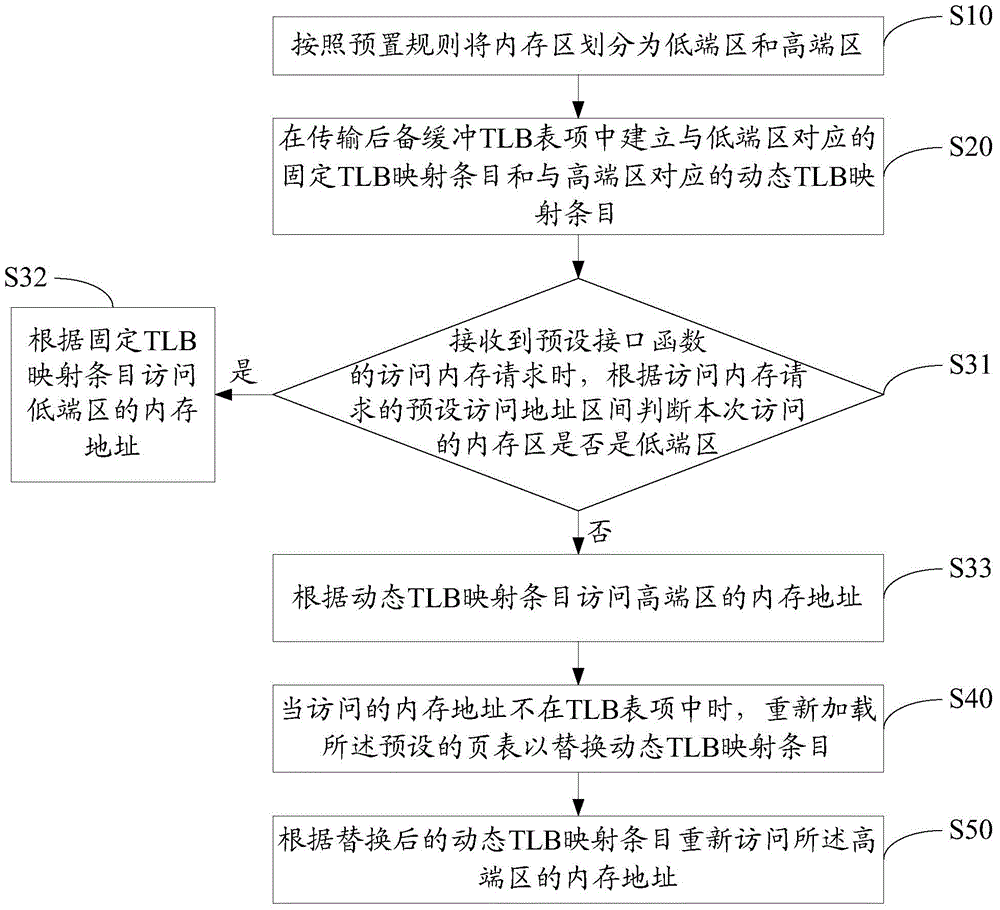

Memory access processing method and device

Active | CN106326150A | Reduce occupancy | Improve memory access performance | Memory addressing/allocation/relocation | Memory address | Occupancy rate

The invention discloses a memory access processing method. The memory access processing method comprises the following steps: partitioning a memory area into a low area and a high area according to a preset rule; building a fixed translation lookaside buffer (TLB) mapping entry corresponding to the low area and a dynamic TLB mapping entry corresponding to the high area in a TLB table entry, wherein the dynamic TLB mapping entry is used for replacing a mapping relationship according to a preset page table; and when a memory access request of a preset interface function is received, accessing a memory address of the low area according to the fixed TLB mapping entry, and accessing a memory address of the high area according to the dynamic TLB mapping entry. The invention also discloses a memory access processing device. Through adoption of the memory access processing method and device, the memory access performance of a system is enhanced, and the occupancy rate of a CPU (Central Processing Unit) is reduced.

Owner:ZTE CORP
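
The split between fixed and dynamic TLB entries can be modelled in a few lines. The sketch below is illustrative only: the `SplitTLB` class, the 4 KiB page size, the dictionary used as the page table and the LRU replacement of dynamic entries are assumptions, since the abstract does not state a replacement policy.

```python
from collections import OrderedDict

PAGE = 4096  # assumed page size


class SplitTLB:
    """Hypothetical TLB with pinned low-area entries and replaceable
    high-area entries."""

    def __init__(self, boundary, page_table, dynamic_slots=2):
        self.boundary = boundary          # first address of the high area
        self.page_table = page_table      # virtual page -> physical page
        # Fixed entries: every low-area page is mapped once, never evicted.
        self.fixed = {vp: pp for vp, pp in page_table.items()
                      if vp * PAGE < boundary}
        self.dynamic = OrderedDict()      # high-area entries in LRU order
        self.dynamic_slots = dynamic_slots
        self.refills = 0                  # count of dynamic entry reloads

    def translate(self, vaddr):
        vpage, offset = divmod(vaddr, PAGE)
        if vaddr < self.boundary:
            return self.fixed[vpage] * PAGE + offset
        if vpage in self.dynamic:
            self.dynamic.move_to_end(vpage)
        else:
            self.refills += 1
            if len(self.dynamic) >= self.dynamic_slots:
                self.dynamic.popitem(last=False)   # evict least recently used
            self.dynamic[vpage] = self.page_table[vpage]
        return self.dynamic[vpage] * PAGE + offset
```

Low-area accesses never cause a refill, which is the property the fixed mapping is meant to provide; only high-area accesses compete for the dynamic slots.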

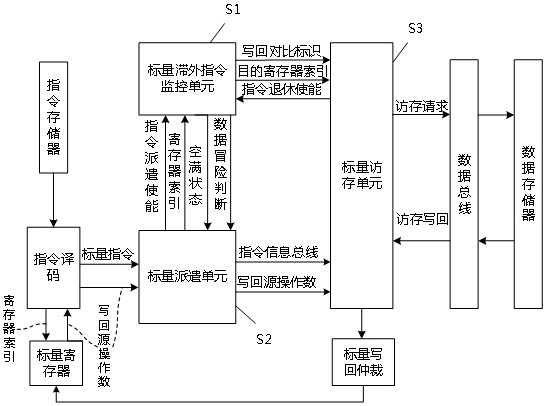

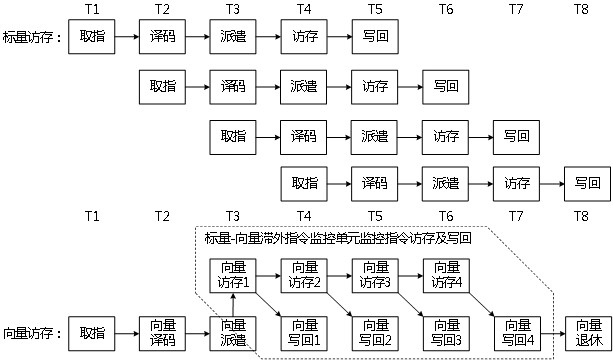

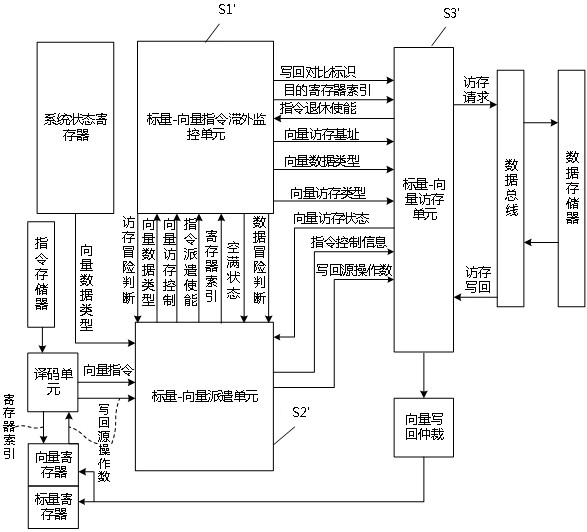

RISC-V vector memory access processing system and processing method

Pending | CN114579188A | Improve memory access performance | Improve processing efficiency | Instruction analysis | Energy efficient computing | Instruction memory | Computer architecture

The invention discloses an RISC-V vector memory access processing system and method, and the system comprises a scalar-vector dispatching unit, a scalar-vector instruction lag monitoring unit and a scalar-vector memory access unit which can transmit data mutually, and a decoding unit and a system state register which are connected with the scalar-vector dispatching unit. The decoding unit is also connected with an instruction memory, a vector register and a scalar register; the scalar-vector access unit is connected with a data bus and a vector write-back arbitration unit, and the vector write-back arbitration unit is also connected with the vector register and the scalar register. On the basis of an existing scalar processing system, only a small number of hardware function units are added, the memory access capacity of the scalar processor for the vector data is improved, the invalid dispatching-logout time of the processor is shortened, and the processing efficiency of the scalar processor for the vector data is greatly improved.

Owner:Chengdu Chipintelli Technology Co., Ltd. (成都启英泰伦科技有限公司)

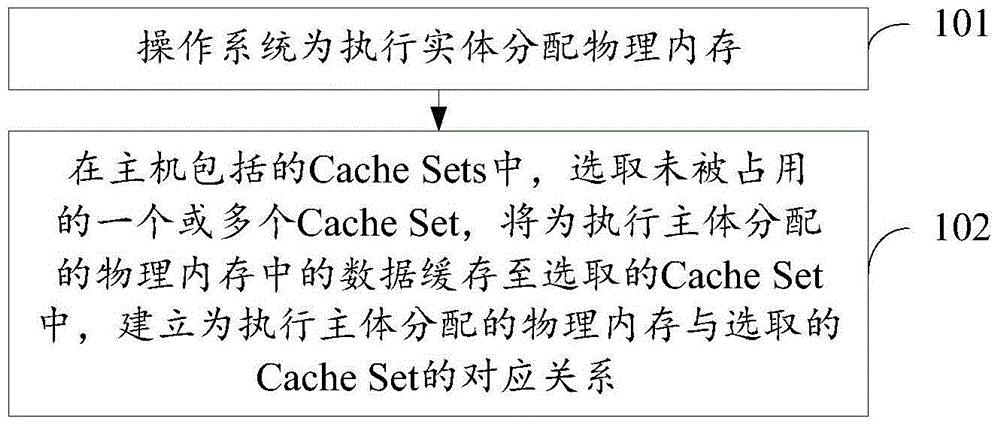

Cache partitioning method and device

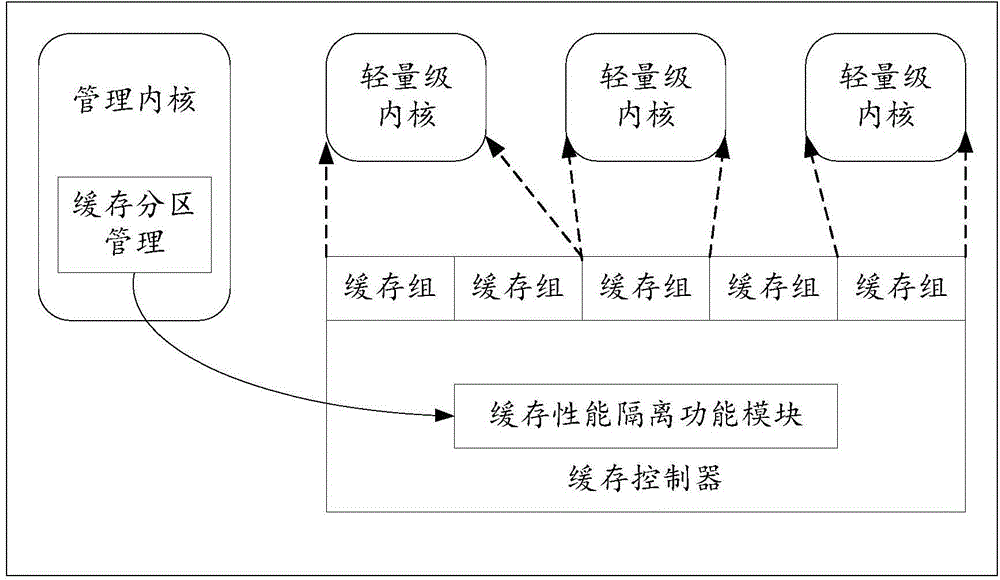

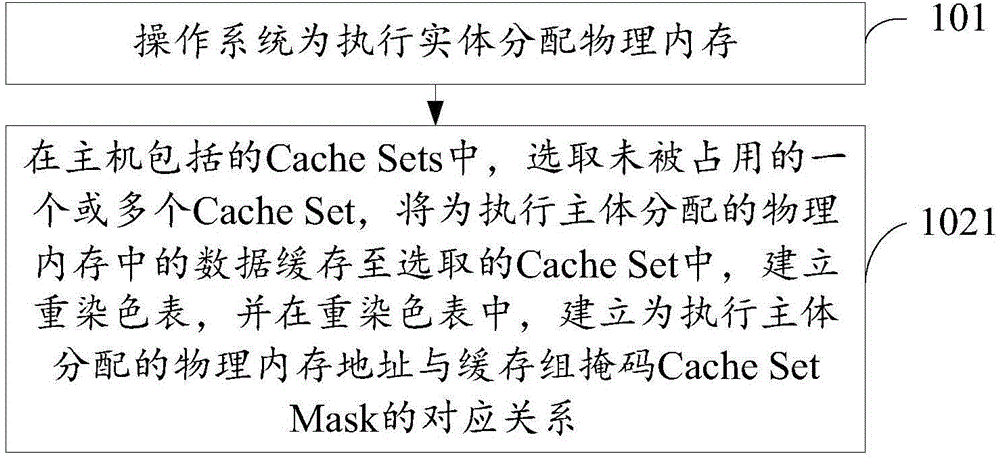

Active | CN105095105A | Improve memory access performance | Reduce distractions | Memory addressing/allocation/relocation | Operational system | Parallel computing

Disclosed are a Cache partitioning method and device, which relate to the field of electronic information technology, and can flexibly allocate physical memories and Cache Sets for executive entities, thereby reducing interference generated by a plurality of executive entities in the usage of a Cache and improving the performance of memory access. The method comprises: allocating, by an operating system, a physical memory to an executive entity (101); and selecting one or more unoccupied Cache Sets from Cache Sets included in a host, caching data from the physical memory allocated to the executive entity into the selected Cache Set, and establishing a correlation between the physical memory allocated to the executive entity and the selected Cache Set (102). The method is suitable for a scenario where an appropriate Cache Set is allocated to an executive entity.

Owner:HUAWEI TECH CO LTD +1
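
The two steps in the abstract above (allocate physical memory to an executive entity, then bind unoccupied Cache Sets to it) can be sketched as a small bookkeeping model. This is only an illustration under stated assumptions: the class `CachePartitioner`, its method names, and the lowest-index-first selection rule are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass, field


@dataclass
class CachePartitioner:
    """Toy bookkeeping model of which cache sets belong to which entity."""

    num_sets: int
    # Hypothetical table: cache-set index -> owning entity name.
    owner_of_set: dict = field(default_factory=dict)
    # Hypothetical table: entity name -> (physical pages, bound cache sets).
    bindings: dict = field(default_factory=dict)

    def free_sets(self):
        """Return the indices of cache sets that no entity occupies."""
        return [s for s in range(self.num_sets) if s not in self.owner_of_set]

    def allocate(self, entity, pages, sets_wanted):
        """Record the entity's memory (step 101) and bind free sets (step 102)."""
        if entity in self.bindings:
            raise ValueError(f"{entity} already has an allocation")
        free = self.free_sets()
        if len(free) < sets_wanted:
            raise RuntimeError("not enough unoccupied cache sets")
        chosen = free[:sets_wanted]  # assumption: pick lowest indices first
        for s in chosen:
            self.owner_of_set[s] = entity
        self.bindings[entity] = (list(pages), chosen)
        return chosen

    def release(self, entity):
        """Drop the entity's binding so its cache sets become free again."""
        _pages, sets = self.bindings.pop(entity)
        for s in sets:
            del self.owner_of_set[s]
```

Because each set has at most one owner in this model, two entities never share a set, which is the interference-reduction property the abstract describes.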

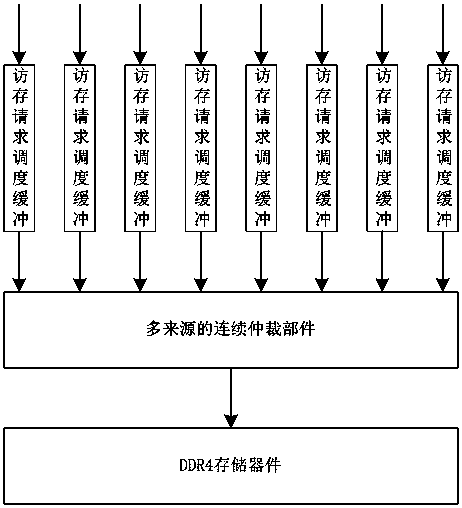

DDR4 performance balance scheduling structure and method for multiple request sources

Inactive | CN110716797A | Improve memory access bandwidth | Improve memory access performance | Program initiation/switching | Resource allocation | Computer network | System structure

The invention relates to the technical field of computer system structures and processor microstructures, in particular to a DDR4 performance balance scheduling structure and method for multiple request sources. The DDR4 performance balance scheduling structure comprises a plurality of memory access request scheduling buffers, one per memory access request source, used for improving the memory access bandwidth of the corresponding source; a multi-source continuous arbitration component used for selecting one memory access request to be transmitted; and a DDR4 storage device used for receiving the memory access request transmitted by the multi-source continuous arbitration component. The DDR4 performance balance scheduling method comprises the following steps: L1, setting a memory access request scheduling buffer for the memory access requests of each memory access request source; and L2, enabling the multi-source continuous arbitration component to select one memory access request to transmit through an arbitration strategy. Because separate memory access request scheduling buffers are set for the multiple request sources, the impact on memory access delay is reduced while the memory access bandwidth is improved, and the overall memory access performance of the system is improved.

Owner:JIANGNAN INST OF COMPUTING TECH
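
The structure in the abstract above (one scheduling buffer per request source, plus an arbiter that picks one request to transmit) can be sketched as follows. The abstract does not say which arbitration strategy is used, so the round-robin rule here is an assumption, and the class and method names are hypothetical.

```python
from collections import deque


class MultiSourceScheduler:
    """One FIFO buffer per request source, drained by a round-robin arbiter."""

    def __init__(self, sources):
        # Step L1: a dedicated scheduling buffer for every request source.
        self.buffers = {name: deque() for name in sources}
        self.order = list(sources)
        self.next_index = 0  # source that gets first chance at the next turn

    def submit(self, source, request):
        """Queue a memory access request in its source's own buffer."""
        self.buffers[source].append(request)

    def arbitrate(self):
        """Step L2: pick one request to transmit, or None if all are empty.

        Assumed strategy: round-robin over sources, skipping empty buffers.
        """
        count = len(self.order)
        for offset in range(count):
            idx = (self.next_index + offset) % count
            source = self.order[idx]
            if self.buffers[source]:
                self.next_index = (idx + 1) % count
                return source, self.buffers[source].popleft()
        return None
```

With separate buffers, a burst from one source cannot push another source's requests further back in a shared queue, which is one plausible reading of the delay benefit the abstract claims.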

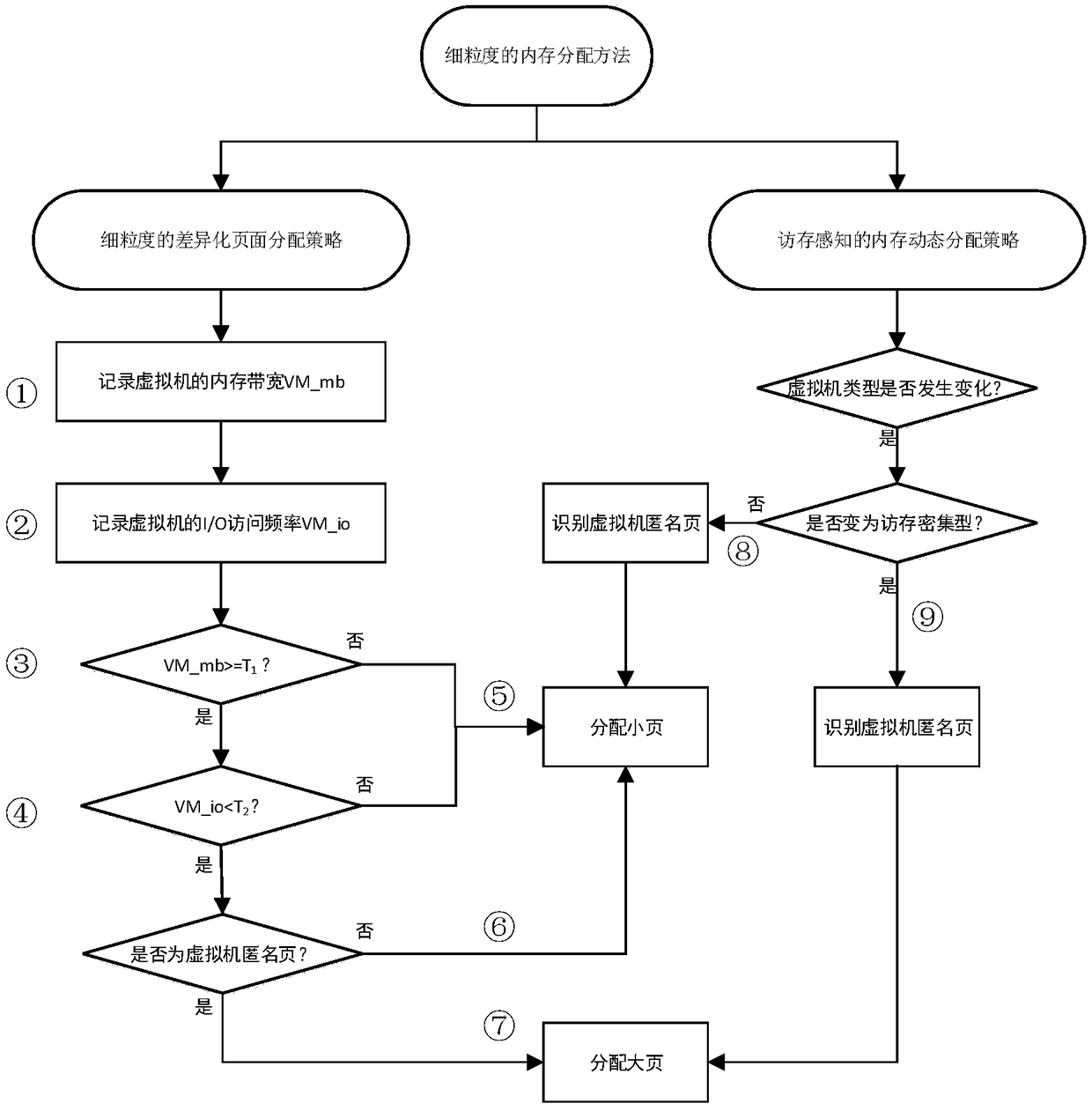

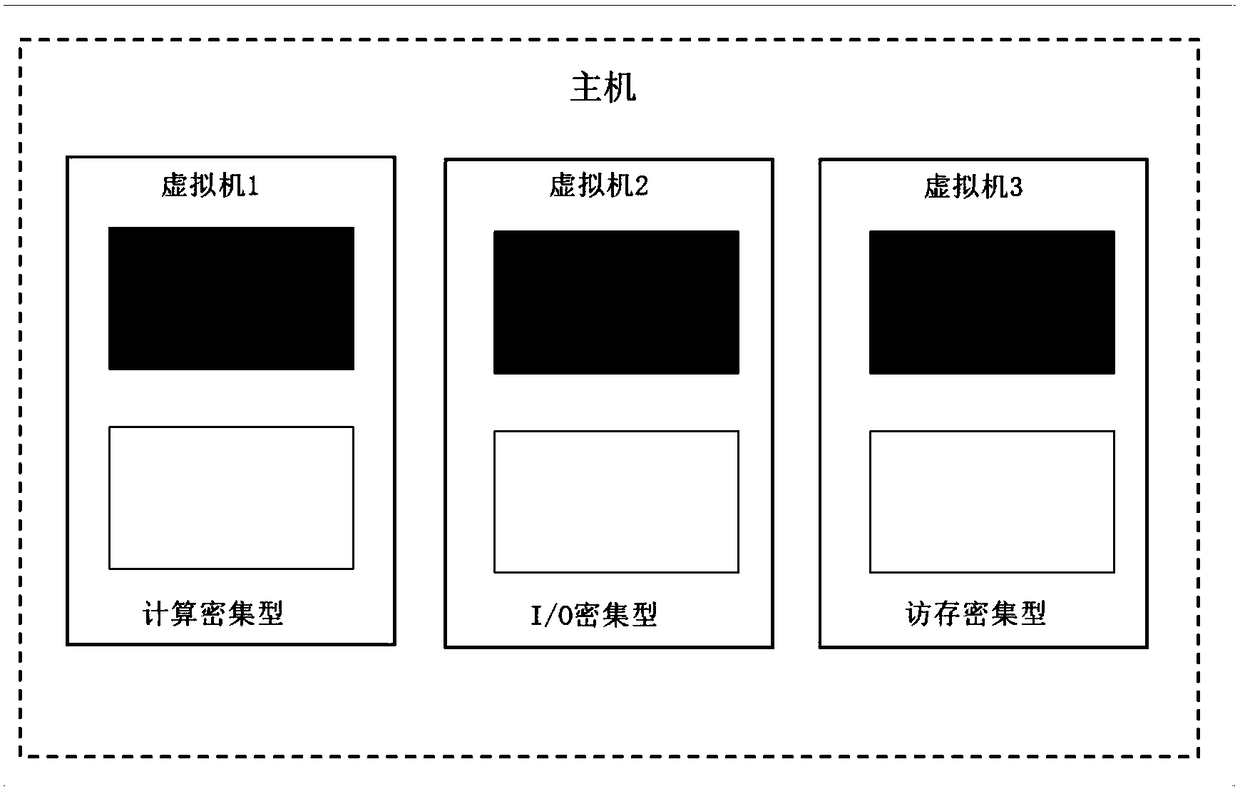

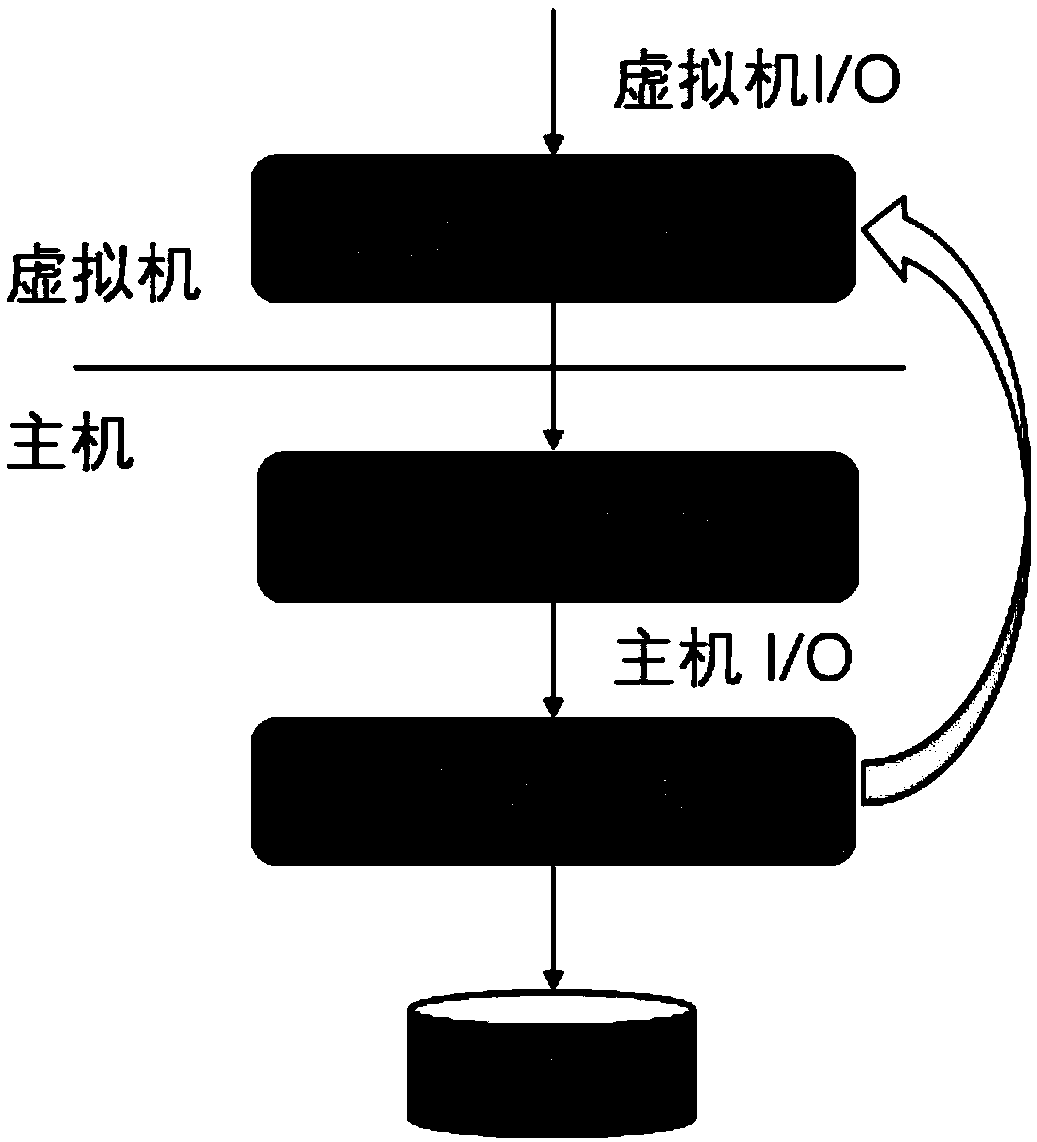

Memory allocation method based on fine granularity

Active | CN108920254A | Improve deduplication rate | Alleviate memory bloat | Software simulation/interpretation/emulation | Distribution method | Granularity

The invention discloses a fine-grained memory allocation method. The method combines detection of the virtual machine type, detection of the page type inside a virtual machine, a fine-grained differentiated page allocation strategy, and an access-aware dynamic memory allocation strategy. Because the virtual machine type is distinguished, all pages used by I/O-intensive and compute-intensive virtual machines are small. Compared with the system default of allocating large pages to virtual machines, this relieves memory bloat, reduces memory allocation overhead, and increases the memory deduplication rate. Meanwhile, for an access-intensive virtual machine, the allocated anonymous pages are large, so high memory access performance is maintained, while its Page Cache pages and kernel pages are small. Compared with the default large-page strategy, this again relieves memory bloat, reduces memory allocation overhead, and increases the memory deduplication rate, while keeping the loss of system performance as small as possible.

Owner:UNIV OF SCI & TECH OF CHINA
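
The allocation policy described in the abstract above reduces to a small decision rule over the virtual machine type and the page type. The sketch below encodes only that rule. The enum names, the function `choose_page_size`, and the concrete sizes (4 KiB and 2 MiB, typical of x86-64) are assumptions and are not stated in the abstract.

```python
from enum import Enum


class VMType(Enum):
    IO_INTENSIVE = "io"
    COMPUTE_INTENSIVE = "compute"
    ACCESS_INTENSIVE = "access"


class PageKind(Enum):
    ANONYMOUS = "anonymous"
    PAGE_CACHE = "page_cache"
    KERNEL = "kernel"


SMALL_PAGE = 4 * 1024          # assumption: 4 KiB base page
LARGE_PAGE = 2 * 1024 * 1024   # assumption: 2 MiB huge page


def choose_page_size(vm_type, page_kind):
    """Return the page size in bytes for one allocation.

    Only anonymous pages of access-intensive VMs get large pages;
    every other combination gets small pages.
    """
    if vm_type is VMType.ACCESS_INTENSIVE and page_kind is PageKind.ANONYMOUS:
        return LARGE_PAGE
    return SMALL_PAGE
```

Small pages are easier to deduplicate because two 4 KiB pages are far more likely to be byte-identical than two 2 MiB pages, which is why the rule keeps large pages to the one case where access performance matters most.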

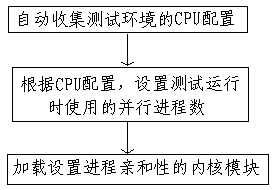

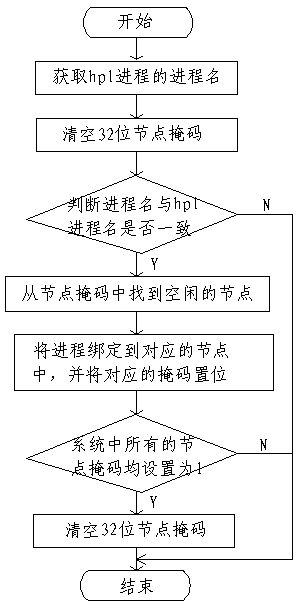

Method for HPL test optimization based on memory affinity

Inactive | CN107832213A | Improve test results | Improve memory access efficiency | Resource allocation | Software testing/debugging | Parallel computing | Test optimization

The invention discloses a method for HPL test optimization based on memory affinity and belongs to the technical field of computers. The method comprises the following steps: S1, automatically collecting the CPU configuration of the test environment from system files; S2, setting the number of parallel processes used during the test run according to the CPU configuration; and S3, loading a kernel module that sets process affinity. The method avoids the overhead of process or memory migration, greatly improves the system's memory performance, and has good value for popularization and application.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD
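
Steps S1 and S2 above can be sketched in user space as parsing CPU topology text and deriving a process-to-CPU plan. This is a minimal illustration, assuming the system file is in the Linux `/proc/cpuinfo` format with `processor` and `physical id` fields; the function names are hypothetical, and the plan of one process per logical CPU is an assumption. The patent sets affinity through a kernel module (S3), which this sketch does not attempt.

```python
def parse_cpuinfo(text):
    """Map each socket (physical id) to its sorted logical CPU numbers."""
    sockets = {}
    cpu = None
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # blank separator line between CPU records
        key = key.strip()
        value = value.strip()
        if key == "processor":
            cpu = int(value)
        elif key == "physical id" and cpu is not None:
            sockets.setdefault(int(value), []).append(cpu)
    return {socket: sorted(cpus) for socket, cpus in sockets.items()}


def plan_affinity(sockets):
    """Assign one process rank per logical CPU, filling socket by socket.

    Returns a list of (rank, socket, cpu) tuples. Keeping consecutive
    ranks on one socket is an assumed heuristic for memory locality.
    """
    plan = []
    rank = 0
    for socket in sorted(sockets):
        for cpu in sockets[socket]:
            plan.append((rank, socket, cpu))
            rank += 1
    return plan
```

On Linux, a process could apply its entry from the plan with the standard-library call `os.sched_setaffinity(0, {cpu})`; the length of the plan gives the number of parallel processes for step S2.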

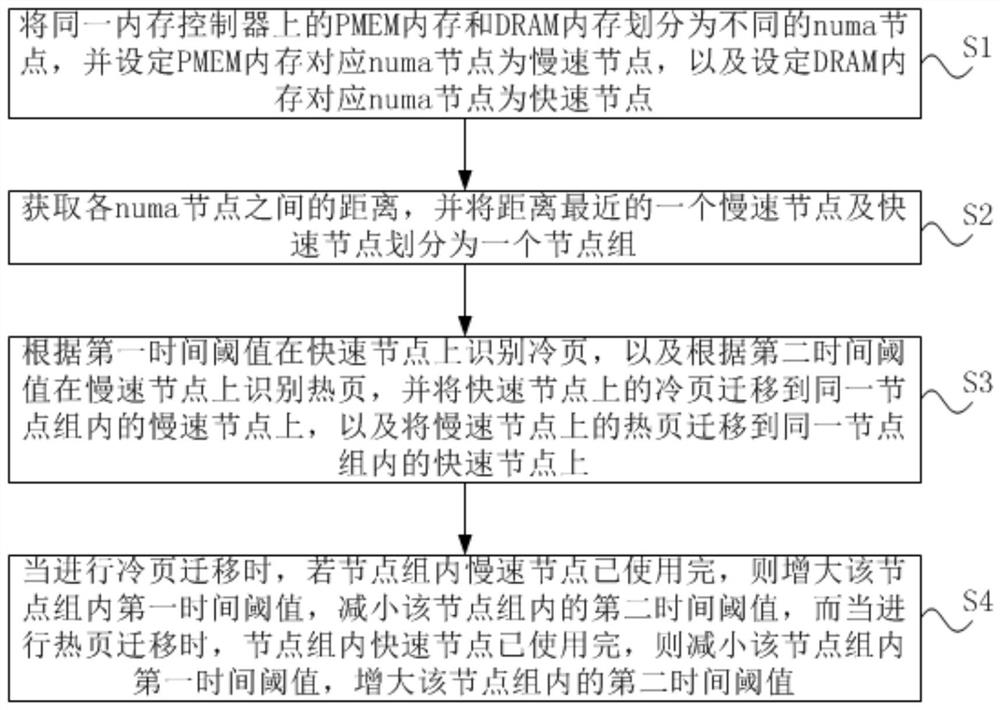

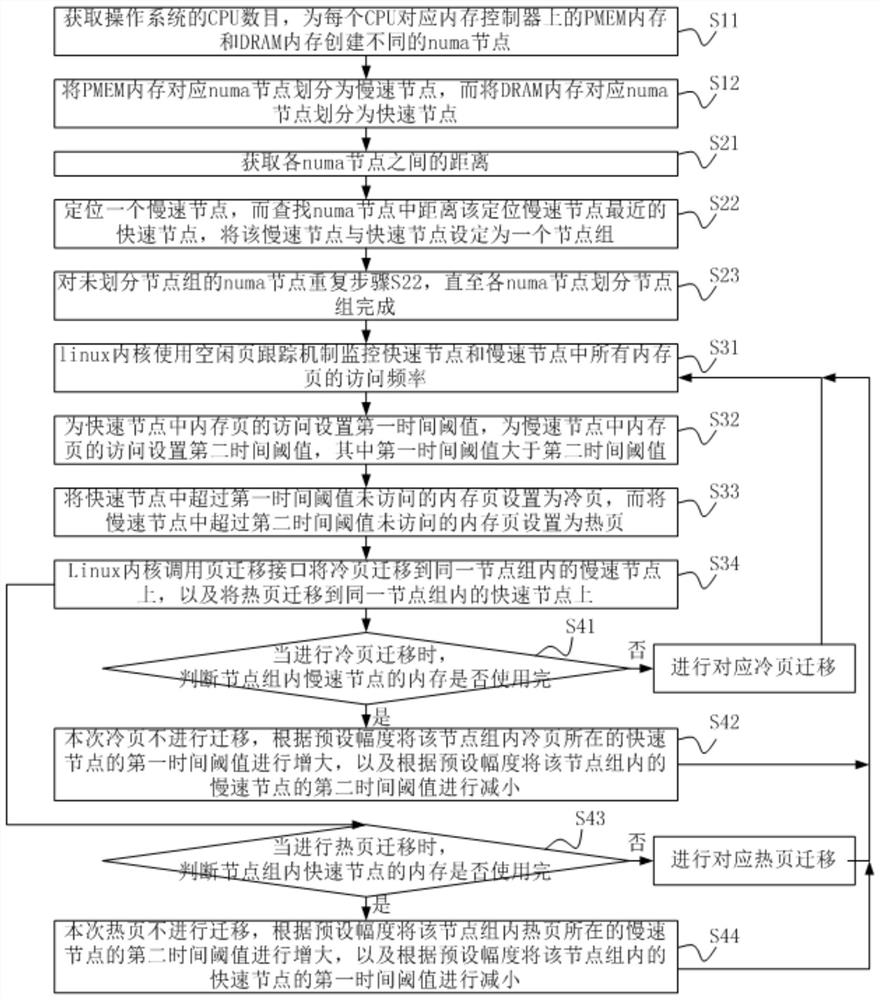

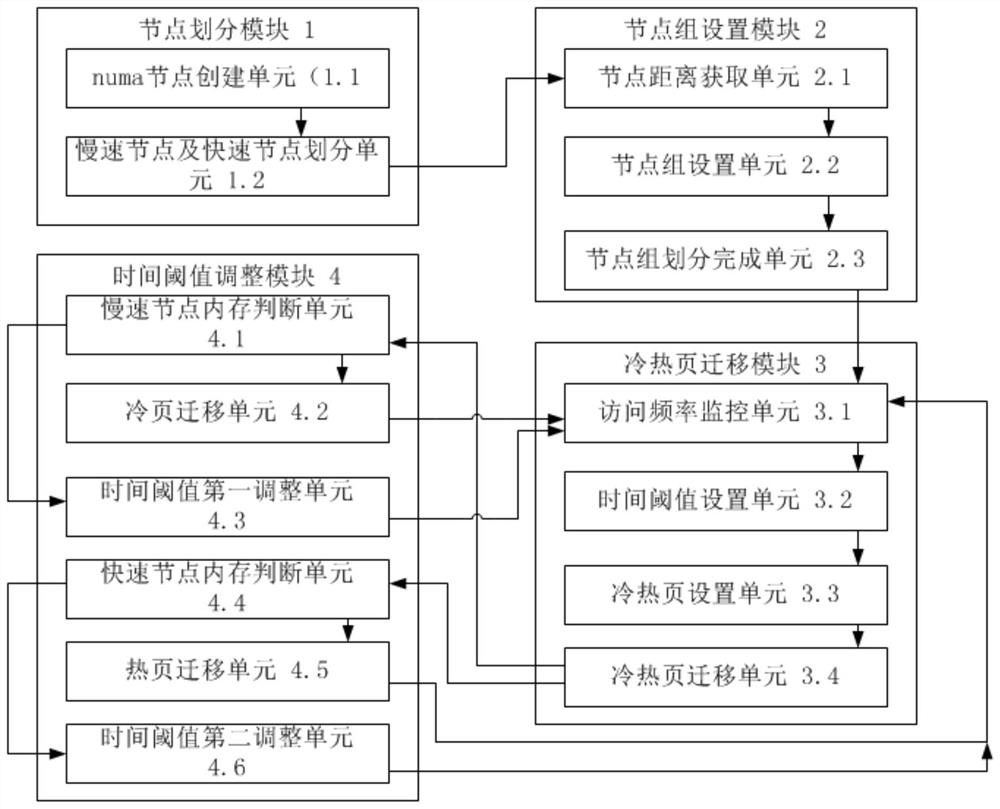

Method and device for realizing cold and hot data migration between DRAM and PMEM

Active | CN114442928A | Realize automatic adjustment | Smooth migration | Input/output to record carriers | Energy efficient computing | Parallel computing | Term memory

The invention provides a method and device for migrating cold and hot data between DRAM and PMEM, and belongs to the technical field of memory management. The method comprises: dividing the PMEM and the DRAM into different nodes, and designating slow nodes and fast nodes; obtaining the distance between nodes, and grouping a slow node and a fast node into a node group according to the distance; identifying cold pages on the fast node and hot pages on the slow node according to a time threshold, migrating each cold page to the slow node in the same node group, and migrating each hot page to the fast node in the same node group; and, during cold and hot page migration, adjusting the cold and hot page identification threshold if the memory of the destination node is used up. The method identifies cold and hot memory pages, migrates hot pages from PMEM to DRAM and cold pages from DRAM to PMEM, and adjusts the identification time so that cold and hot memory migration tends toward a stable state.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD
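
One scan of a single node group, as described in the abstract above, can be sketched as follows. The abstract says the threshold is adjusted when the destination node is full but does not say how, so the halving and doubling rule below is an assumption, as are the names `Page` and `TieringGroup` and the use of page counts as capacity.

```python
from dataclasses import dataclass


@dataclass
class Page:
    page_id: int
    node: str           # "fast" (DRAM) or "slow" (PMEM)
    last_access: float  # timestamp of the most recent access


class TieringGroup:
    """One fast node and one slow node that exchange cold and hot pages."""

    def __init__(self, fast_capacity, slow_capacity, threshold):
        self.capacity = {"fast": fast_capacity, "slow": slow_capacity}
        self.threshold = threshold  # idle time separating hot from cold
        self.pages = []

    def used(self, node):
        return sum(1 for p in self.pages if p.node == node)

    def scan(self, now):
        """Migrate cold pages down and hot pages up; return the moves made."""
        # Classify before moving so each page migrates at most once per scan.
        cold = [p for p in self.pages
                if p.node == "fast" and now - p.last_access > self.threshold]
        hot = [p for p in self.pages
               if p.node == "slow" and now - p.last_access <= self.threshold]
        moved, blocked = [], set()
        for page, dst in [(p, "slow") for p in cold] + [(p, "fast") for p in hot]:
            if self.used(dst) >= self.capacity[dst]:
                blocked.add(dst)  # destination node is used up
                continue
            moved.append((page.page_id, page.node, dst))
            page.node = dst
        # Assumed adjustment: a full fast node tightens the definition of
        # "hot"; a full slow node loosens the definition of "cold".
        if "fast" in blocked:
            self.threshold /= 2
        if "slow" in blocked:
            self.threshold *= 2
        return moved
```

Adjusting the threshold once per scan, rather than once per blocked page, keeps the value from swinging sharply, in line with the stated goal that migration should settle down over time.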

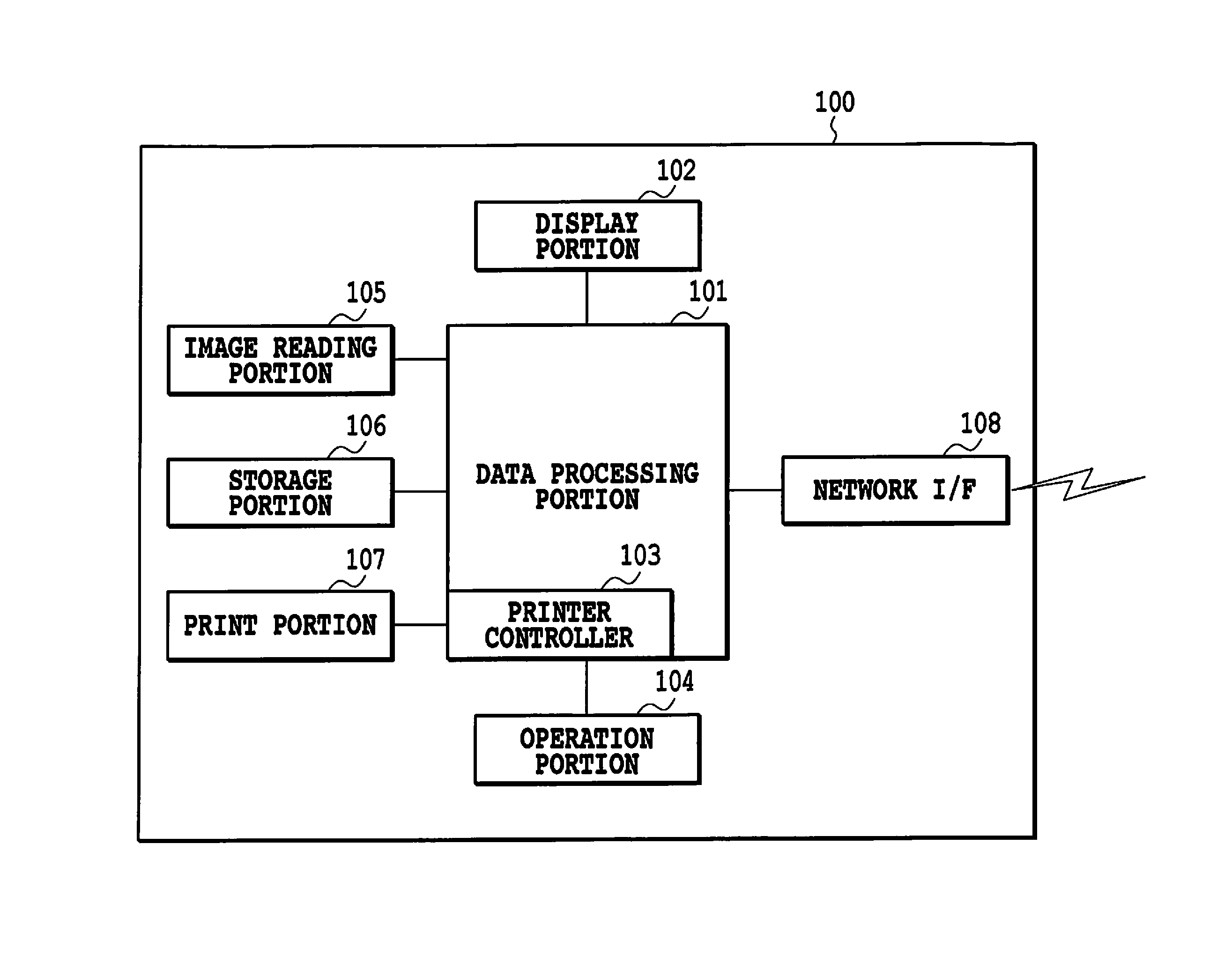

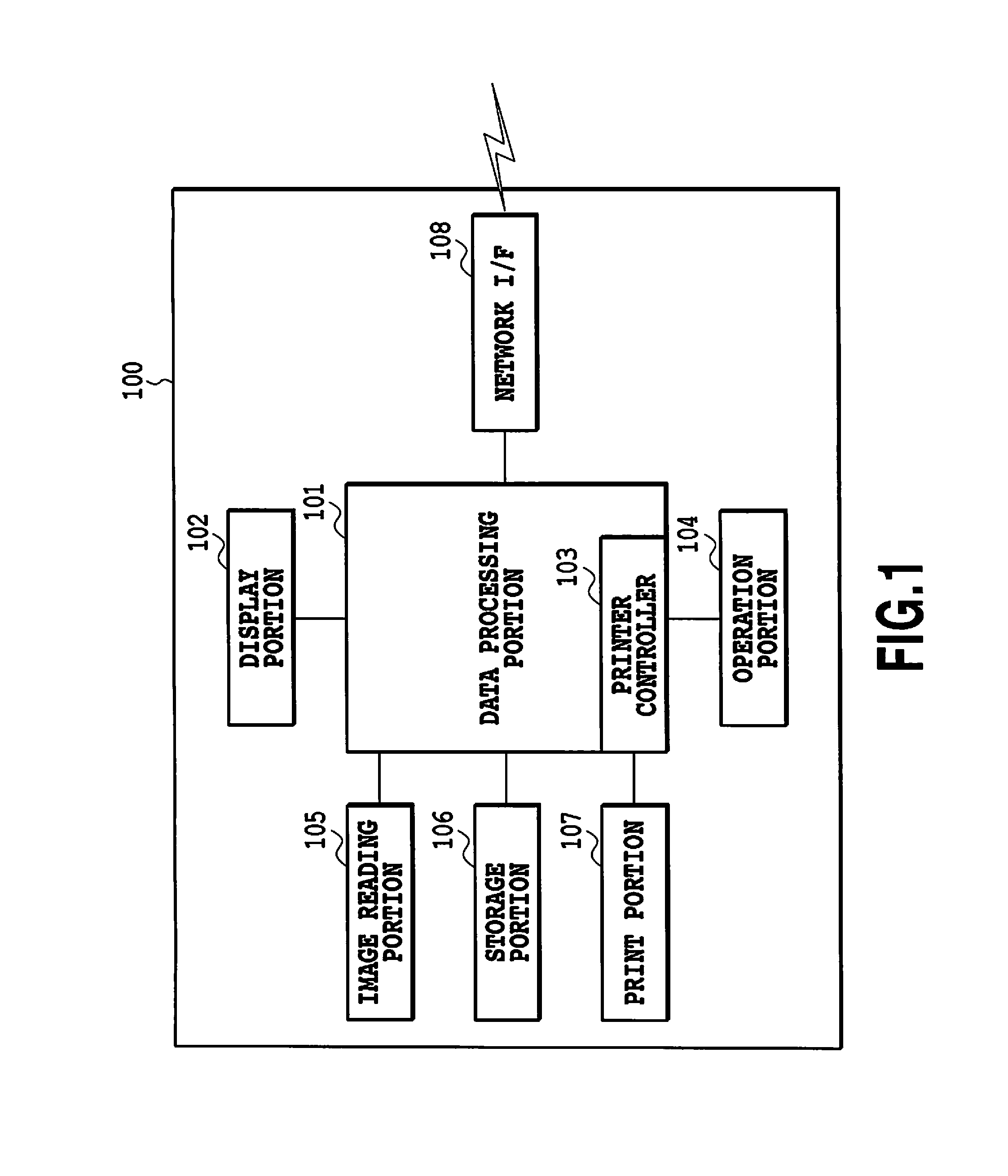

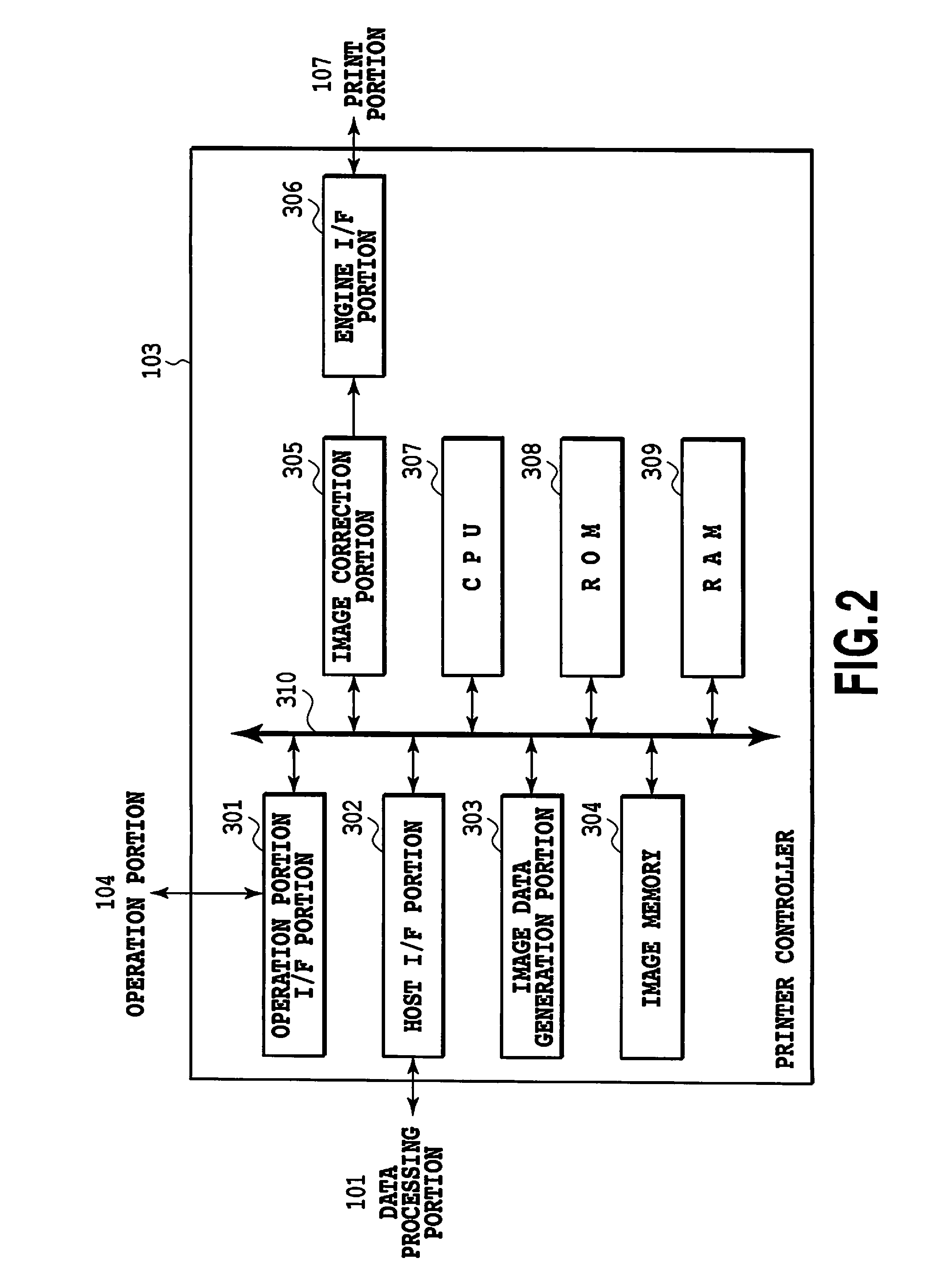

Image forming apparatus and image forming method for correcting registration deviation

Active | US9195160B2 | Improve memory access performance | Reduce in quantity | Digitally marking record carriers | Digital computer details | Image resolution | Image correction

Because memory access is performed on a large number of discontinuous address regions, memory access performance is significantly degraded, and in a system that performs high-speed image formation, registration deviation cannot be corrected. To address this, sub-scanning position information corresponding to the profile of the registration deviation is set, and image correction processing is divided into two stages: high-resolution correction processing using line buffers formed with a memory that allows high-speed random access, and low-resolution correction processing using a memory whose capacity is easily increased.

Owner:CANON KK

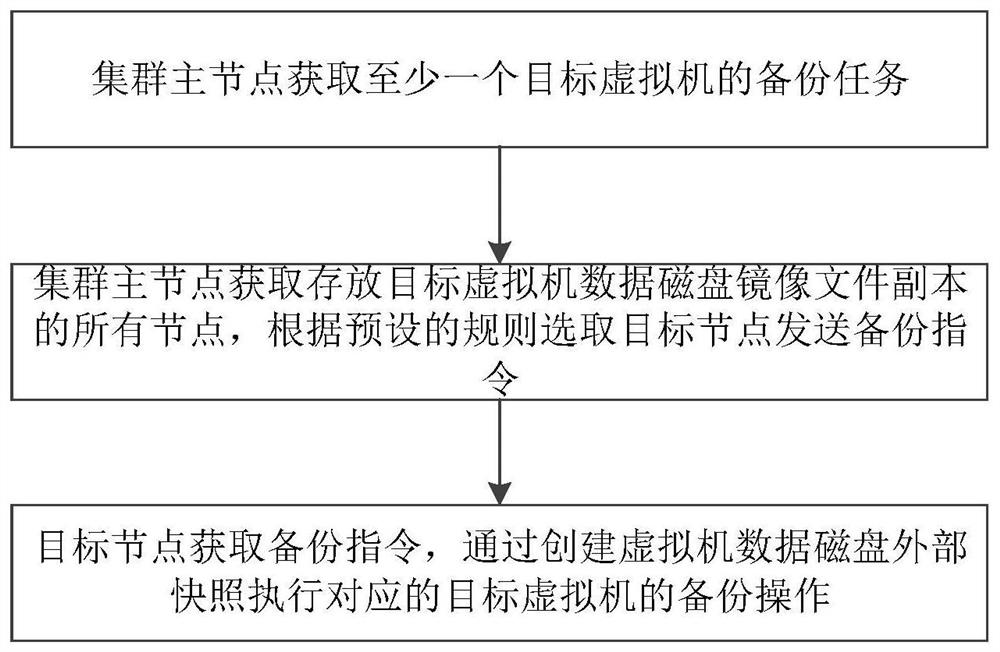

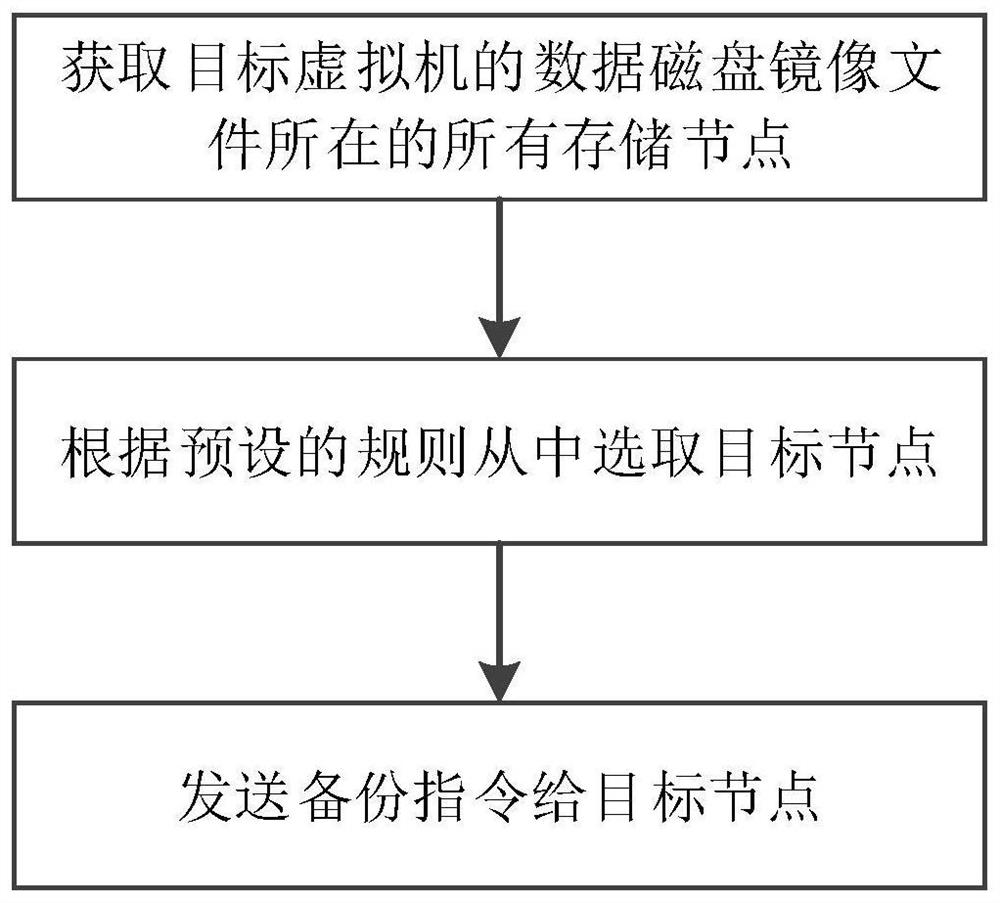

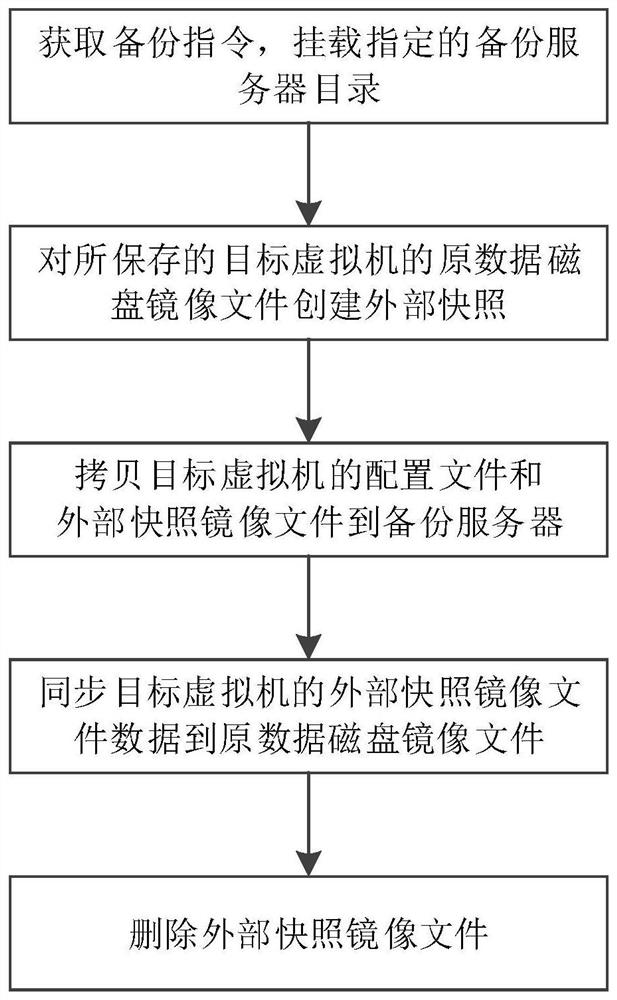

Virtual machine backup method and device in distributed storage environment and storage medium

Pending | CN112685130A | Load balancing | Increase load capacity | Software simulation/interpretation/emulation | Redundant operation error correction | Disk mirroring | Shut down

The invention discloses a virtual machine backup method and device in a distributed storage environment, and a storage medium. The method comprises the steps of obtaining a backup task of at least one target virtual machine by a cluster main node; enabling the cluster main node to obtain all nodes storing a target virtual machine data disk mirror image file copy, selecting target nodes according to a preset rule to send a backup instruction, wherein the target nodes are in one-to-one correspondence with target virtual machines; and enabling the target node to acquire a backup instruction, and executing a backup operation of the corresponding target virtual machine by creating an external snapshot of the virtual machine data disk. The method is used in a distributed storage cloud desktop server cluster environment, and compared with the prior art, the virtual machine backup efficiency can be improved, the network overhead can be reduced, the virtual machine does not need to be shut down during backup, the operation of a service system in the virtual machine is not influenced, and the purpose of online backup of the virtual machine is achieved.

Owner:湖南麒麟信安科技股份有限公司
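
The node-selection step in the abstract above (for each target virtual machine, choose one node among those holding a copy of its data disk image) can be sketched as a greedy assignment. The abstract only mentions "a preset rule", so the least-loaded rule used here is an assumption, and the function name and input shape are hypothetical.

```python
def select_backup_nodes(replica_nodes):
    """Pick one backup node per virtual machine.

    replica_nodes maps a VM name to the list of nodes that store a copy
    of its data disk image. Assumed rule: choose the candidate node with
    the fewest backups assigned so far, breaking ties by node name.
    """
    load = {}
    assignment = {}
    for vm in sorted(replica_nodes):
        candidates = replica_nodes[vm]
        if not candidates:
            raise ValueError(f"no node stores a copy of the disk for {vm}")
        node = min(candidates, key=lambda n: (load.get(n, 0), n))
        assignment[vm] = node
        load[node] = load.get(node, 0) + 1
    return assignment
```

Choosing only among nodes that already hold a copy lets each backup read local data, which matches the reduced network overhead the abstract claims.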


© 2025 PatSnap. All rights reserved.