Three-dimensional point cloud registration method using a CPD (coherent point drift) algorithm based on an affine transformation model

Based on an affine transformation model and three-dimensional point cloud technology, and applicable to computing, image data processing, instruments and similar fields, the method addresses low registration accuracy, long program running time and poor robustness, achieving high registration accuracy and a shorter running time.

Examples

Specific Embodiment 1

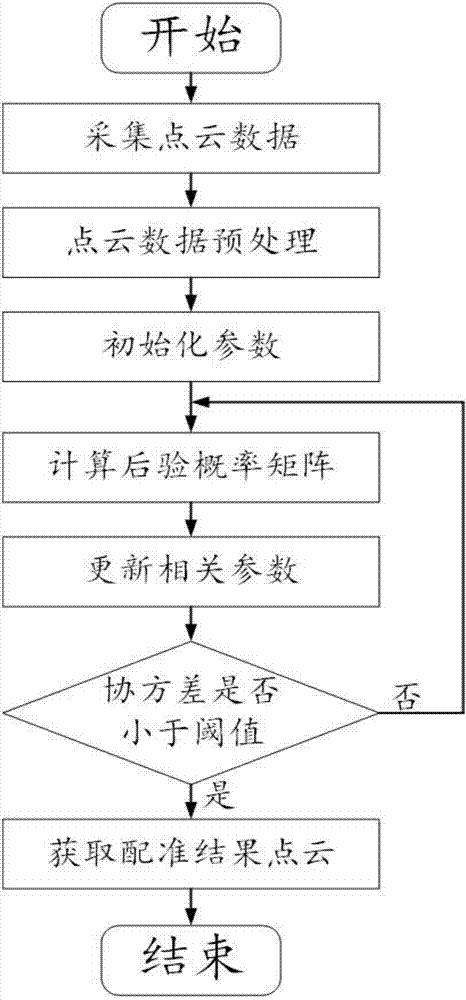

[0021] Specific embodiment 1: This embodiment is described with reference to Figure 1. The three-dimensional point cloud registration method based on the affine-transformation-model CPD algorithm of this embodiment is implemented according to the following steps:

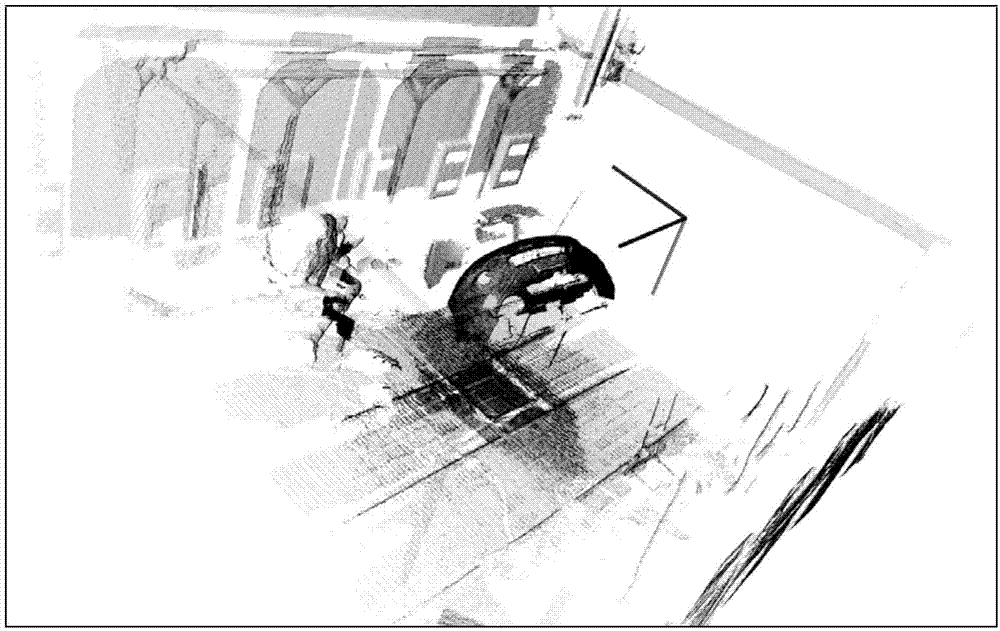

[0022] Step 1. Use the image acquisition device of the painting robot to scan the object to be painted, and collect a set of three-dimensional point cloud data as the point cloud to be registered (Figure 2);

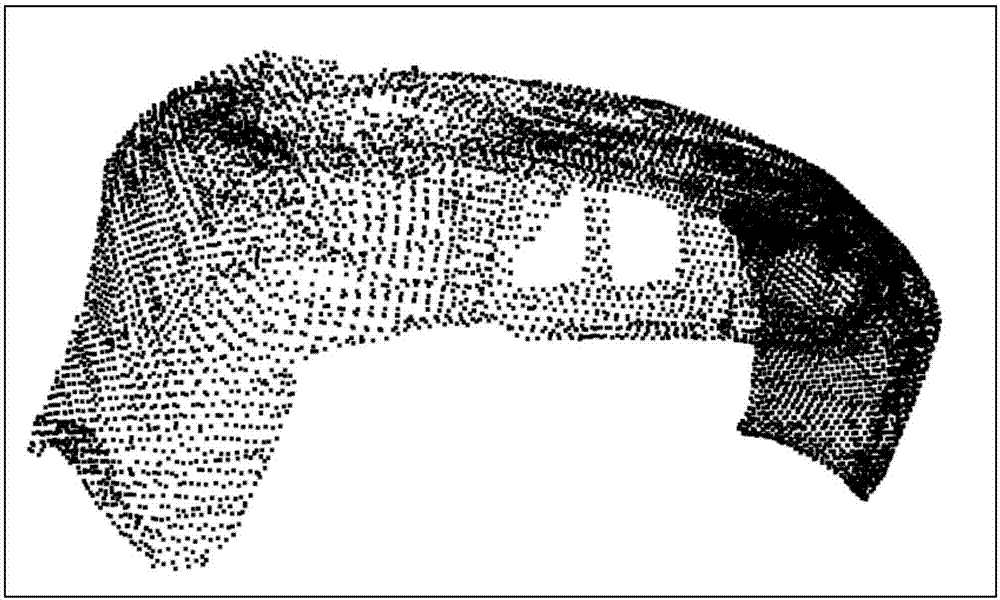

[0023] Step 2. Preprocess the point cloud to be registered collected in Step 1, and use the resulting point cloud data as the reference point set (Figure 3);

[0024] Step 3. Calculate the covariance $\sigma^2$ between the reference point set obtained in Step 2 and the corresponding saved template point set, and initialize the affine transformation matrix B and the translation vector t;

[0025] Step 4. According to the three parameters $\sigma^2$, B and t obtained in Step 3, together with the template point set and refer...
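Step 4 is cut off in this excerpt, so the exact update rules used by the patent are not shown. For reference, the following is a minimal NumPy sketch of the standard affine CPD iteration published by Myronenko and Song, assuming the template point set Y is the moving set (Gaussian mixture centroids) and the reference point set X is the data. The function name `cpd_affine` and the parameters `w` (an assumed uniform outlier weight), `max_iter` and `tol` are illustrative, not taken from the patent.

```python
import numpy as np


def cpd_affine(X, Y, w=0.0, max_iter=100, tol=1e-8):
    """Standard affine CPD (Myronenko and Song), an assumed stand-in for Step 4.

    X: (N, D) reference point set (data); Y: (M, D) template point set (centroids).
    w: assumed uniform-outlier weight in [0, 1). Returns B, t and the final sigma^2
    such that Y @ B.T + t approximately aligns with X.
    """
    N, D = X.shape
    M = Y.shape[0]
    B, t = np.eye(D), np.zeros(D)
    sigma2 = ((X[None, :, :] - Y[:, None, :]) ** 2).sum() / (D * N * M)
    eps = np.finfo(float).eps
    for _ in range(max_iter):
        # E-step: posterior P[m, n] that reference point x_n was generated by centroid m.
        TY = Y @ B.T + t
        d2 = ((X[None, :, :] - TY[:, None, :]) ** 2).sum(axis=2)  # (M, N)
        K = np.exp(-d2 / (2.0 * sigma2))
        c = (2.0 * np.pi * sigma2) ** (D / 2.0) * (w / (1.0 - w)) * (M / N)
        P = K / np.maximum(K.sum(axis=0, keepdims=True) + c, eps)
        # M-step: closed-form weighted least squares for B, t and sigma^2.
        P1, Pt1 = P.sum(axis=1), P.sum(axis=0)
        Np = P1.sum()
        mu_x = X.T @ Pt1 / Np
        mu_y = Y.T @ P1 / Np
        Xh, Yh = X - mu_x, Y - mu_y
        A = Xh.T @ P.T @ Yh                               # centered X^T P^T Y, (D, D)
        B = A @ np.linalg.inv(Yh.T @ (P1[:, None] * Yh))  # times (Y^T d(P1) Y)^-1
        t = mu_x - B @ mu_y
        new_sigma2 = (Pt1 @ (Xh ** 2).sum(axis=1) - np.trace(A @ B.T)) / (Np * D)
        new_sigma2 = max(new_sigma2, 1e-10)  # keep sigma^2 strictly positive
        converged = abs(new_sigma2 - sigma2) < tol
        sigma2 = new_sigma2
        if converged:
            break
    return B, t, sigma2
```

Both B and t have closed-form updates, so each iteration is dominated by the M×N posterior matrix P; this is one reason reducing the point count in Step 2 shortens the running time.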

Specific Embodiment 2

[0028] Specific embodiment 2: This embodiment differs from specific embodiment 1 in that, in Step 2, the point cloud to be registered obtained in Step 1 is preprocessed and the resulting point cloud data is used as the reference point set; the specific process is as follows:

[0029] Step 21: Delete the background point cloud data that does not need to be registered from the point cloud to be registered collected in Step 1, obtaining the background-removed point cloud;
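The patent does not say how background points are identified. One simple possibility, assumed here purely for illustration, is a pass-through crop that keeps only the points inside a known axis-aligned workspace box around the object; `crop_to_box` and its box limits are hypothetical.

```python
import numpy as np


def crop_to_box(points: np.ndarray, lower, upper) -> np.ndarray:
    """Keep only points inside the axis-aligned box [lower, upper], bounds inclusive.

    Hypothetical background-removal step: the box limits stand in for a known
    workspace around the object to be painted; the patent does not specify them.
    points: (K, 3) array of XYZ coordinates.
    """
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    inside = np.all((points >= lower) & (points <= upper), axis=1)
    return points[inside]


scan = np.array([[0.2, 0.3, 0.1], [0.9, 0.5, 0.4], [2.0, 0.0, 0.0]])
foreground = crop_to_box(scan, lower=[0, 0, 0], upper=[1, 1, 1])
print(foreground.shape)  # (2, 3): the point at x = 2.0 is treated as background
```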

[0030] Step 22: Use a statistical filter and a radius filter to delete outliers from the background-removed point cloud obtained in Step 21, obtaining the filtered point cloud;
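Step 22 names a statistical filter and a radius filter but gives no parameters. The sketch below is a brute-force NumPy version of the two commonly used variants (the same ideas as PCL's `StatisticalOutlierRemoval` and `RadiusOutlierRemoval`); the function names and the defaults for `k`, `std_ratio`, `radius` and `min_neighbors` are assumptions. The dense distance matrix is fine for small clouds; a k-d tree would normally be used for real scans.

```python
import numpy as np


def _pairwise_distances(points: np.ndarray) -> np.ndarray:
    """Dense (K, K) Euclidean distance matrix; O(K^2) memory, fine for small clouds."""
    diff = points[:, None, :] - points[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def statistical_outlier_removal(points, k=8, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbours is larger than
    the cloud-wide mean of that quantity plus std_ratio standard deviations."""
    d = _pairwise_distances(points)
    # After sorting each row, column 0 is the zero distance of a point to itself.
    knn = np.sort(d, axis=1)[:, 1:k + 1]
    mean_knn = knn.mean(axis=1)
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return points[mean_knn <= threshold]


def radius_outlier_removal(points, radius=0.05, min_neighbors=3):
    """Drop points that have fewer than min_neighbors other points within radius."""
    d = _pairwise_distances(points)
    counts = (d <= radius).sum(axis=1) - 1  # subtract the point itself
    return points[counts >= min_neighbors]
```

Applying both filters in sequence, as Step 22 describes, catches isolated points that one criterion alone may miss.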

[0031] Step 23: Down-sample the filtered point cloud obtained in Step 22 to obtain the down-sampled point cloud; sparsifying the point cloud in this way reduces the amount of point cloud data;
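Step 23 does not specify the down-sampling method. A voxel-grid filter, which replaces all points falling in one cubic voxel by their centroid, is a common choice and is assumed in this sketch; `voxel_downsample` and `voxel_size` are hypothetical names.

```python
import numpy as np


def voxel_downsample(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Voxel-grid down-sampling (assumed method): every occupied cubic voxel of edge
    voxel_size is replaced by the centroid of the points that fall inside it."""
    voxel_idx = np.floor(points / voxel_size).astype(np.int64)
    # Give each occupied voxel a compact label 0..V-1 (voxels sorted lexicographically).
    _, labels = np.unique(voxel_idx, axis=0, return_inverse=True)
    labels = labels.reshape(-1)  # inverse shape differs between some NumPy versions
    n_voxels = labels.max() + 1
    sums = np.zeros((n_voxels, points.shape[1]))
    np.add.at(sums, labels, points)  # accumulate coordinates per voxel
    counts = np.bincount(labels, minlength=n_voxels)
    return sums / counts[:, None]


pts = np.array([[0.1, 0.1, 0.1], [0.3, 0.3, 0.3], [1.5, 0.2, 0.2]])
down = voxel_downsample(pts, voxel_size=1.0)
# two occupied voxels: centroids (0.2, 0.2, 0.2) and (1.5, 0.2, 0.2)
```

The voxel size sets the trade-off between running time and how much geometric detail survives for registration.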

[0032] Step 24: Save the down-sampled point cloud obtain...

Specific Embodiment 3

[0034] Specific embodiment 3: This embodiment differs from specific embodiment 1 or 2 in that, in Step 3, the covariance $\sigma^2$ between the reference point set obtained in Step 2 and the corresponding saved template point set is calculated, and the affine transformation matrix B and the translation vector t are initialized; the specific process is as follows:

[0035] Step 31. Calculate the covariance of the reference point set $X_{N\times D}=(x_1,\dots,x_N)^T$ and the template point set $Y_{M\times D}=(y_1,\dots,y_M)^T$:

[0036]

[0037] Here, $N$ and $M$ are the numbers of points in the reference point set and the template point set, respectively, and both are positive integers; $D$ is the dimension of the point sets; $x_n$ is the $D$-dimensional vector of the $n$-th point in the reference point set, and $y_m$ is the $D$-dimensional vector of the $m$-th point in the template point set;
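The formula of paragraph [0036] is not reproduced in this excerpt. The symbols defined for Step 31 match the standard CPD initialization of Myronenko and Song, $\sigma^2 = \frac{1}{DNM}\sum_{n=1}^{N}\sum_{m=1}^{M}\lVert x_n - y_m\rVert^2$, which is assumed in the sketch below; the function name `initial_sigma2` is hypothetical.

```python
import numpy as np


def initial_sigma2(X: np.ndarray, Y: np.ndarray) -> float:
    """Standard CPD start value (assumed): sum over all n, m of ||x_n - y_m||^2 / (D N M).

    X: (N, D) reference point set, Y: (M, D) template point set.
    """
    N, D = X.shape
    M = Y.shape[0]
    diff = X[:, None, :] - Y[None, :, :]  # (N, M, D) all pairwise differences
    return float((diff ** 2).sum() / (D * N * M))


X = np.array([[0.0, 0.0, 0.0]])
Y = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 0.0]])
s2 = initial_sigma2(X, Y)  # (1 + 4) / (3 * 1 * 2) = 5/6
```

A large starting $\sigma^2$ makes every template point attract every reference point roughly equally, so the first iterations align the clouds coarsely before the fit sharpens.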

[0038] Step 32: Initialize the affine transformation matrix B and the translation vector t; the affine ...
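Step 32 is truncated here. In the standard affine CPD algorithm, B starts as the $D \times D$ identity and t as the zero vector, so the template is initially left unchanged; the sketch below assumes that choice and shows how the transformation $y_m \mapsto B y_m + t$ is applied to all template rows at once. `init_affine` and `apply_affine` are hypothetical helpers.

```python
import numpy as np


def init_affine(D: int = 3):
    """Assumed standard CPD initialization: B = identity (D x D), t = zero vector."""
    return np.eye(D), np.zeros(D)


def apply_affine(Y: np.ndarray, B: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Map every template point y_m (a row of Y) to B @ y_m + t."""
    return Y @ B.T + t


B, t = init_affine(3)
Y = np.array([[1.0, 2.0, 3.0], [0.0, -1.0, 0.5]])
assert np.allclose(apply_affine(Y, B, t), Y)  # identity start leaves the template unchanged
```

Because points are stored as rows, the column-vector form $B y_m + t$ becomes `Y @ B.T + t`, with t broadcast across all rows.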
