Adaptive clothes animation modeling method based on visual perception

A visual-perception-based adaptive technique, applied in the fields of virtual reality and computer graphics. It addresses the problem that existing methods consider only the objective physical realism of clothing motion and therefore struggle to ensure the visual fidelity of clothing animation. The method aims to achieve realistic visual effects while improving simulation efficiency.

Embodiment Construction

[0021] The present invention will be described in further detail below in conjunction with the accompanying drawings.

[0022] A method for modeling adaptive clothing animation based on visual perception, comprising the following steps:

[0023] 1. Construct a clothing visual saliency model that conforms to the characteristics of the human eye

[0024] 1.1 Eye movement data collection and preprocessing

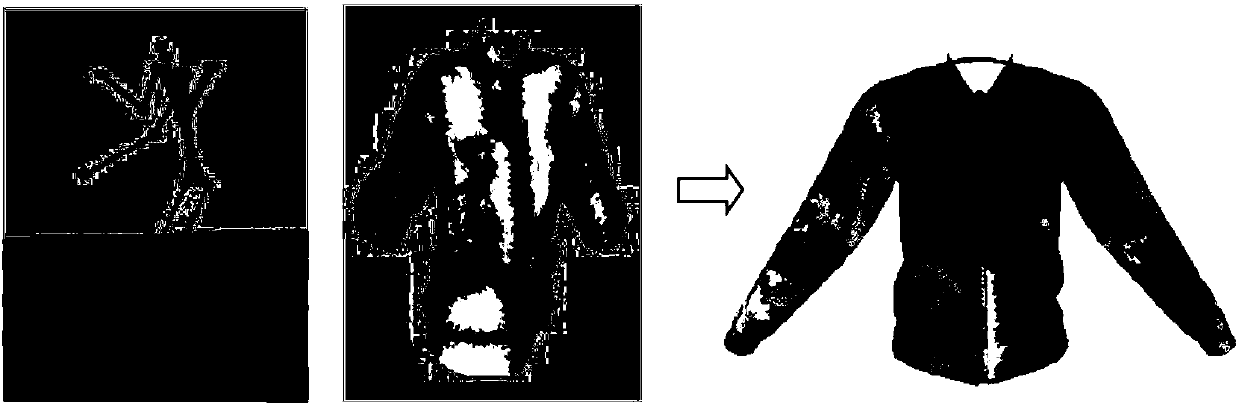

[0025] The present invention uses a telemetry eye tracker to collect real eye movement data. Participants watch clothing animation videos in front of a screen, and a focus map and a heat map of the video are obtained from the recorded gaze data. The focus maps of multiple participants are superimposed and convolved with a Gaussian kernel to obtain a continuous, smooth ground-truth saliency map (as shown in Figure 1). From left to right, the figure shows the original image, the focus map, the heat map, and the ground-truth saliency map.
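The superimpose-then-smooth step described above can be illustrated with a minimal sketch. It is not the patent's implementation: the function names, the pixel-coordinate input format, the zero padding at the image borders, the `sigma` value, and the final normalization to a peak of 1 are all assumptions made for illustration. The sketch uses only the Python standard library; a real pipeline would more likely rely on an array library.

```python
import math


def gaussian_kernel(sigma, radius=None):
    """Return a normalized 1-D Gaussian kernel (hypothetical helper)."""
    if radius is None:
        radius = max(1, int(3 * sigma))  # cover roughly +/- 3 sigma
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve_rows(grid, kernel):
    """Convolve each row with the kernel; pixels outside the image count as 0."""
    radius = len(kernel) // 2
    width = len(grid[0])
    out = []
    for row in grid:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, w in enumerate(kernel):
                xx = x + k - radius
                if 0 <= xx < width:
                    acc += w * row[xx]
            new_row.append(acc)
        out.append(new_row)
    return out


def transpose(grid):
    """Swap rows and columns of a list-of-lists grid."""
    return [list(col) for col in zip(*grid)]


def saliency_from_fixations(fixations_per_viewer, height, width, sigma=2.0):
    """Superimpose every viewer's fixation points, then blur with a Gaussian.

    fixations_per_viewer: one list of (x, y) pixel coordinates per viewer
    (an assumed input format). Returns a height x width grid scaled so that
    the maximum value is 1.0, or all zeros if there are no fixations.
    """
    # Step 1: superimpose the focus maps of all viewers into one count map.
    counts = [[0.0] * width for _ in range(height)]
    for fixations in fixations_per_viewer:
        for x, y in fixations:
            if 0 <= x < width and 0 <= y < height:
                counts[y][x] += 1.0

    # Step 2: separable Gaussian convolution (rows first, then columns).
    kernel = gaussian_kernel(sigma)
    blurred = convolve_rows(counts, kernel)
    blurred = transpose(convolve_rows(transpose(blurred), kernel))

    # Step 3 (assumption): normalize so the most salient pixel equals 1.0.
    peak = max(max(row) for row in blurred)
    if peak > 0:
        blurred = [[v / peak for v in row] for row in blurred]
    return blurred


if __name__ == "__main__":
    # Two hypothetical viewers looking at nearby points in a 9 x 11 frame.
    viewers = [[(5, 4), (6, 4)], [(5, 5)]]
    saliency = saliency_from_fixations(viewers, height=9, width=11, sigma=1.5)
    for row in saliency:
        print(" ".join(f"{v:.2f}" for v in row))
```

Larger `sigma` values spread each fixation over a wider neighbourhood; the appropriate width depends on viewing distance and screen resolution, which the text does not specify.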

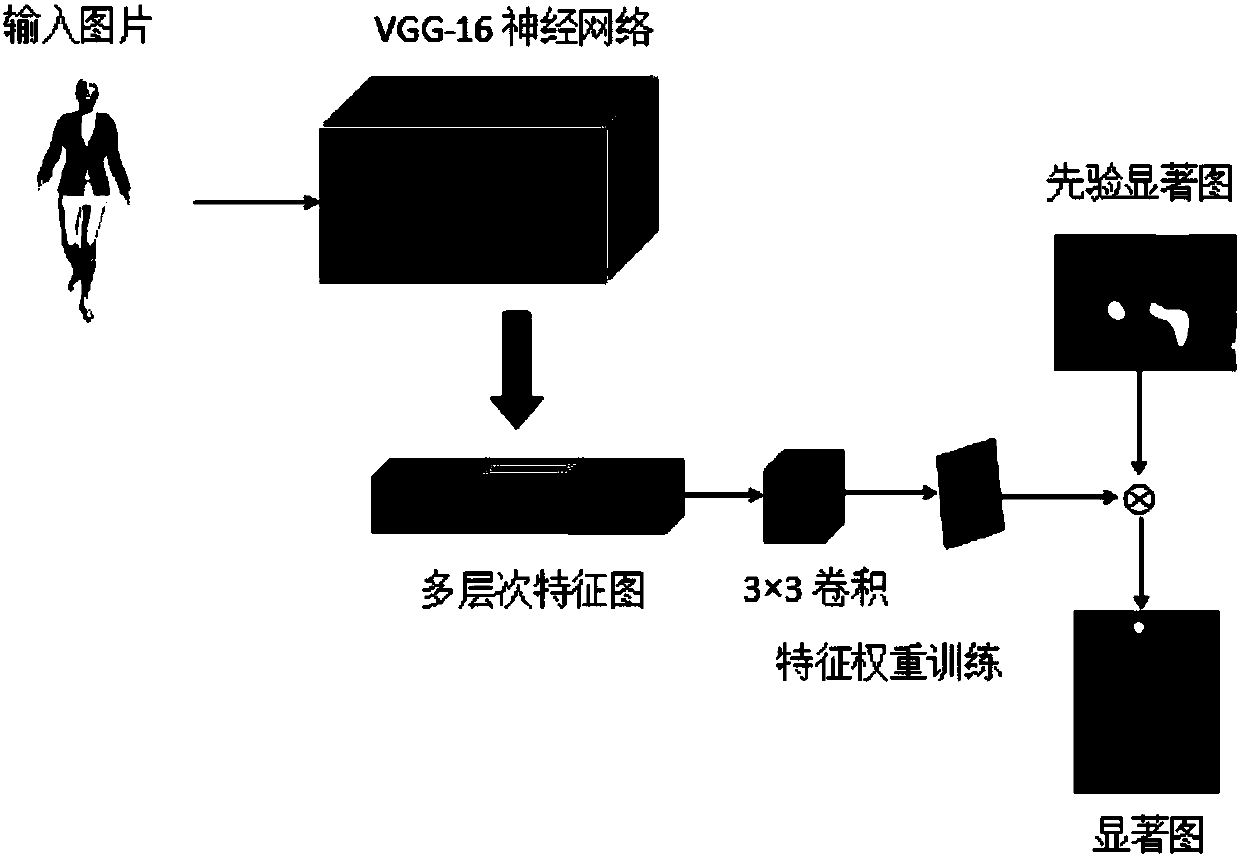

[0026] 1.2 Constructing a visual saliency model using deep learning methods

[0027] In the pr...
