A Sparse Representation Target Tracking Method Based on Multi-Feature Fusion

A multi-feature fusion and sparse representation technique applied in the field of image processing, intended to reduce computational complexity, improve reliability, and eliminate interference.

Embodiment Construction

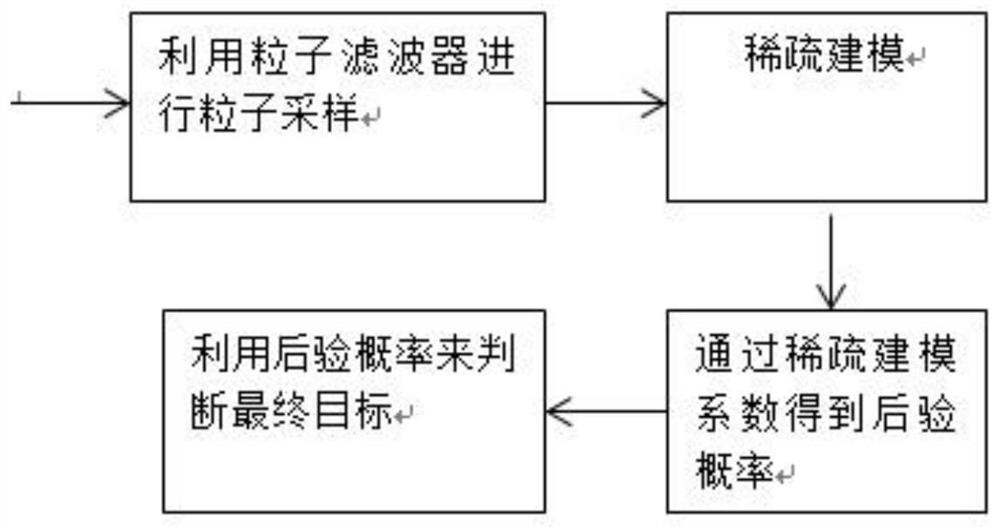

[0049] The present invention is now described in detail with reference to the accompanying drawings. The structure and content of the proposed sparse representation target tracking method based on multi-feature fusion are shown in Figure 1.

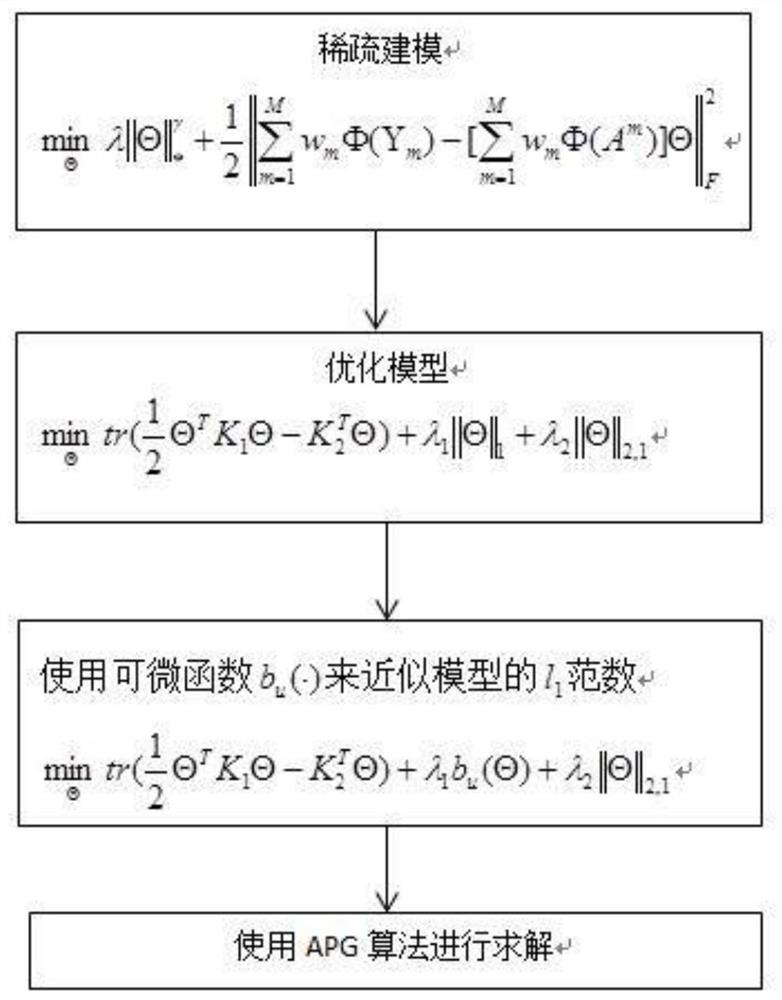

[0050] The present invention operates on a video sequence. Its processing flow is as follows: train the kernel weights using the first frame of the video; pass the current frame through a particle filter to obtain the particle observations; use a model based on multi-feature sparse representation to compute a sparse representation of the particle observation matrix; and use the sparse representation coefficients to predict the target position in the next frame. The detailed implementation steps of the entire tracking procedure are given below, with the sparse modeling flow chart shown in Figure 2:

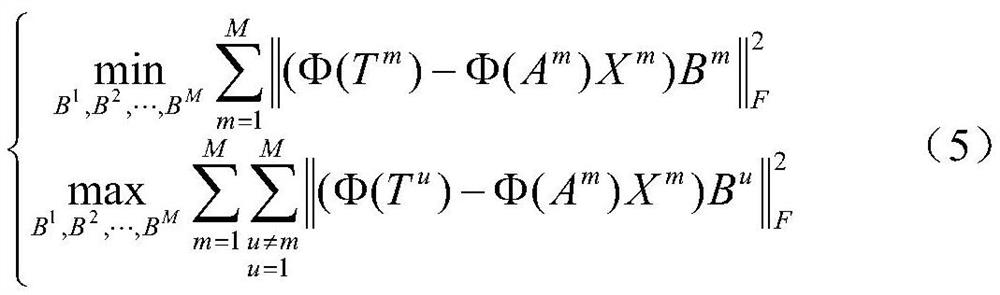
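The processing flow described above can be illustrated with a minimal Python sketch. It is not the patented model: the random-walk motion model, the independent per-particle L1 (lasso) solve via ISTA, the weighted sum of reconstruction errors, and all function names (`ista_lasso`, `propagate`, `score_particles`, `estimate_state`) are assumptions chosen for illustration. The fusion weights are taken as given here, since the weight training model of Step 1 is not reproduced.

```python
import numpy as np

def ista_lasso(D, y, lam=0.1, n_iter=200):
    """Approximately solve min_c 0.5*||y - D c||^2 + lam*||c||_1 with ISTA."""
    L = np.linalg.norm(D, 2) ** 2  # Lipschitz constant of the smooth term's gradient
    c = np.zeros(D.shape[1])
    for _ in range(n_iter):
        z = c - D.T @ (D @ c - y) / L                          # gradient step
        c = np.sign(z) * np.maximum(np.abs(z) - lam / L, 0.0)  # soft threshold
    return c

def propagate(particles, sigma, rng):
    """Assumed motion model: Gaussian random walk on each particle state."""
    return particles + rng.normal(0.0, sigma, size=particles.shape)

def score_particles(observations, dictionaries, weights, lam=0.1):
    """Fuse per-feature sparse reconstruction errors into one error per particle.

    observations[k]: (d_k, n_particles) observation matrix for feature k
    dictionaries[k]: (d_k, n_templates) template matrix for feature k
    weights[k]:      fusion weight for feature k (assumed already trained)
    """
    n_particles = observations[0].shape[1]
    errors = np.zeros(n_particles)
    for Y, D, w in zip(observations, dictionaries, weights):
        for i in range(n_particles):
            c = ista_lasso(D, Y[:, i], lam)
            errors[i] += w * np.sum((Y[:, i] - D @ c) ** 2)
    return errors

def estimate_state(particles, errors):
    """Pick the particle whose observation the templates reconstruct best."""
    return particles[np.argmin(errors)]
```

In this sketch a particle whose observation closely matches a target template yields a small fused error and is selected as the state estimate. A joint sparse solve over the whole observation matrix, as the description suggests, would replace the inner per-particle loop.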

[0051] Step 1: Train the kernel weights. The weight training model is:

[0052]

[00...
