Hierarchical structure-based neural-network machine translation model

A technology combining a hierarchical structure with neural networks, applied in the field of neural machine translation models, that addresses problems such as the heavy computational cost of the attention mechanism, alignment divergence, and gradient explosion.


Specific Embodiment

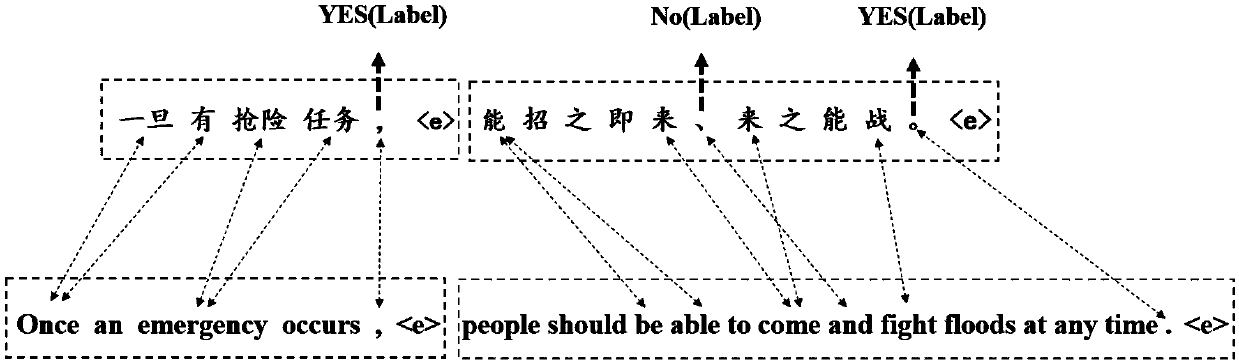

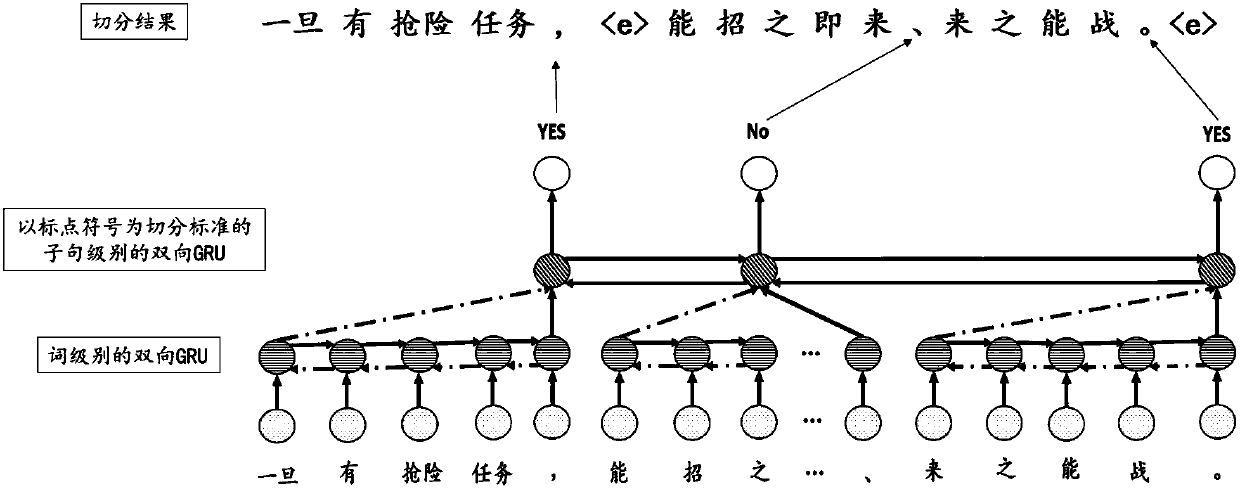

[0036] In the first step, word-level alignment information is used as the constraint for segmenting each sentence into short clauses, and a classifier is trained on the resulting clause-segmented training data;
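A minimal sketch of how alignment-constrained clause training data might be produced and used. Several assumptions are not stated in the text: candidate boundaries lie after tokens in a hypothetical `PUNCT` set; a boundary counts as a valid clause split only if every target word aligned to the left part precedes every target word aligned to the right part (no crossing alignment links); and the classifier is a toy count-based model (`BoundaryClassifier`) standing in for whatever classifier the patent actually uses.

```python
from collections import defaultdict

# Hypothetical set of clause-ending punctuation (an assumption, not from the source).
PUNCT = {",", ";", ":"}


def alignment_consistent_splits(src_len, links):
    """Boundaries b (split between source words b-1 and b) such that all target
    positions aligned to src[:b] precede all target positions aligned to src[b:]."""
    splits = []
    for b in range(1, src_len):
        left = [t for s, t in links if s < b]
        right = [t for s, t in links if s >= b]
        if left and right and max(left) < min(right):
            splits.append(b)
    return splits


def boundary_examples(src_tokens, links):
    """Label every boundary after a punctuation token: 1 = clause split, 0 = no split."""
    allowed = set(alignment_consistent_splits(len(src_tokens), links))
    examples = []
    for b in range(1, len(src_tokens)):
        prev, nxt = src_tokens[b - 1], src_tokens[b]
        if prev in PUNCT:
            examples.append(((prev, nxt), int(b in allowed)))
    return examples


class BoundaryClassifier:
    """Toy count-based classifier over (punctuation, next word) features."""

    def __init__(self):
        self.pair_counts = defaultdict(lambda: [0, 0])   # [no-split, split]
        self.punct_counts = defaultdict(lambda: [0, 0])

    def fit(self, examples):
        for (punct, nxt), label in examples:
            self.pair_counts[(punct, nxt)][label] += 1
            self.punct_counts[punct][label] += 1
        return self

    def predict(self, punct, nxt):
        # Back off from the (punct, next word) pair to the punctuation alone;
        # default to "no split" for punctuation never seen in training.
        counts = self.pair_counts.get((punct, nxt)) or self.punct_counts.get(punct) or [1, 0]
        return int(counts[1] > counts[0])
```

For a monotonically aligned pair such as `["he", "came", ",", "she", "left"]` with links `(i, i)`, the comma boundary is labeled as a split; if the alignment links cross that comma, it is labeled as a non-split, so the classifier learns to split only where translation can proceed clause by clause.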

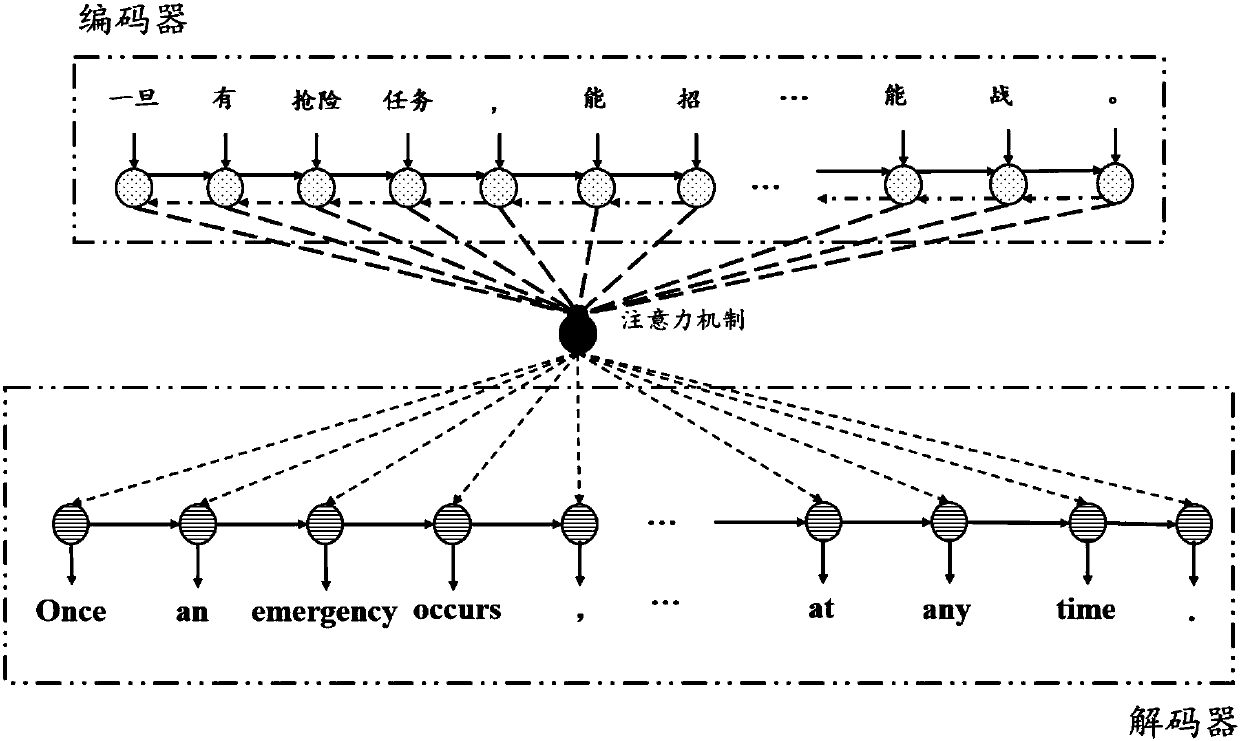

[0037] In the second step, a lower-level recurrent neural network encodes the word-level information within each clause to obtain a semantic representation of that clause;
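The lower-level encoder can be sketched as a plain Elman RNN run separately on each clause: the hidden state is reset at the start of every clause and the last hidden state is taken as the clause representation. The cell type, the hypothetical `init_rnn` initialization, the embedding table `embed`, and all dimensions are assumptions for illustration; the text says only that a recurrent network is used.

```python
import math
import random


def init_rnn(input_dim, hidden_dim, seed=0):
    """Hypothetical small random initialization for an Elman RNN."""
    rng = random.Random(seed)
    W = [[rng.uniform(-0.1, 0.1) for _ in range(input_dim)] for _ in range(hidden_dim)]
    U = [[rng.uniform(-0.1, 0.1) for _ in range(hidden_dim)] for _ in range(hidden_dim)]
    b = [0.0] * hidden_dim
    return W, U, b


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rnn_states(inputs, params):
    """h_t = tanh(W x_t + U h_{t-1} + b), starting from h_0 = 0; returns every h_t."""
    W, U, b = params
    h = [0.0] * len(b)
    states = []
    for x in inputs:
        h = [math.tanh(wx + uh + bi) for wx, uh, bi in zip(matvec(W, x), matvec(U, h), b)]
        states.append(h)
    return states


def encode_clauses(clauses, embed, params):
    """Encode each clause independently (state reset per clause).
    Returns the per-word states of each clause and one vector per clause."""
    word_states, clause_vecs = [], []
    for clause in clauses:
        states = rnn_states([embed[w] for w in clause], params)
        word_states.append(states)
        clause_vecs.append(states[-1] if states else [0.0] * len(params[2]))
    return word_states, clause_vecs
```

Because each clause is encoded from a fresh state, a clause's vector does not depend on the clauses around it; combining information across clauses is left to the higher level. The per-word states are kept for the word-level attention in the decoder.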

[0038] In the third step, a higher-level recurrent neural network encodes the information across clauses to obtain an overall semantic representation of the sentence;
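Assuming a simple recurrent cell at the higher level as well (the text does not name the cell type), the sentence-level encoding is a recurrence over clause vectors rather than word vectors. Here $c_k$ is the semantic representation of clause $k$ produced by the lower-level network, $K$ is the number of clauses in the sentence, $W_c$, $U_c$, $b_c$ are hypothetical parameter names, and taking the last state $S$ as the sentence representation is an assumption:

$$
\begin{aligned}
s_k &= \tanh\left(W_c\, c_k + U_c\, s_{k-1} + b_c\right), \qquad k = 1, \dots, K, \qquad s_0 = \mathbf{0},\\
S &= s_K .
\end{aligned}
$$

The states $s_1, \dots, s_K$ are also the clause-level states attended to by the higher-level attention during decoding.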

[0039] In the fourth step, during decoding, the lower-level attention mechanism attends to the alignment information of the words within each clause, and the higher-level attention mechanism attends to the alignment information of each clause; the translation probability computed in this way is used as the objective function for training the whole neural machine translation model.
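A sketch of the two-level attention at a single decoder step, together with the negative log-likelihood objective. Dot-product scoring, the function names, and the way the two levels are combined (clause weights `beta` applied to per-clause word-attention contexts) are assumptions; the text states only that the lower level attends to words within clauses and the higher level attends to clauses.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def weighted_sum(weights, vectors):
    return [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(len(vectors[0]))]


def hierarchical_context(dec_state, word_states, clause_states):
    """Two-level attention; assumes every clause has at least one word.
    word_states[k][j]: lower-level state of word j in clause k.
    clause_states[k]: higher-level state of clause k.
    Returns (context vector, clause weights beta, word weights per clause)."""
    clause_contexts, word_weights = [], []
    for states in word_states:
        # Lower level: attend only to the words inside this clause.
        alpha = softmax([dot(dec_state, h) for h in states])
        word_weights.append(alpha)
        clause_contexts.append(weighted_sum(alpha, states))
    # Higher level: attend over clauses, then mix the per-clause contexts.
    beta = softmax([dot(dec_state, s) for s in clause_states])
    return weighted_sum(beta, clause_contexts), beta, word_weights


def nll(step_probs, targets):
    """Training objective: -sum_t log p(y_t | y_<t, x), where step_probs[t] is the
    predicted distribution over the target vocabulary at step t."""
    return -sum(math.log(p[y]) for p, y in zip(step_probs, targets))
```

With a zero decoder state every score is equal, so each softmax is uniform; for two clauses with word states `[[1, 0], [0, 1]]` and `[[1, 1]]`, the per-clause contexts are `[0.5, 0.5]` and `[1, 1]`, and the combined context is their average `[0.75, 0.75]`.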

[0040] T...
