Error analysis method based on vision stitching measurement

An error analysis technology applied in the field of error analysis based on visual stitching measurement, which addresses problems such as quantitative influence relationships that have not been explained.


Examples

Embodiment 1

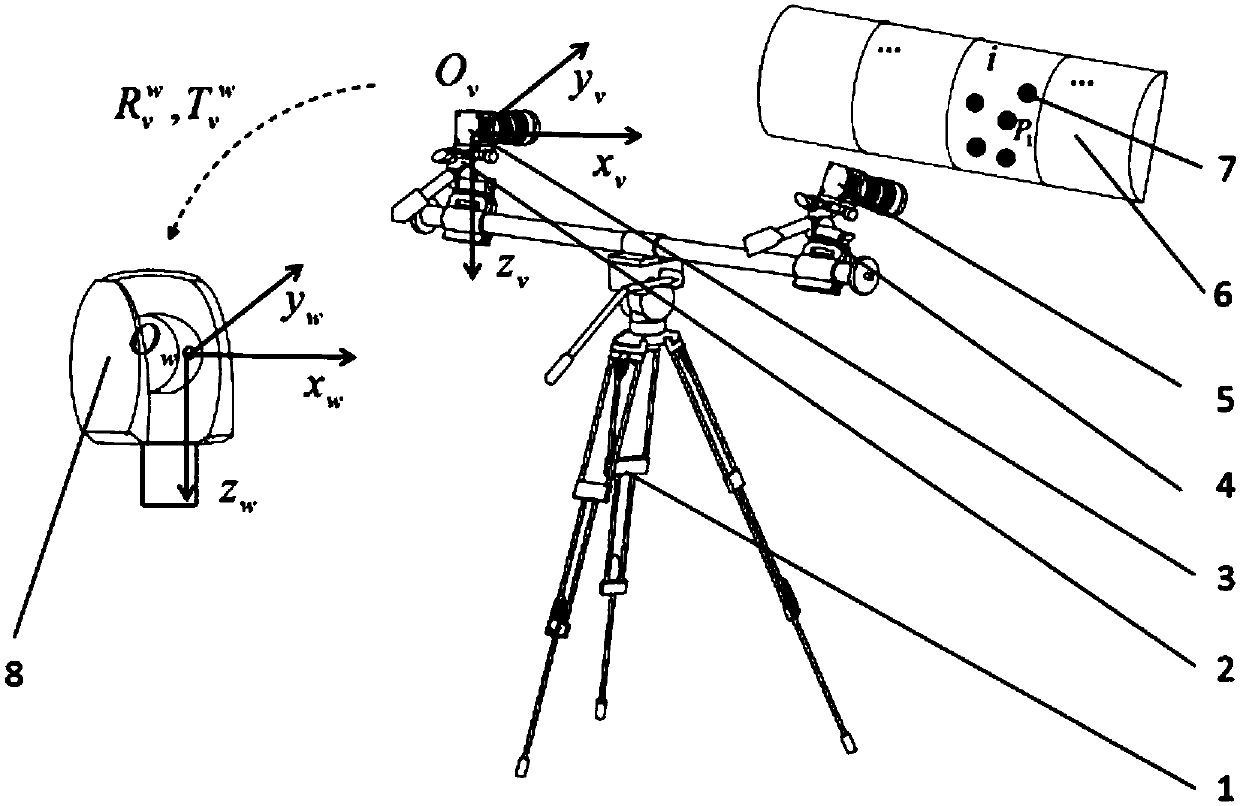

[0054] Example 1: First, set up the laser-tracker-based binocular vision stitching measurement system shown in attached Figure 1. A Leica AT960 MR serves as the measuring head 8 of the laser tracker, with a measurement range of 1–20 m. The left camera 3 and the right camera 5 are VC-12MC-M cameras with a resolution of 3072×4096 and a maximum frame rate of 60 Hz. The binocular vision system is calibrated with a checkerboard, yielding the following camera parameters: principal point of the left camera $u_{01} = 2140.397824$, $v_{01} = 1510.250152$, equivalent focal lengths $f_{x1} = 6447.987913$, $f_{y1} = 6454.015281$; principal point of the right camera $u_{02} = 2124.090030$, $v_{02} = 1526.184441$, equivalent focal lengths $f_{x2} = 6417.044403$, $f_{y2} = 6420.363610$; and the transformation matrix from the left camera to the right camera. Nine common points are arranged in the common field of view of the laser tracker and the visual measurement ...
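The calibration values above are the intrinsic parameters of each camera. As a minimal sketch, assuming the standard zero-skew pinhole model with no lens distortion (the source does not state the camera model or any distortion terms), the Python below shows how the principal point ($u_0$, $v_0$) and the equivalent focal lengths ($f_x$, $f_y$), all in pixels, map a point in camera coordinates to pixel coordinates and back. The class `Intrinsics` and the sample point are hypothetical illustrations; only the numeric parameters come from Example 1. The left-to-right transformation matrix is not used because its values are not given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole intrinsics in pixels (assumed model: zero skew, no distortion)."""
    fx: float  # equivalent focal length along u
    fy: float  # equivalent focal length along v
    u0: float  # principal point, u coordinate
    v0: float  # principal point, v coordinate

    def project(self, X: float, Y: float, Z: float) -> tuple[float, float]:
        """Project a camera-frame point (Z > 0) to pixel coordinates (u, v)."""
        if Z <= 0:
            raise ValueError("point must lie in front of the camera (Z > 0)")
        return self.fx * X / Z + self.u0, self.fy * Y / Z + self.v0

    def back_project(self, u: float, v: float, Z: float) -> tuple[float, float, float]:
        """Return the camera-frame point on the ray through pixel (u, v) at depth Z."""
        return (u - self.u0) * Z / self.fx, (v - self.v0) * Z / self.fy, Z


# Calibration values reported in Example 1 (pixels).
LEFT = Intrinsics(fx=6447.987913, fy=6454.015281, u0=2140.397824, v0=1510.250152)
RIGHT = Intrinsics(fx=6417.044403, fy=6420.363610, u0=2124.090030, v0=1526.184441)

if __name__ == "__main__":
    # A point on the optical axis lands exactly on the principal point.
    print(LEFT.project(0.0, 0.0, 5.0))
    # Hypothetical point 0.1 m right, 0.05 m down, 5 m ahead of the left camera.
    u, v = LEFT.project(0.1, 0.05, 5.0)
    print(u, v)
    # Back-projecting at the known depth recovers the original point.
    print(LEFT.back_project(u, v, 5.0))
```

Projection divides by depth, so a single camera recovers only a ray; a known depth (or a second camera plus the left-to-right transformation) is needed to fix the 3D point, which is why the system is binocular.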

