Text classification method and system based on hybrid autoencoder deep learning

The invention applies hybrid autoencoder technology to text database clustering/classification, unstructured text data retrieval, instrumentation, and related fields. It addresses the high dimensionality and sparsity of text data, the high time overhead of training and classification, and reduced classification accuracy. The stated effects are effective text classification, better feature learning, and improved classification accuracy.

Examples

Embodiment 1

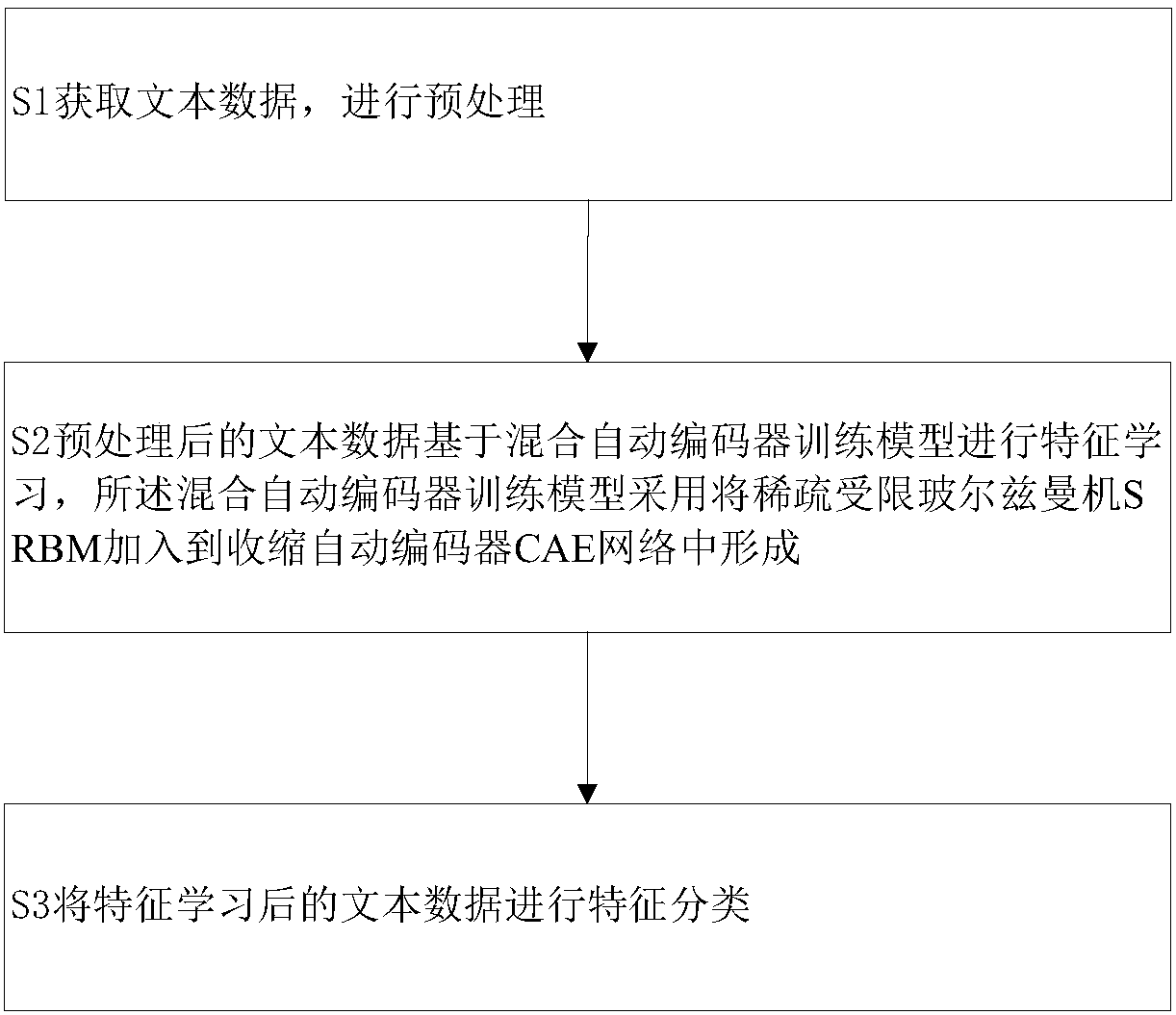

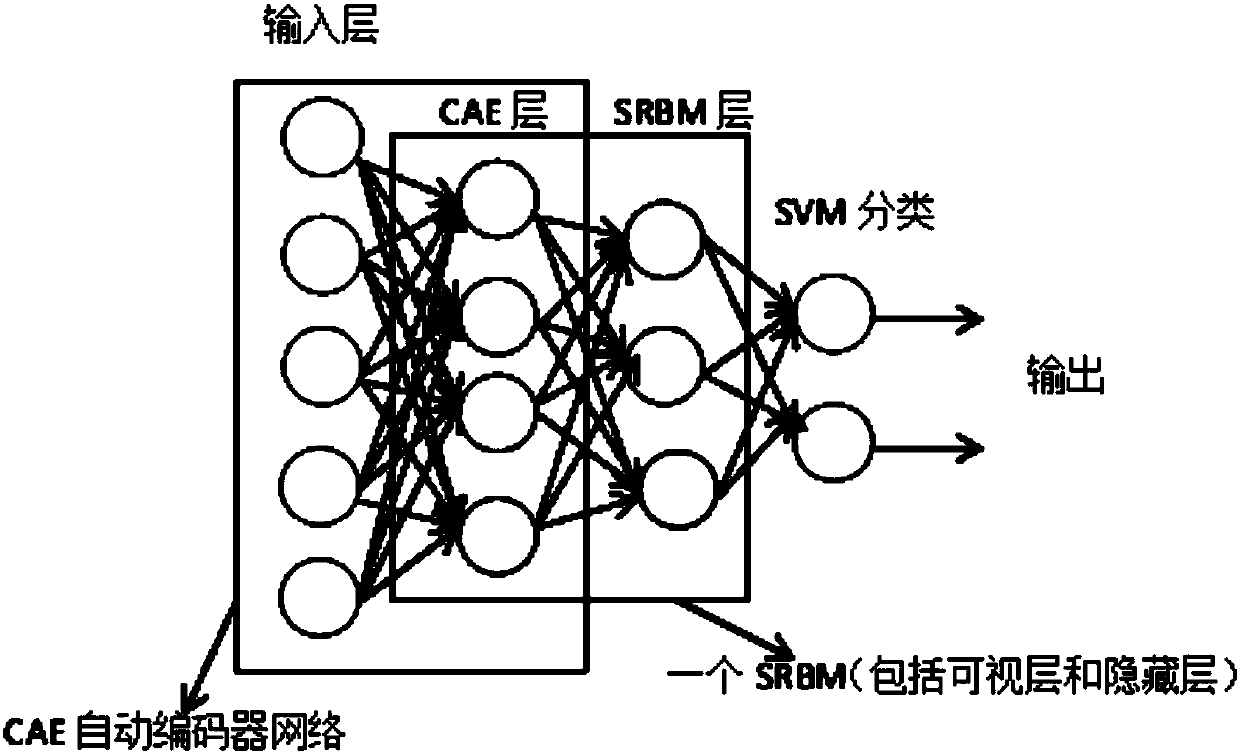

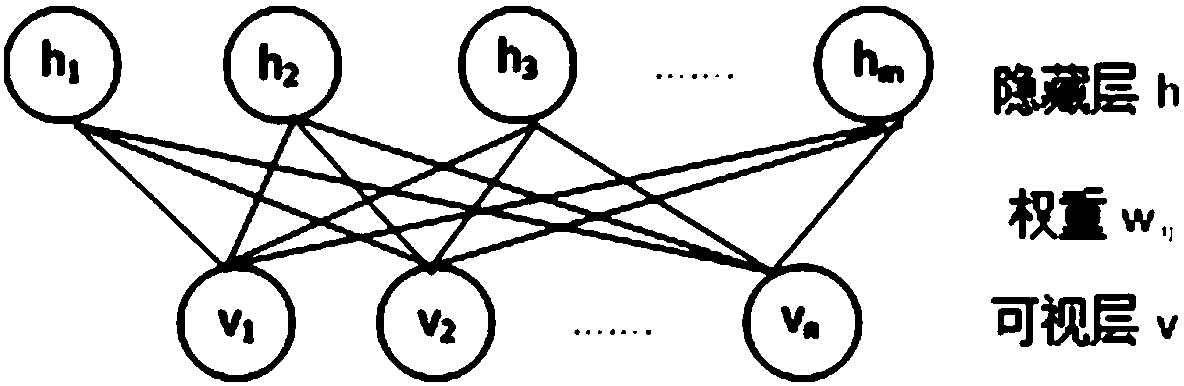

[0073] The purpose of Embodiment 1 is to provide a text classification method based on hybrid autoencoder deep learning. Specifically, the method combines the sparse restricted Boltzmann machine (SRBM) and the contractive autoencoder (CAE) into a hybrid autoencoder training model. The model pairs the robust feature extraction of the CAE with the sparse feature representation of the SRBM and its fast learning via contrastive divergence, which strengthens the learning ability of the hybrid autoencoder. The model is trained with an unsupervised greedy layer-by-layer learning algorithm, and Polyak averaging is added to the parameter updates to speed up convergence. The backpropagation (BP) algorithm then fine-tunes the model, and finally a support vector machine (SVM) performs the classification, the classification accuracy requirements for the classification ...
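Two ingredients named in this paragraph can be illustrated in a few lines. The sketch below is not taken from the patent text: it assumes a sigmoid encoder, uses the penalty commonly associated with a CAE (the squared Frobenius norm of the encoder Jacobian), and implements Polyak averaging as a plain running mean of the parameter iterates. The names `encode`, `contractive_penalty`, and `PolyakAverager` are hypothetical, and the patent may use a different variant of either technique.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def encode(W, b, x):
    """Sigmoid encoder h = s(Wx + b); W holds one row of weights per hidden unit."""
    return [
        sigmoid(sum(w_ji * x_i for w_ji, x_i in zip(row, x)) + b_j)
        for row, b_j in zip(W, b)
    ]


def contractive_penalty(W, b, x):
    """Squared Frobenius norm of the encoder Jacobian dh/dx at input x.

    For a sigmoid encoder, dh_j/dx_i = h_j * (1 - h_j) * W[j][i], so the
    norm factorises into one term per hidden unit.
    """
    h = encode(W, b, x)
    return sum(
        (h_j * (1.0 - h_j)) ** 2 * sum(w * w for w in row)
        for h_j, row in zip(h, W)
    )


class PolyakAverager:
    """Running arithmetic mean of all parameter vectors seen so far.

    Assumption: a uniform average over iterates; an exponential moving
    average is another common choice.
    """

    def __init__(self):
        self.count = 0
        self.mean = None

    def update(self, params):
        self.count += 1
        if self.mean is None:
            self.mean = list(params)
        else:
            self.mean = [
                m + (p - m) / self.count for m, p in zip(self.mean, params)
            ]
        return self.mean
```

In a training loop, the penalty would be added to the reconstruction loss with a weighting coefficient, and `update` would be called once after each parameter step, with the averaged parameters used in place of the last iterate.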

Embodiment 2

[0123] The purpose of Embodiment 2 is to provide a computer-readable storage medium.

[0124] In order to achieve the above object, the present invention adopts the following technical scheme:

[0125] A computer-readable storage medium, in which a plurality of instructions are stored, and the instructions are adapted to be loaded by a processor of a terminal device and perform the following processing:

[0126] Obtain text data and perform preprocessing;

[0127] Perform feature learning on the preprocessed text data using the hybrid autoencoder training model, which is formed by adding the sparse restricted Boltzmann machine (SRBM) to the contractive autoencoder (CAE) network;

[0128] Classify the text data after feature learning.
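The three processing steps above can be sketched as a small pipeline. This is only an illustration under stated assumptions: the tokenization and bag-of-words counting are one plausible form of preprocessing and are not specified in the text, and the `encode` and `classify` arguments are hypothetical placeholders for a trained hybrid autoencoder and a trained classifier, neither of which is implemented here.

```python
import re
from collections import Counter


def preprocess(text):
    """Lowercase and split into alphanumeric tokens (an assumed, minimal scheme)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_vocabulary(token_lists):
    """Map each distinct token to a column index, in sorted order."""
    vocab = sorted({tok for toks in token_lists for tok in toks})
    return {tok: i for i, tok in enumerate(vocab)}


def to_count_vector(tokens, vocab):
    """Bag-of-words term counts; tokens outside the vocabulary are ignored."""
    counts = Counter(tokens)
    # Dict iteration follows insertion order, which matches the column indices.
    return [counts.get(tok, 0) for tok in vocab]


def classify_texts(texts, vocab, encode, classify):
    """Run the three steps in order: preprocess, learn features, classify.

    `encode` and `classify` are placeholders supplied by the caller.
    """
    labels = []
    for text in texts:
        x = to_count_vector(preprocess(text), vocab)
        h = encode(x)
        labels.append(classify(h))
    return labels
```

Passing the feature learner and the classifier as callables keeps the step order explicit while leaving the trained models outside the sketch.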

[0129] In this embodiment, examples of the computer-readable recording medium include magnetic storage media (for example, ROM, RAM, USB, floppy disk, hard disk, etc.), optical recording media (for example, CD-ROM ...

Embodiment 3

[0131] The purpose of Embodiment 3 is to provide a terminal device.

[0132] In order to achieve the above object, the present invention adopts the following technical scheme:

[0133] A terminal device comprising a processor and a computer-readable storage medium, where the processor implements instructions and the computer-readable storage medium stores multiple instructions that are suitable for being loaded by the processor to perform the following processing:

[0134] Obtain text data and perform preprocessing;

[0135] Perform feature learning on the preprocessed text data using the hybrid autoencoder training model, which is formed by adding the sparse restricted Boltzmann machine (SRBM) to the contractive autoencoder (CAE) network;

[0136] Classify the text data after feature learning.

[0137] Beneficial effects of the present invention:

[0138] 1. In the text classification method and system based on h...
