Word embedding deep learning method based on knowledge graph

A word embedding deep learning technology based on knowledge graphs, applied in the field of machine learning for word embedding. It addresses three problems: word vectors lack semantic association, existing methods fail to consider the semantic information between training data, and deep feature representations of words are difficult to capture. Its effects are improved generalization ability, ease of implementation, and improved accuracy.


Embodiment Construction

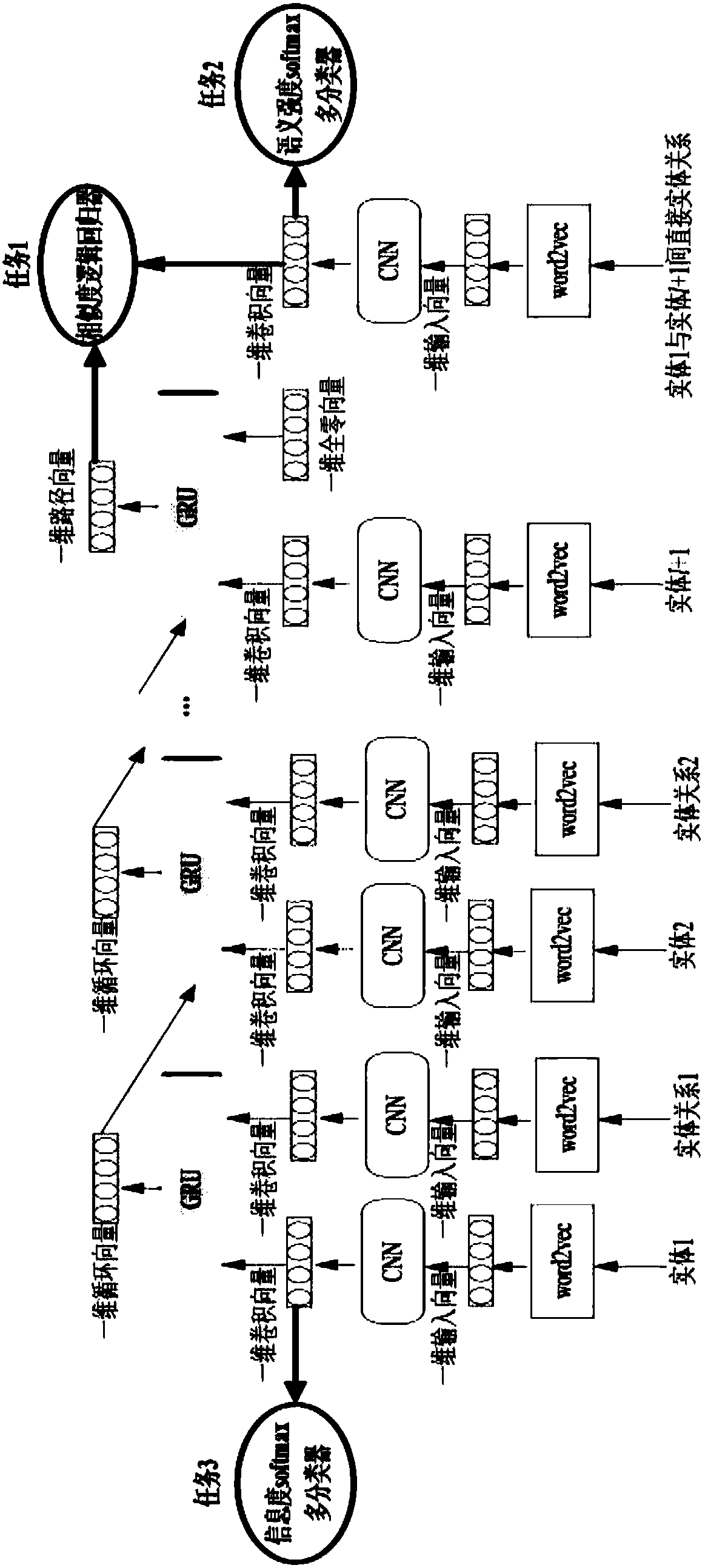

[0022] The technical solution of the present invention is described below with reference to the drawings.

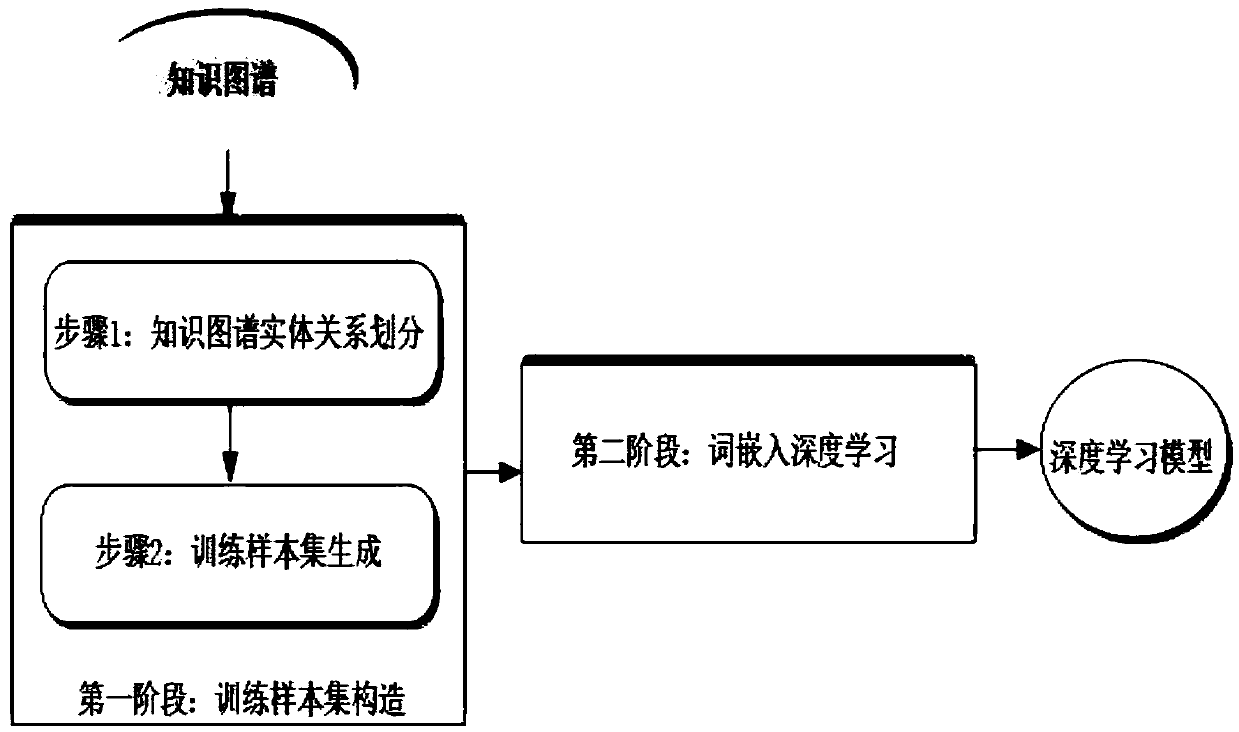

[0023] To overcome the defects of the existing methods described above, the present invention provides a word embedding deep learning method that achieves high accuracy and strong generalization ability and is simple to implement. The technical framework is shown in Figure 1.

[0024] The invention consists of two main stages: training sample set construction and word embedding deep learning.

[0025] The first stage (training sample set construction) comprises two steps: dividing the entity relations of the knowledge graph, and generating the training sample set.

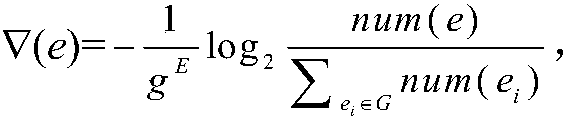

[0026] In step 1, the present invention takes the knowledge graph as input, calculates the information degree of every entity in it, sorts the entities by information degree in either descending or ascending order, and then divides...
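The excerpt is truncated before it defines "information degree" or explains how the sorted entities are divided, so the following is only a minimal sketch of the computing and sorting part of step 1 under stated assumptions. It represents the knowledge graph as (head, relation, tail) triples and uses a hypothetical proxy for information degree: the number of triples an entity participates in. The function names `information_degree` and `sort_entities` and the sample triples are illustrative inventions, not the patent's definitions, and the subsequent division step is deliberately not reproduced.

```python
from collections import Counter

# A knowledge graph as (head, relation, tail) triples (illustrative data only).
Triple = tuple[str, str, str]


def information_degree(triples: list[Triple]) -> dict[str, int]:
    """Hypothetical proxy for 'information degree': count the triples each entity appears in."""
    counts: Counter = Counter()
    for head, _relation, tail in triples:
        counts[head] += 1
        counts[tail] += 1
    return dict(counts)


def sort_entities(degrees: dict[str, int], descending: bool = True) -> list[str]:
    """Sort entities by degree (descending or ascending); ties are broken by name for determinism."""
    if descending:
        return sorted(degrees, key=lambda e: (-degrees[e], e))
    return sorted(degrees, key=lambda e: (degrees[e], e))


kg: list[Triple] = [
    ("Paris", "capital_of", "France"),
    ("France", "member_of", "EU"),
    ("Berlin", "capital_of", "Germany"),
    ("Germany", "member_of", "EU"),
]

degrees = information_degree(kg)
print(sort_entities(degrees))                    # descending order
print(sort_entities(degrees, descending=False))  # ascending order
```

The source allows either sort direction, so the sketch exposes it as a flag; any real information-degree measure (for example, one weighted by relation types) could replace the frequency proxy without changing the sorting code.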
