UAV and obstacle data fusion method

A data fusion technology for UAVs and obstacles, applied in the reflection/re-radiation of radio waves, computer components, radio wave measurement systems, etc. It addresses problems such as drone collisions, damage to drones, and delays in quickly understanding on-site conditions during disaster relief, and achieves the effect of reducing uncertainty and decision-making risk.


Examples

Embodiment 1

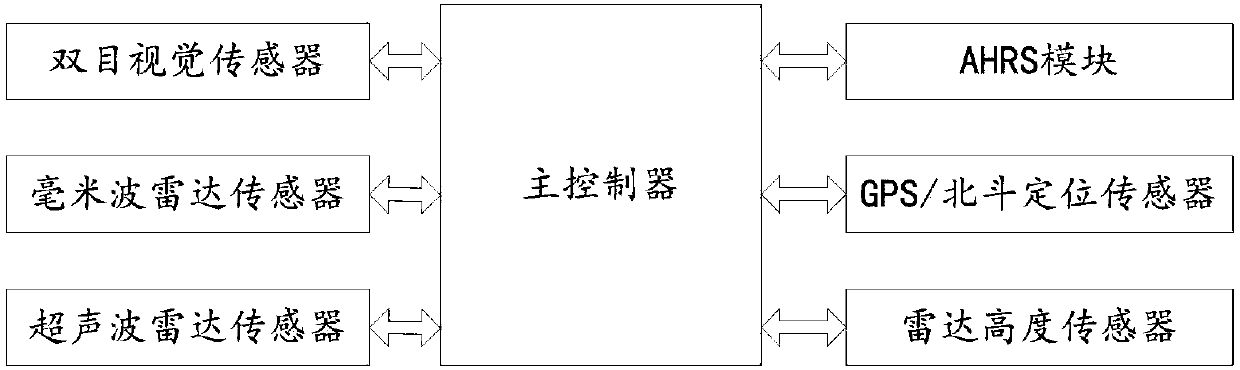

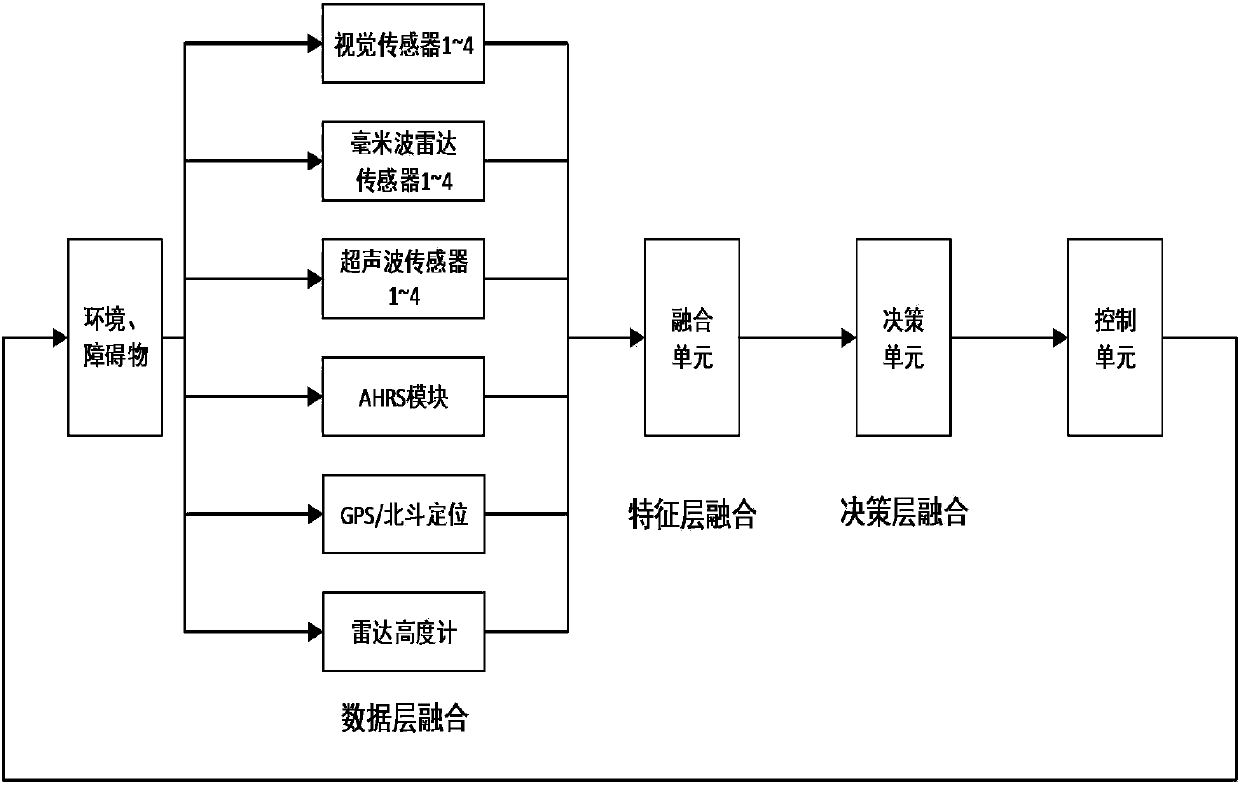

[0058] This embodiment provides a method for fusing data about a drone and an obstacle. The method comprises a data fusion layer, a feature layer, a decision layer, and a detection device;

[0059] The detection device includes:

[0060] A radar height sensor, which measures the vertical distance from the UAV to the ground;

[0061] A GPS/BeiDou positioning sensor, which provides real-time positioning for tasks such as fixed-point hovering of the drone and also measures the drone's altitude and relative speed;

[0062] An AHRS (attitude and heading reference system) module, which collects the UAV's flight attitude and navigation information. The AHRS module includes a MEMS three-axis gyroscope, accelerometer, and magnetometer, and outputs three-dimensional acceleration, three-dimensional angular velocity, and three-dimensional geomagnetic field strength;

[0063] A millimeter-wave radar sensor, which uses a triangular-chirp (triangle-wave) modulation scheme to realize long-distance measurement from obstacles to th...
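The excerpt is cut off before the ranging equations. Under the common textbook model of triangular-chirp FMCW radar, range and radial speed can be recovered from the beat frequencies of the up-sweep and the down-sweep. The following is a minimal sketch under several assumptions: a sweep bandwidth B over each ramp of duration T, a carrier wavelength λ, and a closing (approaching) obstacle, so that Doppler lowers the up-sweep beat and raises the down-sweep beat. The function name and parameters are hypothetical and do not come from the patent.

```python
"""Sketch: range and radial speed from triangular-chirp FMCW beat frequencies.

Assumed textbook model (not taken from the patent):
  f_up   = f_range - f_doppler
  f_down = f_range + f_doppler
with f_range = 2*R*B / (c*T) and f_doppler = 2*v / wavelength,
where v > 0 means the obstacle is closing on the UAV.
"""

C = 299_792_458.0  # speed of light in vacuum, m/s


def triangle_fmcw_range_velocity(f_up, f_down, bandwidth, ramp_time, wavelength):
    """Return (range_m, closing_speed_mps) from up/down-sweep beat frequencies in Hz."""
    f_range = (f_up + f_down) / 2.0    # Doppler term cancels in the sum
    f_doppler = (f_down - f_up) / 2.0  # range term cancels in the difference
    range_m = C * ramp_time * f_range / (2.0 * bandwidth)
    closing_speed = wavelength * f_doppler / 2.0
    return range_m, closing_speed


if __name__ == "__main__":
    # Hypothetical 77 GHz-class parameters: 200 MHz sweep over a 1 ms ramp.
    B, T, lam = 200e6, 1e-3, C / 77e9
    f_r = 2 * 50.0 * B / (C * T)  # beat from a 50 m target
    f_d = 2 * 5.0 / lam           # Doppler from a 5 m/s closing speed
    print(triangle_fmcw_range_velocity(f_r - f_d, f_r + f_d, B, T, lam))
```

Using both slopes of the triangle is what lets a single radar separate range from Doppler. A sawtooth chirp alone would mix the two effects into one beat frequency.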

Embodiment 2

[0070] As a further limitation of Embodiment 1, the data fusion layer processes the data collected by each sensor as follows:

[0071] 1) The millimeter-wave radar sensor outputs the relative distance R1, the relative speed V1, and the angle between the obstacle and the radar normal, expressed as the azimuth angle θ1 and the pitch angle ψ1;

[0072] 2) The ultrasonic radar sensor outputs the relative distance R2 between the UAV and the obstacle;

[0073] 3) The binocular vision sensor outputs the object area S, the azimuth angle θ2, and the relative distance R3;

[0074] 4) The radar height sensor outputs the height R4 of the drone above the ground;

[0075] 5) The GPS/BeiDou positioning sensor mainly provides the drone's altitude H2 and horizontal speed V2;
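Before the millimeter-wave radar's polar measurement (R1, θ1, ψ1) can be compared with the other sensors' outputs, it is usually converted to Cartesian coordinates. The following is a minimal sketch. Because the excerpt does not define a coordinate frame, it assumes one: x along the radar normal, y toward positive azimuth, and z toward positive pitch. Both this frame convention and the function name are hypothetical.

```python
import math


def radar_polar_to_cartesian(r1, azimuth_rad, pitch_rad):
    """Convert a radar detection (R1, theta1, psi1) to (x, y, z) in the radar frame.

    Assumed frame (hypothetical): x along the radar normal, y toward positive
    azimuth, z toward positive pitch (elevation). Angles are in radians.
    """
    horizontal = r1 * math.cos(pitch_rad)  # projection onto the x-y plane
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = r1 * math.sin(pitch_rad)
    return x, y, z


if __name__ == "__main__":
    # Obstacle 8 m away, 10 degrees off-normal in azimuth, 5 degrees up.
    print(radar_polar_to_cartesian(8.0, math.radians(10), math.radians(5)))
```

The conversion preserves the measured distance (the Euclidean norm of (x, y, z) equals R1). Range comparisons against R2 and R3 therefore remain valid after the change of coordinates.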

[0076] GPS data follows the NMEA 0183 protocol, and its output uses a standard, fixed format. Of its sentences, GPGGA and GPVTG are the most relevant to UAV navigation. Their data formats are specified as follows:

...
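The format tables are elided in this excerpt, so the sketch below relies on the standard NMEA 0183 field layout rather than on the patent's text. In a GGA sentence, the antenna altitude above mean sea level is the ninth comma-separated field after the sentence identifier. In a VTG sentence, the ground speed in km/h is the seventh. The sketch extracts H2 and V2 and verifies the checksum, which is the XOR of the characters between `$` and `*`. The function names are hypothetical. Accepting any talker ID (for example `GN` or `BD` from BeiDou-capable receivers) is also an assumption, as is the VTG layout with unit letters.

```python
from functools import reduce


def nmea_checksum(body: str) -> int:
    """NMEA 0183 checksum: XOR of every character between '$' and '*'."""
    return reduce(lambda acc, ch: acc ^ ord(ch), body, 0)


def split_sentence(line: str) -> list:
    """Validate an NMEA sentence (checksum if present) and return its fields."""
    line = line.strip()
    if not line.startswith("$"):
        raise ValueError("NMEA sentence must start with '$'")
    body, star, given = line[1:].partition("*")
    if star and int(given[:2], 16) != nmea_checksum(body):
        raise ValueError("NMEA checksum mismatch")
    return body.split(",")


def altitude_h2_from_gga(line: str) -> float:
    """H2: antenna altitude above mean sea level, in metres, from a GGA sentence."""
    fields = split_sentence(line)
    if fields[0][2:] != "GGA":  # ignore the two-letter talker ID (GP, GN, BD, ...)
        raise ValueError("not a GGA sentence")
    return float(fields[9])  # raises ValueError if the field is empty (no fix)


def speed_v2_from_vtg(line: str) -> float:
    """V2: horizontal ground speed in m/s, from the km/h field of a VTG sentence."""
    fields = split_sentence(line)
    if fields[0][2:] != "VTG":
        raise ValueError("not a VTG sentence")
    return float(fields[7]) / 3.6  # km/h -> m/s


if __name__ == "__main__":
    # Illustrative sentence body; the checksum is computed rather than hard-coded.
    gga_body = "GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,"
    print(altitude_h2_from_gga(f"${gga_body}*{nmea_checksum(gga_body):02X}"))
```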

Embodiment 3

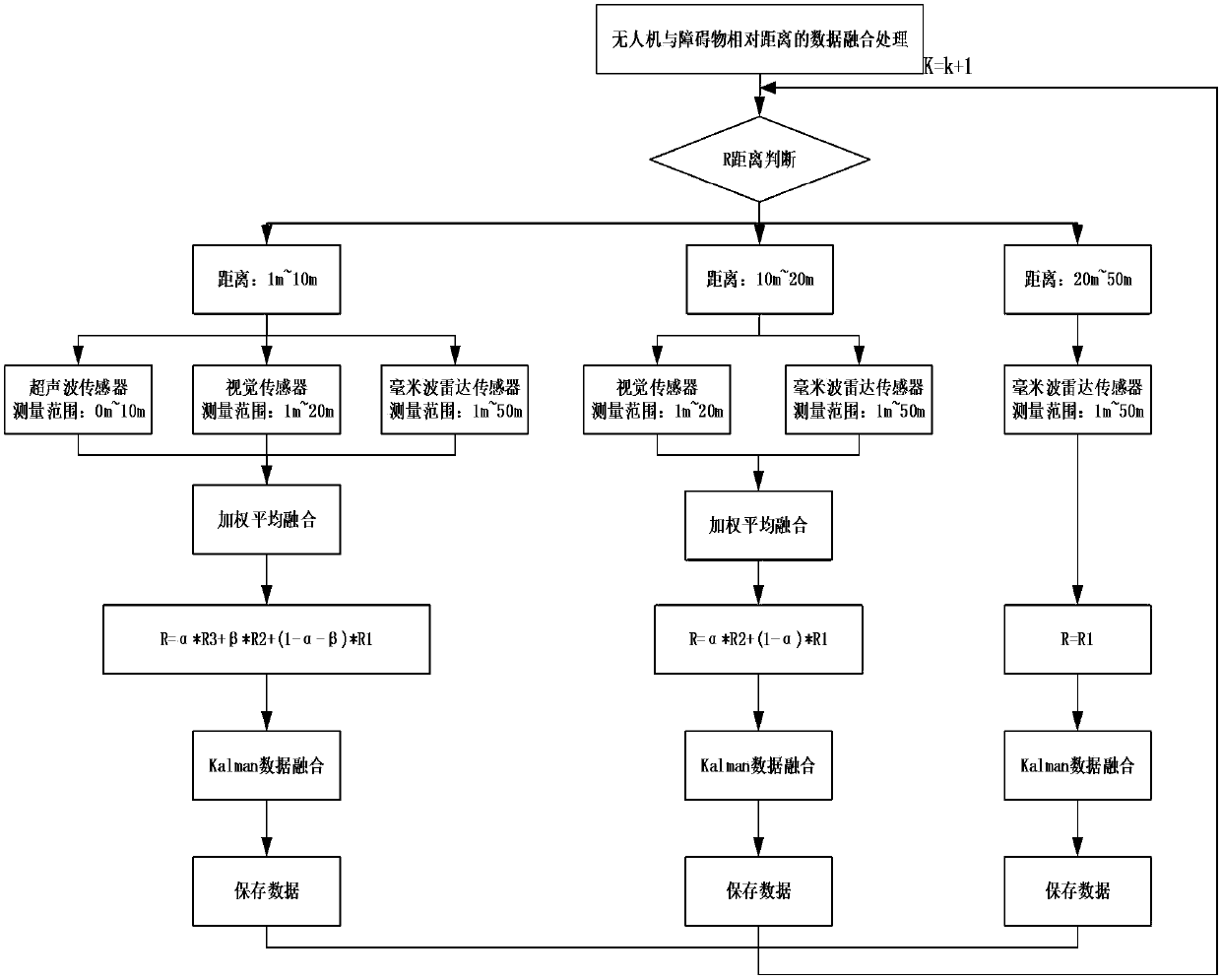

[0083] As a supplement to Embodiment 1 or 2, the feature layer fuses the relative distance between the drone and the obstacle, the relative height between the drone and the ground, and the relative speed between the drone and the obstacle. It also obtains attributes of the obstacle such as its size and shape;

[0084] The relative distance between the drone and the obstacle is fused differently depending on the distance range:

[0085] A. The ultrasonic radar sensor, the binocular vision sensor, and the millimeter-wave radar sensor all perform detection within the range of 0 m to 10 m, but their accuracies differ; at short range, the ultrasonic sensor is more accurate. To improve the accuracy of the height calculation, a weighted average is therefore used: weights α and β are introduced to carry out the weighted average of the ultrasonic radar sensor, the binocular vision sensor and...
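The excerpt introduces two weights, α and β, for three short-range sensors, and it is cut off before the exact formula. The following is a minimal sketch with an assumed weight assignment: α weights the ultrasonic distance R2, β weights the vision distance R3, and the remaining weight 1 - α - β goes to the millimeter-wave distance R1, so that the weights sum to one. This assignment and the function name are hypothetical, not the patent's stated formula.

```python
def fuse_short_range_distance(r1_mmw, r2_ultrasonic, r3_vision, alpha, beta):
    """Weighted average of three distance readings in the 0-10 m band.

    Assumed weighting (hypothetical): alpha for the ultrasonic reading R2,
    beta for the binocular-vision reading R3, and 1 - alpha - beta for the
    millimetre-wave reading R1, so that the three weights sum to one.
    """
    gamma = 1.0 - alpha - beta
    if min(alpha, beta, gamma) < 0.0:
        raise ValueError("alpha and beta must be non-negative with alpha + beta <= 1")
    readings = (r1_mmw, r2_ultrasonic, r3_vision)
    if any(not 0.0 <= r <= 10.0 for r in readings):
        raise ValueError("this branch only covers the 0-10 m range")
    return alpha * r2_ultrasonic + beta * r3_vision + gamma * r1_mmw


if __name__ == "__main__":
    # Hypothetical weights favouring the ultrasonic sensor at short range.
    print(fuse_short_range_distance(3.0, 2.0, 4.0, alpha=0.5, beta=0.3))
```

Giving α the largest share reflects the excerpt's remark that ultrasonic ranging is more accurate at short range. The actual weight values are not given in the excerpt.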


© 2025 PatSnap. All rights reserved.