Face feature extraction method based on deep learning

A face feature extraction technology based on deep learning, applied in the fields of neural learning methods, instruments, biological neural network models, etc. It addresses inaccurate face localization, high-dimensional feature vectors, and low recognition efficiency, and achieves an excellent recognition rate, high accuracy, and good robustness.


Examples

Example 1

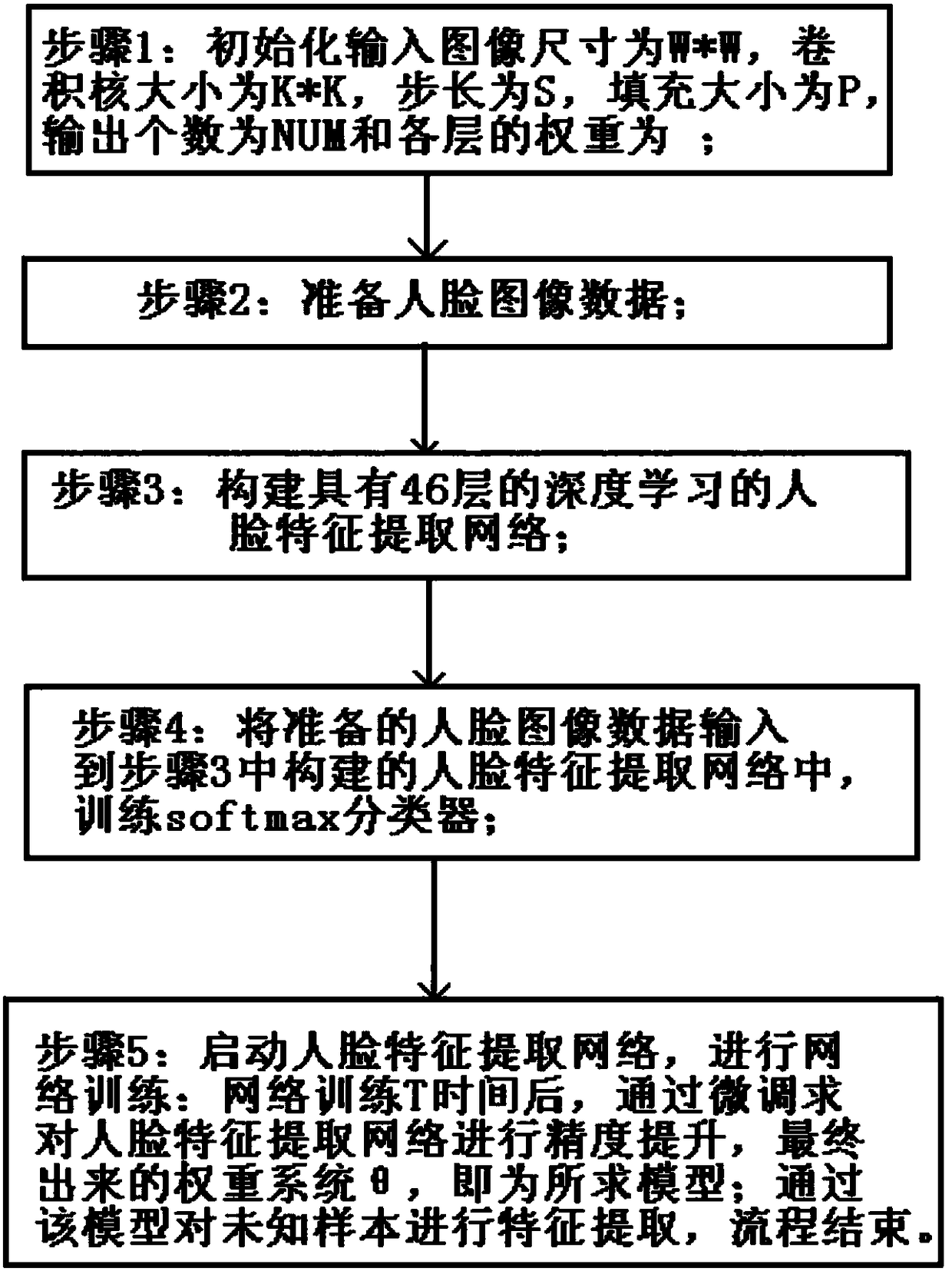

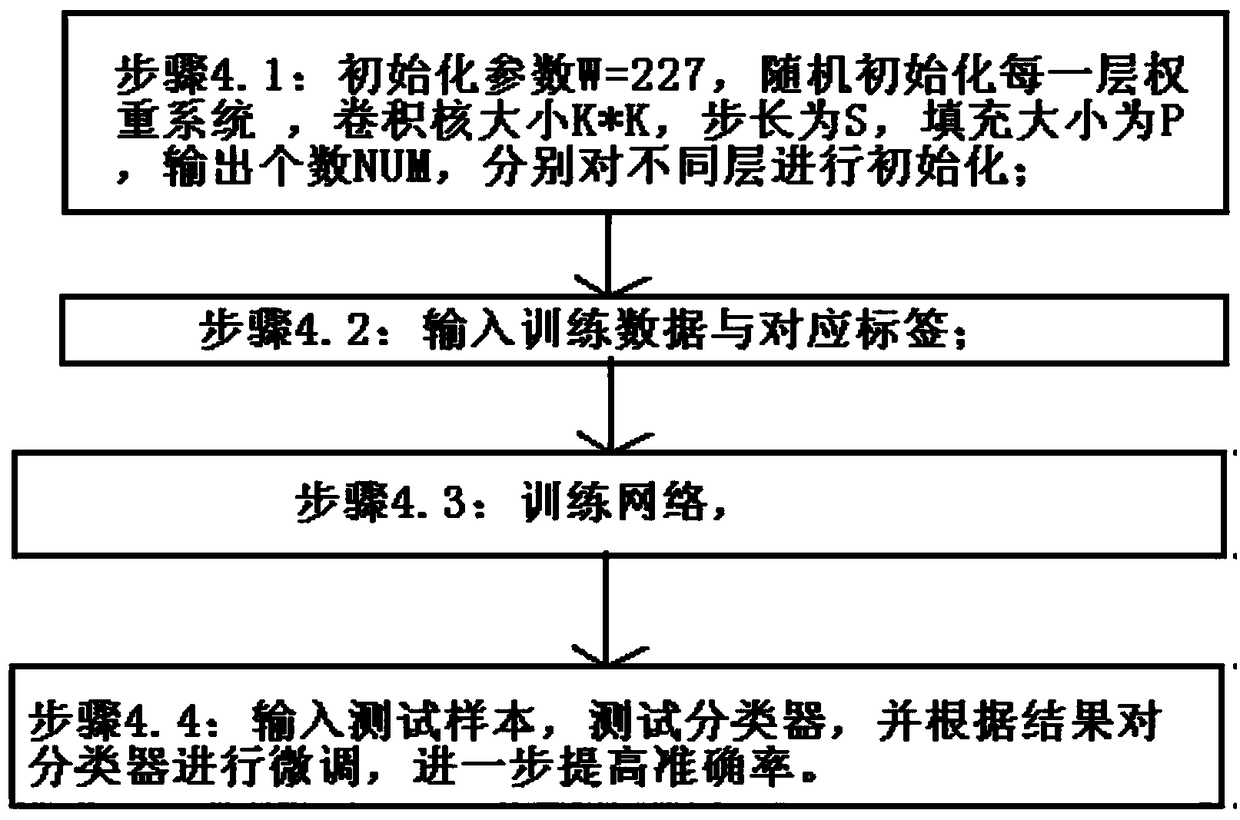

[0098] The first embodiment of the present invention: the initial input image size is set to 227*227, the convolution kernel size to K*K, the step size (stride) to S, the padding size to P, and the number of outputs to NUM; the weight coefficients are randomly initialized as θ_i.
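These parameters determine the spatial size of each layer's output through the standard CNN relation below. The patent does not state this formula. It is shown here as a general reference, for an input of width W:

```latex
O = \left\lfloor \frac{W - K + 2P}{S} \right\rfloor + 1
```

Take the conv1 layer of the network (K = 11, S = 4) applied to the 227*227 input, and assume P = 0, since conv1's padding is not specified. The output is O = ⌊216/4⌋ + 1 = 55, giving a 55*55 map for each of the NUM = 96 outputs.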

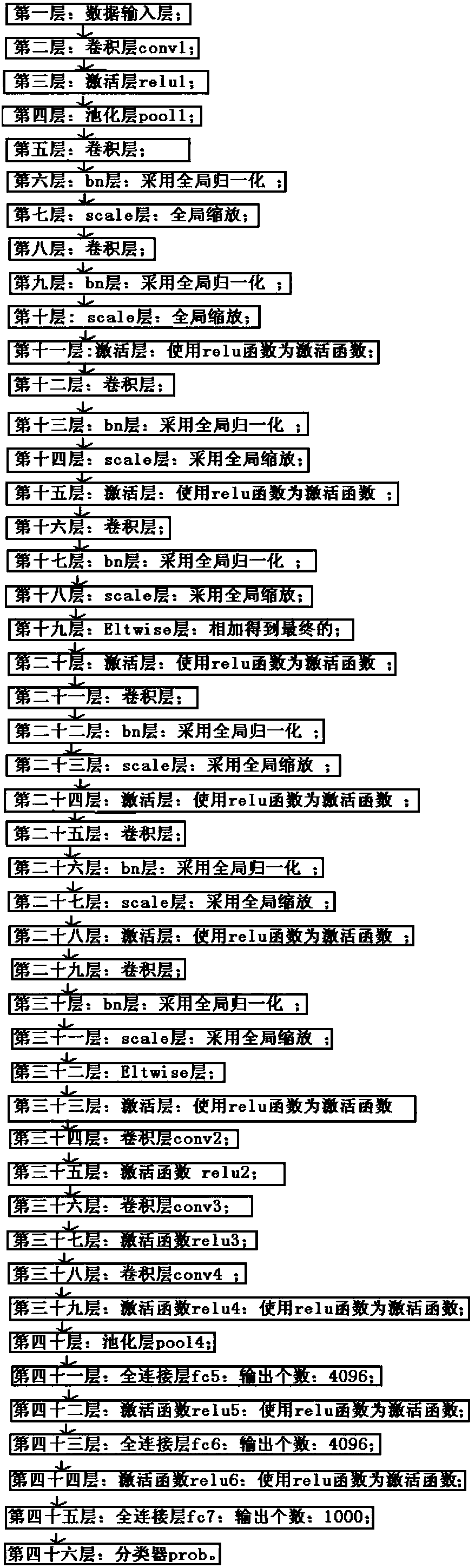

[0099] As shown in Figure 2, the face feature extraction network in step 3 is constructed as follows:

[0100] The first layer: data input layer. Input image size (length and width): 227*227; pixel value range: 0-255;

[0101] The second layer: convolution layer conv1: convolution kernel size: 11*11; step size: 4; output number: 96;

[0102] The third layer: activation layer relu1: use the relu function as the activation function;

[0103] The fourth layer: pooling layer pool1: pooling kernel size: 3*3; step size: 2; pooling type: maximum; padding: 1;

[0104] The fifth layer: convolution layer: convolution kernel size: 1*1; ste...
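The short Python sketch below traces the output shape through the first four layers. It is not from the patent, and it rests on several assumptions. The input is taken to have 3 channels (RGB), and conv1's padding is taken as 0, because neither is stated. Pooling uses ceil rounding, the Caffe convention, since the layer names conv1/relu1/pool1 resemble a Caffe definition; floor rounding gives the same result here. The fifth layer is left out because its step size is truncated in the source.

```python
import math


def conv_out(size: int, kernel: int, stride: int, pad: int) -> int:
    """Spatial output size of a convolution (floor rounding)."""
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size: int, kernel: int, stride: int, pad: int) -> int:
    """Spatial output size of a pooling layer (ceil rounding, as in Caffe; assumed)."""
    return math.ceil((size + 2 * pad - kernel) / stride) + 1


def trace_first_layers(input_size: int = 227) -> list[tuple[str, int, int]]:
    """Return (layer name, channels, spatial size) after each of the first four layers."""
    shapes = [("data", 3, input_size)]  # 3 input channels is an assumption (RGB)
    s = conv_out(input_size, kernel=11, stride=4, pad=0)  # conv1; pad=0 is assumed
    shapes.append(("conv1", 96, s))
    shapes.append(("relu1", 96, s))  # ReLU is element-wise, so the shape is unchanged
    s = pool_out(s, kernel=3, stride=2, pad=1)  # pool1: 3*3, step 2, padding 1
    shapes.append(("pool1", 96, s))
    return shapes


for name, channels, size in trace_first_layers():
    print(f"{name}: {channels} x {size} x {size}")
```

Under these assumptions, the 227*227 input becomes 96 maps of 55*55 after conv1 and 28*28 after pool1.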

Example 2

[0147] The network training in step 5 applies convolution, pooling, and activation operations to the original data to obtain the final features;

[0148] Convolution refers to convolving the image with a template (the convolution kernel);
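Here is a minimal Python sketch of convolving a single-channel image with a template. It is illustrative only. It assumes no padding, and, like most CNN frameworks, it computes cross-correlation, meaning the template is not flipped. The function name `convolve2d` is hypothetical.

```python
def convolve2d(image: list[list[float]], kernel: list[list[float]],
               stride: int = 1) -> list[list[float]]:
    """Valid (unpadded) 2-D convolution of a single-channel image with a template.

    As in most CNN frameworks, the kernel is not flipped (cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            r0, c0 = i * stride, j * stride  # top-left corner of the current window
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[r0 + u][c0 + v] * kernel[u][v]
            row.append(acc)
        out.append(row)
    return out


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]
print(convolve2d(image, kernel))  # [[-4.0, -4.0], [-4.0, -4.0]]
```

Every output value is one template-weighted sum over a window of the image. On this input, each 2*2 window gives its top-left pixel minus its bottom-right pixel.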

[0149] Pooling refers to sampling (downsampling) the image; two types of pooling are used, average pooling and maximum pooling. Average pooling takes the average value of an image region as the pooled value of that region; maximum pooling takes the maximum value of the region as its pooled value;
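The two pooling types can be sketched in a few lines of Python. The sketch uses a square window and no padding (pool1 above uses padding 1, which is not modeled). The function name `pool2d` is hypothetical.

```python
def pool2d(image: list[list[float]], size: int, stride: int,
           mode: str = "max") -> list[list[float]]:
    """Unpadded max or average pooling over square windows."""
    if mode not in ("max", "avg"):
        raise ValueError(f"unknown pooling mode: {mode}")
    out = []
    for r in range(0, len(image) - size + 1, stride):
        row = []
        for c in range(0, len(image[0]) - size + 1, stride):
            window = [image[r + u][c + v] for u in range(size) for v in range(size)]
            # Max pooling keeps the largest value; average pooling keeps the mean.
            row.append(max(window) if mode == "max" else sum(window) / len(window))
        out.append(row)
    return out


image = [[1, 3, 2, 4],
         [5, 7, 6, 8],
         [9, 2, 1, 0],
         [3, 4, 5, 6]]
print(pool2d(image, size=2, stride=2, mode="max"))  # [[7, 8], [9, 6]]
print(pool2d(image, size=2, stride=2, mode="avg"))  # [[4.0, 5.0], [4.5, 3.0]]
```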

[0150] The activation operation calculates the activation value of each neuron produced by the convolution kernel. This gives the network nonlinear modeling capability and thereby increases its feature description capability. It is defined by the following formula:

[0151] f(x)=max(0,x)

[0152] Where x is the value of the current neu...
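The formula f(x) = max(0, x) above is the ReLU function. The Python sketch below applies it element-wise to a feature map; the helper names are hypothetical.

```python
def relu(x: float) -> float:
    """f(x) = max(0, x): negative inputs become 0, positive inputs pass through."""
    return max(0.0, x)


def relu_map(feature_map: list[list[float]]) -> list[list[float]]:
    """Apply ReLU independently to every neuron value in a 2-D feature map."""
    return [[relu(v) for v in row] for row in feature_map]


print(relu_map([[-4.0, 2.5], [0.0, -1.0]]))  # [[0.0, 2.5], [0.0, 0.0]]
```

Because ReLU acts on each value independently, it leaves the feature map's shape unchanged.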
