Cloud data center load prediction method based on LSTM (Long Short-Term Memory)

The method applies long short-term memory (LSTM) technology to cloud data centers in the field of cloud computing. It addresses problems such as the inability to allocate resources optimally, and achieves short training time and high learning efficiency.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

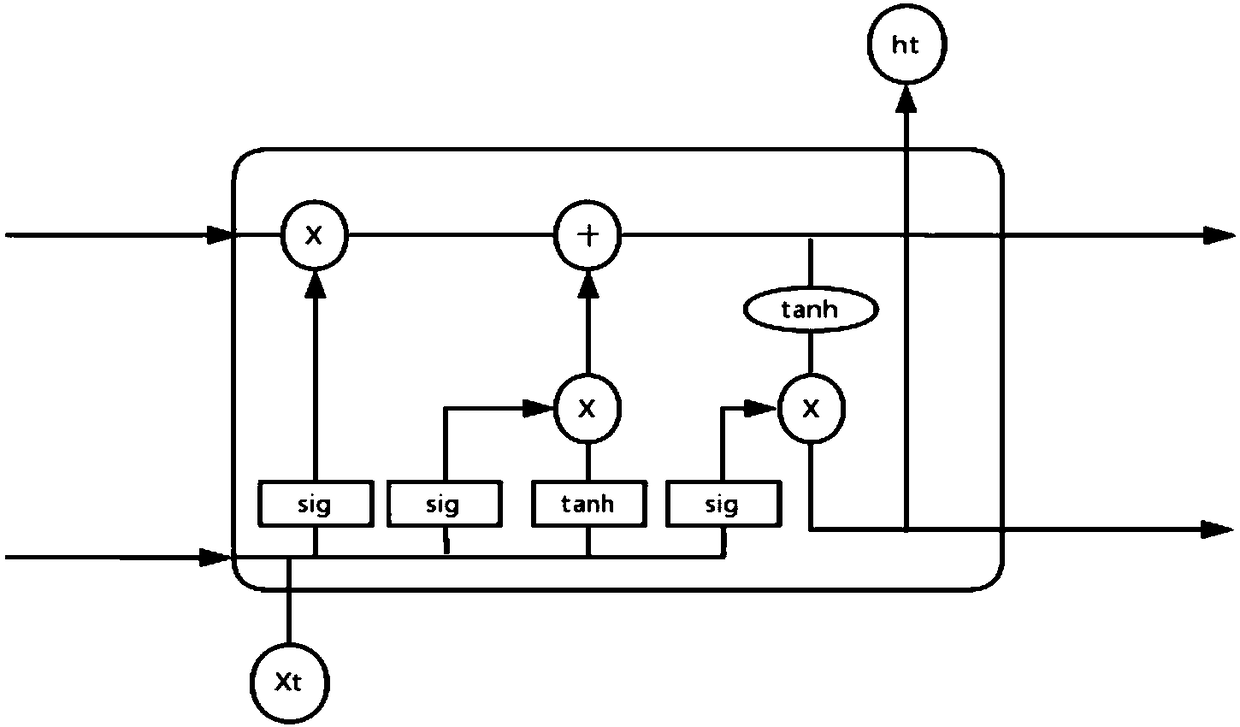

Method used

Image

Examples

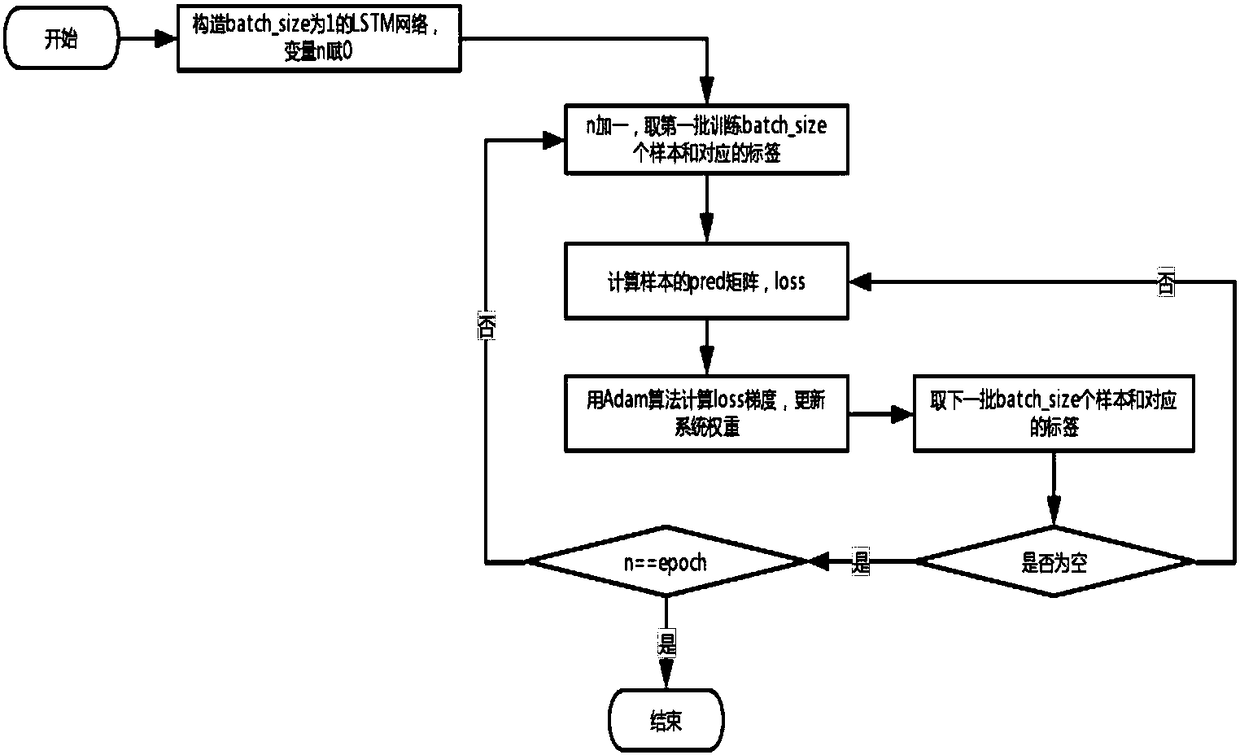

Embodiment Construction

[0050] The implementation process and precautions of the present invention are elaborated further below. As mentioned above, six indicators are to be predicted in the cloud data center, and most of the algorithm applies to all six. Where a step handles different types of forecasts differently, this is noted explicitly. The algorithm is written in Python and imports TensorFlow, the data analysis package pandas, the numerical computing package NumPy, and matplotlib.pyplot for plotting. In this part, the indicator to be predicted is always referred to as "H"; the forecasting methods for the other five indicators are roughly the same.

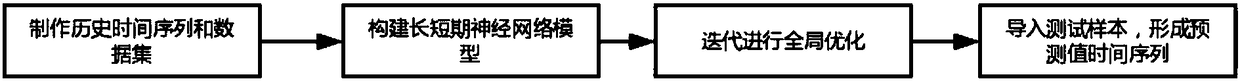

[0051] S1. Build the historical time series and datasets from data stored in files;

[0052] Historical data is often stored in CSV files. To predict H, the first step is to read the historical data of H from the file to form a time se...
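
A minimal sketch of what step S1 could look like is given here, using the pandas and NumPy packages named above. The text does not show how the dataset is built, so several details are assumptions made for illustration only: the column name `H`, the helper names `load_series` and `make_windows`, the window length of 4, and the sliding-window layout itself (each sample is a run of consecutive values, and the label is the value that follows). An in-memory CSV stands in for a real history file.

```python
import io

import numpy as np
import pandas as pd


def load_series(csv_source, column="H"):
    """Read one indicator column from a CSV source into a 1-D float array.

    The column name "H" is a placeholder, not taken from the source text.
    """
    frame = pd.read_csv(csv_source)
    return frame[column].to_numpy(dtype=np.float32)


def make_windows(series, window=4):
    """Split a series into (inputs, targets) pairs with a sliding window.

    Each input row holds `window` consecutive values; the target is the
    value that immediately follows them. This layout is an assumption.
    """
    if len(series) <= window:
        raise ValueError("series must be longer than the window")
    inputs = np.stack(
        [series[i:i + window] for i in range(len(series) - window)]
    )
    targets = series[window:]
    return inputs, targets


# Hypothetical in-memory CSV standing in for a real history file.
demo_csv = io.StringIO("timestamp,H\n0,10\n1,12\n2,11\n3,13\n4,15\n5,14\n")
history = load_series(demo_csv)
X, y = make_windows(history, window=4)
```

With six historical values and a window of 4, this yields two samples. A real history file would be passed to `load_series` by path instead of the `io.StringIO` object, and the window length would be chosen to suit the indicator being predicted.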
