Non-cooperative target relative navigation motion estimation method and system based on multi-source information fusion

A multi-source information fusion and non-cooperative target technology, applied in the field of non-cooperative target relative navigation and motion estimation.

Embodiment Construction

[0077] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

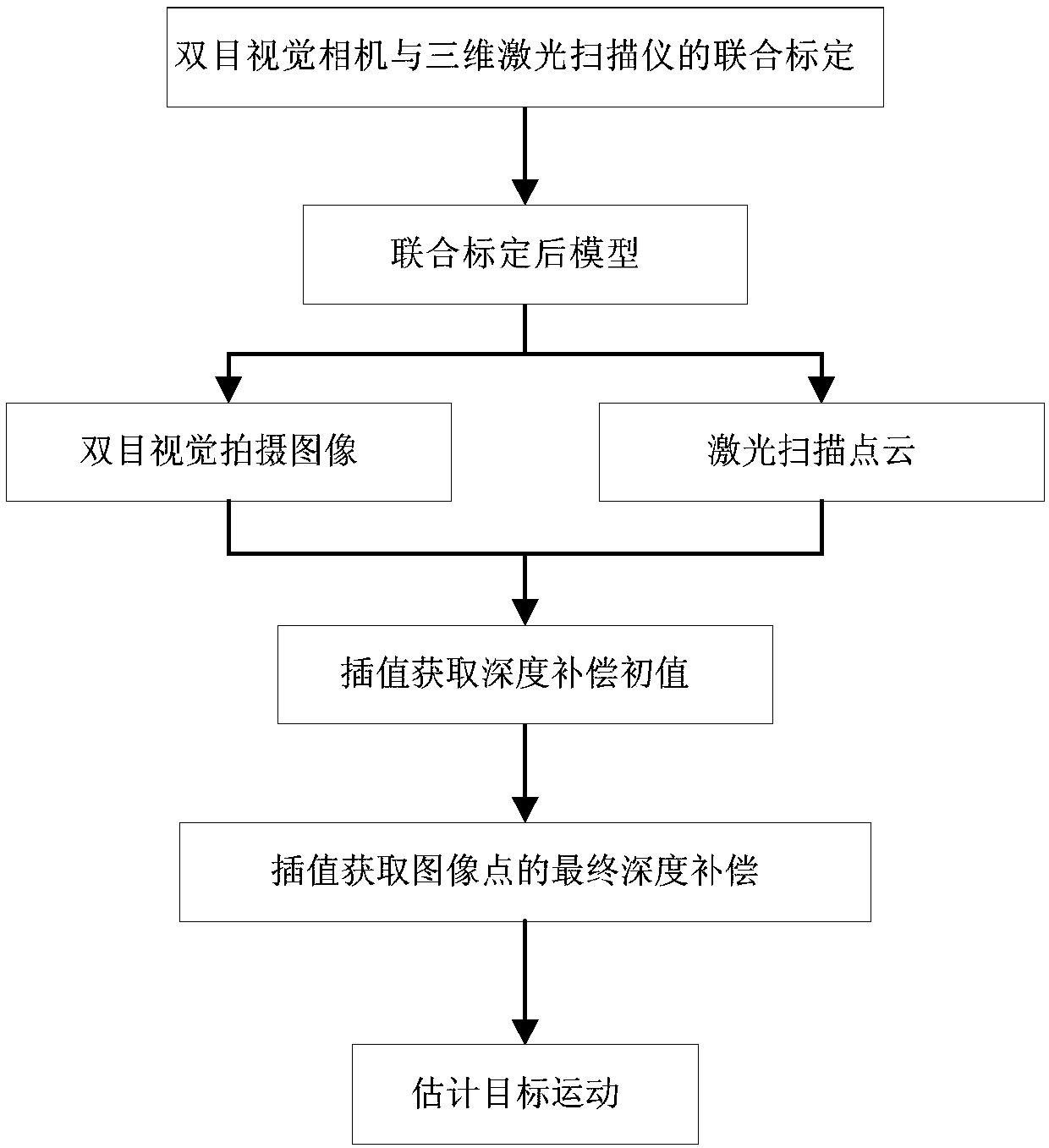

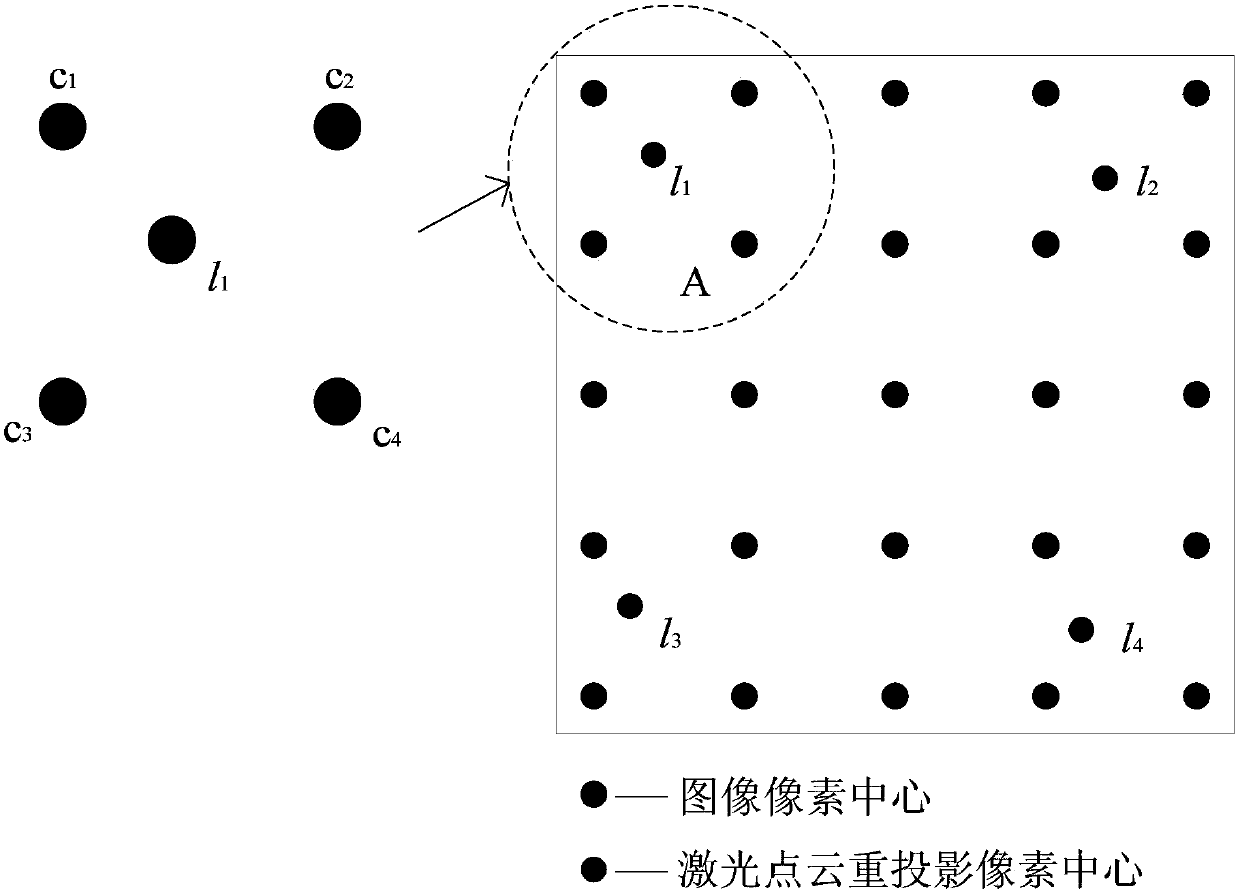

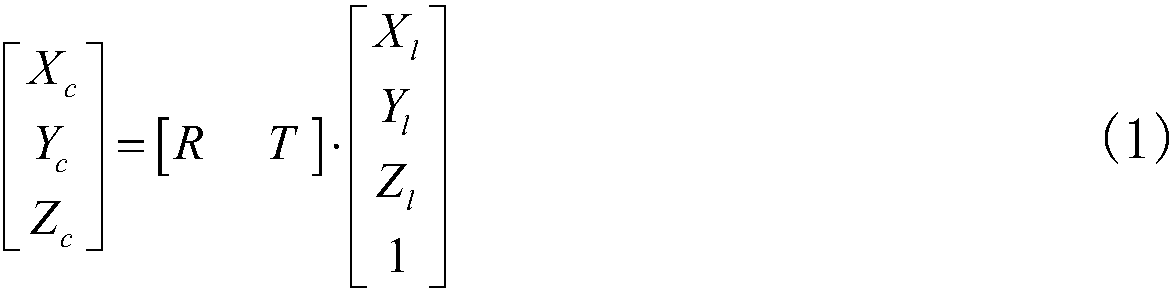

[0078] The basic idea of the present invention, a non-cooperative target relative navigation motion estimation method and system based on multi-source information fusion, is as follows. First, a joint calibration method for the vision-laser measurement system is given to obtain the relative pose relationship between the two sensor coordinate systems. Next, the image information from the visual camera and the point cloud information from the 3D laser scanning surface are acquired, and the two are fused through interpolation. Finally, the kinematic parameters are obtained from feature points to complete the 3D motion estimation of the target.

[0079] The binocular vision camera is installed on the installation platform to ensure that the spatial position and posture of the binocular ...
