Method for generating mouth shape video based on voice

A video and mouth-shape technology in the fields of computer vision and graphics processing. It addresses the problems that existing approaches cannot meet the basic requirements of video chat, are ineffective, and cannot guarantee real-time performance, and it achieves small memory usage, a small amount of computation, and real-time video.


Embodiment Construction

[0015] The present invention is further described below with reference to the accompanying drawings and embodiments; the present invention includes, but is not limited to, the following embodiments.

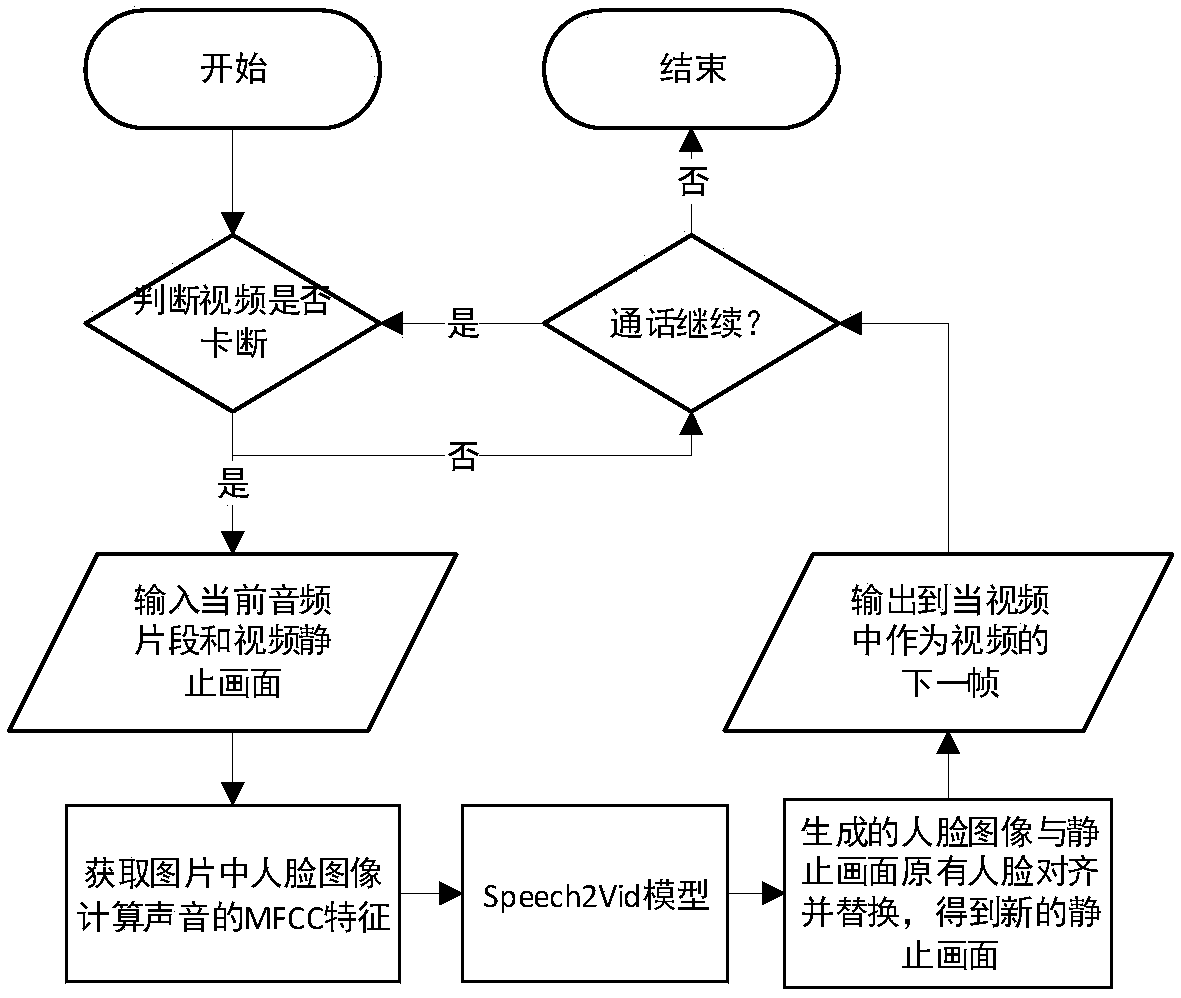

[0016] The present invention provides a method for generating lip video based on speech. As shown in Figure 1, the method mainly includes the following steps:

[0017] 1. First, check the fluency of the current video call to determine whether the video picture has frozen. Since the speaker's mouth shape depends only on the phoneme currently being spoken (the smallest unit of speech), a 0.35-second audio clip provides enough mouth-shape information. Furthermore, online video generally does not exceed 25 frames per second, and a person's mouth shape cannot change 25 times in one second. Therefore, the time interval is set to 0.35 seconds; that is, the current picture is compared with the picture from 0.35 seconds earlier. If they are completely consistent, it can be judged that the vide...
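The freeze check in step 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: frames are assumed to arrive with integer millisecond timestamps and to be compared as raw bytes, and the names `FreezeDetector`, `latest_audio_window`, and `INTERVAL_MS` are hypothetical. At 25 frames per second (40 ms per frame) no frame lies exactly 350 ms back, so the sketch compares against the newest frame that is at least 350 ms old, which is the frame 9 frames (360 ms) earlier.

```python
from collections import deque
from typing import Deque, Sequence, Tuple

INTERVAL_MS = 350  # 0.35 s comparison interval from the description


class FreezeDetector:
    """Hypothetical helper: reports a freeze when the current frame is
    identical to the newest frame that is at least INTERVAL_MS old."""

    def __init__(self, interval_ms: int = INTERVAL_MS) -> None:
        if interval_ms <= 0:
            raise ValueError("interval_ms must be positive")
        self.interval_ms = interval_ms
        # (timestamp_ms, frame_bytes) pairs in arrival order
        self._history: Deque[Tuple[int, bytes]] = deque()

    def push(self, timestamp_ms: int, frame: bytes) -> bool:
        """Store a frame and return True if the picture appears frozen."""
        self._history.append((timestamp_ms, frame))
        cutoff = timestamp_ms - self.interval_ms
        # Keep only the newest frame at or before the cutoff plus all later
        # frames; older frames can never be a reference again because the
        # cutoff only moves forward.
        while len(self._history) >= 2 and self._history[1][0] <= cutoff:
            self._history.popleft()
        ref_ts, ref_frame = self._history[0]
        if ref_ts > cutoff:
            return False  # less than one interval of history so far
        return ref_frame == frame  # "completely consistent" = identical


def latest_audio_window(
    samples: Sequence[float], sample_rate: int, window_ms: int = INTERVAL_MS
) -> Sequence[float]:
    """Hypothetical helper: return the most recent window_ms of audio,
    i.e. the 0.35 s clip used for mouth-shape information."""
    n = window_ms * sample_rate // 1000  # integer math avoids float rounding
    return samples[-n:] if n > 0 else samples[:0]
```

With 16 kHz audio (an assumed sample rate), the 0.35 s window is 350 × 16000 / 1000 = 5600 samples. If decoded frames are held as NumPy arrays instead of bytes, `numpy.array_equal` would replace the `==` comparison. Pruning the history this way bounds memory to roughly one interval of video, in line with the small-memory goal stated in the summary.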
