Human action recognition method based on depth motion map (DMM) generated by motion history point cloud (MHPC)

A technology combining point cloud generation and motion history, applied in the fields of computer vision and image processing. It addresses problems such as high computational complexity and cumbersome algorithms, and achieves the effects of reducing computational complexity, increasing robustness, and increasing numbers


Detailed Description of Embodiments

[0026] The human action recognition method provided by the present invention, based on depth motion maps generated from motion history point clouds, is described in detail below with reference to the accompanying drawings and specific embodiments.

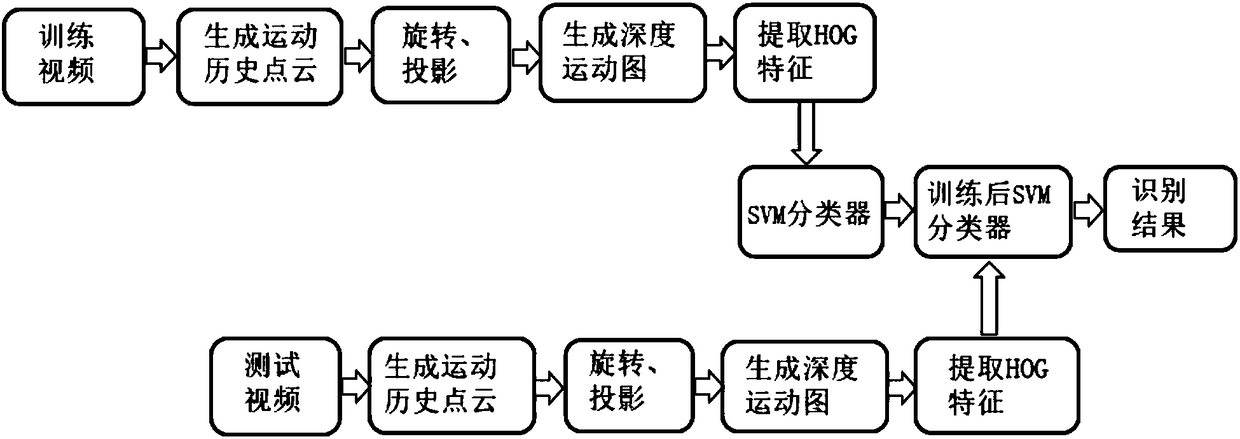

[0027] As shown in Figure 1, the human action recognition method provided by the present invention, based on depth motion maps generated from motion history point clouds, comprises the following steps, performed in order:

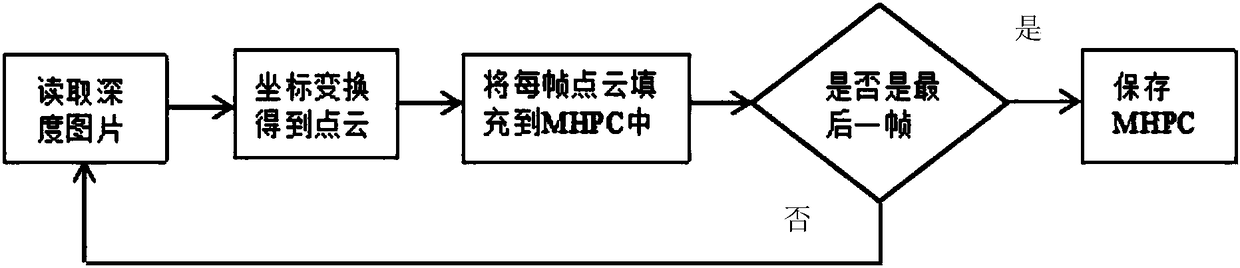

[0028] (1) Obtain the point cloud of each frame by coordinate mapping of every foreground-extracted depth image in a human action sample, and fill each frame's point cloud into the MHPC; repeat until all depth frames have been traversed, yielding the MHPC of the action, which records the spatial and temporal information of the action;

[0029] The specific method is as follows:

[0030] The human action samples are selected from the MSR Action3D database. The depth ima...
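The coordinate mapping and MHPC filling of step (1) can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: it assumes a pinhole camera model whose intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical (the source does not give them), that background pixels were set to zero during foreground extraction, and that each point in the MHPC is stored as `(x, y, z, t)` with `t` the frame index. The function names `depth_to_points` and `build_mhpc` are likewise hypothetical.

```python
import numpy as np


def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project the foreground pixels (depth > 0) of one depth frame to 3-D points.

    Assumption: pinhole camera with hypothetical intrinsics fx, fy, cx, cy,
    and background pixels already zeroed by foreground extraction.
    """
    v, u = np.nonzero(depth)               # row (v) and column (u) of foreground pixels
    z = depth[v, u].astype(np.float64)     # depth value of each foreground pixel
    x = (u - cx) * z / fx                  # horizontal coordinate
    y = (v - cy) * z / fy                  # vertical coordinate
    return np.stack([x, y, z], axis=1)     # shape (n_points, 3)


def build_mhpc(depth_frames, fx=1.0, fy=1.0, cx=0.0, cy=0.0):
    """Fill the point cloud of every frame into one motion history point cloud.

    Each point is stored as (x, y, z, t); using the frame index t as the time
    coordinate is an assumption of this sketch.
    """
    clouds = []
    for t, depth in enumerate(depth_frames):   # traverse all frames in order
        pts = depth_to_points(depth, fx, fy, cx, cy)
        time_col = np.full((pts.shape[0], 1), t, dtype=np.float64)
        clouds.append(np.hstack([pts, time_col]))
    if not clouds:
        return np.empty((0, 4))
    return np.vstack(clouds)                   # shape (total_points, 4)


# Usage with two tiny 2x2 "depth frames", one foreground pixel each.
frames = [np.array([[0, 2], [0, 0]]), np.array([[0, 0], [3, 0]])]
mhpc = build_mhpc(frames)
print(mhpc)  # rows (2, 0, 2, 0) and (0, 3, 3, 1)
```

Keeping the frame index as a fourth coordinate is one simple way to let a single point cloud carry both the spatial and the temporal information of an action; the default intrinsics of 1.0 and 0.0 are placeholders chosen only to make the arithmetic easy to follow.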
