Front-vehicle ranging method based on monocular vision and image segmentation under a vehicle-borne camera

A distance measurement method based on monocular vision, applied in the field of computer vision. It addresses problems such as difficulty ensuring safety, reduced detection efficiency, and the easy introduction of errors, and achieves the effects of saving depth-calculation time, safeguarding driving vision, and supporting reasonable driving judgments.

Embodiment Construction

[0046] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

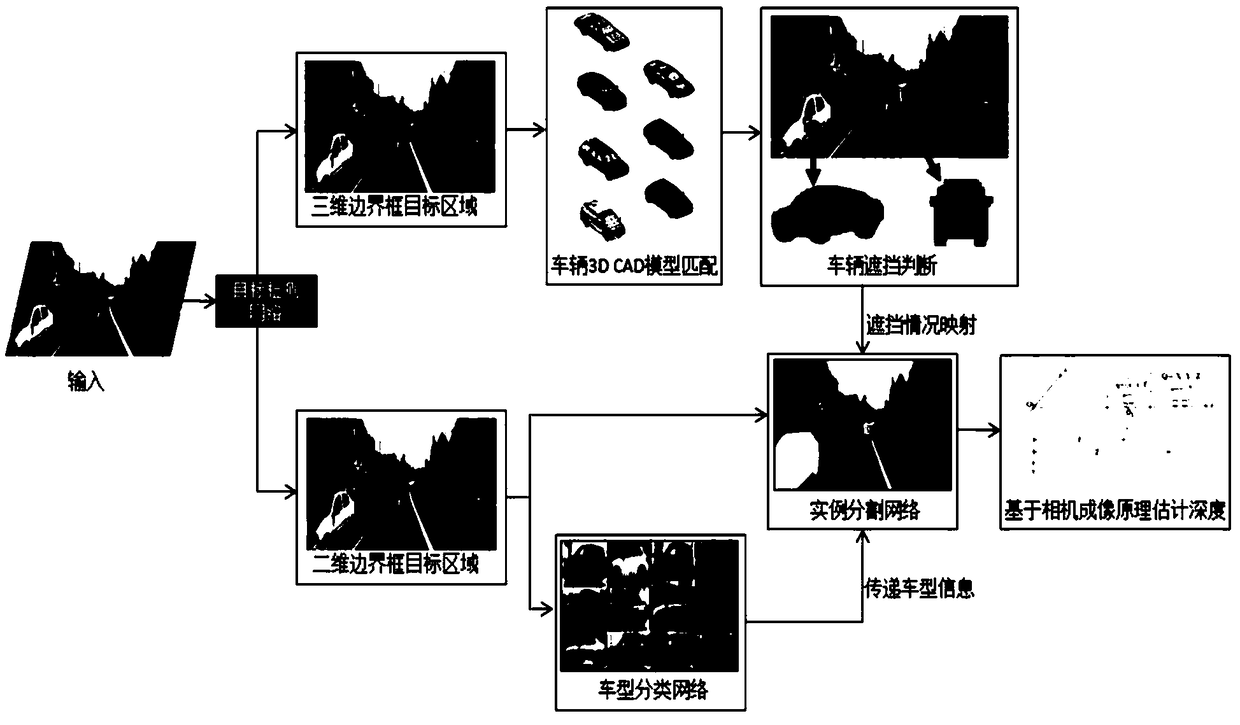

[0047] As shown in Figure 1, a front-vehicle ranging method based on monocular vision and image segmentation under a vehicle-mounted camera includes the following steps:

[0048] Step S1: read images frame by frame from the video stream captured by the vehicle-mounted camera;
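Step S1 can be sketched as a simple frame generator. This is a minimal illustration under stated assumptions, not the patent's implementation: it assumes the video source follows OpenCV's `cv2.VideoCapture` convention, where `read()` returns an `(ok, frame)` pair and `ok` is false once the stream ends. The function name `iter_frames` is hypothetical.

```python
from typing import Any, Iterator, Tuple


def iter_frames(capture: Any) -> Iterator[Tuple[int, Any]]:
    """Yield (frame_index, frame) pairs until the stream is exhausted.

    Assumption: `capture` behaves like cv2.VideoCapture, i.e. read()
    returns (ok, frame) and ok is False at end of stream or on failure.
    """
    index = 0
    while True:
        ok, frame = capture.read()
        if not ok:
            break  # end of stream or read failure: stop iterating
        yield index, frame
        index += 1
```

With OpenCV installed, one would pass `cv2.VideoCapture(source)` (a file path or camera index) and call its `release()` method when finished. Keeping the reader duck-typed lets the loop be tested without a camera.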

[0049] Step S2: perform object detection on the vehicles and extract each vehicle's position information in the image, including its two-dimensional bounding box information and three-dimensional bounding box information;
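The patent does not state how the three-dimensional bounding box of Step S2 is parameterised. A common convention (used, for example, in the KITTI benchmark) describes it by dimensions (h, w, l) in metres, the bottom-centre location (x, y, z) in camera coordinates with the y-axis pointing down, and a yaw angle about the camera's vertical axis. The sketch below assumes that convention and a pinhole intrinsic matrix `K`: it builds the eight box corners and projects them, so the min/max of the projected points gives the 2D box implied by the 3D box. The function names are hypothetical.

```python
import numpy as np


def box3d_corners(dims, location, yaw):
    """Return the 8 corners (shape 3x8) of a 3D box in camera coordinates.

    Assumed KITTI-style convention: dims = (h, w, l) in metres,
    location = (x, y, z) of the bottom-face centre, camera y-axis
    pointing down, yaw = rotation about the camera y-axis.
    """
    h, w, l = dims
    x_c = np.array([l, l, -l, -l, l, l, -l, -l]) / 2.0
    y_c = np.array([0, 0, 0, 0, -h, -h, -h, -h], dtype=float)  # bottom, then top
    z_c = np.array([w, -w, -w, w, w, -w, -w, w]) / 2.0
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])
    corners = rot @ np.vstack([x_c, y_c, z_c])
    return corners + np.asarray(location, dtype=float).reshape(3, 1)


def project_to_2d_box(corners, K):
    """Project 3D corners with intrinsics K and return (x1, y1, x2, y2).

    Assumes every corner lies in front of the camera (z > 0).
    """
    pts = K @ corners          # 3x8 homogeneous image points
    uv = pts[:2] / pts[2]      # perspective division
    return (uv[0].min(), uv[1].min(), uv[0].max(), uv[1].max())
```

Comparing this projected box with the detector's own 2D box is one way to check the two outputs for mutual consistency.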

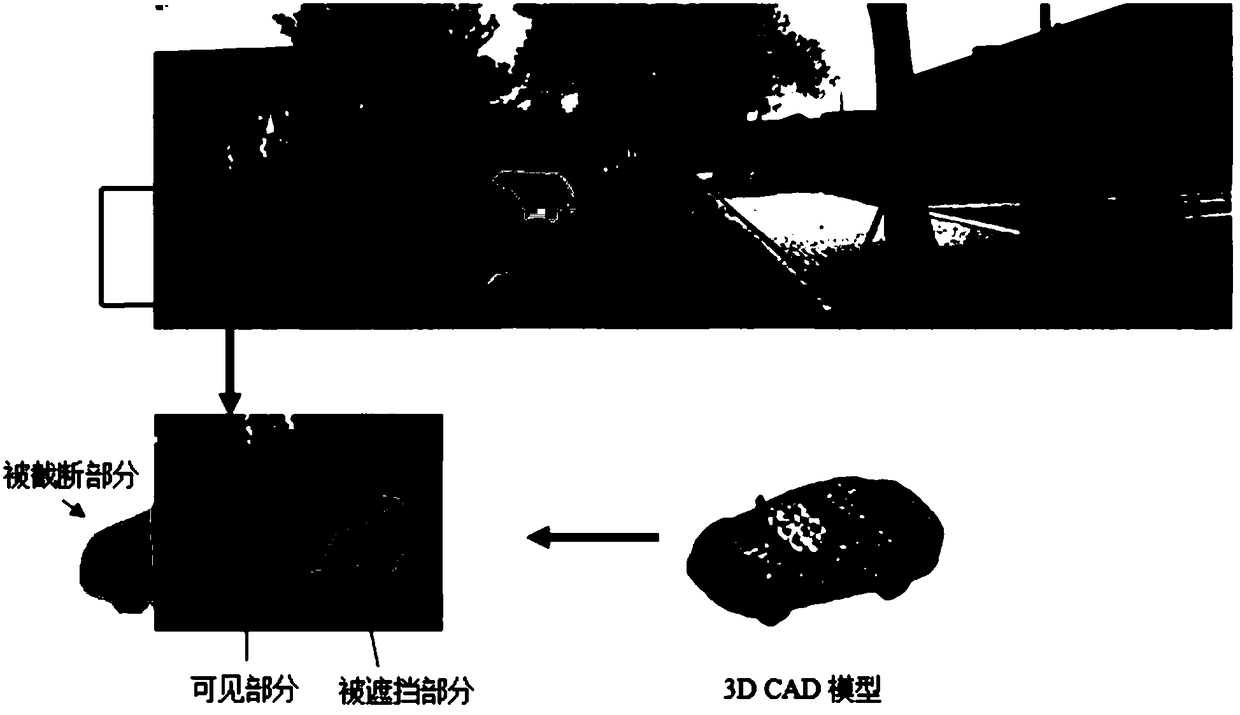

[0050] Step S3: according to the three-dimensional bounding box information, match the CAD model of the corresponding vehicle in a preset library of vehicle 3D CAD models;
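Step S3 does not specify the matching criterion. One plausible reading, assumed here, is a nearest-neighbour search over the CAD library using the box dimensions from Step S2. The library entries and their dimensions below are hypothetical placeholders, not data from the patent, and `match_cad_model` is an illustrative name. Dimensions are compared relative to each model's size so that the long length axis does not dominate the comparison.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CadModel:
    name: str      # hypothetical model identifier
    height: float  # metres
    width: float   # metres
    length: float  # metres


# Hypothetical library entries; real values would come from the CAD files.
CAD_LIBRARY = [
    CadModel("compact_car", 1.45, 1.70, 3.90),
    CadModel("sedan", 1.47, 1.82, 4.80),
    CadModel("suv", 1.75, 1.90, 4.70),
    CadModel("van", 1.95, 1.95, 5.00),
    CadModel("bus", 3.20, 2.55, 12.00),
]


def match_cad_model(dims, library=CAD_LIBRARY):
    """Return the library model whose (h, w, l) is closest to `dims`.

    Uses a relative (scale-normalised) Euclidean distance.
    """
    h, w, l = dims

    def cost(m):
        return math.sqrt(((h - m.height) / m.height) ** 2
                         + ((w - m.width) / m.width) ** 2
                         + ((l - m.length) / m.length) ** 2)

    return min(library, key=cost)
```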

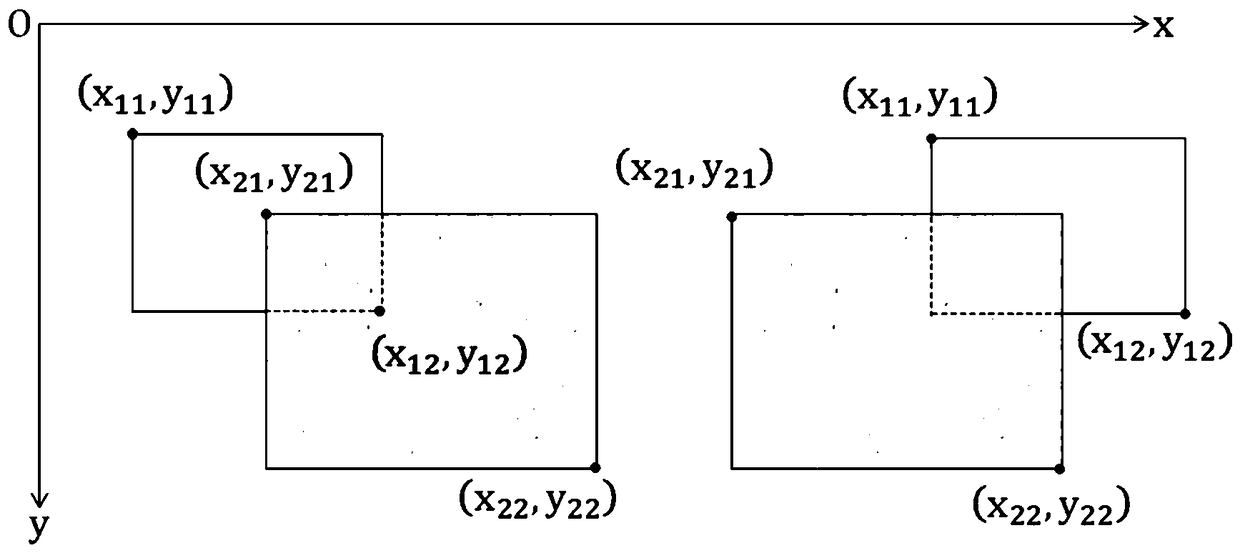

[0051] Step S4: determine the distance of overlapping vehicles according to their two-dimensional bounding box information, and determine the occlusion information of the veh...
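The visible text of Step S4 is truncated, so the exact rule is not known. As an illustrative sketch only, the code below finds pairs of overlapping 2D boxes and, assuming a flat road and a forward-facing camera, treats the box whose bottom edge sits lower in the image as the nearer vehicle and hence the occluder. The function names and the coverage ratio are hypothetical, not taken from the patent.

```python
def overlap_area(a, b):
    """Intersection area of two boxes given as (x1, y1, x2, y2) in pixels."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    return max(0.0, iw) * max(0.0, ih)


def occlusion_pairs(boxes):
    """For each pair of overlapping boxes, return (front, back, ratio).

    Heuristic assumption: on a flat road, the box whose bottom edge (y2)
    is lower in the image belongs to the nearer vehicle, which occludes
    the other. `ratio` is the fraction of the rear box covered by the overlap.
    """
    pairs = []
    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            inter = overlap_area(boxes[i], boxes[j])
            if inter <= 0:
                continue  # boxes do not overlap: no occlusion relation
            front, back = (i, j) if boxes[i][3] >= boxes[j][3] else (j, i)
            bx = boxes[back]
            back_area = (bx[2] - bx[0]) * (bx[3] - bx[1])
            pairs.append((front, back, inter / back_area))
    return pairs
```

The bottom-edge ordering is only a monocular heuristic; it can fail on slopes or when a vehicle's lower edge is itself hidden.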
