End-to-end unsupervised scene passable area cognition and understanding method

Keywords: traffic area, unsupervised technology. Applied in the field of traffic control, the method addresses problems such as unsatisfactory results, mutual interference, and adverse effects on smart cars.

Embodiment Construction

[0029] The present invention is described in further detail below in conjunction with the accompanying drawings:

[0030] As shown in Figure 1, an end-to-end unsupervised method for determining the road area of a scene includes the following steps:

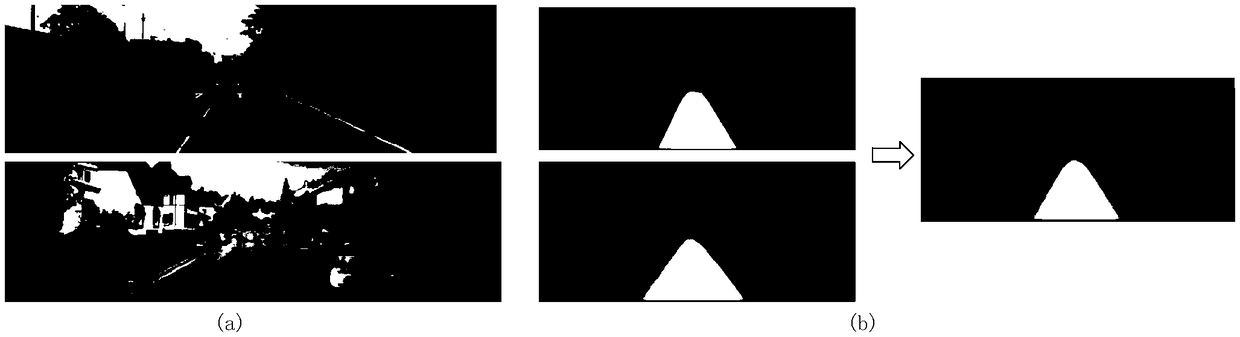

[0031] 1) Using the distribution pattern of the road area in space and in images, a road-location prior probability distribution map is constructed statistically and added directly to a convolutional layer of the detection network as a feature map. The location prior information is thereby encoded as a prior probability distribution map of the passable-area location that can be applied flexibly in real road traffic environments;

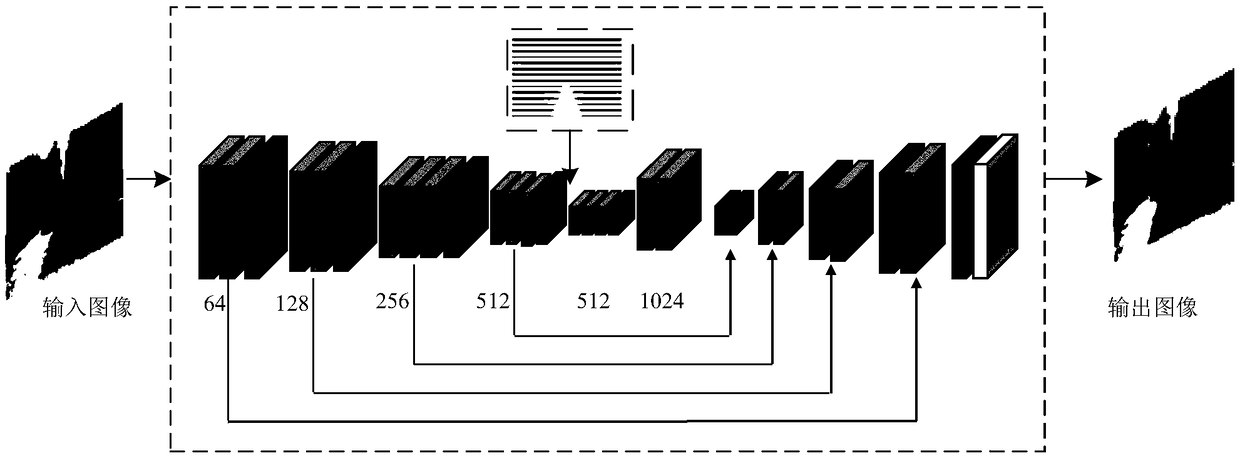

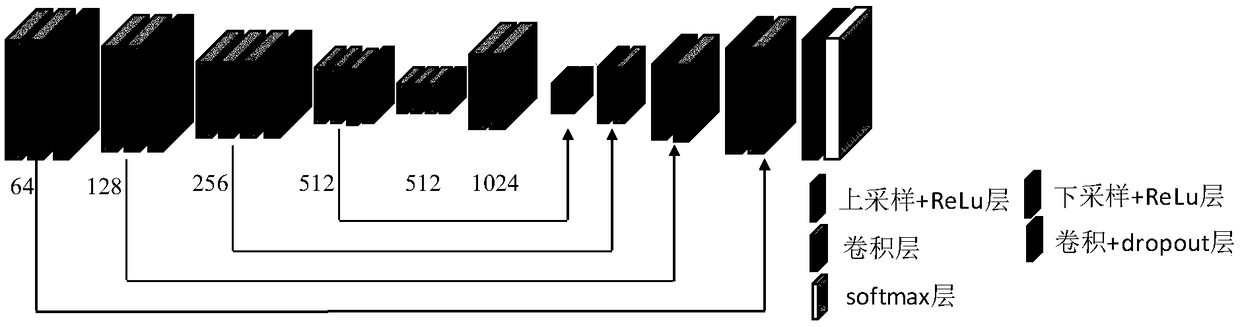
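
The statistical prior in step 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented procedure: it assumes the prior is the per-pixel empirical frequency of road labels over a set of equally sized binary road masks, and it reads "added to the convolutional layer as a feature map" as concatenating the prior as one extra channel. The names `road_location_prior` and `append_prior_channel` are hypothetical, and plain nested lists stand in for image tensors.

```python
from typing import List

Mask = List[List[int]]    # binary road mask: 1 = road pixel, 0 = anything else
Map = List[List[float]]   # one 2-D channel (height x width)


def road_location_prior(masks: List[Mask]) -> Map:
    """Per-pixel fraction of masks labelling that position as road.

    Assumption: the "statistics" in step 1 are modelled here as a simple
    empirical frequency over masks that all share one image size.
    """
    if not masks:
        raise ValueError("need at least one mask")
    h, w = len(masks[0]), len(masks[0][0])
    counts = [[0] * w for _ in range(h)]
    for m in masks:
        if len(m) != h or any(len(row) != w for row in m):
            raise ValueError("all masks must share the same size")
        for i in range(h):
            for j in range(w):
                counts[i][j] += m[i][j]
    n = len(masks)
    return [[c / n for c in row] for row in counts]


def append_prior_channel(features: List[Map], prior: Map) -> List[Map]:
    """Return a new channel-first stack with the prior appended as the last channel.

    Assumption: "added as a feature map" is interpreted as channel concatenation;
    the input list is not modified.
    """
    return features + [prior]


if __name__ == "__main__":
    masks = [[[0, 0], [1, 1]], [[0, 1], [1, 1]]]
    prior = road_location_prior(masks)
    print(prior)  # [[0.0, 0.5], [1.0, 1.0]]
    stacked = append_prior_channel([[[0.2, 0.3], [0.4, 0.5]]], prior)
    print(len(stacked))  # 2 channels: original feature map + prior
```

In the example, the bottom row is road in every mask and so receives probability 1.0. Concatenating the prior, rather than multiplying it into the image, leaves the network free to learn how much weight the prior deserves, which is one plausible reading of adding it "as a feature map".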

[0032] 2) Because cognition and understanding of the passable area is essentially a road-surface detection and segmentation problem, a new deep network architecture, the UC-FCN network, is constructed by combining the fully convolutional network (FCN) and U-NET, as the main network for ...
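
The description is truncated at this point and does not state how UC-FCN combines the two networks, so the sketch below only contrasts the two skip-connection mechanisms the name refers to. FCN fuses an upsampled coarse score map with a finer skip map by element-wise addition, while U-Net concatenates encoder feature maps onto upsampled decoder features along the channel axis. Nearest-neighbour 2x upsampling stands in for a learned transposed convolution, and the function names `upsample2x`, `fcn_fuse` and `unet_fuse` are hypothetical; this is not the UC-FCN design itself.

```python
from typing import List

Map = List[List[float]]  # one 2-D channel (height x width)


def upsample2x(x: Map) -> Map:
    """Nearest-neighbour 2x upsampling (stand-in for a learned transposed conv)."""
    out: Map = []
    for row in x:
        wide = [v for v in row for _ in range(2)]  # repeat each value horizontally
        out.append(wide)
        out.append(list(wide))                     # repeat the row vertically
    return out


def _same_shape(a: Map, b: Map) -> bool:
    return len(a) == len(b) and all(len(ra) == len(rb) for ra, rb in zip(a, b))


def fcn_fuse(coarse: Map, skip: Map) -> Map:
    """FCN-style fusion: upsample the coarse map, then add the skip map element-wise."""
    up = upsample2x(coarse)
    if not _same_shape(up, skip):
        raise ValueError("skip map must be twice the size of the coarse map")
    return [[a + b for a, b in zip(ru, rs)] for ru, rs in zip(up, skip)]


def unet_fuse(coarse: List[Map], skip: List[Map]) -> List[Map]:
    """U-Net-style fusion: upsample every decoder channel, then concatenate encoder channels."""
    up = [upsample2x(c) for c in coarse]
    if any(not _same_shape(up[0], s) for s in skip):
        raise ValueError("encoder channels must match the upsampled decoder size")
    return up + skip


if __name__ == "__main__":
    skip = [[0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]]
    print(fcn_fuse([[1.0, 2.0]], skip))         # one channel, values summed
    print(len(unet_fuse([[[1.0, 2.0]]], [skip])))  # 2 channels, stacked
```

The practical difference is that summation keeps the channel count fixed and forces the two maps into the same feature space, whereas concatenation grows the channel count and lets the following convolution learn how to mix coarse and fine information.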
