Indoor pose estimation method for a single image based on heat maps

A technology for indoor object pose estimation, applied in computing, design optimization/simulation, biological neural network models, etc. It addresses problems such as the inability to handle smooth, textureless objects, accuracy that does not meet application requirements, and sensitivity to illumination and occlusion. It achieves a wide application range, improved localization accuracy, and robustness to occlusion.

Embodiment Construction

[0037] To make the technical solution of the present invention clearer, the invention is described in more detail below with reference to examples; however, the scope of protection of the invention is not limited to the following examples.

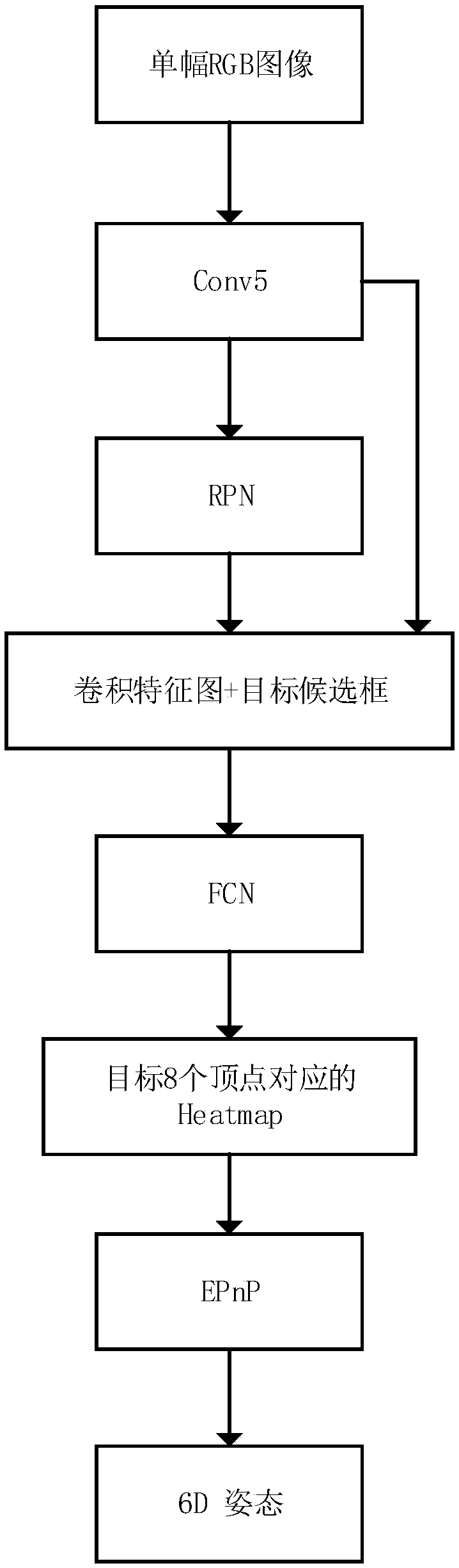

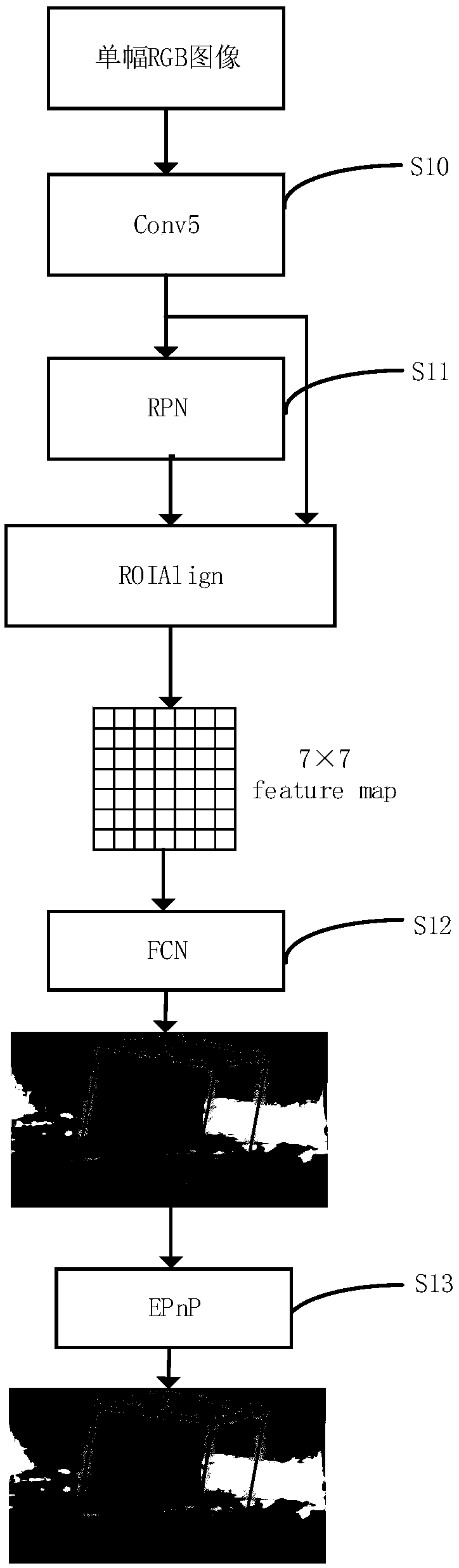

[0038] Given a single RGB image, and using data synthesized from ShapeNet as the CAD model library, the method estimates the pose of the target object in a single image of an indoor scene. The overall flow is shown in Figure 2:

[0039] S10: Extract features of the target object using the CONV5 convolutional neural network;

[0040] S11: Predict target candidate boxes (objects in the indoor scene) using a Region Proposal Network (RPN);

[0041] S12: From the target object features and the candidate boxes obtained above, use a fully convolutional network (FCN) to predict a heat map for each of the 8 vertices of the target object;

[0042] S13: Compute the 6D pose of the object using EPnP based on...
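Step S13 is cut off in this excerpt, so the following is only a sketch, under stated assumptions, of how the 8 heat maps from S12 could feed an EPnP pose solve. It assumes that each heat map has a single peak, that a heat map covers its candidate box linearly, and that the 8 vertices are the corners of the object's 3D bounding box taken from the matching CAD model; `decode_heatmaps` and `epnp_single_kernel` are hypothetical names, and the box format `(x0, y0, x1, y1)` is also an assumption. The solver implements only the single-kernel (N = 1) case of EPnP without Gauss-Newton refinement, which is exact for noise-free correspondences; it is not the patent's implementation. In practice, OpenCV's `cv2.solvePnP` with `flags=cv2.SOLVEPNP_EPNP` would be the usual choice.

```python
import numpy as np
from itertools import combinations


def decode_heatmaps(heatmaps, box):
    """Arg-max of each (H, W) heat map, mapped into image pixels.

    heatmaps: (8, H, W) array, one map per vertex (assumed layout).
    box: candidate box (x0, y0, x1, y1) in pixels (assumed format).
    """
    k, h, w = heatmaps.shape
    rows, cols = np.divmod(heatmaps.reshape(k, -1).argmax(axis=1), w)
    x0, y0, x1, y1 = box
    # Assumption: the heat map spans the box linearly; use cell centres.
    u = x0 + (cols + 0.5) * (x1 - x0) / w
    v = y0 + (rows + 0.5) * (y1 - y0) / h
    return np.stack([u, v], axis=1)


def epnp_single_kernel(pw, uv, K):
    """Return (R, t) with camera point = R @ pw_i + t, from n >= 6 matches."""
    n = len(pw)
    # Control points: centroid plus the three principal axes of pw.
    c0 = pw.mean(axis=0)
    centred = pw - c0
    eigvals, eigvecs = np.linalg.eigh(centred.T @ centred / n)
    cw = np.vstack([c0, c0 + np.sqrt(eigvals)[:, None] * eigvecs.T])
    # Barycentric weights: pw_i = sum_j alphas[i, j] * cw[j], rows sum to 1.
    a123 = np.linalg.solve((cw[1:] - c0).T, centred.T).T
    alphas = np.column_stack([1.0 - a123.sum(axis=1), a123])
    # Two linear equations per match in the 12 unknown camera-frame
    # control-point coordinates, written with normalised image coordinates.
    xn = np.linalg.solve(K, np.column_stack([uv, np.ones(n)]).T).T
    M = np.zeros((2 * n, 12))
    for j in range(4):
        M[0::2, 3 * j] = alphas[:, j]
        M[0::2, 3 * j + 2] = -alphas[:, j] * xn[:, 0]
        M[1::2, 3 * j + 1] = alphas[:, j]
        M[1::2, 3 * j + 2] = -alphas[:, j] * xn[:, 1]
    # N = 1 case: the solution is the last right singular vector, up to scale.
    cc = np.linalg.svd(M)[2][-1].reshape(4, 3)
    # Fix the scale so camera-frame control-point distances match world ones.
    pairs = list(combinations(range(4), 2))
    dw = np.array([np.linalg.norm(cw[a] - cw[b]) for a, b in pairs])
    dc = np.array([np.linalg.norm(cc[a] - cc[b]) for a, b in pairs])
    pc = alphas @ (cc * (dc @ dw) / (dc @ dc))
    if pc[:, 2].mean() < 0:  # the object must be in front of the camera
        pc = -pc
    # Absolute orientation (Kabsch) between the world and camera points.
    mw, mc = pw.mean(axis=0), pc.mean(axis=0)
    U, _, Vt = np.linalg.svd((pw - mw).T @ (pc - mc))
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:  # reject a reflection
        Vt[-1] *= -1
        R = Vt.T @ U.T
    return R, mc - R @ mw


# Synthetic check with made-up values: a 1.0 x 0.6 x 0.4 box seen at ~4 units.
corners = np.array([[x, y, z] for x in (-0.5, 0.5) for y in (-0.3, 0.3)
                    for z in (-0.2, 0.2)])
ca, sa, cb, sb = np.cos(0.3), np.sin(0.3), np.cos(0.5), np.sin(0.5)
R_true = (np.array([[ca, -sa, 0], [sa, ca, 0], [0, 0, 1]])
          @ np.array([[1, 0, 0], [0, cb, -sb], [0, sb, cb]]))
t_true = np.array([0.1, -0.2, 4.0])
K = np.array([[500.0, 0, 320], [0, 500.0, 240], [0, 0, 1]])
proj = (corners @ R_true.T + t_true) @ K.T
uv = proj[:, :2] / proj[:, 2:]
R_est, t_est = epnp_single_kernel(corners, uv, K)
```

In a full pipeline, the `(8, 2)` output of `decode_heatmaps` would be passed as `uv`, in the same vertex order as the 3D corners. Eight box corners are non-coplanar, which keeps the control-point basis invertible and, for a generic view, leaves `M` with a one-dimensional null space; with noisy heat-map peaks, the full EPnP (N up to 4) plus refinement would likely be more robust.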
