Hardware architecture of accelerated artificial intelligence processor

A hardware architecture for artificial intelligence processing, applied in the field of artificial intelligence, that addresses the inapplicability of existing approaches, achieves high performance, improves scalability, and accelerates artificial intelligence workloads.

Embodiment Construction

[0023] The present invention is now described in further detail with reference to the accompanying drawings.

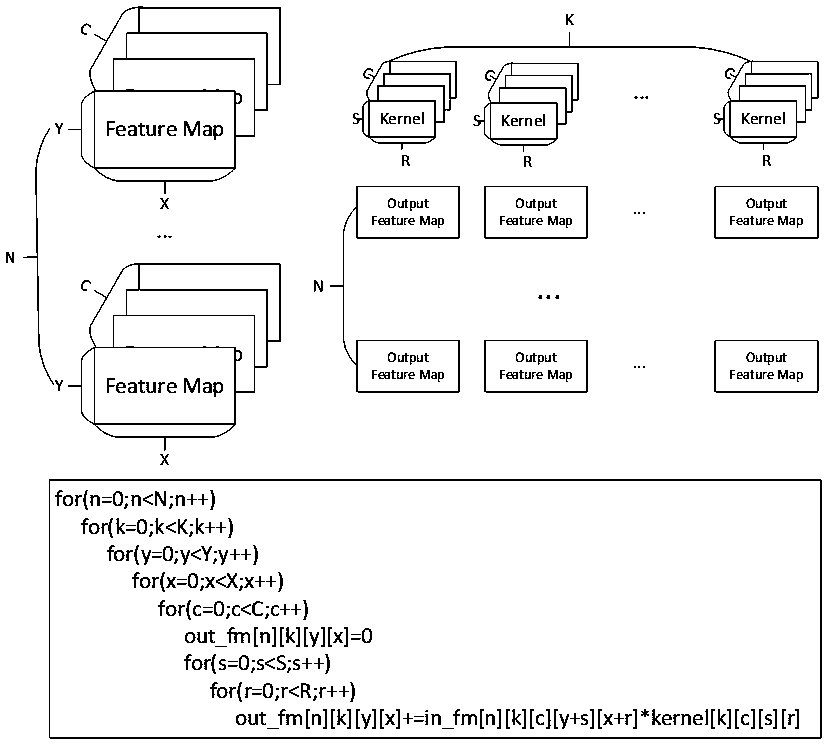

[0024] As shown in Figure 1, an artificial intelligence feature map can usually be described as a four-dimensional tensor [N, C, Y, X]. The four dimensions are the feature map dimensions X and Y, the channel dimension C, and the batch dimension N. A kernel can be a four-dimensional tensor [K, C, S, R]. The AI task is, given the input feature map tensor and the kernel tensor, to compute the output tensor [N, K, Y, X] according to the formula in Figure 1.
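The formula in Figure 1 is not reproduced in this text, so the following is only a minimal sketch of how such an output tensor could be computed, assuming the conventional direct convolution. The function name `conv2d_nchw`, the stride of 1, the zero padding that keeps the output at Y by X, and the pairing of S with rows (Y) and R with columns (X) are all assumptions for illustration, not details taken from the patent.

```python
def conv2d_nchw(fmap, kernel):
    """Direct convolution over nested lists.

    fmap   has shape [N][C][Y][X]
    kernel has shape [K][C][S][R]
    Returns a tensor of shape [N][K][Y][X].

    Assumptions (not from the source): stride 1, zero padding so the
    output keeps the Y x X size, S indexes rows and R indexes columns.
    """
    N, C = len(fmap), len(fmap[0])
    Y, X = len(fmap[0][0]), len(fmap[0][0][0])
    K = len(kernel)
    S, R = len(kernel[0][0]), len(kernel[0][0][0])
    pad_y, pad_x = S // 2, R // 2

    out = [[[[0] * X for _ in range(Y)] for _ in range(K)] for _ in range(N)]
    for n in range(N):                      # batch
        for k in range(K):                  # output channel
            for y in range(Y):
                for x in range(X):
                    acc = 0
                    for c in range(C):      # reduce over input channels
                        for s in range(S):  # and over the kernel window
                            for r in range(R):
                                iy = y + s - pad_y
                                ix = x + r - pad_x
                                # Positions outside the map count as zero.
                                if 0 <= iy < Y and 0 <= ix < X:
                                    acc += fmap[n][c][iy][ix] * kernel[k][c][s][r]
                    out[n][k][y][x] = acc
    return out


# Usage: one 2x2 single-channel map scaled by a 1x1 kernel.
fmap = [[[[1, 2], [3, 4]]]]   # [N=1, C=1, Y=2, X=2]
kernel = [[[[2]]]]            # [K=1, C=1, S=1, R=1]
result = conv2d_nchw(fmap, kernel)
```

The seven nested loops make the reduction explicit: N, K, Y and X index the output, while C, S and R are summed away.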

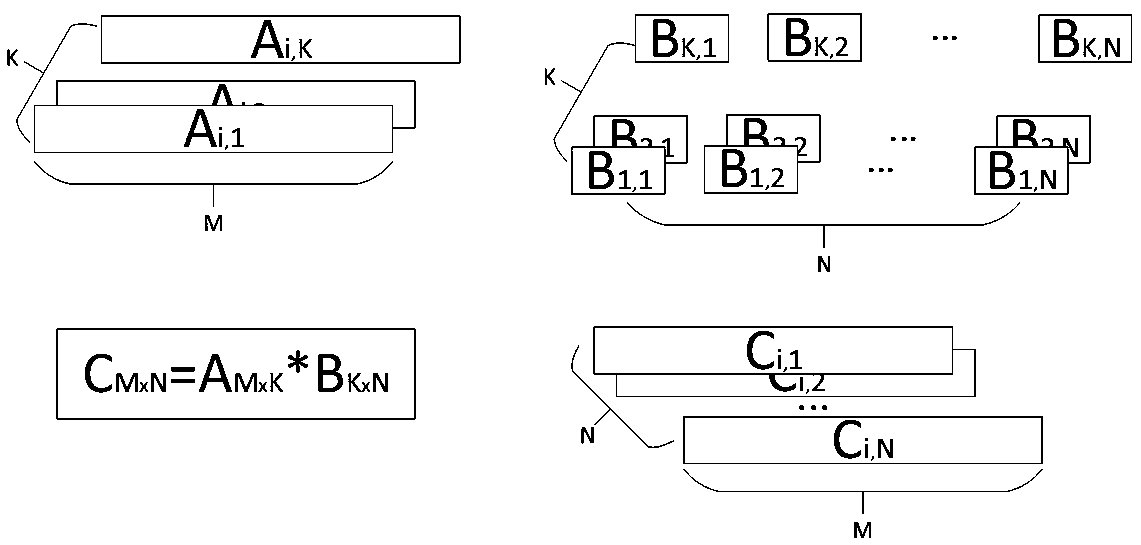

[0025] Another important operation in AI is matrix multiplication, which can also be mapped to feature map processing. In Figure 2, matrix A can be mapped to tensor [1, K, 1, M], matrix B can be mapped to tensor [N, K, 1, 1], and the result C is tensor [1, N, 1, M].
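The mapping above amounts to treating the shared dimension K as the channel dimension and applying a 1x1 kernel. The sketch below illustrates this under the assumption, not stated in the source, that A is an M x K matrix and B is a K x N matrix stored as nested lists; the function name `matmul_as_pointwise_conv` is hypothetical.

```python
def matmul_as_pointwise_conv(A, B):
    """Compute C = A x B through the feature-map mapping.

    Assumption (not from the source): A is M x K and B is K x N,
    so C is M x N. Returns the output tensor and C.
    """
    M, K, N = len(A), len(B), len(B[0])

    # Feature map [1, K, 1, M]: K plays the role of the channel dimension.
    fmap = [[[[A[m][k] for m in range(M)]] for k in range(K)]]

    # Kernel [N, K, 1, 1]: one 1x1 filter per column of B.
    kernel = [[[[B[k][n]]] for k in range(K)] for n in range(N)]

    # A 1x1 convolution reduces over channels only; output is [1, N, 1, M].
    out = [[[[sum(fmap[0][k][0][m] * kernel[n][k][0][0] for k in range(K))
              for m in range(M)]]
            for n in range(N)]]

    # Read C[m][n] back out of the output tensor.
    C = [[out[0][n][0][m] for n in range(N)] for m in range(M)]
    return out, C


# Usage: a 3x2 matrix times a 2x2 matrix.
A = [[1, 2], [3, 4], [5, 6]]
B = [[7, 8], [9, 10]]
out, C = matmul_as_pointwise_conv(A, B)
```

Under this layout the output tensor holds C with its two indices swapped (output channel n, position m), which is why the final step reads it back as `C[m][n]`.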

[0026] In addition, there are other operations, such as normalization and activation, which can be supported in general-purpose hardware operators.

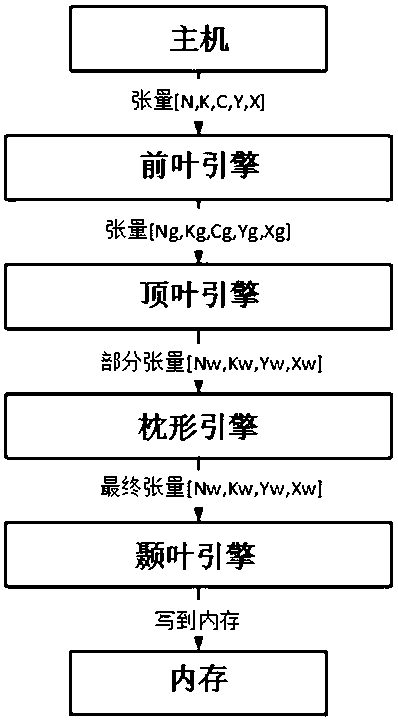

[0027] We propose a hardwar...
