An online soft-margin kernel learning algorithm based on step size control

A soft-margin kernel learning technique, applied in the field of online soft-margin kernel learning algorithms. It addresses the problems that existing classification methods cannot efficiently handle data stream classification and that online learning algorithms cannot suppress the influence of noise, with the effects of improving classification accuracy, reducing computational complexity, and reducing running time.

Examples

Embodiment 1

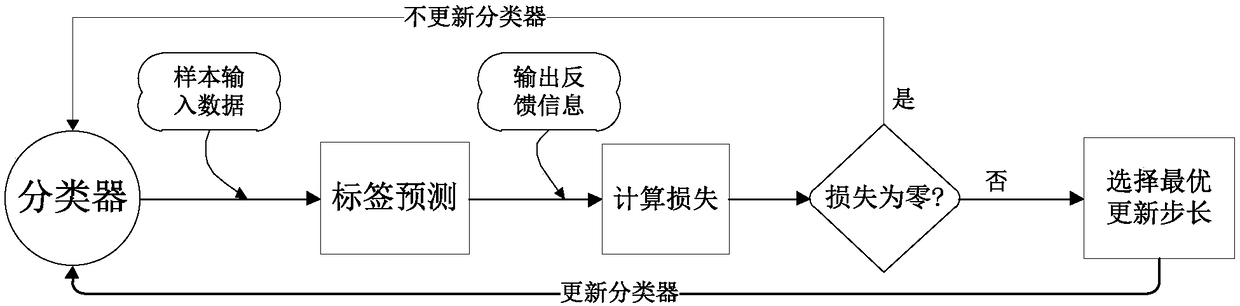

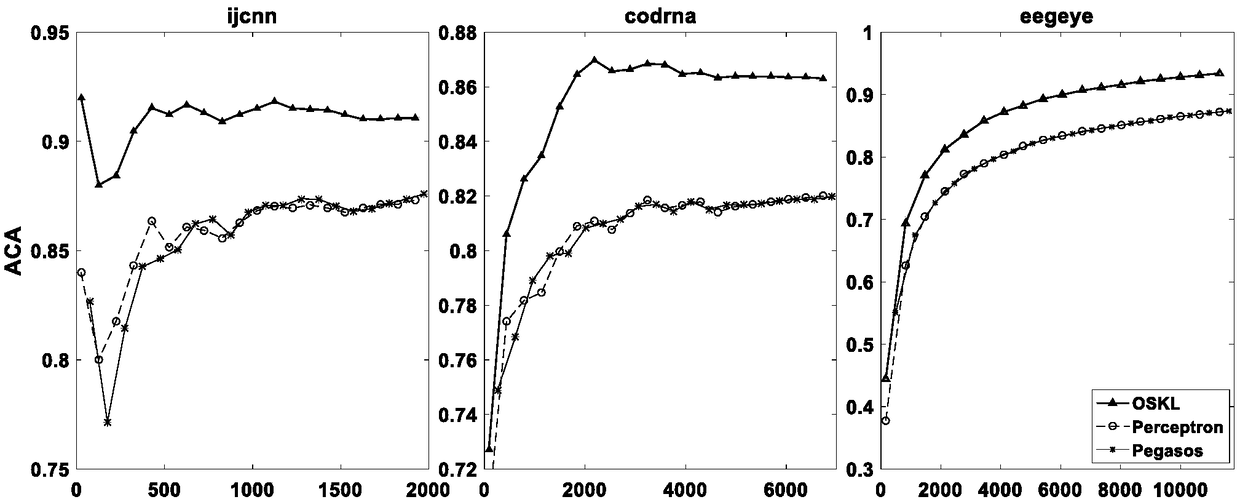

[0033] Embodiment 1: Online classification experiments on the original benchmark data sets ijcnn, codrna, and eegeye are taken as an example for illustration. Figure 1 is a schematic diagram of the online soft-margin kernel learning algorithm based on step size control provided according to an embodiment of the present invention. The online learning algorithm includes the following steps:

[0034] Step 1: Initialize model parameters, decision function and model kernel function. The specific steps are:

[0035] Initialize the model threshold parameter $C = 0.05$, initialize the decision function of the binary classification problem as $f_0 = 0$, and specify the Gaussian kernel function as the model kernel function, namely $k(x_i, x_j) = \exp(-\|x_i - x_j\|^2 / d)$, where $d$ is taken as the dimensionality of the sample input $x$.
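The initialization in Step 1 can be sketched as follows. This is a minimal illustration, not the patent's implementation: the names `gaussian_kernel`, `C`, and `f0` are hypothetical, and inputs are assumed to be plain sequences of numbers of equal length.

```python
import math

# Threshold parameter as stated in the embodiment.
C = 0.05


def gaussian_kernel(x_i, x_j):
    """Gaussian kernel k(x_i, x_j) = exp(-||x_i - x_j||^2 / d).

    d is the dimensionality of the input sample, as in paragraph [0035].
    """
    d = len(x_i)
    sq_dist = sum((a - b) ** 2 for a, b in zip(x_i, x_j))
    return math.exp(-sq_dist / d)


def f0(x):
    """Initial decision function f_0 = 0 (assumed to return 0 for any input)."""
    return 0.0
```

Dividing the squared distance by `d` makes the kernel width scale with the input dimensionality, so no separate bandwidth parameter has to be tuned per data set.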

[0036] Step 2: collect the data stream, and use the classification decision function to predict the category label of the data stream sample. The specific...

Embodiment 2

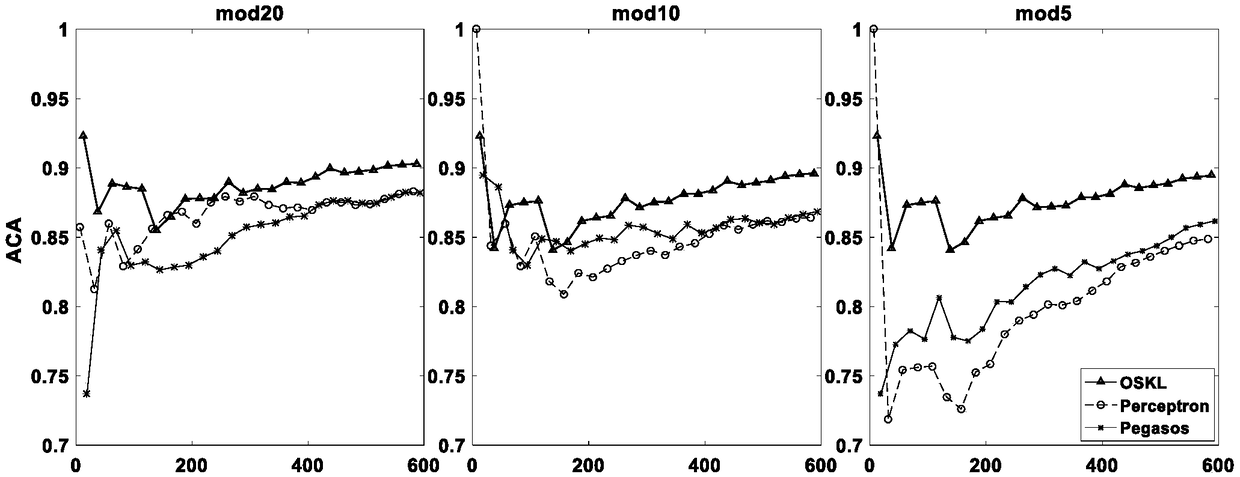

[0046] Embodiment 2: Noise labels are added to the original benchmark data sets ijcnn, codrna, and eegeye, and an online classifier is trained on the data sets containing noise labels. The difference from Embodiment 1 is that, in Step 1 of this embodiment, 30% of the data set is randomly selected as a test set, and noise labels are added to the remaining data to construct a training set. Specifically, the sample indices are taken modulo 20, modulo 10, and modulo 5, respectively, and the labels of the samples whose remainder is 0 are multiplied by -1 to obtain the noisy-label data.
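The label-flipping rule described above can be sketched as follows. This is an illustration under assumptions: the function name `flip_labels_by_index` is hypothetical, labels are assumed to be in {-1, +1}, and sample indices are assumed to start at 0 (the source does not state the indexing convention). The random 30% test split is not shown.

```python
def flip_labels_by_index(labels, modulus):
    """Return a copy of `labels` with every label whose index is a
    multiple of `modulus` multiplied by -1.

    Assumes zero-based indices and labels in {-1, +1}.
    """
    return [-y if i % modulus == 0 else y for i, y in enumerate(labels)]


# Example: 20 training labels, all +1, flipped at three noise levels.
clean = [1] * 20
noisy_mod20 = flip_labels_by_index(clean, 20)
noisy_mod10 = flip_labels_by_index(clean, 10)
noisy_mod5 = flip_labels_by_index(clean, 5)
```

On a large training set, moduli of 20, 10, and 5 flip roughly 5%, 10%, and 20% of the labels, respectively, so a smaller modulus corresponds to a higher noise level.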

[0047] Figures 3-5 show the average classification performance (average test accuracy, ACA) of the online classifiers KernelPerceptron, Pegasos and OSKL, trained on the data sets ijcnn, codrna and eegeye with noisy labels and evaluated on the noise-free test set formed from the original 30% of the data. The experimental results show that as the noise of the training samples indexed by mod20, mod10 and mod5 increases, the classifica...
