Aircraft landing pose estimation method based on visual-inertial tight coupling

A pose estimation method in the fields of aircraft technology and integrated navigation. It addresses problems of existing approaches, such as long and unpredictable computation time and poor solution accuracy. It achieves strong robustness, low design and maintenance costs, and high pose accuracy.


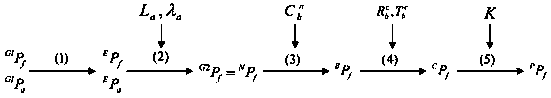

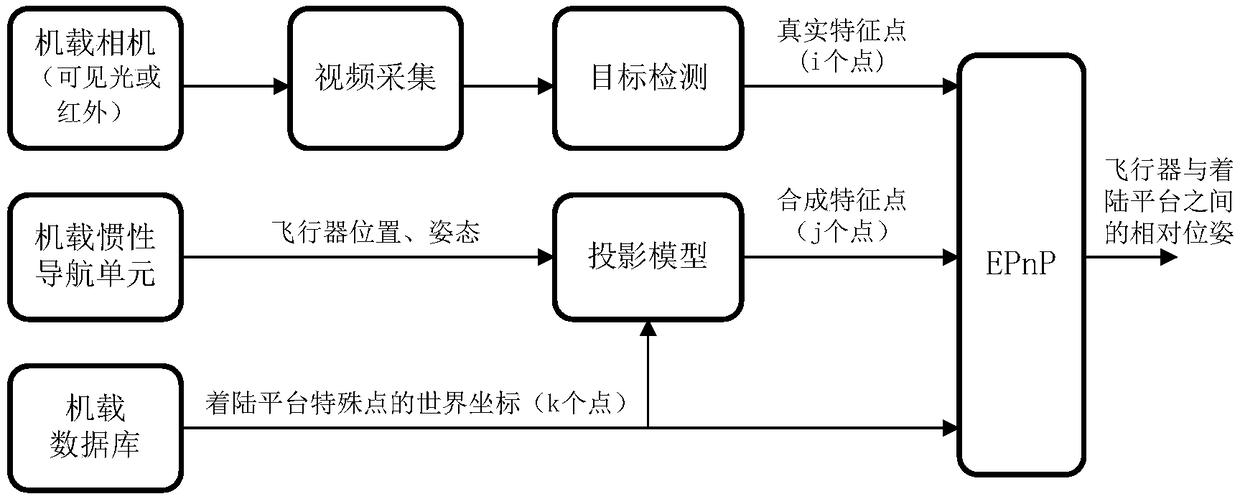

[0015] As mentioned above, the aircraft landing pose estimation method based on visual-inertial tight coupling of the present invention mainly includes the following processes:

[0016] 1. Framework of aircraft landing pose estimation method based on visual-inertial tight coupling

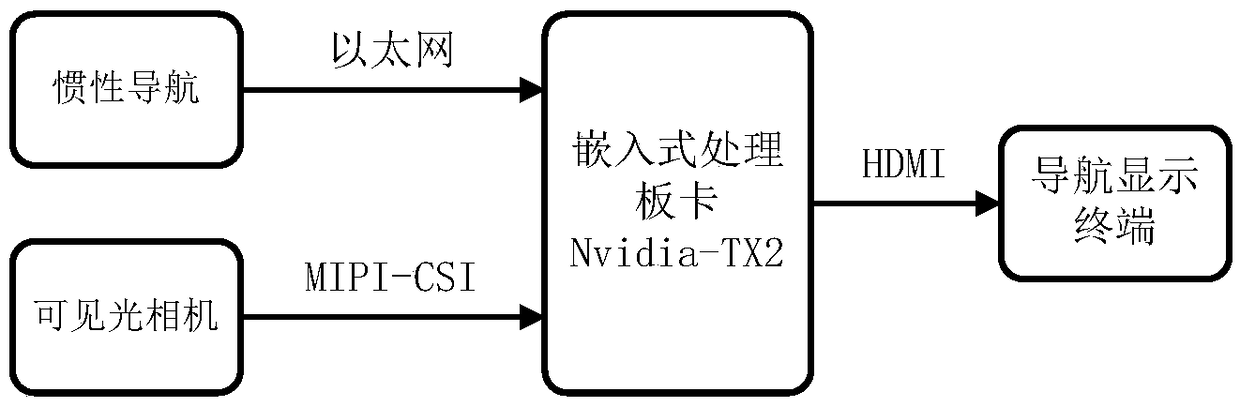

[0017] A complete vision-aided inertial navigation system comprises image sensors, an inertial measurement unit, an onboard database, a graphics and image processing component, and a navigation display terminal. Together these support pose estimation during the approach and landing phases. The image sensor may be a visible-light camera (VIS), a short-wave infrared camera (SWIR), a long-wave infrared camera (LWIR), or a combination thereof, and is used to acquire downward-looking or forward-downward-looking images. The inertial measurement unit may be an inertial navigation system (INS), an attitude and heading reference system (AHRS), or a similar unit, and is used to obtain the motion state of the aircraft. The airborne databas...
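The component options listed in [0017] can be captured in a small configuration object. The sketch below is a minimal illustration only. Every name in it (`VisionAidedInsConfig`, `ImageSensor`, `InertialUnit`, `ViewGeometry`, `is_complete`) is hypothetical and is not part of the described method. It encodes only the sensor choices and component groups named in the text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet

# Hypothetical names for illustration only; not taken from the patent.


class ImageSensor(Enum):
    """Imaging sensors named in the description; any combination may be fitted."""
    VIS = "visible-light camera"
    SWIR = "short-wave infrared camera"
    LWIR = "long-wave infrared camera"


class InertialUnit(Enum):
    """Units that supply the aircraft motion state."""
    INS = "inertial navigation system"
    AHRS = "attitude and heading reference system"


class ViewGeometry(Enum):
    """Camera viewing direction relative to the aircraft."""
    DOWNWARD = "downward-looking"
    FRONT_DOWN = "forward-downward-looking"


@dataclass(frozen=True)
class VisionAidedInsConfig:
    """Hypothetical description of one vision-aided inertial navigation setup."""
    image_sensors: FrozenSet[ImageSensor]
    inertial_unit: InertialUnit
    view: ViewGeometry
    onboard_database: bool = True
    image_processing: bool = True
    display_terminal: bool = True

    def __post_init__(self) -> None:
        # At least one imaging sensor is needed to aid the inertial solution.
        if not self.image_sensors:
            raise ValueError("at least one image sensor is required")

    def is_complete(self) -> bool:
        """True when the database, processing, and display components are all present.

        Image sensors and the inertial unit are already guaranteed by construction.
        """
        return self.onboard_database and self.image_processing and self.display_terminal


if __name__ == "__main__":
    cfg = VisionAidedInsConfig(
        image_sensors=frozenset({ImageSensor.VIS, ImageSensor.LWIR}),
        inertial_unit=InertialUnit.INS,
        view=ViewGeometry.FRONT_DOWN,
    )
    print(cfg.is_complete())  # True
```

The `frozenset` field lets the text's "combination thereof" case (for example, VIS plus LWIR) be expressed directly. The check in `__post_init__` rejects any setup that has no imaging sensor.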
