A video flame detection method based on a two-stream convolutional neural network

A convolutional neural network and flame detection technology, applied in the field of video image detection, that improves the detection effect and reduces time complexity.

Embodiment Construction

[0050] The present invention will be described in further detail below in conjunction with the accompanying drawings and specific embodiments.

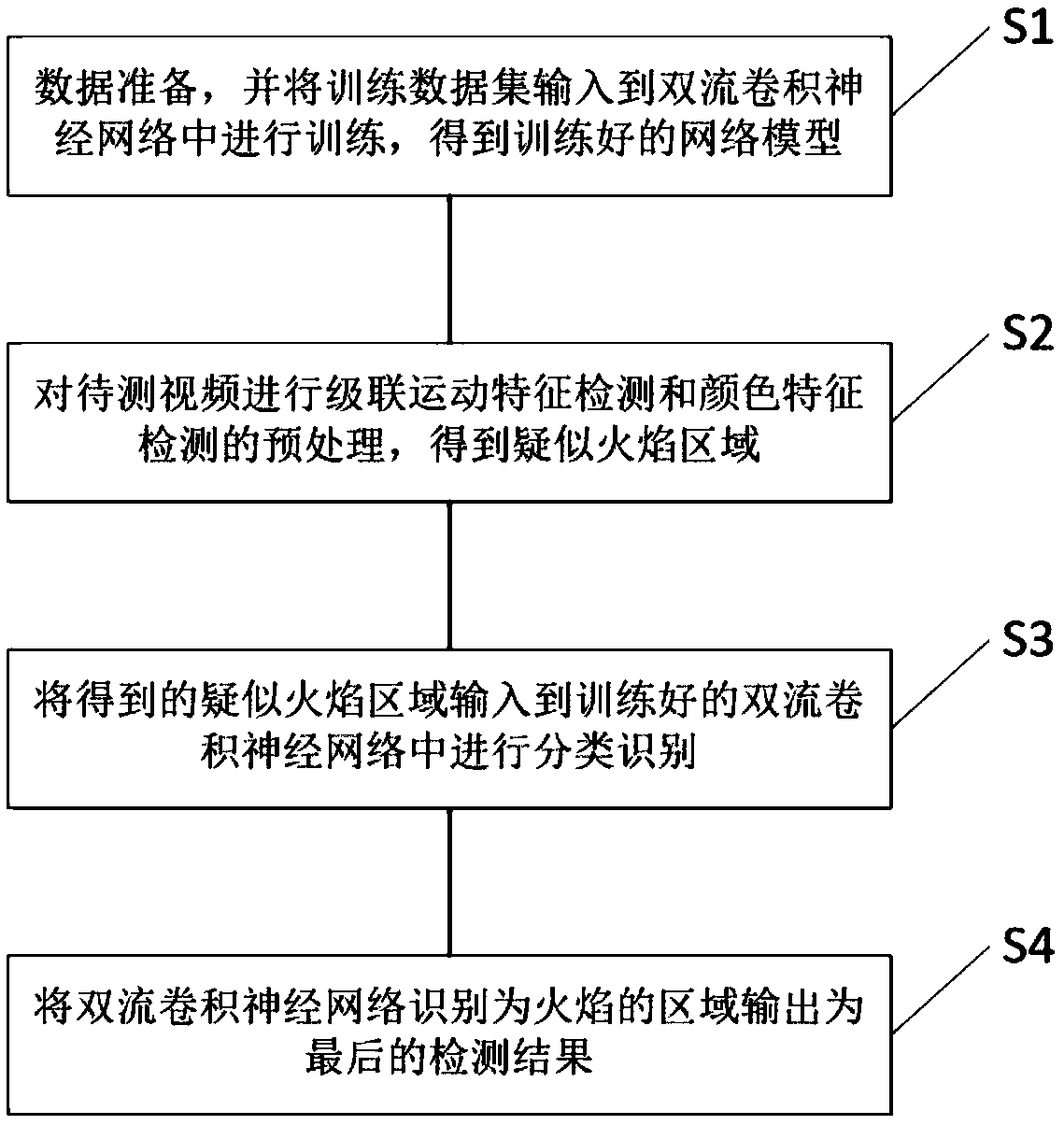

[0051] Figure 1 is a schematic flow chart of the main steps of the video flame detection method based on the dual-stream convolutional neural network. The specific implementation is as follows:

[0052] S1: Data preparation. Input the training data set into the dual-stream convolutional neural network for training to obtain the trained network model.

[0053] Specific steps include:

[0054] S1.1 Data set preparation

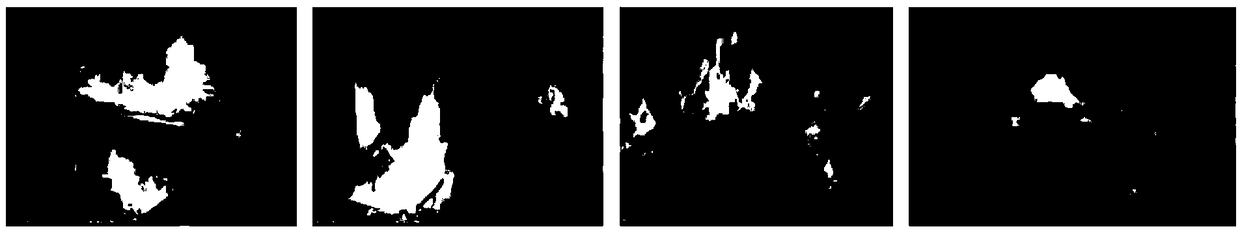

[0055] Figure 2 is a schematic diagram of the flame data set used by the video flame detection method based on the dual-stream convolutional neural network. The data set used, which was self-collected, is composed of 4000 RGB images and 400 dynamic videos. It serves as the training data set, so the training data set includes both RGB images and dynamic videos.
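As an illustration of the data set described in S1.1, the following minimal sketch indexes a folder of files into RGB images and dynamic videos and checks the counts against the sizes stated above. The directory layout, the file extensions, and the names `index_dataset`, `check_counts`, and `FlameDataset` are assumptions made for illustration; the source does not specify how the data set is stored.

```python
from dataclasses import dataclass
from pathlib import Path

# Assumed file extensions; the source does not state the file formats.
IMAGE_EXTS = {".jpg", ".jpeg", ".png"}
VIDEO_EXTS = {".mp4", ".avi"}


@dataclass
class FlameDataset:
    """Hypothetical container for the two kinds of training samples."""
    images: list  # paths of RGB still images
    videos: list  # paths of dynamic video clips


def index_dataset(root):
    """Walk `root` recursively and split files into images and videos by extension."""
    images, videos = [], []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file():
            continue
        ext = path.suffix.lower()
        if ext in IMAGE_EXTS:
            images.append(path)
        elif ext in VIDEO_EXTS:
            videos.append(path)
        # Files with any other extension are ignored.
    return FlameDataset(images, videos)


def check_counts(ds, n_images=4000, n_videos=400):
    """Return True when the index matches the data set sizes given in the text."""
    return len(ds.images) == n_images and len(ds.videos) == n_videos
```

A call such as `check_counts(index_dataset("flame_data"))` (where `flame_data` is a hypothetical folder name) would confirm that the indexed folder holds 4000 images and 400 videos before training starts.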

[0056] S1.2 Construction of network model

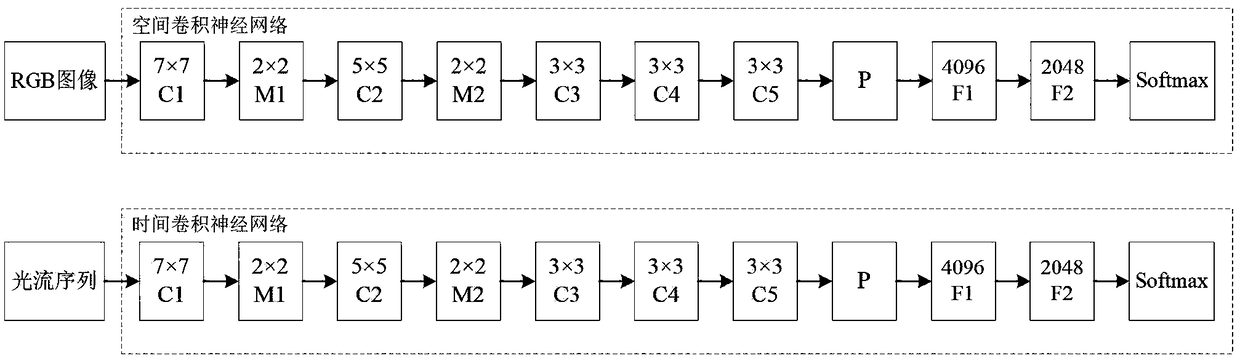

[0057] Figure 3 ...
