Artificial hand control method based on deep learning

A deep-learning-based control method, applied in neural learning methods, prosthetics, medical science and related fields. It addresses the low classification accuracy and the difficulty of extracting features from motor imagery EEG signals, and it achieves high accuracy, avoids incompleteness, and saves labor.

Embodiment Construction

[0032] Embodiments of the present invention will be described below in conjunction with the accompanying drawings.

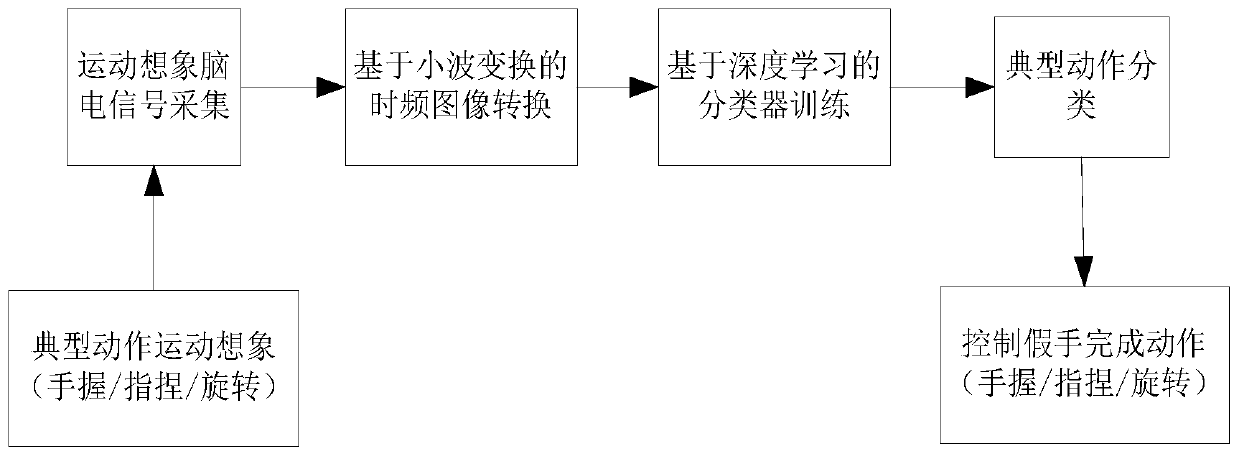

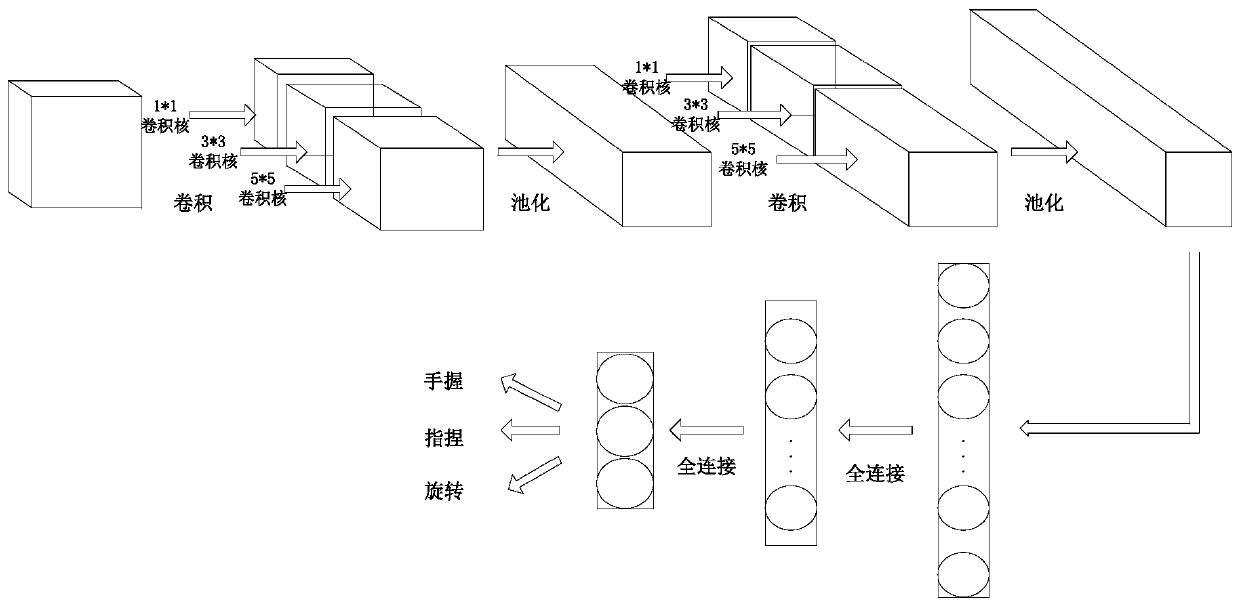

[0033] As shown in Figure 1, the present invention provides a prosthetic hand control method based on deep learning, which comprises the following steps:

[0034] Step 1. Select hand movements. The present invention selects three types of hand movement: hand grip, finger pinch and rotation, all of which are common actions in real-life scenarios.

[0035] Traditional prosthetic hand control relies on imagined movements of the left hand, right hand, feet and tongue. These imagined actions do not correspond to the actions the prosthetic hand actually performs, so control feels uncoordinated and unnatural. The present invention therefore selects three actions commonly used in daily life (grip, pinch and rotate) as the classification targets, bringing control closer to real-life scenarios.
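As an illustration of the three-class target described in Step 1, the following is a minimal sketch of how the action categories might be represented and how a classifier's output could be mapped to one of them. The names `HandAction` and `decode_action`, the class ordering, and the use of a simple arg-max over per-class scores are assumptions made for this example; the source does not specify them.

```python
from enum import Enum
from typing import Sequence


class HandAction(Enum):
    """The three motor-imagery classes named in Step 1 (ordering is assumed)."""
    GRIP = 0
    PINCH = 1
    ROTATE = 2


def decode_action(scores: Sequence[float]) -> HandAction:
    """Map per-class classifier scores to the highest-scoring action.

    `scores` is a hypothetical output vector with one entry per class,
    in the same order as the HandAction values.
    """
    if len(scores) != len(HandAction):
        raise ValueError(f"expected {len(HandAction)} scores, got {len(scores)}")
    # Arg-max: pick the index of the largest score.
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    return HandAction(best_index)


if __name__ == "__main__":
    print(decode_action([0.1, 0.7, 0.2]))  # HandAction.PINCH
```

Because the classification targets are the same actions the prosthetic hand performs, the decoded class can be passed to the hand as a command without an intermediate mapping from unrelated imagined movements.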

[0036...
