Data distributed operation method and device, storage medium and processor

An operating method and operating device, applied in the field of communications, that addresses problems such as the inability to specify particular devices in code, and improves the efficiency of system algorithms.

Examples

Embodiment 1

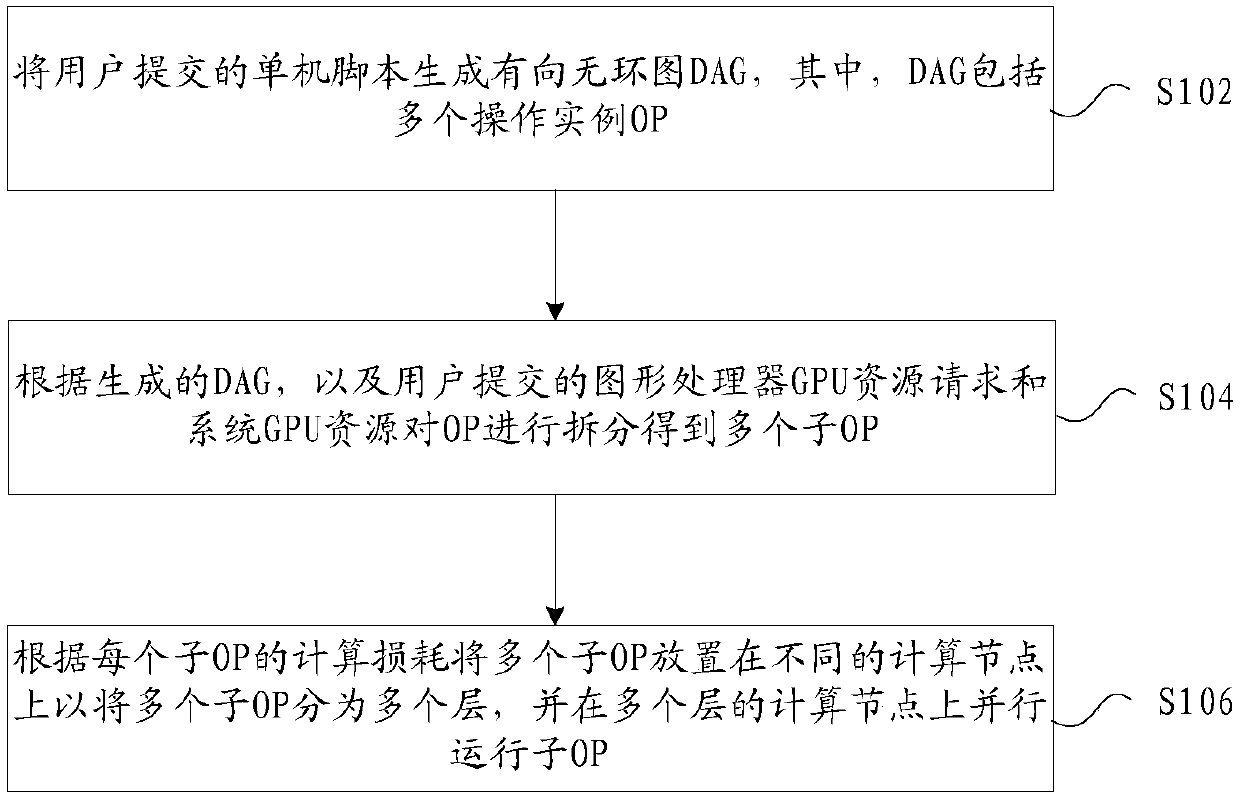

[0023] This embodiment provides a method for distributed operation of data. Figure 1 is a flowchart of the method for distributed operation of data according to an embodiment of the present invention. As shown in Figure 1, the process includes the following steps:

[0024] Step S102: generating a directed acyclic graph (DAG) from the stand-alone script submitted by the user, wherein the DAG includes a plurality of operation instances (OPs);

[0025] Step S104: splitting the OPs into multiple sub-OPs according to the generated DAG, the graphics processing unit (GPU) resource request submitted by the user, and the GPU resources of the system;

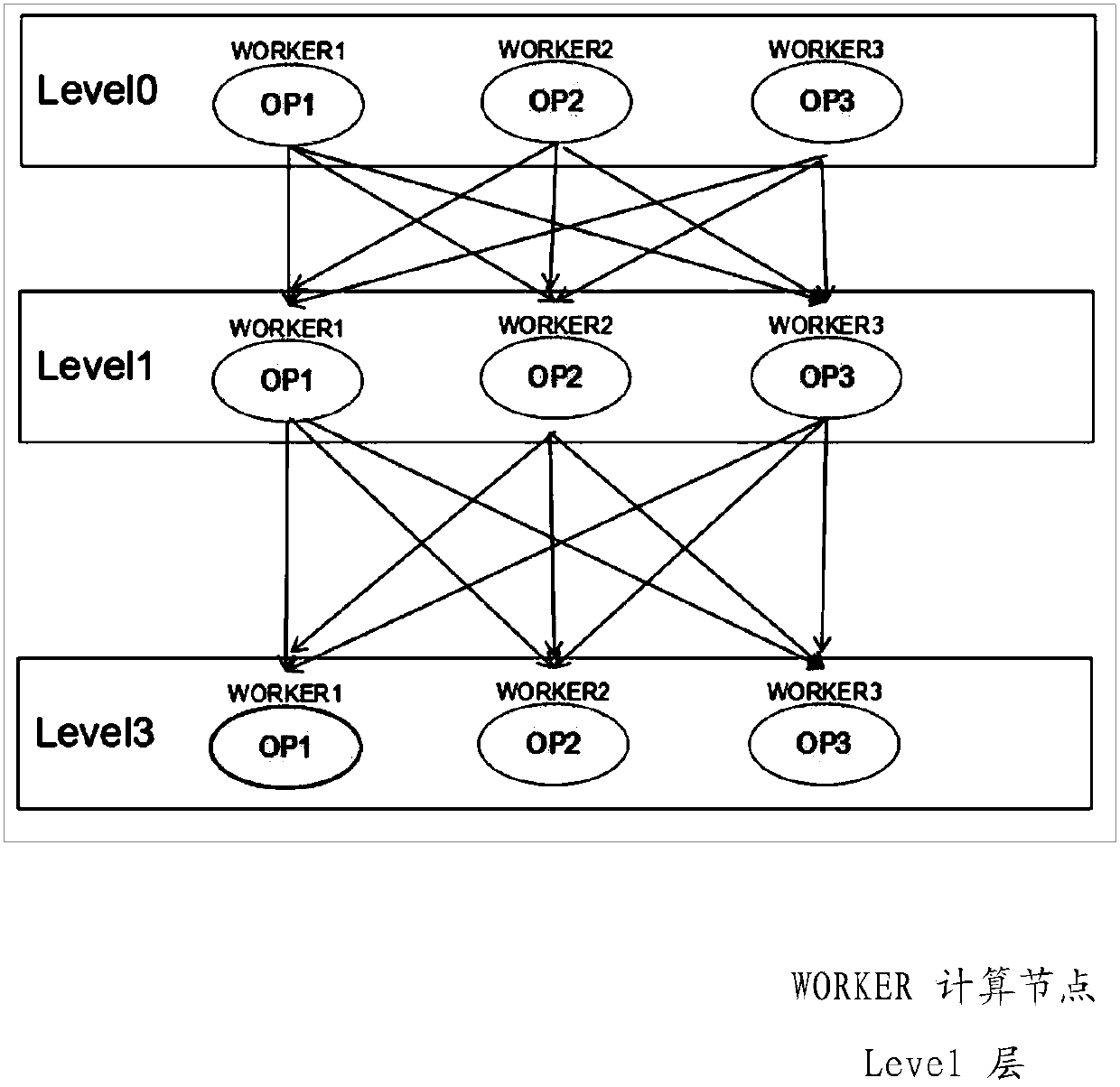

[0026] Step S106: placing the multiple sub-OPs on different computing nodes according to the calculation loss of each sub-OP, so as to divide the sub-OPs into multiple layers, and running the sub-OPs in parallel on the computing nodes of the multiple layers; wherein the calculation loss of the sub-OPs in the current layer is smaller than the calculation ...
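Step S106 groups sub-OPs into layers whose members run in parallel. The criterion that compares calculation losses between layers is cut off in the text above, so the sketch below swaps in plain dependency-level layering (Kahn's topological sort, processed one frontier at a time): every sub-OP in layer k depends only on sub-OPs in earlier layers, so each layer can run concurrently. A thread pool stands in for the computing nodes, and the names `layer_sub_ops` and `run_layers` are hypothetical, not taken from the patent.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Set


def layer_sub_ops(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group sub-OPs so that each layer depends only on earlier layers.

    `deps` maps every sub-OP name to the set of sub-OPs whose outputs it
    consumes. Every referenced sub-OP must also appear as a key.
    Raises ValueError on an unknown dependency or a cycle (not a DAG).
    """
    indegree = {op: len(parents) for op, parents in deps.items()}
    children: Dict[str, List[str]] = {op: [] for op in deps}
    for op, parents in deps.items():
        for p in parents:
            if p not in children:
                raise ValueError(f"unknown dependency {p!r} of {op!r}")
            children[p].append(op)

    # Start with sub-OPs that have no inputs from other sub-OPs.
    frontier = sorted(op for op, d in indegree.items() if d == 0)
    layers: List[List[str]] = []
    placed = 0
    while frontier:
        layers.append(frontier)
        placed += len(frontier)
        ready = []
        for op in frontier:
            for child in children[op]:
                indegree[child] -= 1
                if indegree[child] == 0:  # all inputs now available
                    ready.append(child)
        frontier = sorted(ready)
    if placed != len(deps):
        raise ValueError("dependency graph contains a cycle")
    return layers


def run_layers(layers: List[List[str]],
               tasks: Dict[str, Callable[[], None]],
               max_workers: int = 4) -> None:
    """Run each layer's sub-OPs concurrently, one layer after another."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for layer in layers:
            # list() waits for the whole layer and re-raises any task error
            # before the next layer starts.
            list(pool.map(lambda op: tasks[op](), layer))


if __name__ == "__main__":
    deps = {"a0": set(), "a1": set(), "b0": {"a0", "a1"},
            "c0": {"b0"}, "c1": {"b0"}}
    print(layer_sub_ops(deps))  # [['a0', 'a1'], ['b0'], ['c0', 'c1']]
```

Layering by dependency depth is only one choice; a cost-aware variant could reorder or regroup sub-OPs within the same constraints once the patent's loss criterion is known.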

Embodiment 2

[0061] This embodiment also provides a device for distributed data operation, which is used to implement the above embodiments and preferred implementations; details already described are not repeated. As used below, the term "module" may be a combination of software and/or hardware that realizes a predetermined function. Although the devices described in the following embodiments are preferably implemented in software, implementations in hardware, or in a combination of software and hardware, are also possible and contemplated.

[0062] Figure 5 is a structural block diagram of a device for distributed data operation according to an embodiment of the present invention. As shown in Figure 5, the device includes:

[0063] a generation module 52, configured to generate a directed acyclic graph (DAG) from the stand-alone script submitted by the user, wherein the DAG includes a plurality of operation instances (OPs);

[0064] The splitting module 54 is coupled...
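The generation module 52 (like Step S102) turns a stand-alone script into a DAG of OPs. The patent does not say how the script is parsed, so the sketch below assumes the script has already been traced into an ordered list of OP records naming the tensors each OP reads and writes; a real system would need a framework-specific tracer for that step. Edges are drawn from the OP that last produced a tensor to each OP that consumes it. Because every OP can only depend on OPs earlier in the trace, the result is acyclic by construction. The `OP`, `DAG`, and `build_dag` names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class OP:
    name: str
    kind: str                   # hypothetical labels such as "matmul"
    inputs: Tuple[str, ...]     # tensor names read by this OP
    outputs: Tuple[str, ...]    # tensor names written by this OP


@dataclass
class DAG:
    ops: Dict[str, OP] = field(default_factory=dict)
    deps: Dict[str, Set[str]] = field(default_factory=dict)  # OP -> producer OPs


def build_dag(trace: List[OP]) -> DAG:
    """Build a DAG from OPs listed in the order the script executes them."""
    dag = DAG()
    producer: Dict[str, str] = {}  # tensor name -> latest OP that wrote it
    for op in trace:
        if op.name in dag.ops:
            raise ValueError(f"duplicate OP name {op.name!r}")
        # Inputs with no producer (weights, external data) add no edge.
        dag.deps[op.name] = {producer[t] for t in op.inputs if t in producer}
        dag.ops[op.name] = op
        for t in op.outputs:
            producer[t] = op.name
    return dag


if __name__ == "__main__":
    trace = [
        OP("load", "input", (), ("x",)),
        OP("fc1", "matmul", ("x", "w1"), ("h",)),
        OP("act", "relu", ("h",), ("a",)),
        OP("fc2", "matmul", ("a", "w2"), ("y",)),
    ]
    print(build_dag(trace).deps)
```

Tracking the latest producer of each tensor keeps sequential script semantics: if a tensor name is overwritten, later consumers depend on the most recent writer.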

Embodiment 3

[0073] In this embodiment, OP scheduling and OP graph decomposition are performed on the user's training script according to the GPU resources of the platform cluster, so that training tasks run automatically in distributed parallel mode in the cloud environment and the user's deep learning training tasks are executed intelligently, with high concurrency and high performance.

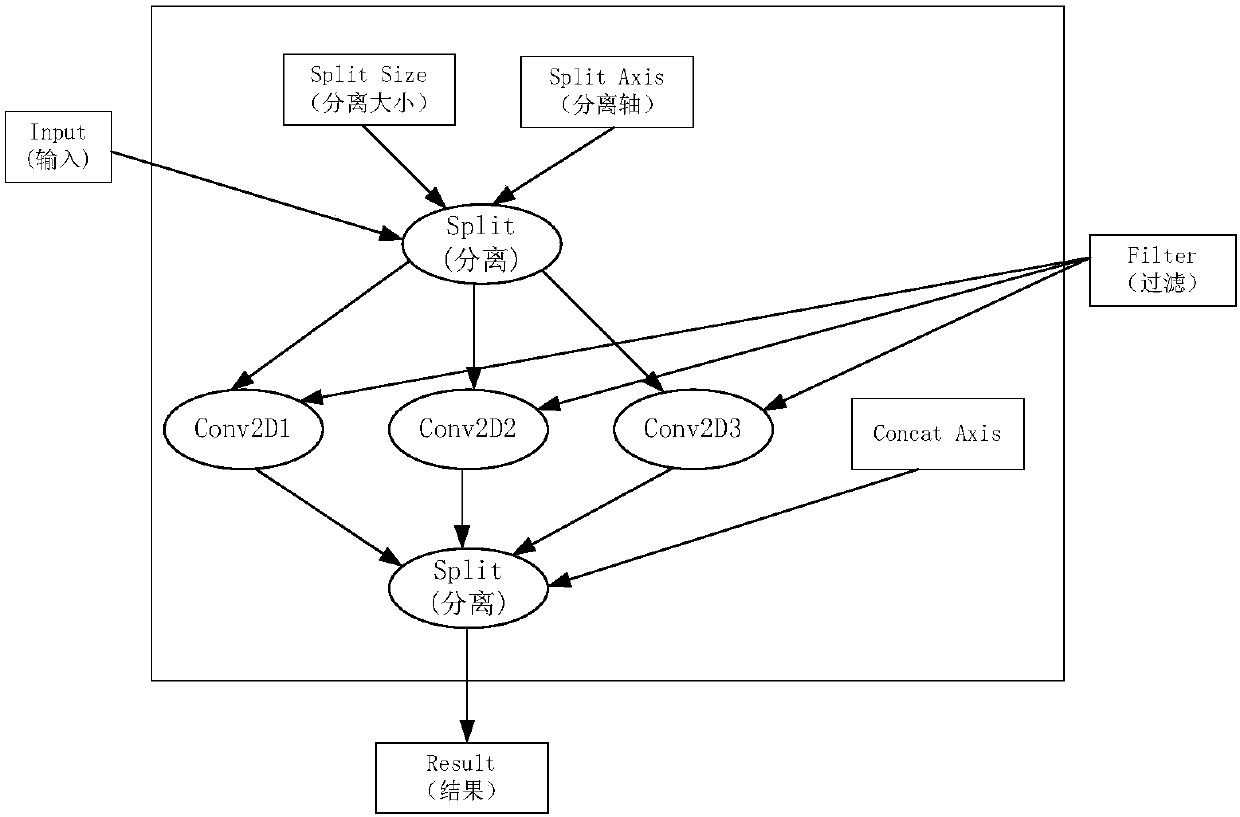

[0074] In general, a DAG computation graph is generated from the stand-alone script submitted by the user, and a parallel scheme is then generated according to the resource request submitted by the user and the GPU resource characteristics of the system. This realizes automatic distributed parallel operation in the system and reduces the difficulty of algorithm development.
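Step S104 and the parallel scheme in [0074] split OPs according to the user's GPU request and the system's GPU resources, but the text does not give the splitting rule. The sketch below assumes plain data-parallel splitting along the batch dimension: the number of sub-OPs is the smallest of the requested GPUs, the free GPUs, and the batch size, and samples are divided into contiguous, near-equal ranges. `SubOP` and `split_op` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SubOP:
    parent: str   # name of the OP this shard came from
    index: int    # shard number, 0-based
    start: int    # first sample in the shard (inclusive)
    stop: int     # end of the shard (exclusive)


def split_op(op_name: str, batch_size: int,
             requested_gpus: int, free_gpus: int) -> List[SubOP]:
    """Split one OP's batch into contiguous shards, one per usable GPU."""
    if batch_size <= 0 or requested_gpus <= 0:
        raise ValueError("batch_size and requested_gpus must be positive")
    # Never use more GPUs than requested, than are free, or than samples exist.
    shards = min(requested_gpus, free_gpus, batch_size)
    if shards <= 0:
        raise RuntimeError("no free GPU available for this OP")
    base, extra = divmod(batch_size, shards)
    subs: List[SubOP] = []
    start = 0
    for i in range(shards):
        size = base + (1 if i < extra else 0)  # first `extra` shards get one more
        subs.append(SubOP(op_name, i, start, start + size))
        start += size
    return subs


if __name__ == "__main__":
    for sub in split_op("fc1", batch_size=10, requested_gpus=4, free_gpus=3):
        print(sub)
```

With a batch of 10, a request for 4 GPUs, and only 3 free, this yields three shards covering samples 0 to 4, 4 to 7, and 7 to 10. Other splits (by model layer or tensor dimension) are equally plausible readings of the text.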

[0075] When the automated parallel scheme is generated, the OPs are split, the calculation loss of each OP is computed, and each OP is placed on a suitable GPU for execution, so as to achieve the balance of computing...
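Paragraph [0075] places OPs on GPUs by calculation loss so that computing load is balanced. A common way to do this is the greedy longest-processing-time (LPT) heuristic: sort OPs by descending cost and give each to the GPU with the smallest accumulated cost so far. This is a swapped-in standard heuristic, not necessarily the patent's method; the loss values are treated as abstract cost numbers, and `place_balanced` is a hypothetical name.

```python
import heapq
from typing import Dict, List, Tuple


def place_balanced(losses: Dict[str, float],
                   gpus: List[str]) -> Tuple[Dict[str, str], Dict[str, float]]:
    """Assign each OP to a GPU with the LPT greedy heuristic.

    Returns (placement: OP -> GPU, loads: GPU -> total assigned loss).
    """
    if not gpus:
        raise ValueError("need at least one GPU")
    # Min-heap of (accumulated loss, GPU name): least-loaded GPU on top.
    heap = [(0.0, g) for g in gpus]
    heapq.heapify(heap)
    placement: Dict[str, str] = {}
    # Largest OPs first; ties broken by name so the result is deterministic.
    for op, loss in sorted(losses.items(), key=lambda kv: (-kv[1], kv[0])):
        load, gpu = heapq.heappop(heap)
        placement[op] = gpu
        heapq.heappush(heap, (load + loss, gpu))
    loads = {gpu: load for load, gpu in heap}
    return placement, loads


if __name__ == "__main__":
    losses = {"a": 7, "b": 5, "c": 4, "d": 3, "e": 1}
    placement, loads = place_balanced(losses, ["gpu0", "gpu1"])
    print(placement)  # {'a': 'gpu0', 'b': 'gpu1', 'c': 'gpu1', 'd': 'gpu0', 'e': 'gpu1'}
    print(loads)      # {'gpu1': 10.0, 'gpu0': 10.0}
```

LPT is fast (O(n log n) for n OPs) and keeps the most-loaded GPU within 4/3 of the optimal makespan for independent tasks; it ignores dependencies and data-transfer costs, which a full scheduler would also need to weigh.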
