Visual SLAM method based on multi-feature fusion

A visual SLAM method based on multi-feature fusion, applicable to image data processing, instrumentation, 3D modeling, and related fields. It addresses problems such as the weakening of constraints derived from point features, the inability to track point features stably, and the resulting inability to construct an environment map.



Embodiment Construction

[0087] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

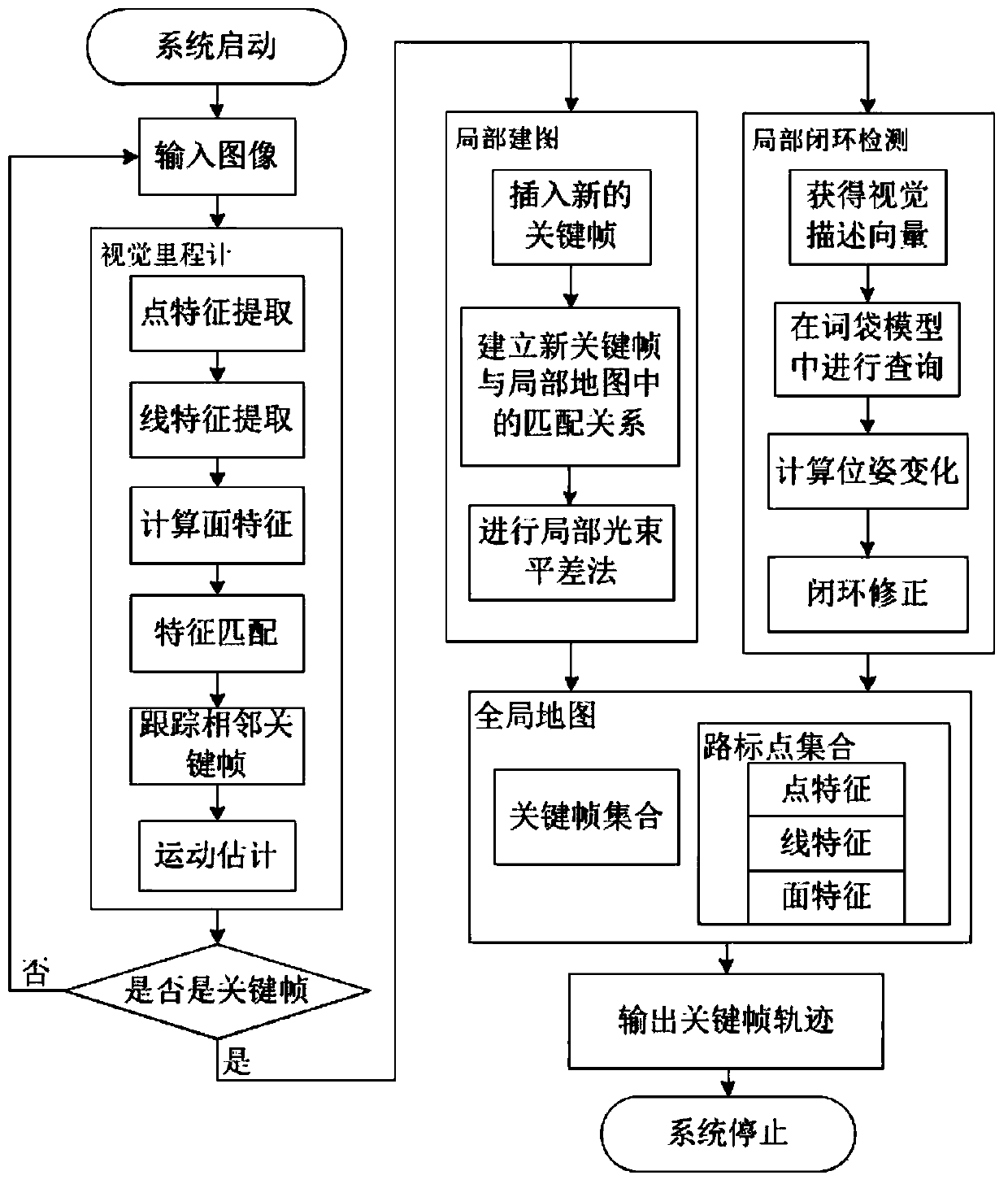

[0088] The technical solution adopted in the present invention is a visual SLAM method based on multi-feature fusion. The specific implementation process is shown in Figure 1 and mainly includes the following steps:

[0089] Step 1: calibrate the depth camera and determine the camera's intrinsic parameters;
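To illustrate what the calibrated parameters from Step 1 are typically used for, the following is a minimal sketch of the standard pinhole model, assuming intrinsics given as focal lengths and a principal point. The `Intrinsics` class, the function names, and the numeric values are hypothetical and do not come from the patent text; lens distortion is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera intrinsics (hypothetical container, not from the patent)."""
    fx: float  # focal length along x, in pixels
    fy: float  # focal length along y, in pixels
    cx: float  # principal point x, in pixels
    cy: float  # principal point y, in pixels


def back_project(k: Intrinsics, u: float, v: float, depth: float):
    """Map pixel (u, v) with a metric depth to a 3D point in the camera frame."""
    x = (u - k.cx) * depth / k.fx
    y = (v - k.cy) * depth / k.fy
    return (x, y, depth)


def project(k: Intrinsics, point):
    """Map a 3D camera-frame point with z > 0 back to pixel coordinates."""
    x, y, z = point
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


# Example values are assumptions chosen only for illustration.
k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
point = back_project(k, u=420.0, v=240.0, depth=2.0)
pixel = project(k, point)
```

With a depth camera, each pixel that has a valid depth reading can be lifted to 3D this way, which is why the intrinsics must be determined before any later step uses image measurements.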

[0090] Step 2: apply Gaussian filtering to the video stream data acquired by the camera on the mobile robot platform, to reduce the influence of noise on subsequent steps;
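The Gaussian filtering in Step 2 can be sketched as a separable convolution applied to each grayscale frame. This is a minimal pure-Python illustration, assuming a frame stored as a list of rows; the kernel radius, sigma, and border handling (edge replication) are assumptions, since the patent excerpt does not specify them.

```python
import math


def gaussian_kernel(radius: int, sigma: float):
    """Return a normalized 1D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def _blur_rows(image, kernel):
    """Convolve every row with the kernel, replicating border pixels."""
    radius = len(kernel) // 2
    out = []
    for row in image:
        n = len(row)
        new_row = []
        for x in range(n):
            acc = 0.0
            for k, w in enumerate(kernel):
                xi = min(max(x + k - radius, 0), n - 1)  # clamp to the row
                acc += w * row[xi]
            new_row.append(acc)
        out.append(new_row)
    return out


def gaussian_blur(image, radius: int = 1, sigma: float = 1.0):
    """Blur a 2D grayscale image with a separable Gaussian filter."""
    kernel = gaussian_kernel(radius, sigma)
    horizontal = _blur_rows(image, kernel)
    # Transpose so the same row routine filters the original columns.
    transposed = [list(col) for col in zip(*horizontal)]
    vertical = _blur_rows(transposed, kernel)
    return [list(col) for col in zip(*vertical)]
```

Because the kernel is normalized, flat regions keep their intensity while isolated noisy pixels are spread over their neighbors, which is the property the later feature extraction relies on. A real-time system would normally use an optimized library routine instead of Python loops.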

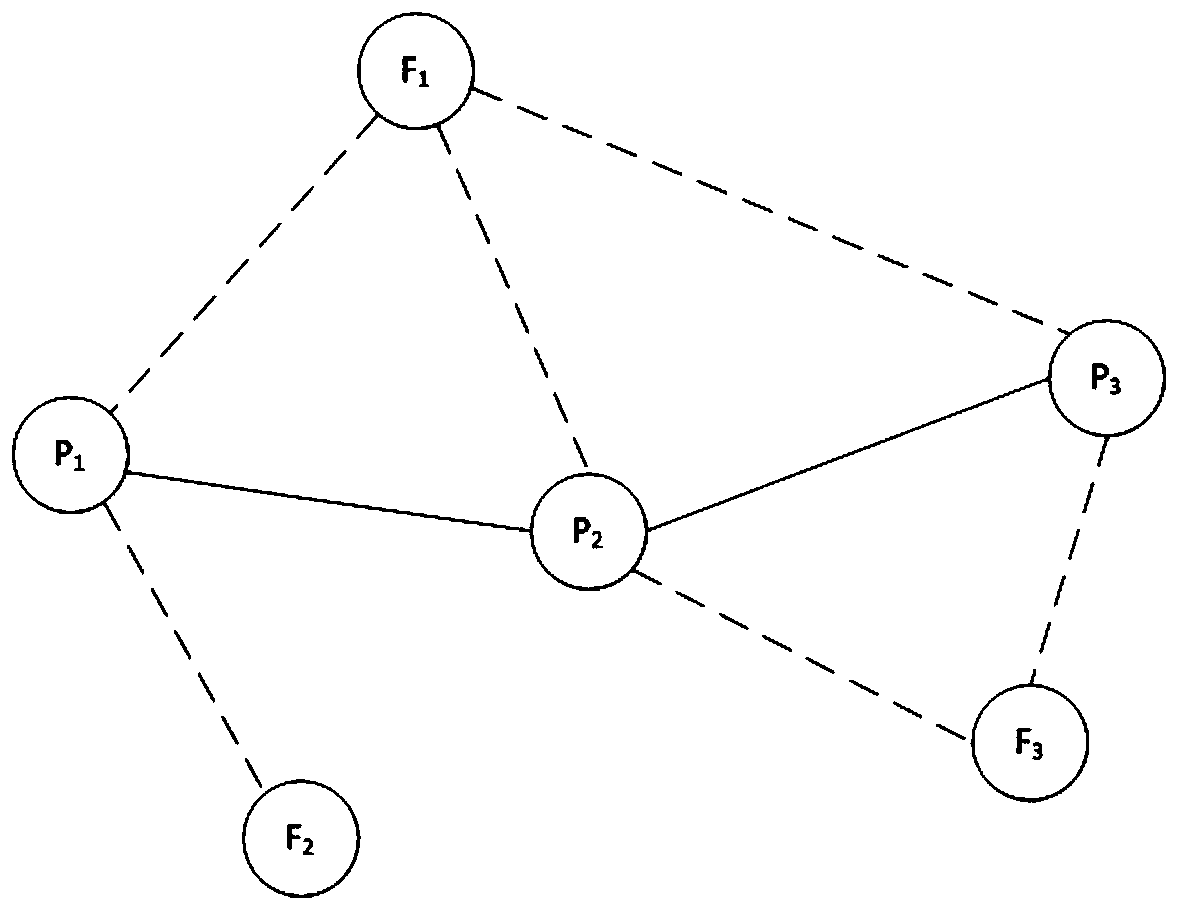

[0091] Step 3: perform feature extraction on the corrected image, extracting the point features and line features of each frame online. Specifically: extract FAST corner features from each frame and describe them with the rBRIEF descriptor; detect line features with the LSD algorithm and describe them with the LBD descriptor, and...
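As a rough illustration of the point-feature part of Step 3, the sketch below implements only the basic FAST segment test: a pixel is treated as a corner when a contiguous arc of pixels on a radius-3 circle around it is uniformly brighter or darker than the center by more than a threshold. The threshold, the arc length of 9, and the function names are assumptions; the patent excerpt does not give these values, and the orientation step, rBRIEF, LSD, and LBD are not shown here.

```python
# Offsets (dx, dy) of the 16 pixels on a radius-3 Bresenham circle, in order.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(image, x, y, threshold=20, arc=9):
    """Segment test at (x, y); the caller keeps the circle inside the image."""
    center = image[y][x]
    labels = []
    for dx, dy in CIRCLE:
        value = image[y + dy][x + dx]
        if value > center + threshold:
            labels.append(1)    # clearly brighter than the center
        elif value < center - threshold:
            labels.append(-1)   # clearly darker than the center
        else:
            labels.append(0)    # similar to the center
    # Append the first arc - 1 labels so runs that wrap around are counted.
    wrapped = labels + labels[:arc - 1]
    for sign in (1, -1):
        run = 0
        for label in wrapped:
            run = run + 1 if label == sign else 0
            if run >= arc:
                return True
    return False


def detect_fast(image, threshold=20, arc=9):
    """Return (x, y) for every pixel that passes the segment test."""
    height, width = len(image), len(image[0])
    return [(x, y)
            for y in range(3, height - 3)
            for x in range(3, width - 3)
            if is_fast_corner(image, x, y, threshold, arc)]
```

On a synthetic image containing one bright quadrant, the pixel at the quadrant's corner passes the test, while a pixel on a straight edge does not, because only 7 of its 16 circle pixels differ from the center. A full pipeline would additionally suppress non-maximal responses and compute descriptors for matching.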
