Human body posture visual recognition method for a transfer and carrying nursing robot

A human body posture visual recognition technology, applied in the field of human body posture visual recognition, that addresses problems such as missed or incorrect recognition, inconvenience, and a large amount of algorithmic computation. Its effects are reduced dependence on joint positions, adaptation to the home environment, and protection of human-machine safety.

Embodiment

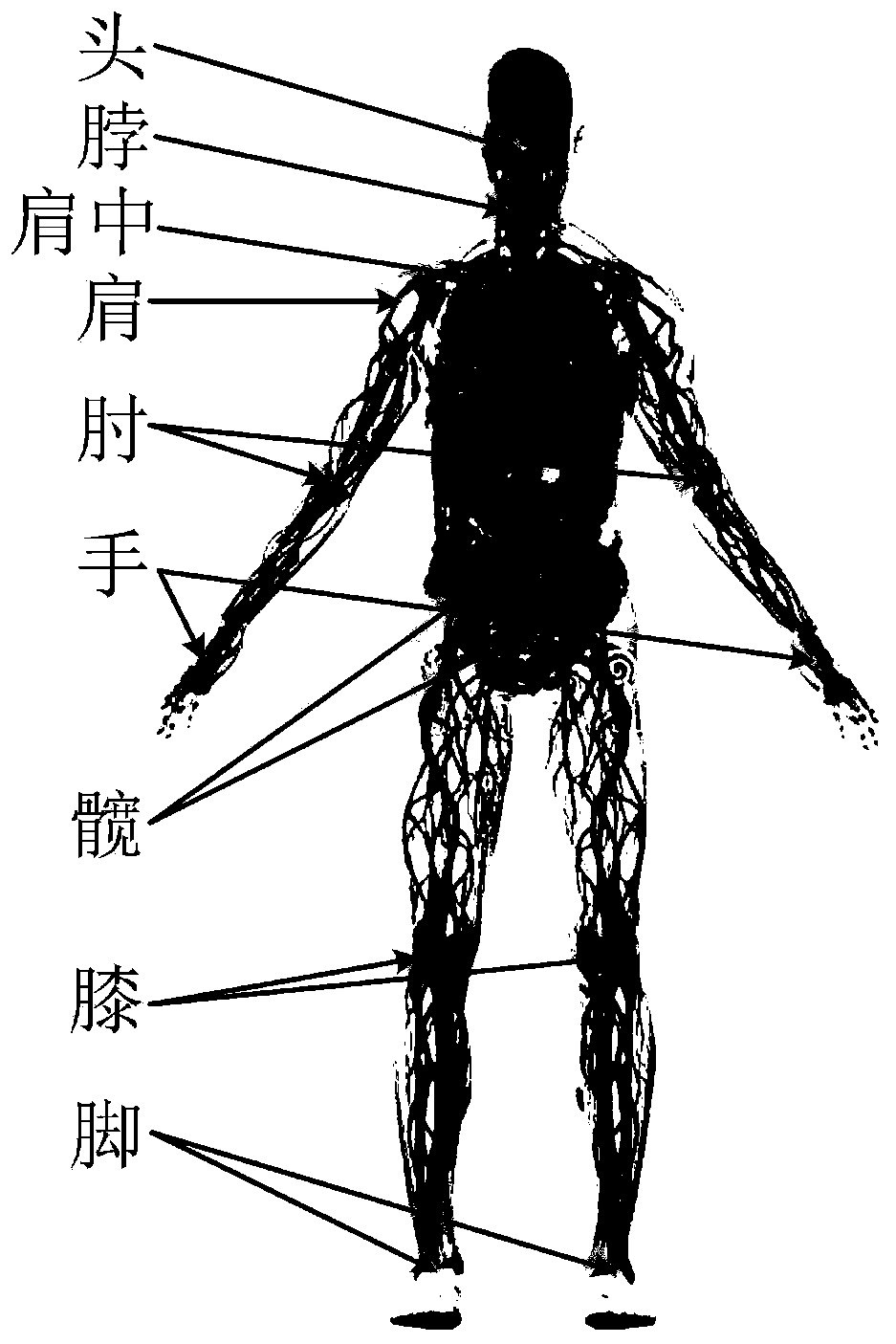

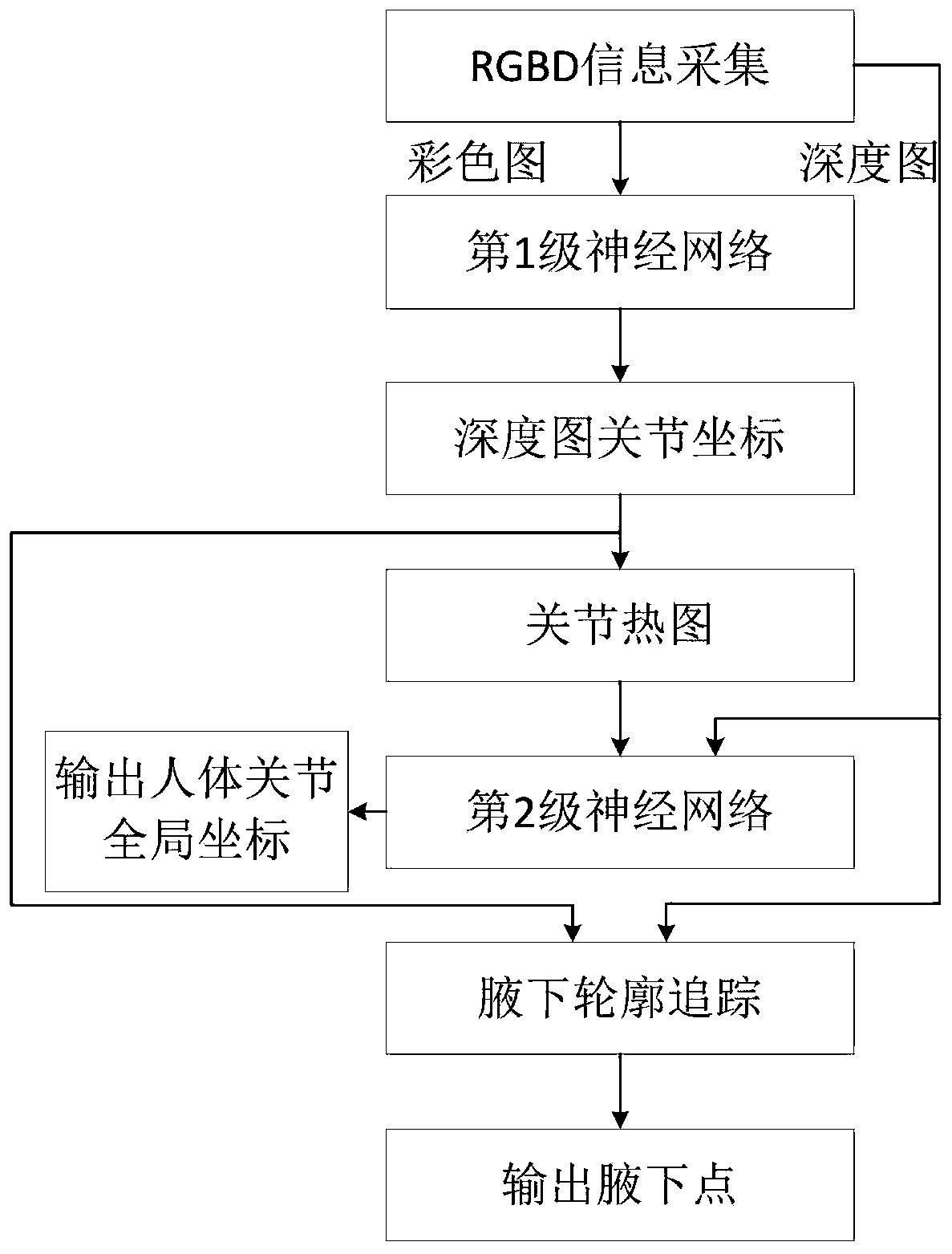

[0055] The human body posture is defined as shown in Figure 2, with a total of 15 joint points (the number of joint points can be set as required). The technical solution for close-range visual recognition of a person's posture is to make full use of RGBD information. A first-level neural network estimates the pixel coordinates of the human joints in the color image, which makes the joint recognition algorithm adaptable to close-range human postures. A second-level neural network, based on the depth map and the joint heat maps, then lifts the 2D joint coordinate estimates of the first-level network to 3D and refines their accuracy, yielding the 3D human pose. The flow of the human joint recognition algorithm of the present invention is shown in Figure 3.
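The data flow of the two stages described in [0055] can be illustrated with a minimal sketch. It is not the patented method: the first stage is assumed to have already produced one heat map per joint, and the second-level neural network is replaced here by a plain pinhole back-projection of each 2D joint through the depth map, purely to show how 2D pixel coordinates and depth combine into a 3D pose. The function names and the camera intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical and do not come from the source.

```python
from typing import List, Tuple

NUM_JOINTS = 15  # joint count stated in the text; the text notes it is configurable

Grid = List[List[float]]  # H x W array stored as rows (heat map or depth map)


def heatmap_to_pixel(heatmap: Grid) -> Tuple[int, int]:
    """Return the (u, v) pixel with the highest score; u is the column, v the row."""
    best_u, best_v, best_score = 0, 0, float("-inf")
    for v, row in enumerate(heatmap):
        for u, score in enumerate(row):
            if score > best_score:
                best_u, best_v, best_score = u, v, score
    return best_u, best_v


def back_project(u: int, v: int, depth_m: float,
                 fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Pinhole back-projection of a pixel with known depth into camera coordinates.

    Assumption: stands in for the second-level network, which the source
    describes as learned rather than geometric.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def lift_joints(heatmaps: List[Grid], depth_map: Grid,
                fx: float, fy: float, cx: float, cy: float) -> List[Tuple[float, float, float]]:
    """Convert per-joint heat maps plus an aligned depth map into 3D joint positions."""
    joints = []
    for heatmap in heatmaps:
        u, v = heatmap_to_pixel(heatmap)
        joints.append(back_project(u, v, depth_map[v][u], fx, fy, cx, cy))
    return joints
```

A direct depth lookup like this fails when a joint is occluded or the depth pixel is invalid, which is one reason a learned second stage that also refines the 2D estimate, as the text describes, may be preferable.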

[0056] In order to realize the estimation of the human body posture in the color image, the present invention adopts the ...
