Video abstraction method based on attention expansion coding and decoding network

A video summarization technique using attention, applied in the field of video summarization. It addresses three problems: the lack of a clear solution for handling outliers, the failure to make full use of video semantic information, and the failure to comprehensively consider global constraints when generating summaries. The stated effects concern time and resources, enhanced robustness, and an improved search experience.

Embodiment Construction

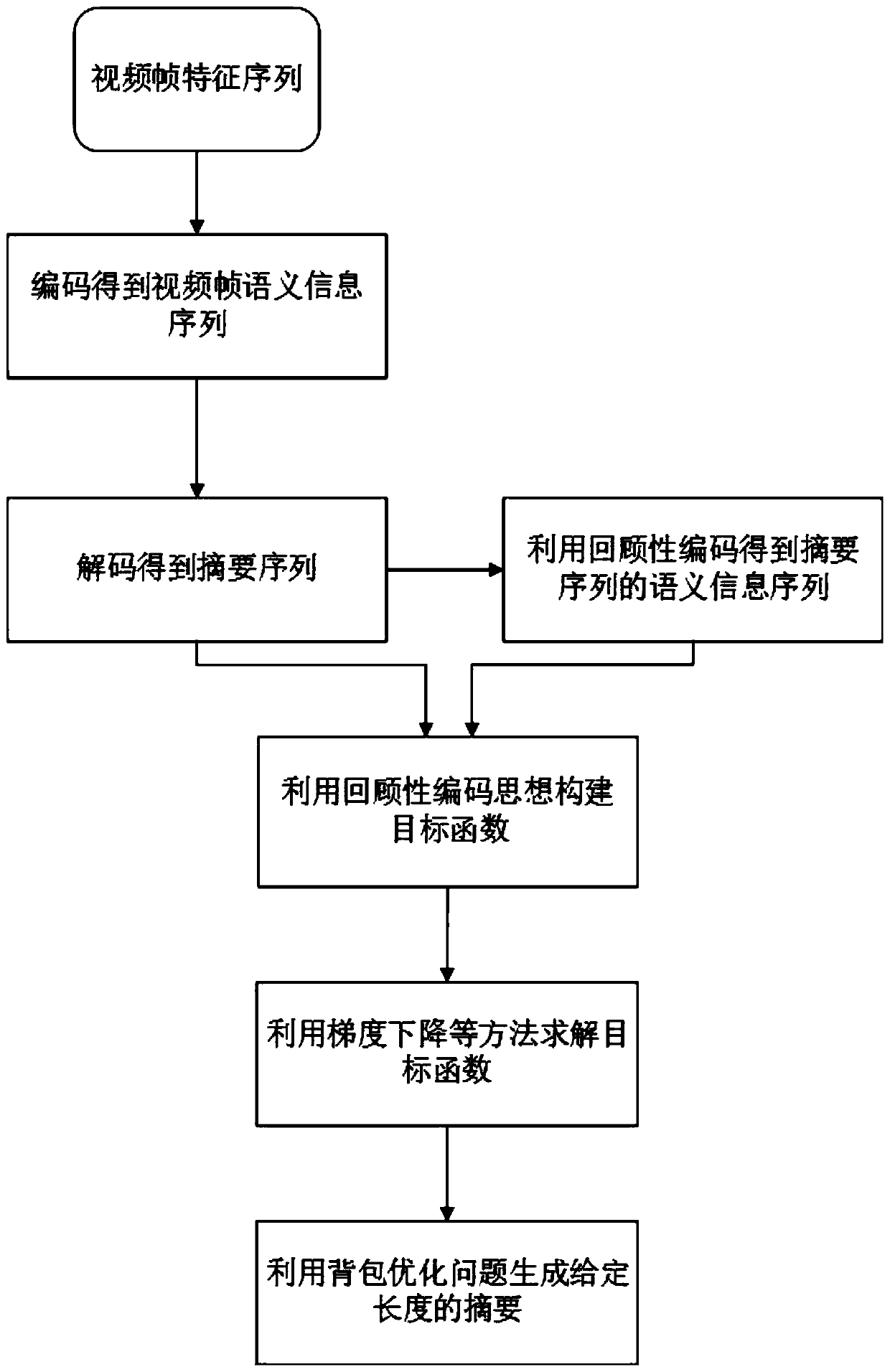

[0026] The video summarization method based on the attention extension codec network of the present invention will be described in detail below with reference to the embodiments and the accompanying drawings.

[0027] To improve the ability of generated summaries to retain the important and relevant information of the original video, the present invention draws on the idea of retrospective coding and introduces a global semantic discrimination loss. The semantic information of the original video guides summary generation and constrains the generation process as a whole. No label information is required in this process, which alleviates the model's dependence on labeled data. Unlike retrospective coding, however, the present invention starts from obtaining the video frame width information and constructing the maximum semantic information constraint, integrates the video frame context information, does not introduce the mismatch loss b...
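As a rough illustration of how a label-free, global semantic constraint of this kind might work, the sketch below compares a pooled embedding of the whole video with a score-weighted embedding of the summary and penalizes their disagreement. This is not the loss defined by the invention, whose formula is not given in this excerpt. The function names, the mean pooling, and the cosine-based penalty are all assumptions made for illustration.

```python
import math

# Hypothetical illustration only: the pooling and the cosine penalty are
# assumptions, not the formulation used by the invention.

def mean_embedding(features, weights=None):
    """Weighted mean of per-frame feature vectors (lists of floats)."""
    if weights is None:
        weights = [1.0] * len(features)
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    dim = len(features[0])
    return [
        sum(w * f[d] for w, f in zip(weights, features)) / total
        for d in range(dim)
    ]

def cosine_similarity(a, b):
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def semantic_consistency_loss(features, scores):
    """1 - cosine similarity between the video and summary embeddings.

    features: per-frame feature vectors of the original video.
    scores:   predicted per-frame importance scores (non-negative).
    No ground-truth labels are used anywhere in the computation.
    """
    video = mean_embedding(features)            # global video semantics
    summary = mean_embedding(features, scores)  # semantics of the summary
    return 1.0 - cosine_similarity(video, summary)
```

In this sketch, a summary that weights all frames equally reproduces the video embedding and incurs a loss close to zero, while a summary that concentrates on frames unrepresentative of the whole video is penalized. Only the video's own features enter the computation, which is the sense in which such a constraint needs no labeled data.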
