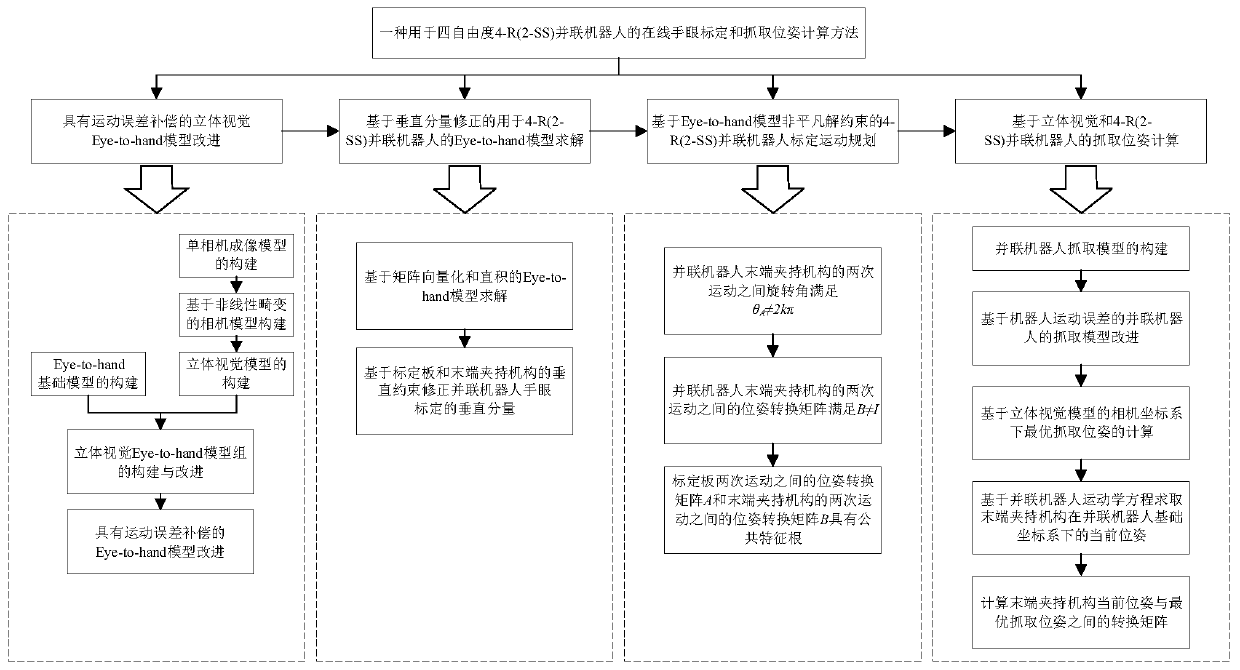

Online hand-eye calibration and grasping pose calculation method for the three-dimensional vision hand-eye system of a four-degree-of-freedom parallel robot

A stereo vision and hand-eye calibration technology, applied in computing, manipulators, program-controlled manipulators, and related fields, that solves the problem that the vertical component cannot be accurately obtained for parallel robots.


Embodiment

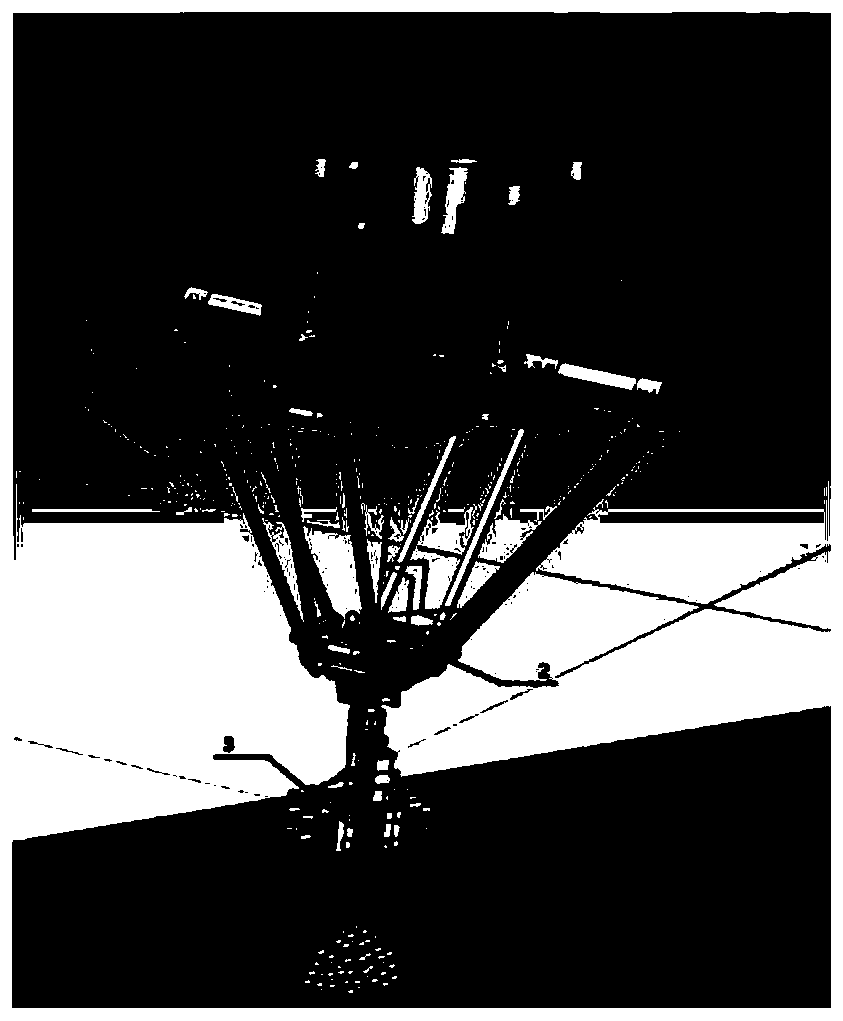

[0139] The specific embodiments are described using, as an example, a novel 4-R (2-SS) parallel robot fruit sorting system developed by our research group, with white rosa grape bunches as the objects to be grasped. The specific implementation is as follows:

[0140] 1. Improved stereo vision Eye-to-hand model with motion error compensation. The specific steps are as follows:

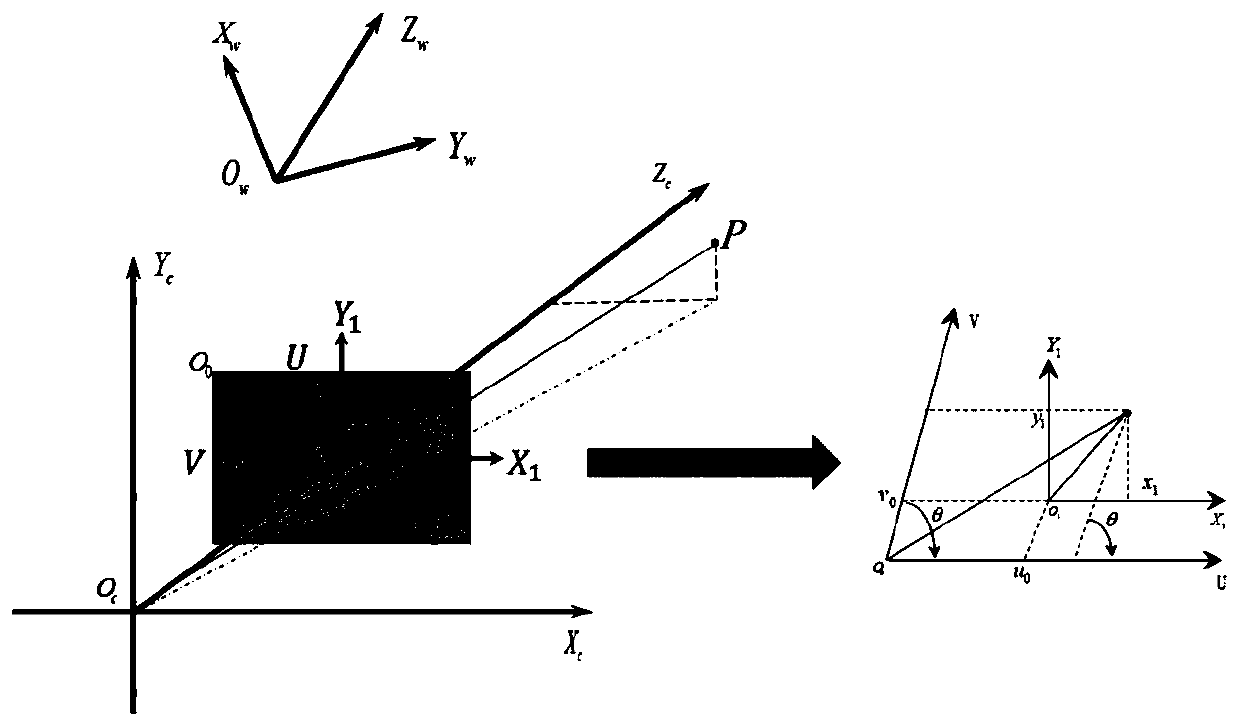

[0141] (1) Construction and improvement of the stereo vision Eye-to-hand model group. The present invention improves the basic Eye-to-hand model AX=XB using the results of binocular calibration together with the relative pose between the color camera and the infrared camera. First, the color camera and the infrared camera are modeled separately, which gives:

[0142]

[0143] Based on the stereo vision model, formula (1) is transformed, and the improved stereo vision Eye-to-hand model group is obtained:

[0144]
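For orientation, the basic model AX=XB named in paragraph [0141] can be illustrated with a minimal sketch. It is not the improved model group of the invention and includes no motion error compensation; it only shows a generic least-squares solution of AX=XB on synthetic, noise-free data. The helper names `rot`, `make_T`, and `solve_ax_xb`, and all numeric values, are assumptions chosen for illustration. The rotation is recovered from the linear constraint R_A R_X = R_X R_B (written with Kronecker products), and the translation from (R_A - I) t_X = R_X t_B - t_A.

```python
import numpy as np


def rot(axis, angle):
    """Rotation matrix about `axis` by `angle` radians (Rodrigues' formula)."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    K = np.array([[0.0, -axis[2], axis[1]],
                  [axis[2], 0.0, -axis[0]],
                  [-axis[1], axis[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


def make_T(R, t):
    """Assemble a 4x4 homogeneous transform from rotation R and translation t."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def solve_ax_xb(As, Bs):
    """Least-squares solution of A_i X = X B_i (illustrative, generic method)."""
    I3 = np.eye(3)
    # Rotation: (I kron R_A - R_B^T kron I) vec(R_X) = 0, column-major vec.
    M = np.vstack([np.kron(I3, A[:3, :3]) - np.kron(B[:3, :3].T, I3)
                   for A, B in zip(As, Bs)])
    _, _, Vt = np.linalg.svd(M)
    Rx = Vt[-1].reshape(3, 3, order="F")   # null vector, up to scale and sign
    if np.linalg.det(Rx) < 0:
        Rx = -Rx
    U, _, Vt2 = np.linalg.svd(Rx)
    Rx = U @ Vt2                           # project onto a proper rotation
    # Translation: (R_A - I) t_X = R_X t_B - t_A, stacked over all motions.
    C = np.vstack([A[:3, :3] - I3 for A in As])
    d = np.concatenate([Rx @ B[:3, 3] - A[:3, 3] for A, B in zip(As, Bs)])
    tx, *_ = np.linalg.lstsq(C, d, rcond=None)
    return make_T(Rx, tx)


# Synthetic ground truth and motions (all values are made up for the demo).
X_true = make_T(rot([1, 2, 3], 0.7), [0.1, -0.2, 0.3])
Bs = [make_T(rot([1, 0, 0], 0.5), [0.1, 0.0, 0.2]),
      make_T(rot([0, 1, 0], -0.8), [0.0, 0.3, -0.1]),
      make_T(rot([0, 0, 1], 1.1), [0.2, 0.1, 0.0])]
As = [X_true @ B @ np.linalg.inv(X_true) for B in Bs]  # so that A X = X B holds

X_est = solve_ax_xb(As, Bs)
residual = max(np.linalg.norm(A @ X_est - X_est @ B) for A, B in zip(As, Bs))
print("max residual of AX - XB:", residual)
```

The synthetic motions rotate about three different axes so that both the rotation and the translation of X are uniquely determined; the sketch makes no claim about how the invention handles motions that lack this property.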

[0145] (2) Improved Eye-to-hand model with motion error com...
