Mobile terminal visual fusion positioning method and system, and electronic device

A visual fusion positioning technology for mobile terminals, applied at the intersection of artificial intelligence and geographic information technology. It addresses three problems: semantic information is not utilized, preparation work is cumbersome, and only relative positioning is available.

Detailed Description of Embodiments

[0064] To make the objectives, technical solutions, and advantages of the present application clearer, the present application is described in further detail below with reference to the accompanying drawings and embodiments. It should be understood that the specific embodiments described here serve only to explain the present application and do not limit it.

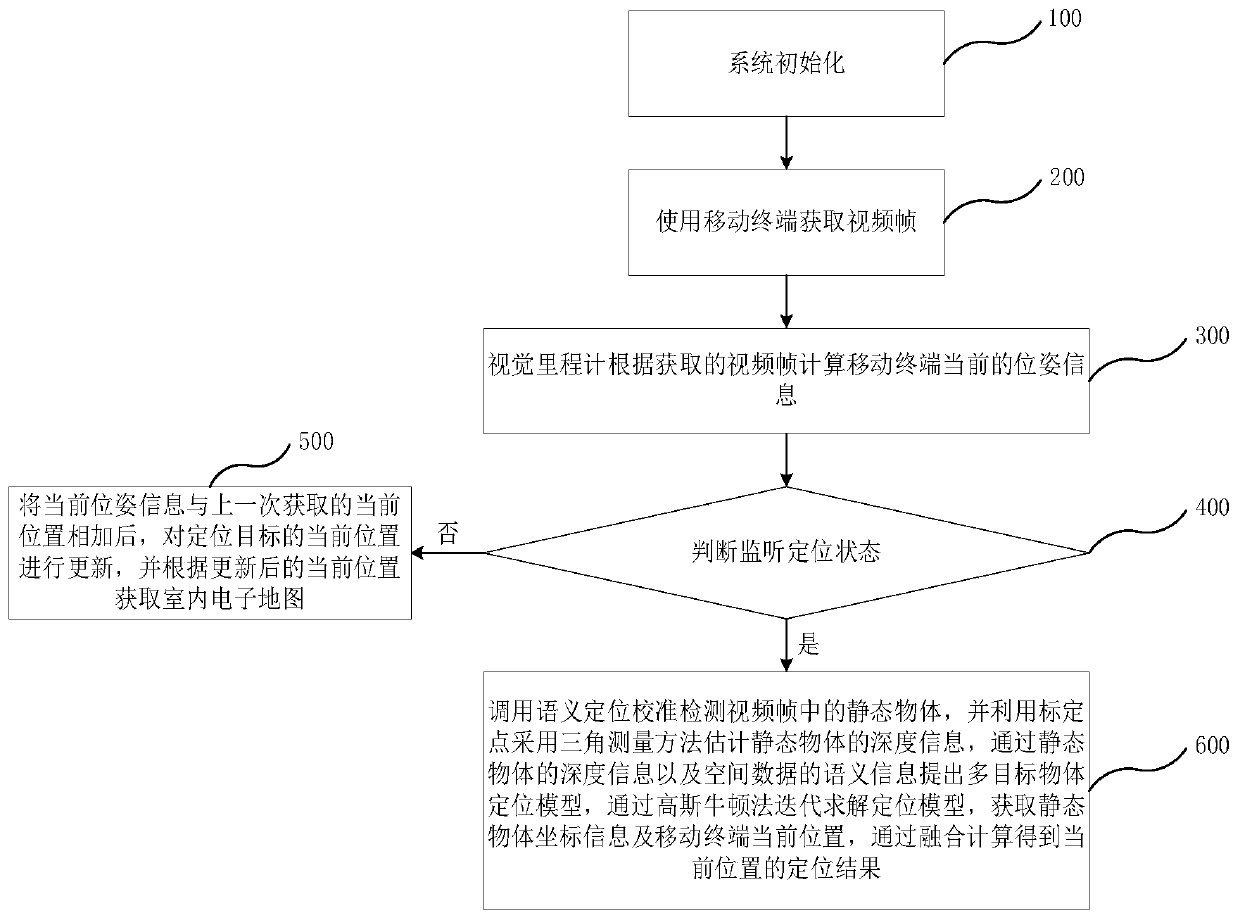

[0065] Figure 1 is a flow chart of the mobile terminal visual fusion positioning method according to an embodiment of the present application. The method includes the following steps:

[0066] Step 100: system initialization;

[0067] In step 100, system initialization includes the following steps:

[0068] Step 110: initialization of visual odometry;

[0069] In step 110, the initialization of the visual odometry includes: the memory allocation of the pose manager, the initial value assignmen...
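As an illustration only, the two initialization items named in step 110 (memory allocation of the pose manager and initial value assignment) might be sketched as follows. The source does not disclose the data layout, so every name here (`Pose`, `PoseManager`, `initialize_visual_odometry`, the `capacity` parameter) and the choice of a fixed-size ring buffer are assumptions made for this sketch, not the implementation of the present application.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Pose:
    """Hypothetical pose record: timestamp, translation, unit quaternion (w, x, y, z)."""
    timestamp: float = 0.0
    translation: Tuple[float, float, float] = (0.0, 0.0, 0.0)
    rotation: Tuple[float, float, float, float] = (1.0, 0.0, 0.0, 0.0)


class PoseManager:
    """Hypothetical fixed-capacity pose store (ring buffer); an assumption of this sketch."""

    def __init__(self, capacity: int = 256) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        # "Memory allocation": reserve every slot once, up front.
        self._slots = [Pose() for _ in range(capacity)]
        self._next = 0   # index of the slot the next push will write
        self._size = 0   # number of valid poses currently stored

    @property
    def capacity(self) -> int:
        return len(self._slots)

    def __len__(self) -> int:
        return self._size

    def push(self, pose: Pose) -> None:
        # When the buffer is full, the oldest pose is overwritten.
        self._slots[self._next] = pose
        self._next = (self._next + 1) % len(self._slots)
        self._size = min(self._size + 1, len(self._slots))

    def latest(self) -> Optional[Pose]:
        if self._size == 0:
            return None
        return self._slots[(self._next - 1) % len(self._slots)]


def initialize_visual_odometry(capacity: int = 256) -> PoseManager:
    """Allocate the pose manager, then assign the initial pose (origin, identity rotation)."""
    manager = PoseManager(capacity)
    manager.push(Pose())  # "initial value assignment" as assumed here
    return manager
```

Allocating all slots at initialization keeps per-frame work free of memory allocation, which is one common reason to separate this step out on a mobile terminal; whether the present application does so for that reason is not stated in the source.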
