A robot positioning method combining camera, IMU and lidar

The technology relates to robot positioning and lidar (laser radar) and is applied in fields such as instruments, electromagnetic wave re-radiation and image analysis. It can solve problems such as low accuracy, poor stability and point cloud matching, and it eliminates cumulative errors.

Detailed Description of the Embodiments

[0031] The present invention is further described below with reference to the accompanying drawings:

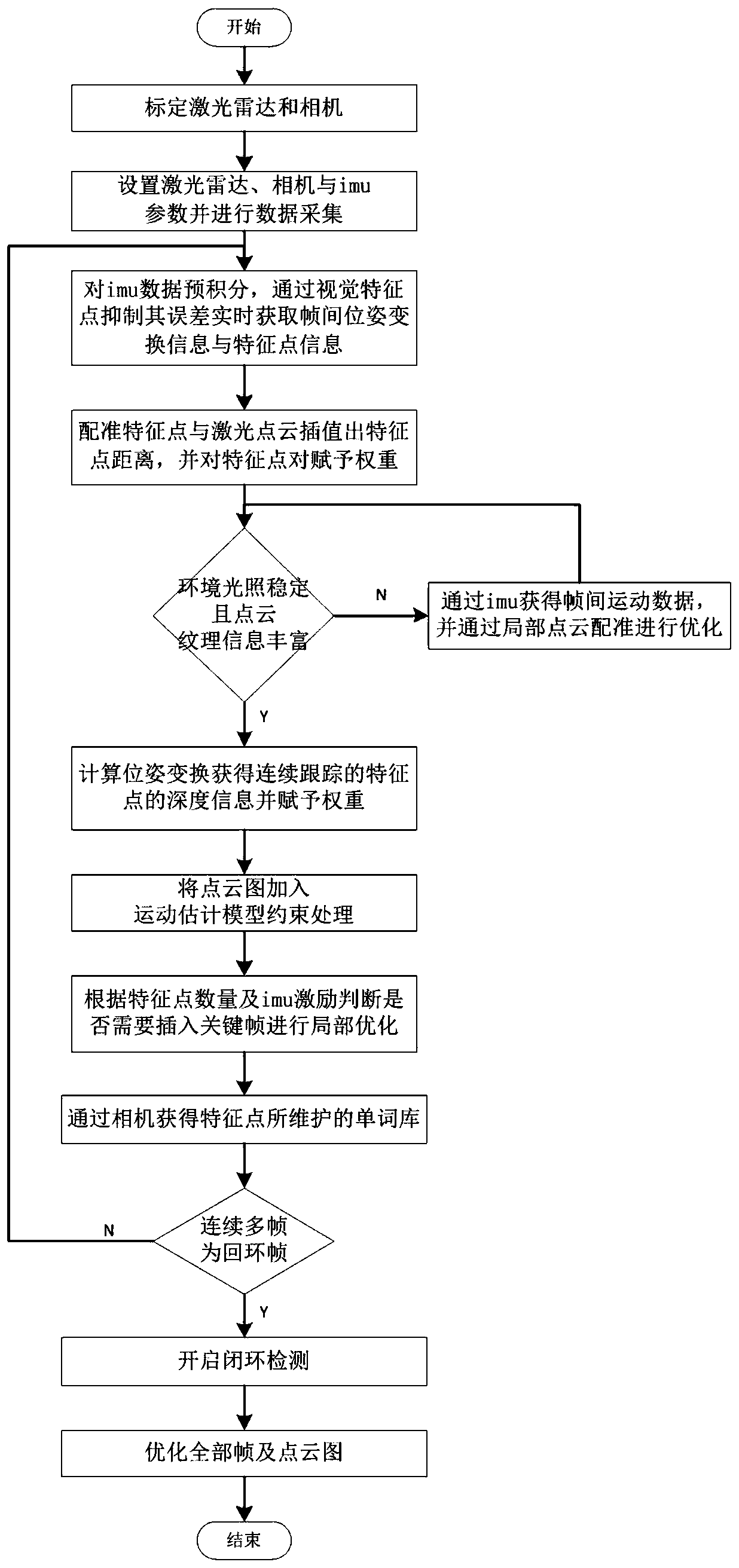

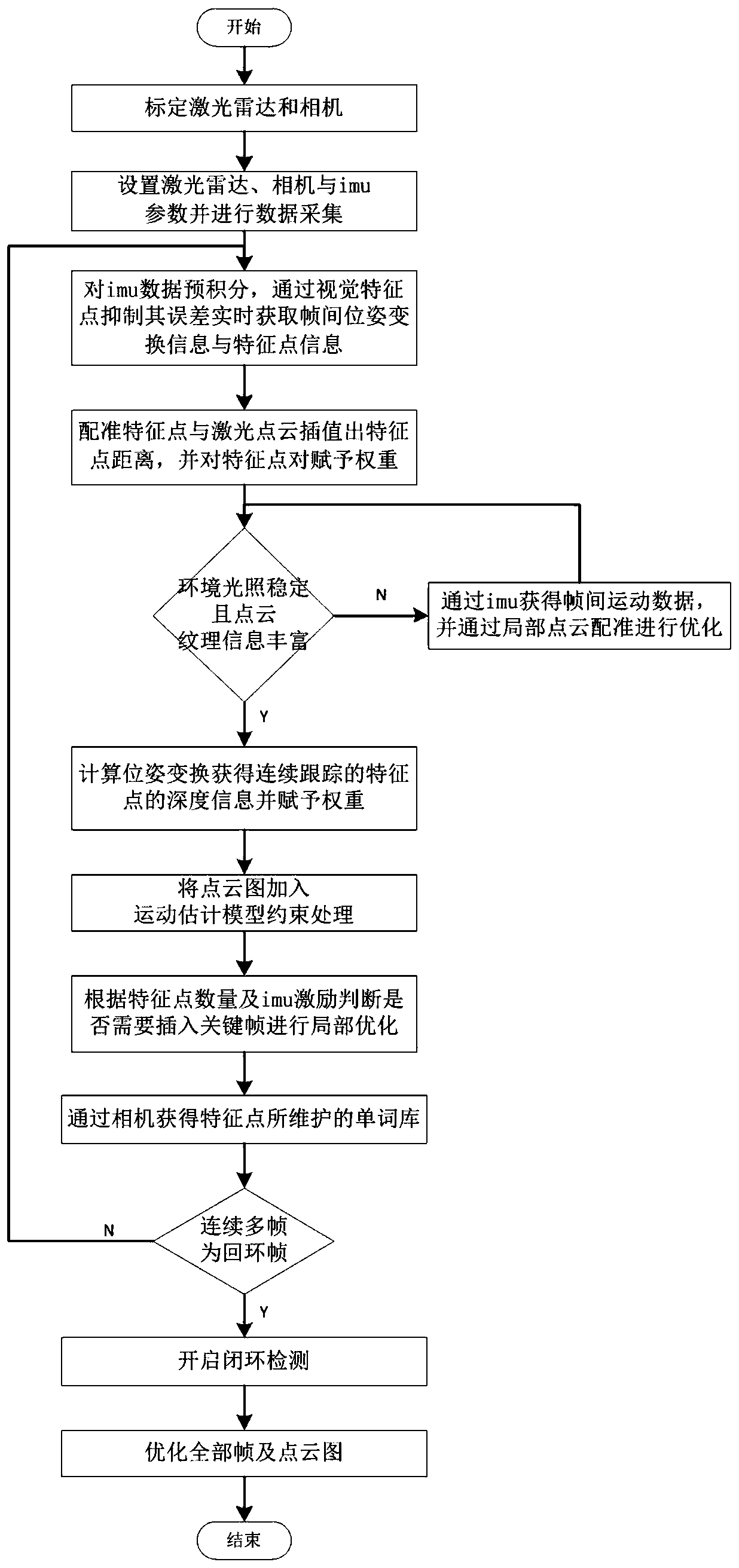

[0032] As shown in Figure 1, the present invention provides a robot positioning method combining a camera, an IMU and a lidar in order to solve the above problems. The method includes the following steps:

[0033] S1: Calibrate the lidar and the camera so that laser points and image pixels are aligned with pixel-level accuracy;

[0034] S2: Set the lidar scanning frequency and scanning angle, the camera frame rate and the IMU frequency, then collect data;

[0035] S3: Pre-integrate the IMU data, suppress its error using visual feature points, and obtain the inter-frame pose transformation and the feature point information in real time;

[0036] S4: Register the feature points with the laser point cloud, select the feature points whose rays fall in regions of low laser point cloud curvature, interpolate the distance of each selected feature point, and assign a primary weight to f...
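
The IMU pre-integration named in S3 can be illustrated with the following minimal sketch. It assumes the common discrete formulation in which bias-corrected gyroscope and accelerometer samples between two camera frames are accumulated into a relative rotation, velocity change and position change. The function names, the fixed sample period `dt` and the constant bias parameters are illustrative assumptions, not taken from the patent text. The error suppression by visual feature points is not shown, and gravity is left out because it is normally handled when the pre-integrated terms are combined with the pose estimate.

```python
import math


def mat_mul(A, B):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def mat_vec(A, v):
    """Product of a 3x3 matrix and a length-3 vector."""
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]


def exp_so3(phi):
    """Rodrigues' formula: rotation vector (rad) -> rotation matrix."""
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    theta = math.sqrt(sum(c * c for c in phi))
    if theta < 1e-12:
        return identity
    kx, ky, kz = (c / theta for c in phi)
    K = [[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]]
    K2 = mat_mul(K, K)
    s, c = math.sin(theta), 1.0 - math.cos(theta)
    return [[identity[i][j] + s * K[i][j] + c * K2[i][j] for j in range(3)]
            for i in range(3)]


def preintegrate(samples, dt, gyro_bias=(0.0, 0.0, 0.0),
                 accel_bias=(0.0, 0.0, 0.0)):
    """Accumulate (gyro, accel) samples into (delta_R, delta_v, delta_p).

    All three results are expressed in the IMU frame at the first sample.
    Biases are assumed constant over the interval (hypothetical parameters).
    """
    dR = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    dv = [0.0, 0.0, 0.0]
    dp = [0.0, 0.0, 0.0]
    for gyro, accel in samples:
        w = [gyro[i] - gyro_bias[i] for i in range(3)]
        # Rotate the bias-corrected acceleration into the first-sample frame.
        a = mat_vec(dR, [accel[i] - accel_bias[i] for i in range(3)])
        # Position uses the velocity from before this sample's update.
        dp = [dp[i] + dv[i] * dt + 0.5 * a[i] * dt * dt for i in range(3)]
        dv = [dv[i] + a[i] * dt for i in range(3)]
        # Rotation is updated last so that `a` above used the old dR.
        dR = mat_mul(dR, exp_so3([w[i] * dt for i in range(3)]))
    return dR, dv, dp
```

Calling `preintegrate(samples, dt)` with the IMU samples recorded between two consecutive camera frames gives an inter-frame motion estimate that a visual-inertial optimizer could then refine.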
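
The registration in S1 and the distance interpolation in S4 can be sketched as follows. This is a minimal illustration under stated assumptions: a pinhole camera model with intrinsics `fx`, `fy`, `cx`, `cy`, lidar-to-camera extrinsics `R` and `t` obtained from the calibration in S1, and inverse-distance weighting over nearby projected laser points. The depth-spread check is only a crude stand-in for the low-curvature selection described in S4, and the names `project_point`, `interpolate_depth`, `radius` and `max_spread` are hypothetical.

```python
import math


def project_point(p_lidar, R, t, fx, fy, cx, cy):
    """Map a lidar point into the camera frame and project it to a pixel.

    R (3x3 nested lists) and t (length 3) are the assumed lidar-to-camera
    extrinsics. Returns (u, v, depth), or None if the point lies behind
    the camera.
    """
    x, y, z = (sum(R[r][c] * p_lidar[c] for c in range(3)) + t[r]
               for r in range(3))
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy, z)


def interpolate_depth(feature_uv, projected, radius=3.0, max_spread=0.2):
    """Inverse-distance-weighted depth for one visual feature pixel.

    Returns None when fewer than three projected laser points lie within
    `radius` pixels, or when their depths differ by more than `max_spread`
    (an assumed proxy for rejecting high-curvature regions).
    """
    near = []
    for u, v, depth in projected:
        d = math.hypot(u - feature_uv[0], v - feature_uv[1])
        if d <= radius:
            near.append((d, depth))
    if len(near) < 3:
        return None
    depths = [depth for _, depth in near]
    if max(depths) - min(depths) > max_spread:
        return None
    # Closer laser points contribute more; the epsilon avoids division by zero.
    weights = [1.0 / (d + 1e-6) for d, _ in near]
    return sum(w * depth for w, depth in zip(weights, depths)) / sum(weights)
```

A feature point that receives a depth this way carries metric scale from the lidar, while a feature for which `interpolate_depth` returns `None` would have to rely on visual triangulation alone.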
