Intelligent recognition and shooting method and system for multi-person scenes, and storage medium

A technology for intelligent recognition and shooting systems, applied to television system components, color television components and televisions. It addresses problems such as insufficient image clarity, insufficient flexibility, and the inability to complete person shooting and person recognition independently, with the effect of improving recognition efficiency and flexibility.


Examples

Embodiment 1

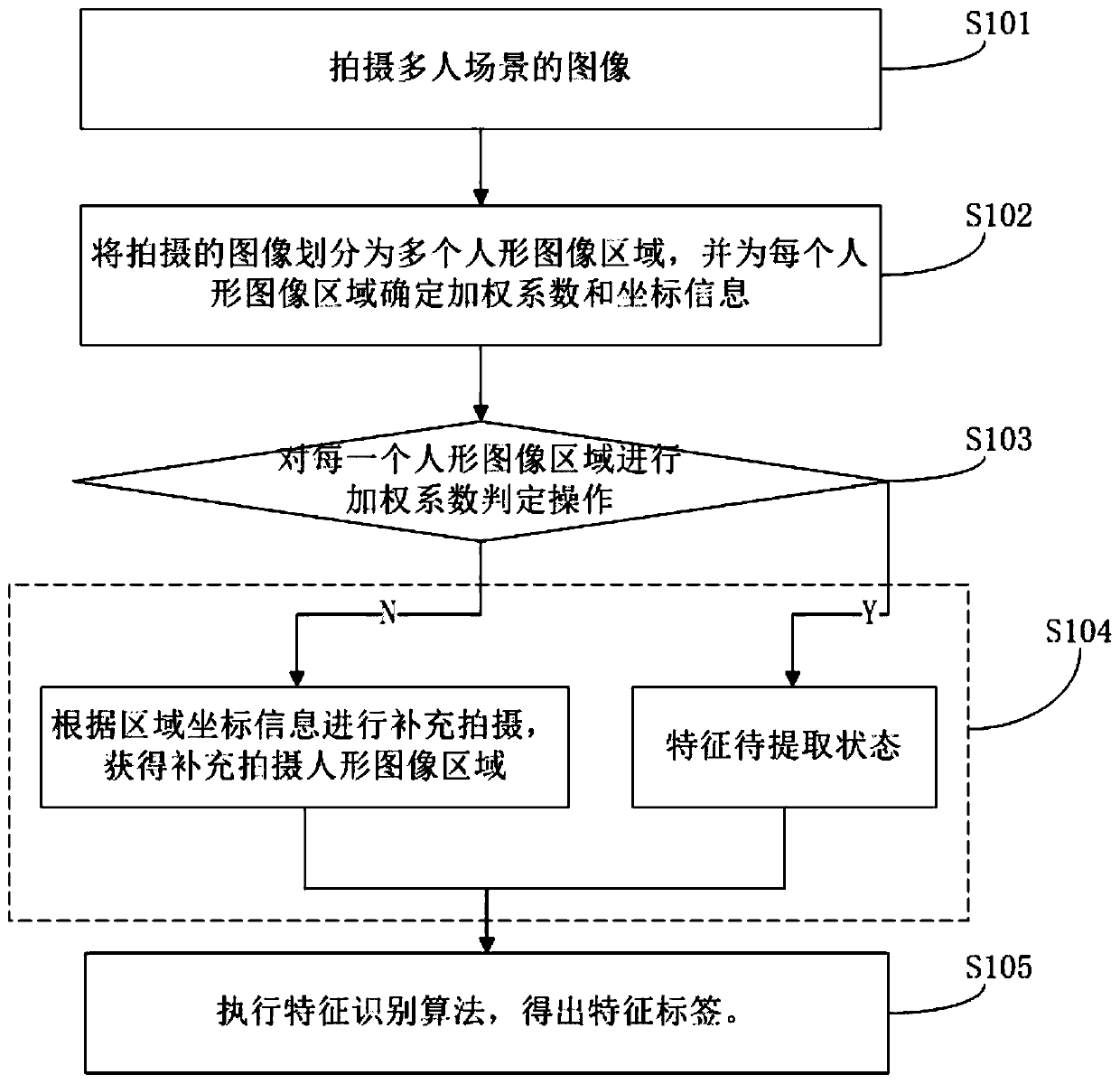

[0077] Referring to Figure 1, an embodiment of the present invention provides a method for intelligent recognition and shooting in a multi-person scene, comprising the following steps:

[0078] S101. Capture an image of the multi-person scene to obtain a main picture image, where the main picture image contains a plurality of human figure images.

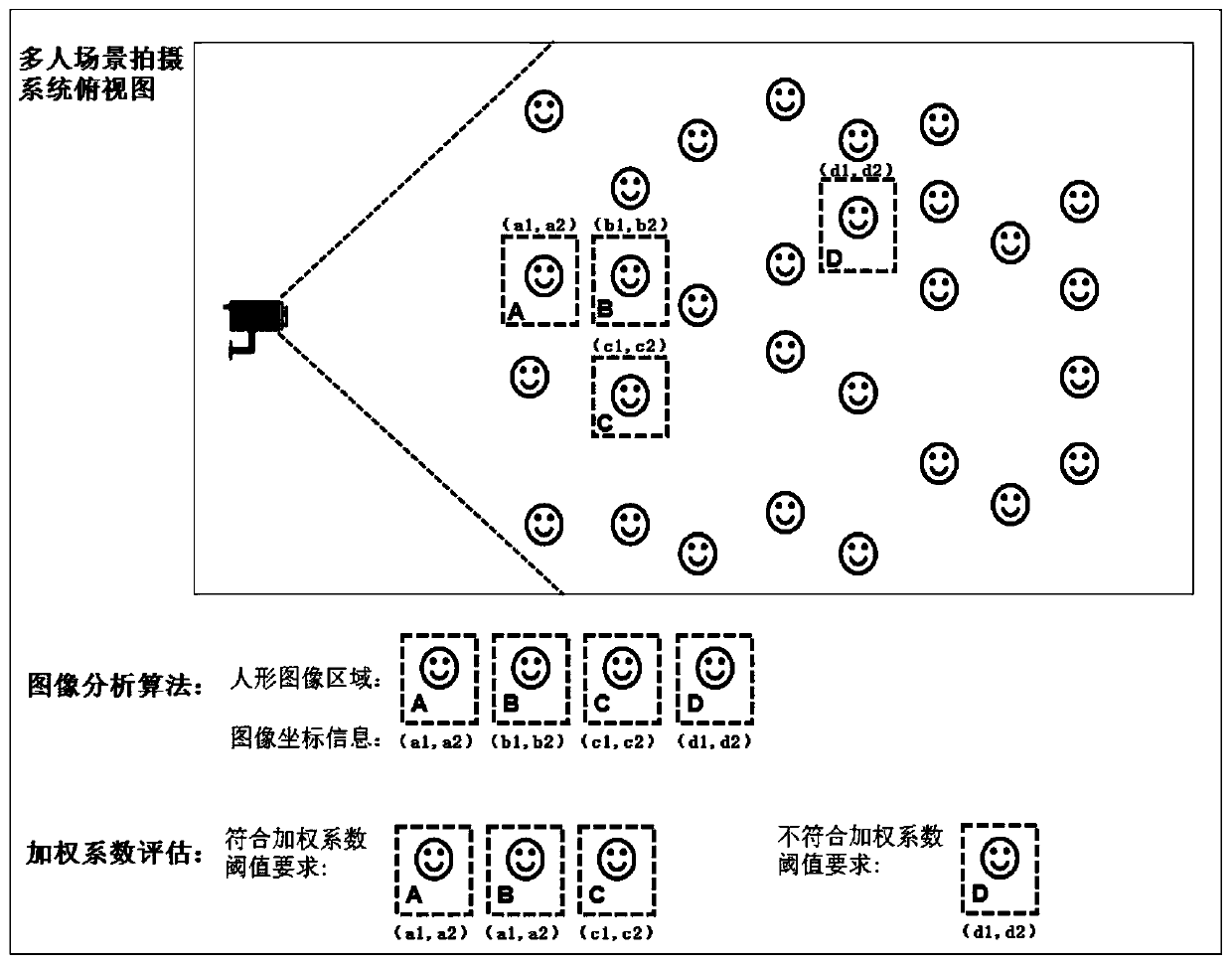

[0079] As shown in Figure 2, the image of the multi-person scene is captured by a camera.

[0080] It should be noted that, in this embodiment of the present invention, the human figure images in the main picture image may be captured by a camera device; camera devices include cameras, smart terminals with an image capture function, and the like.

[0081] S102. Run an image analysis algorithm on the captured image. The algorithm divides the image into multiple human figure image regions and determines a weighting coefficient and coordinate information for each region.
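The text does not say how the regions are found or how the weighting coefficient is computed, so the following is only a minimal sketch of the bookkeeping S102 implies, under stated assumptions. It assumes some person detector has already produced bounding boxes plus a normalized sharpness score per person; the names `HumanFigureRegion`, `analyze_main_picture` and `detections`, and the weight formula (share of frame area times sharpness), are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import List, Tuple

# (x, y, width, height) in pixels of the main picture image.
Box = Tuple[int, int, int, int]


@dataclass
class HumanFigureRegion:
    """One human figure image region from S102 (hypothetical structure)."""
    region_id: int
    box: Box       # coordinate information of the region
    weight: float  # weighting coefficient of the region


def analyze_main_picture(
    frame_size: Tuple[int, int],
    detections: List[Tuple[Box, float]],
) -> List[HumanFigureRegion]:
    """Turn person detections into regions with coordinates and weights.

    Each detection is a (box, sharpness) pair; sharpness is assumed to be
    normalized to [0, 1] by whatever quality metric is in use.
    The weight formula (share of frame area x sharpness) is a placeholder.
    """
    frame_w, frame_h = frame_size
    frame_area = float(frame_w * frame_h)
    regions: List[HumanFigureRegion] = []
    for region_id, ((x, y, w, h), sharpness) in enumerate(detections):
        # Clip the box to the frame so the stored coordinates are valid.
        x0, y0 = max(0, x), max(0, y)
        x1, y1 = min(frame_w, x + w), min(frame_h, y + h)
        box = (x0, y0, max(0, x1 - x0), max(0, y1 - y0))
        area_share = box[2] * box[3] / frame_area
        regions.append(HumanFigureRegion(region_id, box, area_share * sharpness))
    return regions
```

Keeping coordinates and weight together in one record means later steps can both filter regions by weight and map results back to positions on the main picture image.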

...

Embodiment 2

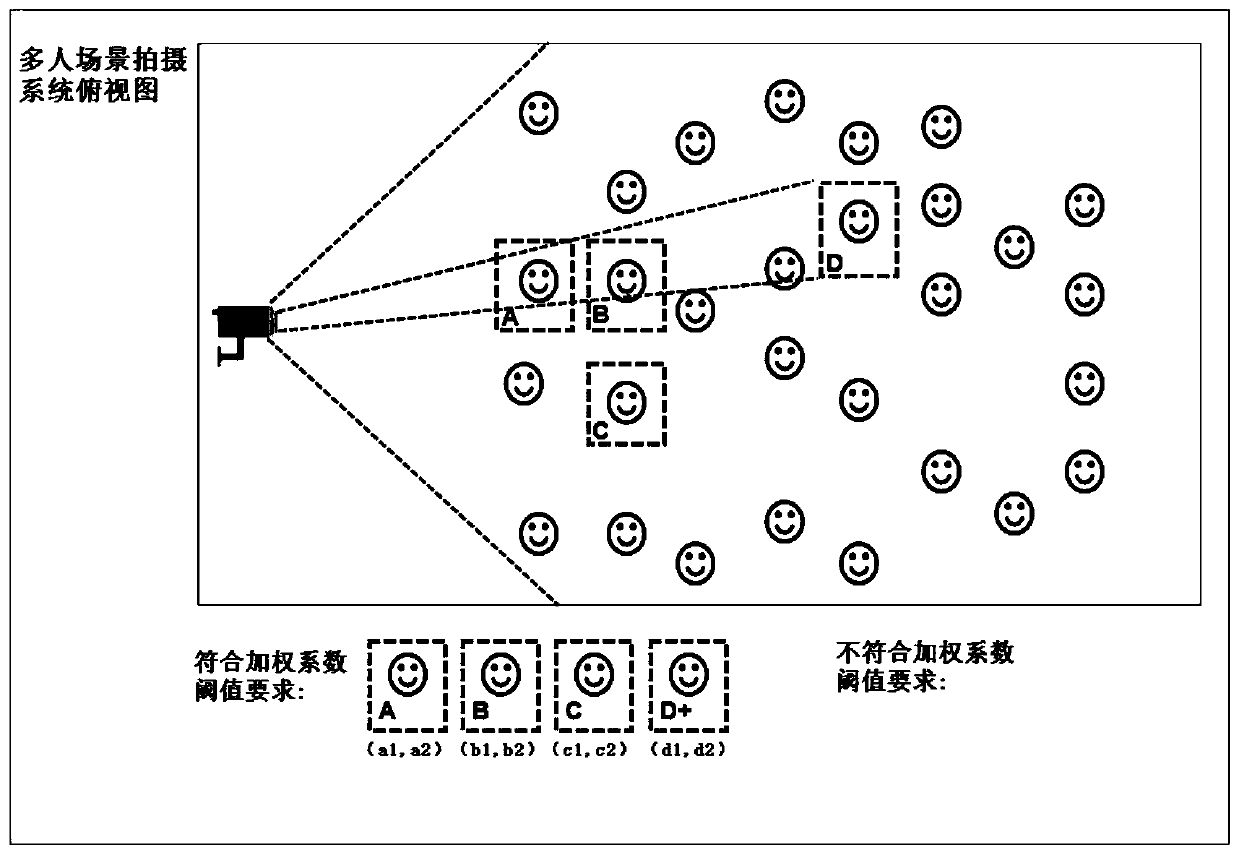

[0103] Embodiment 2 is substantially the same as Embodiment 1, except that step S205 is added between steps S104 and S105 of Embodiment 1. The resulting process is shown in Figure 5. Step S205 is as follows:

[0104] S205. For each human figure image area obtained by supplementary shooting that has not yet been processed by the image analysis algorithm, repeat steps S202, S203 and S204.

[0105] Although this embodiment performs supplementary shooting of the human figure image areas that do not meet the weighting coefficient threshold, there is no way to know in advance whether the supplementary picture will again contain too many people or lack clarity, either of which may prevent the later feature recognition algorithm from executing. To ensure that the retaken pictures meet the requirements and can be processed by the feature recognition algorithm, steps S202, S203 and S204 need to be repeated. It should be pointed out that step S205 is a...
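The point of S205 is that a supplementary picture is never passed to feature recognition unchecked. The sketch below shows that loop under assumptions: the contents of S202 to S204 are not given in this excerpt, so capture and analysis are hypothetical callbacks (`reshoot`, `analyze`), regions are reduced to `(id, weight)` pairs, and the retry limit `max_rounds` is an illustrative addition, not something the source specifies.

```python
from typing import Callable, List, Tuple

Region = Tuple[str, float]  # (region id, weighting coefficient)


def supplementary_shooting_loop(
    initial: List[Region],
    threshold: float,
    reshoot: Callable[[str], object],
    analyze: Callable[[object], List[Region]],
    max_rounds: int = 3,
) -> Tuple[List[str], List[str]]:
    """Re-analyze every supplementary picture until all regions pass.

    reshoot(region_id) captures a new picture for one failing region;
    analyze(picture) runs image analysis on it and returns its regions.
    Both callbacks are hypothetical. A picture in which analysis finds no
    region simply drops out. Returns (ids that passed, ids still failing
    after max_rounds reshoots).
    """
    accepted: List[str] = []
    pending = list(initial)
    rounds = 0
    while True:
        accepted.extend(rid for rid, w in pending if w >= threshold)
        failed = [rid for rid, w in pending if w < threshold]
        if not failed or rounds == max_rounds:
            return accepted, failed
        rounds += 1
        # Each supplementary picture goes back through analysis and the
        # threshold test on the next pass instead of being used directly.
        pending = [r for rid in failed for r in analyze(reshoot(rid))]
```

Bounding the number of rounds is a design choice for the sketch: without it, a region that never becomes clear enough would cause endless reshooting.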

Embodiment 3

[0113] Embodiment 3 is substantially the same as Embodiment 1, except that step S306 is added after step S105 of Embodiment 1. The resulting process is shown in Figure 7. Step S306 is as follows:

[0114] S306. Establish the correspondence between the coordinate information of each human figure image area and its feature label, and composite the feature label onto the main picture image at the corresponding position according to the coordinate information.

[0115] In this way, the feature label of each human figure can be seen directly on the screen. For example, in remote teaching, the teacher can see the names of all students attending the class, which makes roll call and teaching interaction easier. (Because the information of unrelated persons has not been entered in advance, their features are not in the feature database, so the comparison operation wi...
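S306 amounts to joining recognition results to region coordinates and deciding where each label goes. The sketch below assumes regions and labels share a region id, skips regions with no label (matching the case of people absent from the feature database), and uses an invented placement rule (just above the box, or inside its top edge near the frame border). `LabelOverlay`, `place_feature_labels` and `text_height` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) on the main picture


@dataclass
class LabelOverlay:
    region_id: int
    text: str
    x: int  # top-left corner of the label text, in main-picture pixels
    y: int


def place_feature_labels(
    regions: Dict[int, Box],
    labels: Dict[int, str],
    frame_size: Tuple[int, int],
    text_height: int = 20,
) -> List[LabelOverlay]:
    """Match feature labels to region coordinates (S306, sketched).

    regions maps a region id to its coordinate information; labels maps
    the same ids to the feature label from recognition. Regions without a
    label (e.g. people not in the feature database) are skipped.
    The placement rule is an assumption.
    """
    frame_w, frame_h = frame_size
    overlays: List[LabelOverlay] = []
    for region_id, (x, y, _w, _h) in sorted(regions.items()):
        text = labels.get(region_id)
        if text is None:
            continue
        # Put the label just above the box; if that would leave the frame,
        # put it just inside the box's top edge instead.
        label_y = y - text_height if y >= text_height else y
        label_y = min(label_y, max(0, frame_h - text_height))
        label_x = min(max(0, x), frame_w - 1)
        overlays.append(LabelOverlay(region_id, text, label_x, label_y))
    return overlays
```

The returned positions could then be handed to a text-drawing routine, for example OpenCV's `cv2.putText`, to composite the labels onto the main picture image.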

