Machine vision indoor positioning method based on improved convolutional neural network structure

Convolutional neural network and network structure technology, applied to machine vision indoor positioning. It addresses problems such as the difficulty of labeling training samples, achieves strong robustness and high accuracy, and broadens thinking on the problem.


Embodiment Construction

[0031] The present invention will be further described below in conjunction with specific examples.

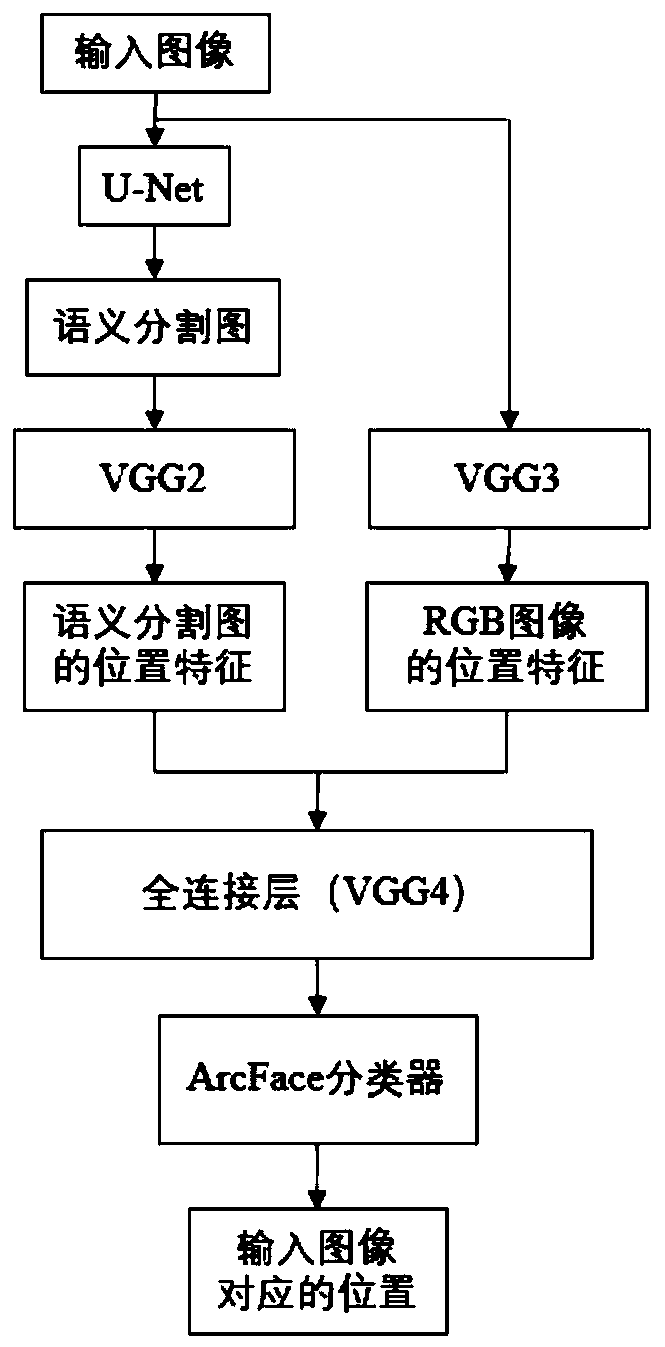

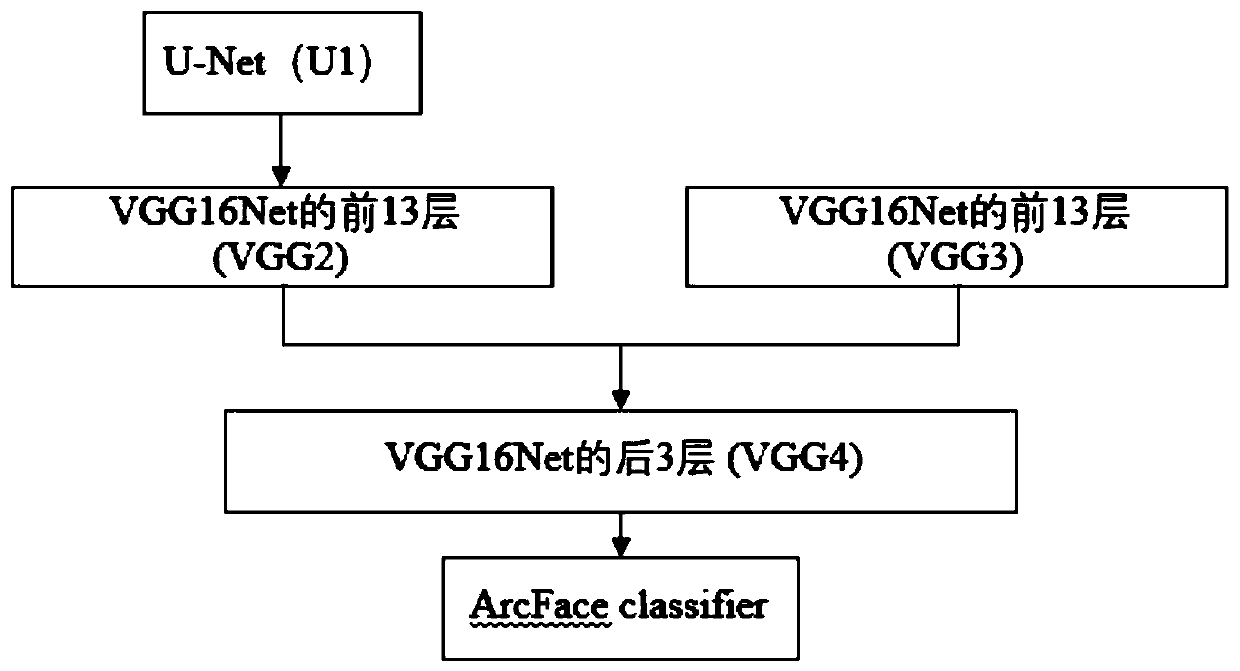

[0032] As shown in Figure 1, the machine vision indoor positioning method based on the improved convolutional neural network structure provided in this embodiment proposes an improved convolutional neural network structure and a training method for a neural network model with that structure. The trained convolutional neural network then classifies the input video images to obtain the indoor position of a mobile robot equipped with an RGB camera. The network extracts positional features from semantically segmented images and from RGB images, and uses these two types of positional features to determine the real-time indoor location of the mobile robot.
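The idea of treating positioning as classification over two kinds of positional features can be illustrated with a minimal sketch. It is not the patented network: the source does not say how the two feature types are combined, so the concatenation step, the single linear scoring layer, and the names `classify_location`, `rgb_features`, `seg_features`, `weights`, and `biases` are all assumptions made for illustration. The short hand-written feature vectors stand in for the outputs of the convolutional branches.

```python
import math


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_location(rgb_features, seg_features, weights, biases):
    """Score each indoor location class from two feature vectors.

    Assumption: the two feature vectors are fused by concatenation and
    scored by one linear layer. The source does not specify this.
    """
    fused = list(rgb_features) + list(seg_features)
    if any(len(row) != len(fused) for row in weights):
        raise ValueError("each weight row must match the fused feature length")
    scores = [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weights, biases)
    ]
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs


# Hypothetical toy inputs: two features per branch, three location classes.
rgb_features = [0.2, 0.9]   # stand-in for RGB-branch features
seg_features = [0.7, 0.1]   # stand-in for segmentation-branch features
weights = [
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [0.5, 0.5, 0.5, 0.5],
]
biases = [0.0, 0.0, 0.0]

location_id, probabilities = classify_location(
    rgb_features, seg_features, weights, biases
)
print(location_id, probabilities)
```

In a real system the weights would come from training rather than being written by hand, and each class index would map to a labeled indoor region.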

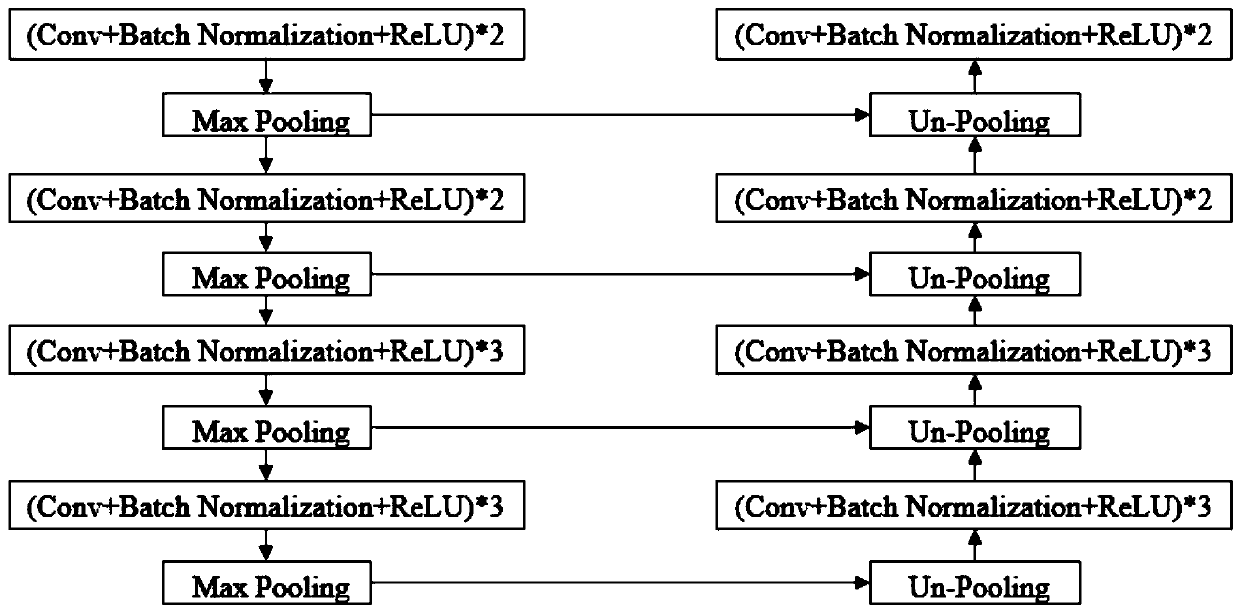

[0033] The improved convolutional neural network structure in this embodiment combines U-Net, the first 13 layers of two...
