Neural network acceleration circuit and method

A neural network acceleration circuit technology, applied in the field of neural networks, that addresses the problems of insufficient signal setup time and long processing time, achieving high computing parallelism and improved computing power.

Examples

Embodiment 1

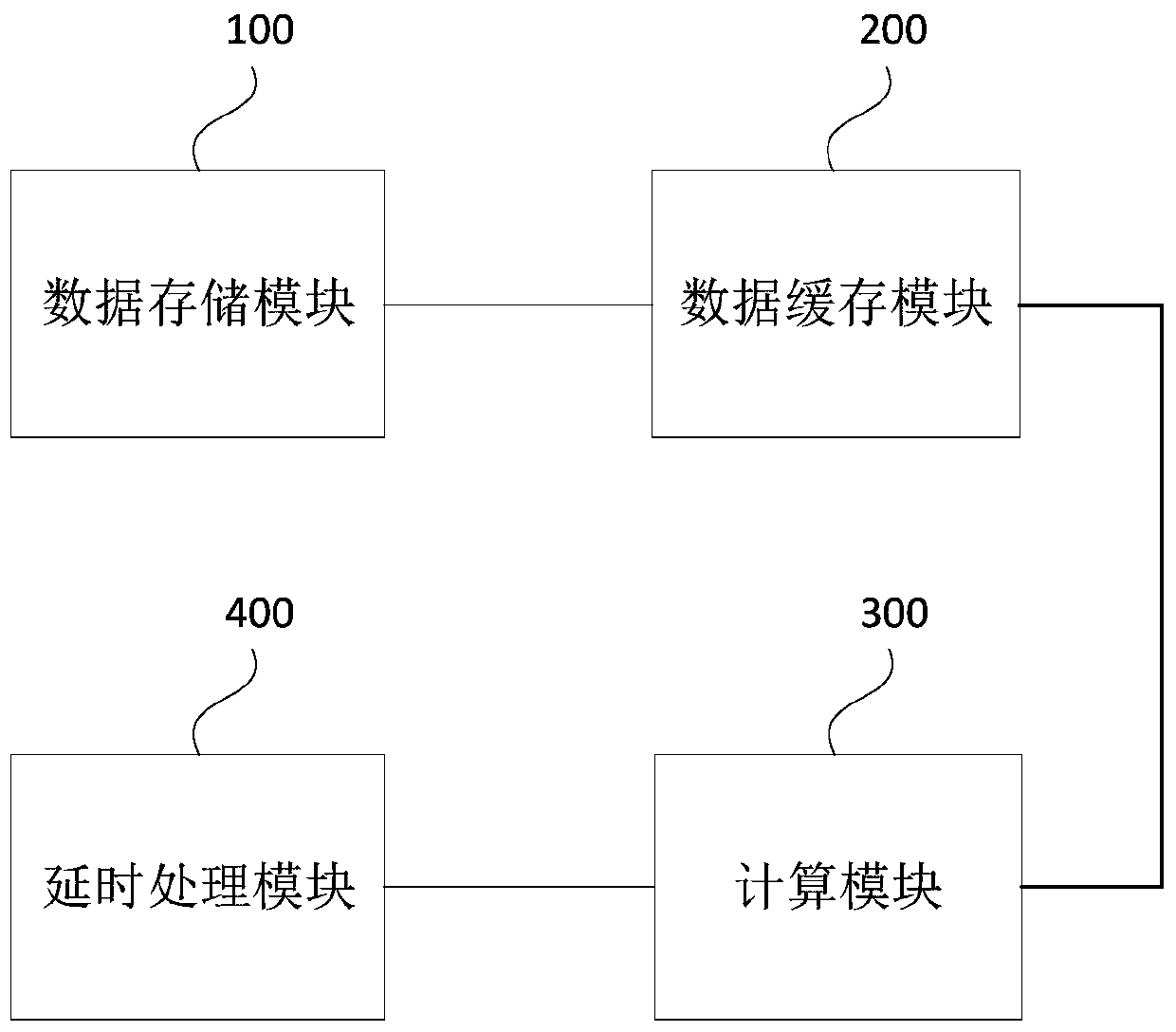

[0032] Figure 2 is a schematic structural diagram of the neural network acceleration circuit provided in Embodiment 1 of the present invention, which is applicable to neural network computation. As shown in Figure 2, the neural network acceleration circuit provided by Embodiment 1 of the present invention includes: a data storage module 100, a data cache module 200, a calculation module 300 and a delay processing module 400.
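The excerpt names a delay processing module 400 but is cut off before describing what it does. The summary line mentions insufficient signal setup time together with high computing parallelism. One common way to relieve setup-time pressure when a single data source must feed many parallel compute units is to pass the data down a chain of registers, so that each register drives only a small load and compute unit i receives each value i cycles after unit 0. The Python sketch below is a cycle-level model of that idea only; whether module 400 actually works this way is an assumption, and `delay_chain_trace` and `staggered_mac` are hypothetical names, not taken from the patent.

```python
from typing import List, Optional


def delay_chain_trace(inputs: List[int], n_units: int) -> List[List[Optional[int]]]:
    """Return, for every clock cycle, the value seen by each compute unit.

    Hypothetical model: stage i of a shift register feeds compute unit i,
    so unit i receives each input value i cycles after unit 0 does.
    """
    if n_units < 1:
        raise ValueError("n_units must be at least 1")
    stages: List[Optional[int]] = [None] * n_units  # the delay registers
    trace: List[List[Optional[int]]] = []
    # Run long enough for the last input to reach the last unit.
    for cycle in range(len(inputs) + n_units - 1):
        new_value = inputs[cycle] if cycle < len(inputs) else None
        # On each clock edge, stage 0 loads the new value and every other
        # stage takes its predecessor's previous value.
        stages = [new_value] + stages[:-1]
        trace.append(list(stages))
    return trace


def staggered_mac(inputs: List[int], weights: List[int]) -> List[int]:
    """Each unit i accumulates value * weights[i] for every value passing it."""
    acc = [0] * len(weights)
    for snapshot in delay_chain_trace(inputs, len(weights)):
        for i, value in enumerate(snapshot):
            if value is not None:
                acc[i] += value * weights[i]
    return acc
```

For example, `staggered_mac([1, 2, 3], [1, 10, 100])` returns `[6, 60, 600]`, the same result a direct broadcast to all three units would give. Staggering changes when each unit finishes (unit i finishes i cycles after unit 0), not what it computes.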

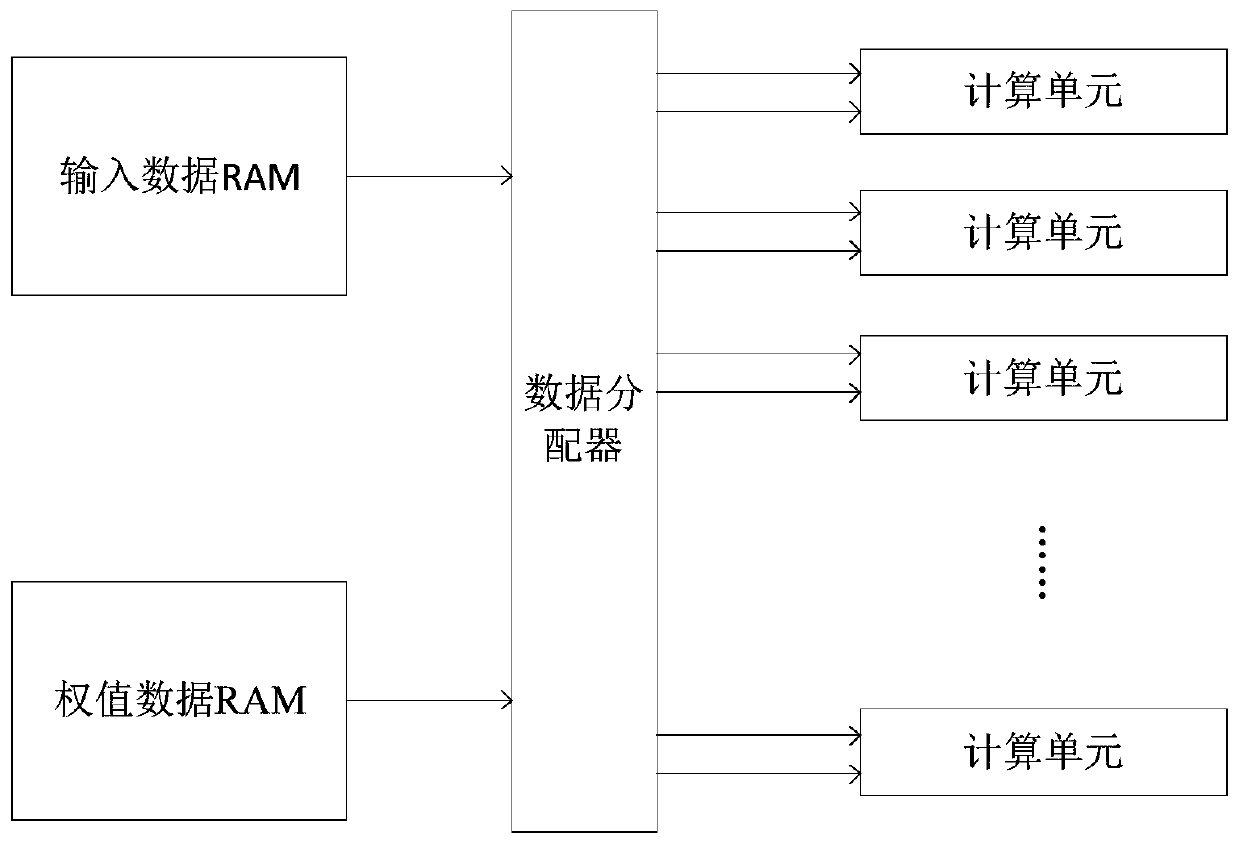

[0033] Specifically, the data storage module 100 is used to store the input data required for neural network computation. A neural network is a complex network system formed by the extensive interconnection of a large number of simple processing units (also called neurons); it reflects many basic characteristics of human brain function and is a highly complex nonlinear dynamic learning system. Neural network computation usually requires a large amount of input data, and in the neural network acceleration circuit, these input data are...
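To make "simple processing unit" concrete, the sketch below uses the standard textbook neuron model: a weighted sum of inputs plus a bias, passed through a nonlinearity. The sigmoid activation and the function names are illustrative choices, not taken from the patent. It also shows why the input data volume is large: every neuron in a layer reads the same input vector. That the multiply-accumulate in `neuron` is what calculation module 300 parallelizes is an assumption; the excerpt does not say what module 300 computes.

```python
import math
from typing import List, Sequence


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """One simple processing unit: weighted sum followed by a nonlinearity."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias  # multiply-accumulate
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid activation (illustrative choice)


def layer(inputs: Sequence[float], weight_rows: Sequence[Sequence[float]],
          biases: Sequence[float]) -> List[float]:
    """Many neurons share the same inputs; each has its own weights and bias."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]
```

For instance, `neuron([0.0, 0.0], [1.0, 1.0], 0.0)` returns `0.5`, because a zero weighted sum maps to the midpoint of the sigmoid.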

Embodiment 2

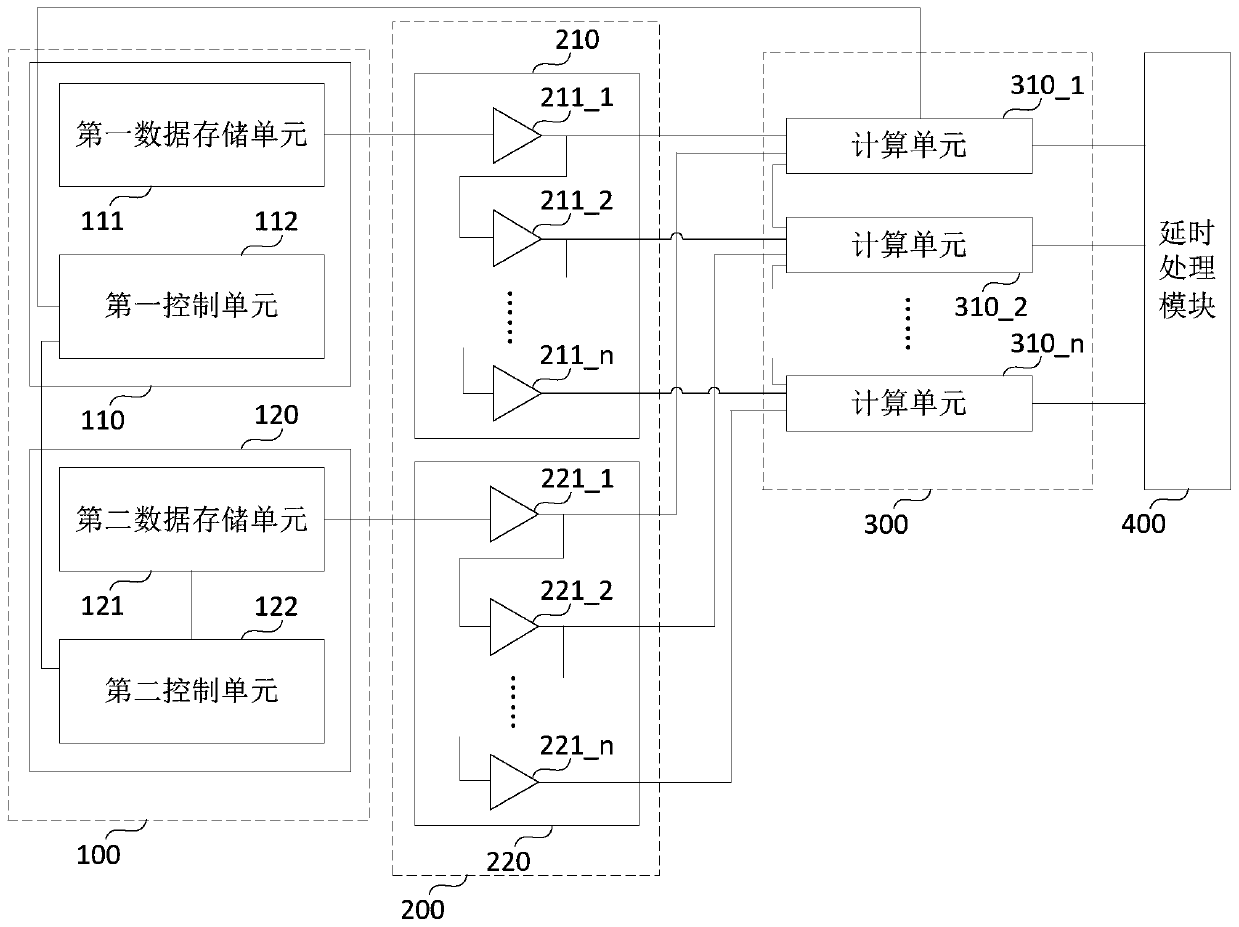

[0039] Figure 3 is a schematic structural diagram of the neural network acceleration circuit provided by Embodiment 2 of the present invention. This embodiment further refines the foregoing embodiment. As shown in Figure 3, the neural network acceleration circuit provided by Embodiment 2 of the present invention includes: a data storage module 100, a data cache module 200, a calculation module 300, and a delay processing module 400. The data storage module 100 includes a first data storage sub-module 110 and a second data storage sub-module 120; the first data storage sub-module 110 includes a first data storage unit 111 and a first control unit 112, and the second data storage sub-module 120 includes a second data storage unit 121 and a second control unit 122. The data cache module 200 includes a first register unit 210 and a second register unit 220; the first register unit 210 includes n first registers 211_1~211_n, and the second register ...
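The nested composition in paragraph [0039] can be captured as a small data model, which makes the hierarchy of reference numerals easier to check. This is a structural sketch only: the class and function names are hypothetical, and because the excerpt is cut off before describing the second register unit 220, its contents are deliberately left empty rather than guessed.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class StorageSubModule:
    ref: str           # sub-module reference numeral, e.g. "110"
    storage_unit: str  # data storage unit, e.g. "111"
    control_unit: str  # control unit, e.g. "112"


@dataclass
class RegisterUnit:
    ref: str                                            # e.g. "210"
    registers: List[str] = field(default_factory=list)  # e.g. "211_1"


@dataclass
class AccelerationCircuit:
    storage: List[StorageSubModule]  # data storage module 100
    cache: List[RegisterUnit]        # data cache module 200
    calculation_ref: str = "300"     # calculation module 300
    delay_ref: str = "400"           # delay processing module 400


def build_embodiment_2(n: int) -> AccelerationCircuit:
    """Assemble the Embodiment 2 hierarchy for n first registers."""
    if n < 1:
        raise ValueError("n must be at least 1")
    storage = [
        StorageSubModule("110", "111", "112"),
        StorageSubModule("120", "121", "122"),
    ]
    first = RegisterUnit("210", [f"211_{i}" for i in range(1, n + 1)])
    # The source text is truncated before describing register unit 220,
    # so its registers are left unspecified here.
    second = RegisterUnit("220")
    return AccelerationCircuit(storage, [first, second])
```

Calling `build_embodiment_2(4)` yields a first register unit holding `211_1` through `211_4`.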

Embodiment 3

[0051] Figure 4 is a schematic flowchart of the neural network acceleration method provided in Embodiment 3 of the present invention, which is applicable to neural network computation. The neural network acceleration method provided in this embodiment may be implemented by the neural network acceleration circuit provided in any embodiment of the present invention. For content not described in detail in Embodiment 3, reference may be made to the description in any system embodiment of the present invention.

[0052] As shown in Figure 4, the neural network acceleration method provided by Embodiment 3 of the present invention includes:

[0053] S410. Acquire input data required for neural network calculation.

[0054] Specifically, a neural network is a complex network system formed by a large number of simple processing units (also called neurons) that are extensively interconnected. It reflects many basic characteristics of human bra...

© 2025 PatSnap. All rights reserved.