Method and system for controlling emotion lamp based on expressions

An emotion and expression technology applied in the field of artificial intelligence. It addresses the problems that existing approaches rely on a single reference object and are not applicable to all user groups, and it achieves fewer training parameters, excellent classification performance, and a reduced amount of calculation.


Embodiment 1

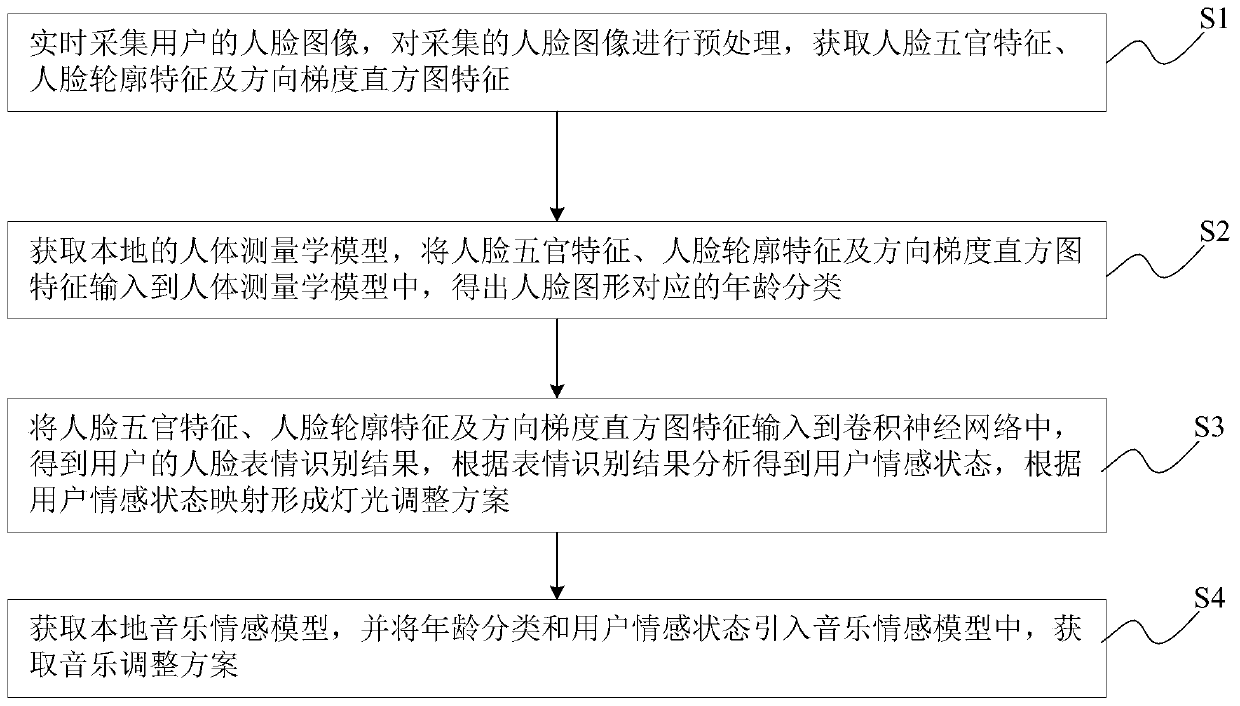

[0041] As shown in Figure 1, the method of the present invention for controlling an emotion lamp based on expressions includes the following steps:

[0042] S1. Collect the user's face image in real time and preprocess the collected face image to obtain facial features, facial contour features, and histogram of oriented gradients (HOG) features;
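
The directional gradient histogram named in S1 is more commonly called the histogram of oriented gradients (HOG). The following is a minimal sketch of the core idea only, not the patent's implementation: it treats a small grayscale image as a single cell and accumulates gradient magnitudes into orientation bins. The function name `hog_cell_histogram`, the 9-bin layout, and the unsigned 0 to 180 degree range are assumptions chosen for illustration; a complete HOG descriptor also splits the image into many cells and normalizes over blocks, which libraries such as scikit-image (`skimage.feature.hog`) provide.

```python
import math


def hog_cell_histogram(gray, bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations.

    `gray` is a 2-D list of intensities treated as one HOG cell.
    The bin count and 0-180 degree range are illustrative assumptions.
    """
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    height, width = len(gray), len(gray[0])
    # Border pixels are skipped because central differences need both neighbors.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gray[y][x + 1] - gray[y][x - 1]  # horizontal central difference
            gy = gray[y + 1][x] - gray[y - 1][x]  # vertical central difference
            magnitude = math.hypot(gx, gy)
            # Fold the signed angle into [0, 180) so opposite directions share a bin.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    return hist


# Hypothetical 4x4 cell whose intensity rises from left to right.
ramp = [[10 * x for x in range(4)] for _ in range(4)]
histogram = hog_cell_histogram(ramp)
```

For the left-to-right ramp, every interior gradient points along the horizontal axis, so all of the weight falls into the first orientation bin.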

[0043] Further preferably, the preprocessing includes grayscale processing, image cropping, and data augmentation.

[0044] Grayscale processing specifically converts the input image into a single-channel grayscale image, without using techniques such as intensity normalization.
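
The conversion to a single channel can be sketched as follows. This is an illustration under assumptions, not the patent's code: the helper name `to_grayscale` and the list-of-tuples image layout are hypothetical, and the weights are the standard ITU-R BT.601 luma coefficients. In line with the paragraph above, the values are only rounded and no intensity normalization is applied. With OpenCV, the same step is usually written as `cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)`.

```python
def to_grayscale(rgb_image):
    """Convert an RGB image to a single gray channel.

    `rgb_image` is a list of rows, each row a list of (r, g, b) tuples
    with values in 0-255 (a hypothetical layout chosen for this sketch).
    """
    gray = []
    for row in rgb_image:
        # BT.601 luma weights; the result is rounded but not normalized.
        gray.append(
            [int(round(0.299 * r + 0.587 * g + 0.114 * b)) for r, g, b in row]
        )
    return gray


# Hypothetical 1x3 image: pure red, pure green, pure blue.
image = [[(255, 0, 0), (0, 255, 0), (0, 0, 255)]]
gray = to_grayscale(image)
```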

[0045] Image cropping specifically uses OpenCV's Haar feature cascade classifier to detect and crop human faces. The Haar feature cascade classifier returns the position of each face in the picture. Once the face position information is obtained, the face images of all people in the picture can be extracted, and the extracted...
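
A minimal sketch of this detect-then-crop step is shown below, assuming the `opencv-python` package and its bundled frontal-face cascade file. The function names `detect_faces` and `crop_faces` are hypothetical, and the `scaleFactor` and `minNeighbors` values are illustrative defaults rather than values taken from the patent.

```python
def detect_faces(gray_image):
    """Return (x, y, w, h) boxes for faces found in a grayscale NumPy image."""
    import cv2  # imported lazily so crop_faces below works without OpenCV

    # Frontal-face Haar cascade shipped with the opencv-python package.
    cascade = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    )
    # scaleFactor and minNeighbors are assumptions, not values from the patent.
    return cascade.detectMultiScale(gray_image, scaleFactor=1.1, minNeighbors=5)


def crop_faces(gray_image, boxes):
    """Cut every detected face out of the image, one crop per box.

    Works on nested lists as well as NumPy arrays; for a NumPy array,
    gray_image[y:y + h, x:x + w] selects the same region.
    """
    crops = []
    for x, y, w, h in boxes:
        crops.append([list(row[x:x + w]) for row in gray_image[y:y + h]])
    return crops
```

A typical call would be `crop_faces(gray, detect_faces(gray))`, which yields one cropped region for each face the classifier reports.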

Embodiment 2

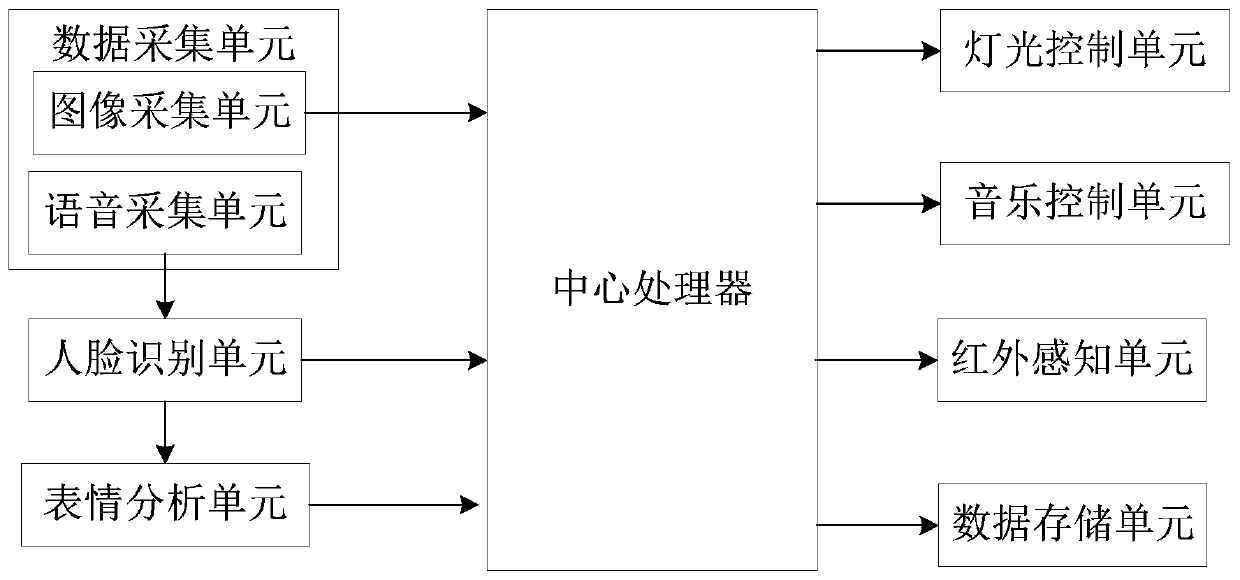

[0070] As shown in Figure 2, this embodiment builds on Embodiment 1 and provides a system for controlling an emotion lamp based on expressions. The system includes a central processor, a data collection unit, a face recognition unit, an expression analysis unit, a light control unit, an infrared sensing unit, a data storage unit, and a music control unit.

[0071] The data collection unit collects real-time dynamic pictures of the room and voice command information, transmits the real-time dynamic pictures to the face recognition unit, and sends the voice command information to the central processor. In this embodiment, the data collection unit includes an image collection unit and a voice collection unit. The image collection unit collects screen images through a high-definition camera, infrared lighting components or a high-fidelity microphone head, and transmits the screen images to the face recognition unit; the voice collection unit collects the user's voice instruction informa...
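
The routing described in this paragraph can be sketched as below. All class and method names are hypothetical and only model the two data paths the text states: pictures go to the face recognition unit, and voice commands go to the central processor. The other units of the system are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class FaceRecognitionUnit:
    """Stand-in that records the frames it receives."""
    frames: list = field(default_factory=list)

    def receive_frame(self, frame):
        self.frames.append(frame)


@dataclass
class CentralProcessor:
    """Stand-in that records the voice commands it receives."""
    voice_commands: list = field(default_factory=list)

    def receive_voice_command(self, command):
        self.voice_commands.append(command)


@dataclass
class DataCollectionUnit:
    """Routes collected data to the unit named in the description."""
    face_recognition_unit: FaceRecognitionUnit
    central_processor: CentralProcessor

    def on_frame(self, frame):
        # Real-time pictures are passed to the face recognition unit.
        self.face_recognition_unit.receive_frame(frame)

    def on_voice_command(self, command):
        # Voice command information is passed to the central processor.
        self.central_processor.receive_voice_command(command)


# Hypothetical wiring with placeholder payloads.
recognizer = FaceRecognitionUnit()
processor = CentralProcessor()
collector = DataCollectionUnit(recognizer, processor)
collector.on_frame("frame-0")
collector.on_voice_command("lights on")
```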
