Model training method based on knowledge distillation and image processing method and device

A model training and image processing technology in the field of image recognition. It addresses problems such as failing to obtain optimal performance, the limited capacity of student models, and the inability of a student model to learn the teacher model perfectly.


Embodiment Construction

[0027] The principle and spirit of the present disclosure will be described below with reference to several exemplary embodiments. It should be understood that these embodiments are given only to enable those skilled in the art to better understand and implement the present disclosure, rather than to limit the scope of the present disclosure in any way.

[0028] It should be noted that although expressions such as "first" and "second" are used herein to describe different modules, steps, data, etc. of the embodiments of the present disclosure, such expressions serve only to distinguish between different modules, steps, data, etc., and do not imply a particular order or degree of importance. In fact, expressions such as "first" and "second" can be used interchangeably.

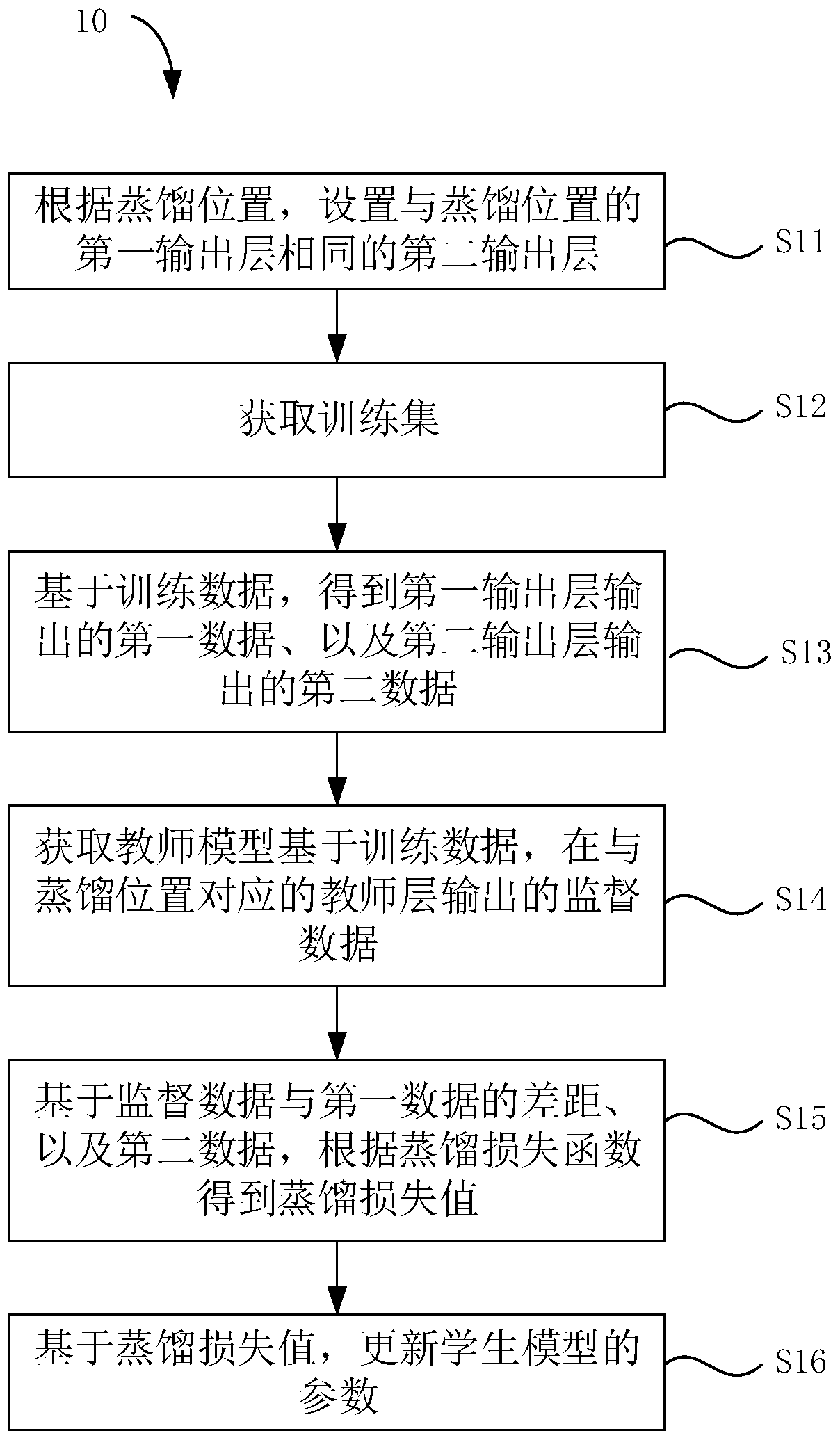

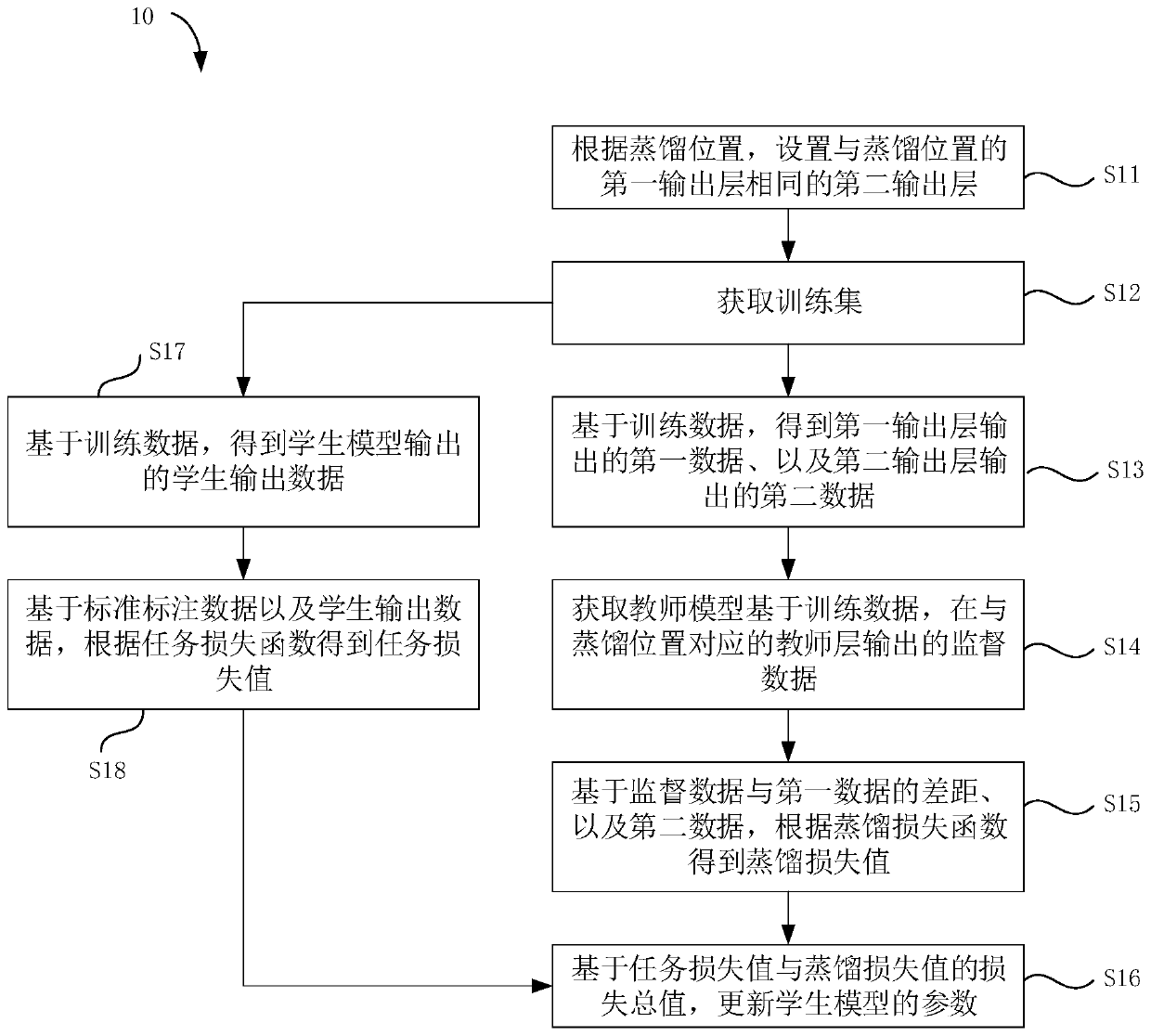

[0029] In order to make the process of training the student network through knowledge distillation more efficient, and transfer more reliable and useful knowledge from the comp...
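The excerpt breaks off before the disclosed training procedure is described. For orientation only, the sketch below shows the conventional temperature-scaled distillation loss, in which a student is trained both to match the teacher's softened output distribution and to predict the ground-truth label. It is a generic baseline, not the method claimed in this disclosure; the function names, the default temperature of 4.0 and the weighting `alpha = 0.5` are illustrative assumptions.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits: Sequence[float],
            teacher_logits: Sequence[float],
            label: int,
            temperature: float = 4.0,   # assumed value, for illustration only
            alpha: float = 0.5) -> float:  # assumed weighting, for illustration only
    """Generic distillation loss for one sample (not the patented method):

    alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(label, student)
    """
    # Softened distributions from teacher and student at temperature T.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL divergence from the teacher's soft targets to the student's predictions.
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Standard cross-entropy against the hard label at temperature 1.
    ce = -math.log(softmax(student_logits)[label])
    # T^2 keeps the soft-target gradient scale comparable across temperatures.
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


teacher = [3.0, 1.0, 0.2]
student = [2.0, 1.5, 0.1]
print(kd_loss(student, teacher, label=0))
```

When the student's logits equal the teacher's, the KL term vanishes and only the weighted cross-entropy remains; raising the temperature flattens both distributions so that the teacher's relative scores for non-target classes carry more weight.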
