Multi-modal fusion sign language recognition system and method based on graph convolution

The invention relates to recognition systems and multimodal technology, applied in character and pattern recognition, neural network learning methods, biological neural network models, and related fields. It addresses the complexity of video data and the poor robustness and low accuracy of fused features. Its stated effects are enhanced coherence and accuracy, strong representation ability, and improved recognition accuracy.


Embodiment Construction

[0037] The specific technical solutions of the present invention are described in detail below with reference to the accompanying drawings.

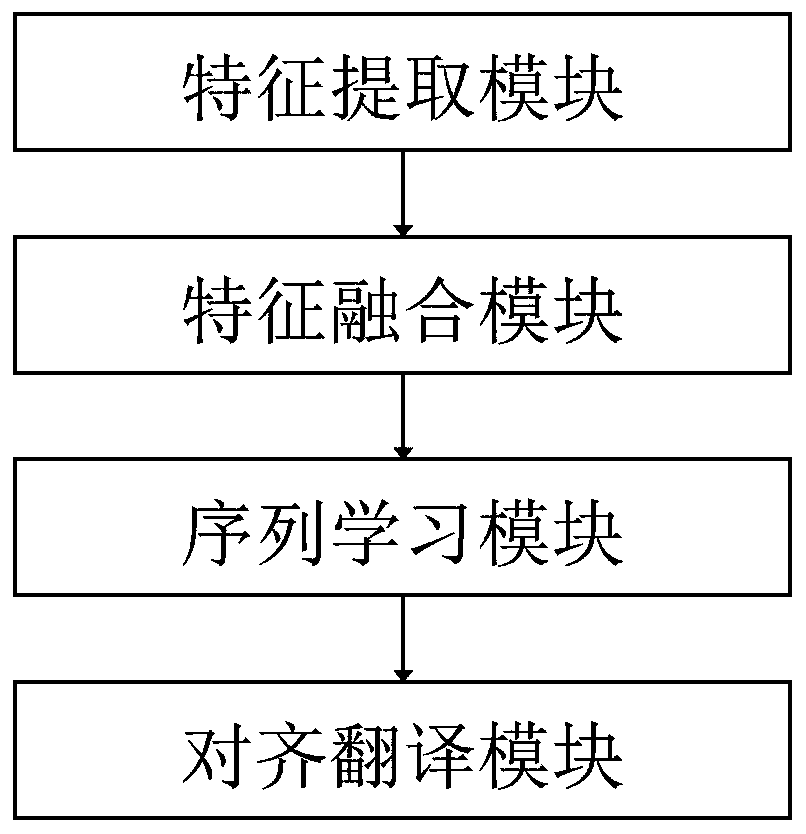

[0038] In this embodiment, as shown in Figure 1, the multimodal fusion sign language recognition system based on graph convolution includes a feature extraction module, a feature fusion module, a sequence learning module, and an alignment translation module.
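
The four modules in [0038] form a chain in which each module consumes the previous module's output. The sketch below shows only that data flow. The class name `SignLanguagePipeline`, the dictionary keys `color`, `depth` and `skeleton`, and every toy stage are hypothetical stand-ins, because the internals of each module are not given here. In particular, the toy "alignment translation" stage (argmax per frame, then collapse consecutive repeats) is an illustrative assumption and not the patented method.

```python
from dataclasses import dataclass
from itertools import groupby
from typing import Callable, Dict, List

import numpy as np


@dataclass
class SignLanguagePipeline:
    """Chains the four modules of [0038] in order (hypothetical interface)."""

    feature_extraction: Callable[[Dict[str, np.ndarray]], np.ndarray]
    feature_fusion: Callable[[np.ndarray], np.ndarray]
    sequence_learning: Callable[[np.ndarray], np.ndarray]
    alignment_translation: Callable[[np.ndarray], List[str]]

    def __call__(self, video: Dict[str, np.ndarray]) -> List[str]:
        f = self.feature_extraction(video)          # multimodal feature f
        fused = self.feature_fusion(f)              # fused representation
        scores = self.sequence_learning(fused)      # per-frame class scores
        return self.alignment_translation(scores)   # output word sequence


# Toy stages, for illustration only.
vocab = ["hello", "thank", "you"]
pipe = SignLanguagePipeline(
    feature_extraction=lambda v: np.concatenate(
        [v["color"], v["depth"], v["skeleton"]], axis=-1),
    feature_fusion=lambda f: f,                     # identity placeholder
    sequence_learning=lambda x: x[:, :len(vocab)],  # first 3 dims used as scores
    # argmax per frame, then merge consecutive repeated labels
    alignment_translation=lambda s: [vocab[k] for k, _ in groupby(s.argmax(axis=-1))],
)

T = 4  # number of frames in the toy clip
video = {
    "color": np.eye(3)[[0, 1, 2, 2]],  # (T, 3)
    "depth": np.zeros((T, 1)),         # (T, 1)
    "skeleton": np.zeros((T, 2)),      # (T, 2)
}
print(pipe(video))  # ['hello', 'thank', 'you']
```

Keeping each module as a swappable callable mirrors the modular decomposition in [0038]: any single stage can be replaced without touching the others.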

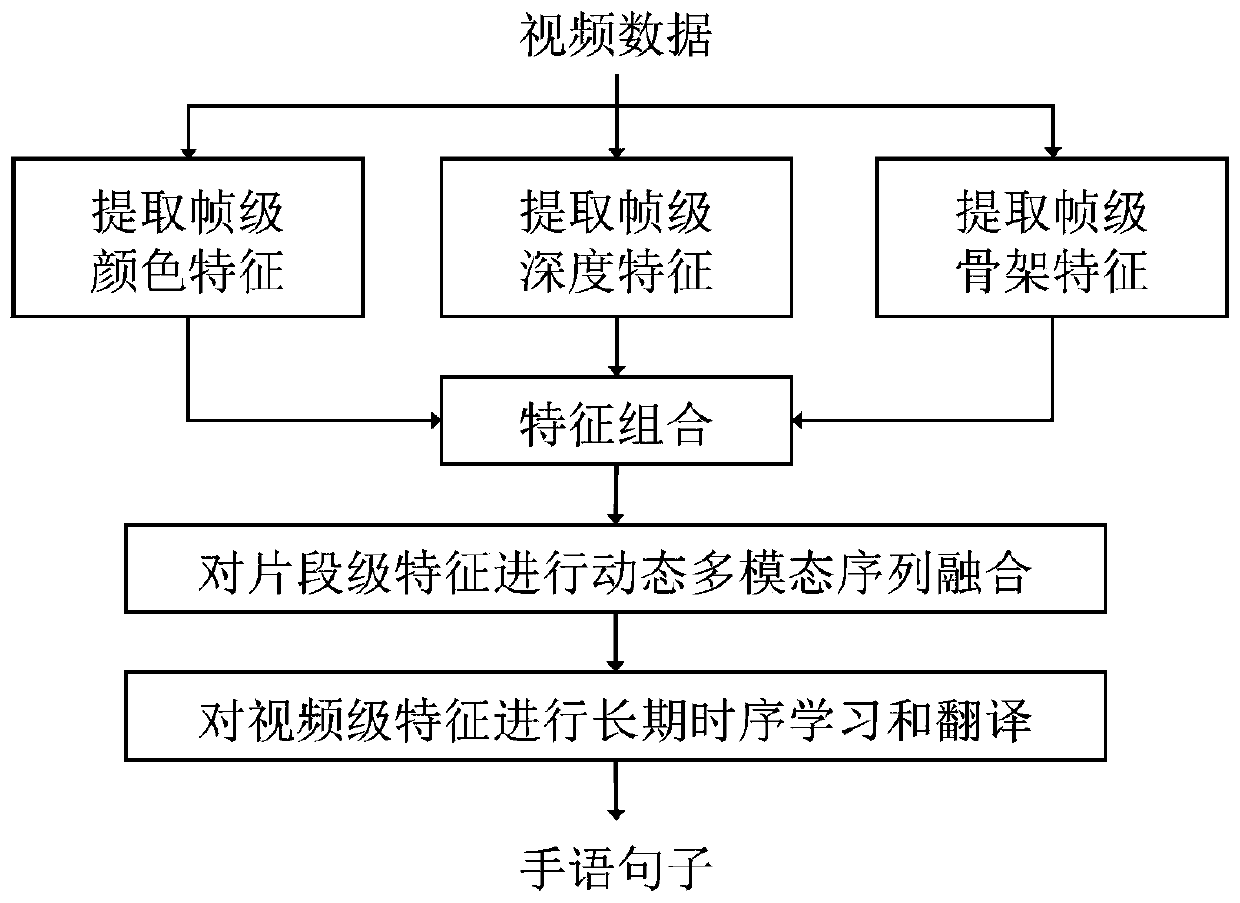

[0039] The feature extraction module extracts the color feature $u_c$, the depth feature $u_d$, and the skeleton feature $u_s$ of the video frames from the sign language video database, and aligns the dimensions of all extracted features to obtain the multimodal feature $f$;
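
[0039] states only that the three modality features are "dimensionally aligned" into $f$. One common way to do this is to project each modality to a shared width $d$ and stack the results on a modality axis. The sketch below assumes exactly that, with one linear projection per modality. The function name `align_dimensions`, all feature widths, the frame count and the random weights, which stand in for learned parameters, are hypothetical.

```python
import numpy as np


def align_dimensions(u_c, u_d, u_s, d, rng):
    """Map per-frame color, depth and skeleton features to a shared width d.

    Assumption: one linear projection per modality, stacked on a modality
    axis. Random weights stand in for learned parameters.
    Inputs have shapes (T, k_c), (T, k_d), (T, k_s); output f is (T, 3, d).
    """
    aligned = []
    for u in (u_c, u_d, u_s):
        _, k = u.shape
        W = rng.standard_normal((k, d)) / np.sqrt(k)  # hypothetical projection
        aligned.append(u @ W)                          # (T, d)
    return np.stack(aligned, axis=1)                   # (T, 3, d)


rng = np.random.default_rng(0)
T = 16                                   # frames per clip (hypothetical)
u_c = rng.standard_normal((T, 512))      # color feature width (hypothetical)
u_d = rng.standard_normal((T, 256))      # depth feature width (hypothetical)
u_s = rng.standard_normal((T, 25 * 3))   # 25 joints x (x, y, z), hypothetical
f = align_dimensions(u_c, u_d, u_s, d=128, rng=rng)
print(f.shape)  # (16, 3, 128)
```

Stacking rather than concatenating keeps the modalities separable, so a later fusion step can still weight them individually. Concatenation into a single $(T, 3d)$ vector would be an equally valid assumption.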

[0040] In this embodiment, the sign language video database contains sign language videos of 100 common sentences. Each sentence is signed by 50 people, giving a total of 5000 videos.
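
The total in [0040] follows from treating the database as a full sentence-by-signer grid: 100 × 50 = 5000. The sketch below assumes exactly one video per (sentence, signer) pair and uses the hypothetical field names `sentence_id` and `signer_id`. It enumerates that grid and checks the counts.

```python
from collections import Counter
from itertools import product

NUM_SENTENCES = 100  # common sentences in the database ([0040])
NUM_SIGNERS = 50     # people who sign each sentence ([0040])

# Hypothetical index: one video per (sentence, signer) pair.
videos = [
    {"sentence_id": s, "signer_id": p}
    for s, p in product(range(NUM_SENTENCES), range(NUM_SIGNERS))
]

# Number of videos recorded for each sentence.
per_sentence = Counter(v["sentence_id"] for v in videos)
print(len(videos))                 # 5000
print(set(per_sentence.values()))  # {50}
```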

[0041] In the specific implementation, the ResNet-18 netw...

