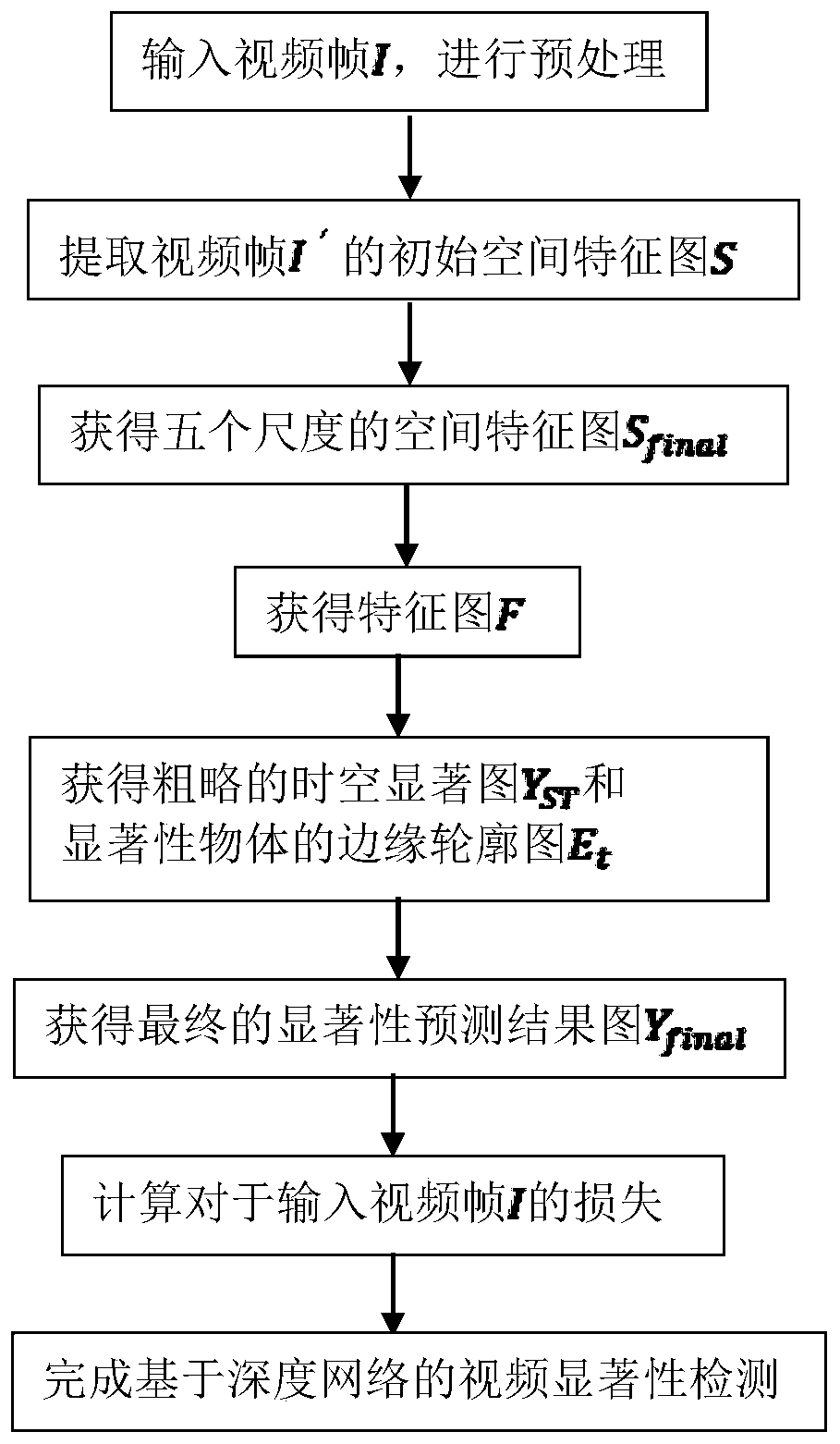

Video saliency detection method based on deep network

A deep-network-based detection method, applicable to biological neural network models, image data processing, instruments, and related fields, that addresses the problems of inaccurate and incomplete detection in algorithms.

Examples

Embodiment 1

[0077] In this embodiment, the salient objects are a cat and a box. The specific steps of the deep-network-based video saliency detection method described in this embodiment are as follows:

[0078] Step 1: input video frame I and preprocess it:

[0079] Input a video frame I whose salient objects are a cat and a box. Resize the video frame to 473×473 pixels (width × height), and subtract the mean of the corresponding channel from each pixel value in video frame I. The mean of the R channel of each video frame I is 104.00698793, the mean of the G channel is 116.66876762, and the mean of the B channel is 122.67891434. The video frame I therefore has shape 473×473×3 before it is input to the ResNet50 deep network. The preprocessed video frame is denoted I′, as shown in formula (1):

[0080] I′ = Resize(I − Mean(R, G, B)) (1),
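The preprocessing in formula (1) can be sketched in a few lines of NumPy. This is only a minimal illustration under stated assumptions, not the patent's implementation: the helper `resize_nearest` and the names `CHANNEL_MEANS_RGB`, `TARGET_SIZE`, and `preprocess` are hypothetical, and nearest-neighbour sampling is assumed because the text does not name an interpolation method. The means are applied in the R, G, B order given in paragraph [0079]; the same three values are commonly used with Caffe-trained models in B, G, R order, so the channel order of the input frames is worth checking against the actual implementation.

```python
import numpy as np

# Per-channel means as listed in paragraph [0079], taken here in R, G, B order
# (assumption: the input frame is stored with channels in that same order).
CHANNEL_MEANS_RGB = np.array(
    [104.00698793, 116.66876762, 122.67891434], dtype=np.float32
)
TARGET_SIZE = 473  # width and height of the network input


def resize_nearest(image: np.ndarray, size: int) -> np.ndarray:
    """Resize an H x W x C array to size x size by nearest-neighbour sampling.

    Hypothetical helper: the source does not specify the interpolation method.
    """
    height, width = image.shape[:2]
    rows = np.arange(size) * height // size  # source row for each output row
    cols = np.arange(size) * width // size   # source column for each output column
    return image[rows[:, None], cols[None, :]]


def preprocess(frame: np.ndarray) -> np.ndarray:
    """Apply formula (1): subtract the channel means, then resize to 473 x 473."""
    centered = frame.astype(np.float32) - CHANNEL_MEANS_RGB
    return resize_nearest(centered, TARGET_SIZE)


# Placeholder frame standing in for a decoded video frame I.
frame = np.full((360, 640, 3), 128, dtype=np.uint8)
processed = preprocess(frame)
print(processed.shape)  # (473, 473, 3)
```

Because the subtracted mean is constant per channel, subtracting before or after a nearest-neighbour resize gives the same result, so the order written in formula (1) and the order described in the text agree in this sketch.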

[0081] ...
