Video foreground and background separation method based on a cascaded convolutional neural network

The method applies convolutional neural networks to foreground-background separation in the field of computer vision. It addresses problems such as foreground leakage, failure to capture foreground motion information effectively, and inaccurate detection of moving foreground objects. Its stated effects are improved learning ability, ease of implementation, and a simple procedure.

Embodiment Construction

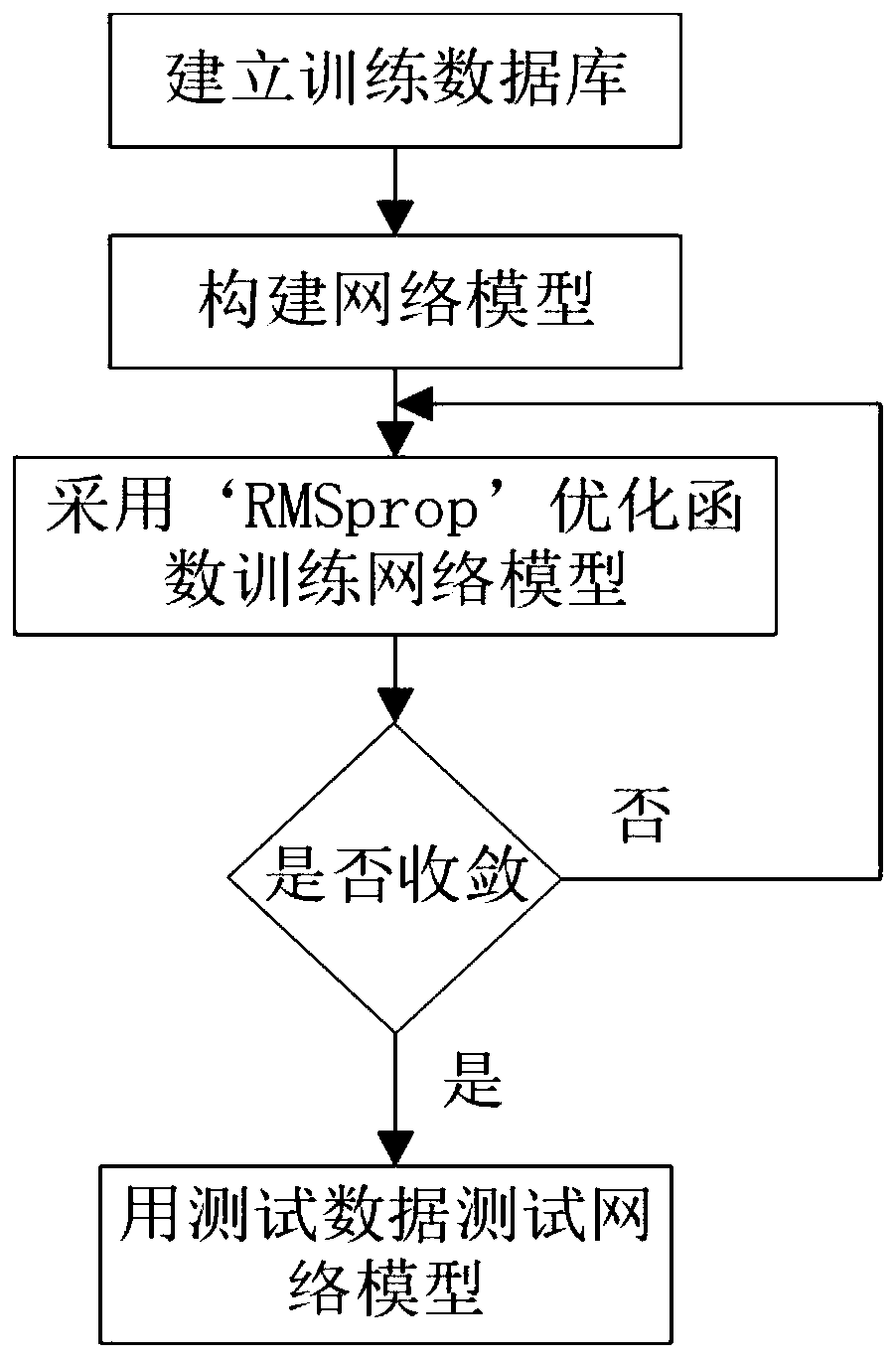

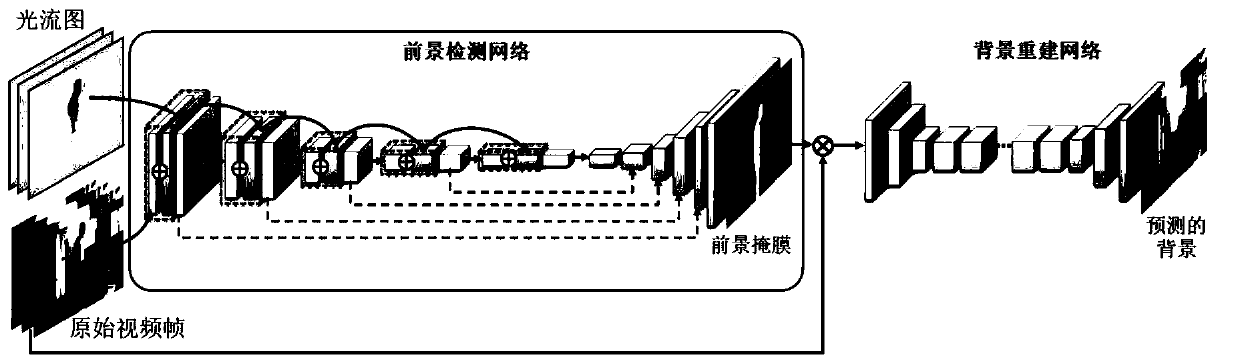

[0047] To overcome the deficiencies of the prior art, the present invention proposes a cascaded convolutional neural network that incorporates spatio-temporal cues. It consists of two encoder-decoder sub-networks: the foreground detection network (FD network) and the background reconstruction network (BR network). The FD network generates a binary foreground mask, and the BR network uses the output of the FD network together with the input video frame to reconstruct the background image. To introduce spatial cues, the present invention takes three consecutive video frames as input. To improve the applicability of the network, the optical flow maps corresponding to the original video frames are simultaneously input into the FD network as spatial cues. The specific method includes the following steps:

[0048] 1) Establish a training database.

[0049] 11) Use the ChangeDetection2014 (abnormal object detection) database, which is a public dataset that contains...
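The data flow of the cascade described in [0047] can be illustrated with a minimal NumPy sketch. It is not the patented networks: `fd_network_stub` and `br_network_stub` are hypothetical placeholders that stand in for the two encoder-decoder sub-networks, so that only the wiring (three frames plus optical flow into the FD stage, then mask plus frame into the BR stage) is shown. The number of optical flow maps (two, one per consecutive frame pair), their two-channel layout, the flow threshold, and the choice of the middle frame as the BR input are all assumptions not stated in the text.

```python
import numpy as np


def build_fd_input(frames, flows):
    """Stack three consecutive RGB frames and the optical-flow maps along channels.

    frames: three (H, W, 3) arrays.
    flows:  (H, W, 2) arrays; two maps are assumed here, one per frame pair.
    """
    if len(frames) != 3:
        raise ValueError("expected three consecutive frames")
    return np.concatenate(list(frames) + list(flows), axis=-1)


def fd_network_stub(fd_input, threshold=1.0):
    """Placeholder for the FD network (NOT a CNN).

    Thresholds the magnitude of the first flow map to produce a binary mask.
    """
    flow = fd_input[..., 9:11]  # channels after 3 frames x 3 colour channels
    magnitude = np.linalg.norm(flow, axis=-1)
    return (magnitude > threshold).astype(np.uint8)


def br_network_stub(frame, mask):
    """Placeholder for the BR network (NOT a CNN).

    Fills foreground pixels with the mean colour of the background pixels.
    """
    background = frame.astype(np.float32).copy()
    bg_pixels = mask == 0
    if bg_pixels.any():
        fill = background[bg_pixels].mean(axis=0)
    else:
        fill = np.zeros(3, dtype=np.float32)
    background[mask == 1] = fill
    return background


def separate(frames, flows):
    """Run the cascade: FD stage first, then BR stage on the FD output."""
    fd_input = build_fd_input(frames, flows)
    mask = fd_network_stub(fd_input)
    # Assumption: the middle frame is the one whose background is reconstructed.
    background = br_network_stub(frames[1], mask)
    return mask, background


# Tiny synthetic example: a uniform scene with one bright, moving pixel.
h, w = 4, 4
frames = [np.full((h, w, 3), 100, dtype=np.uint8) for _ in range(3)]
frames[1][1, 1] = 255
flows = [np.zeros((h, w, 2), dtype=np.float32) for _ in range(2)]
flows[0][1, 1] = (3.0, 4.0)  # flow magnitude 5 at the moving pixel

fd_input = build_fd_input(frames, flows)
mask, background = separate(frames, flows)
```

In a real implementation each stub would be replaced by a trained encoder-decoder network; the sketch only fixes the interface between the two stages, in which the BR stage never sees the raw optical flow and depends on the FD stage solely through the mask.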
